Leverage AI and centralized processing for Level 5 autonomous vehicles

Mentor, a Siemens business, has launched DRS360, which it claims is the first sensor platform capable of meeting the demands of an ‘SAE Level 5’ fully autonomous vehicle.

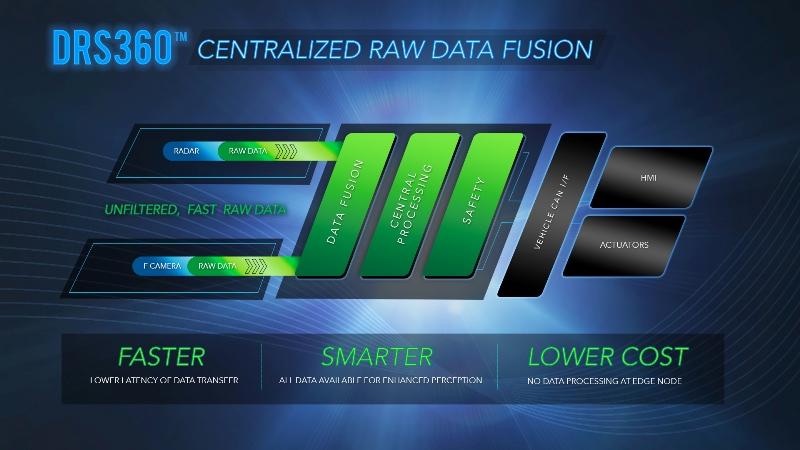

The main innovations in the DRS360 platform enable centralized processing of raw data from a vehicle’s various sensors, with the aim of delivering real-time responsiveness in safety-critical situations while reducing power consumption and latency.

Mentor Automotive is targeting systems that comply with ASIL-D, the most stringent classification in the ISO 26262 functional safety standard. ISO 26262 assigns an Automotive Safety Integrity Level (ASIL) to each potentially hazardous event, and ASIL-D applies to the highest-risk events (i.e., those where there is a reasonable likelihood of a threat to life), which carry the strictest safety requirements.
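
ISO 26262-3 derives an ASIL from three ratings of a hazardous event: severity (S0 to S3), probability of exposure (E0 to E4) and controllability (C0 to C3). As a minimal sketch, the hypothetical helper below encodes the standard’s determination table using a commonly cited shortcut: for classes S1-S3, E1-E4 and C1-C3, a sum S + E + C of 10 gives ASIL-D, 9 gives C, 8 gives B, 7 gives A, and anything lower gives QM (quality management only); any class rated 0 also yields QM. The function name and integer encoding are illustrative assumptions, not part of the standard or of DRS360.

```python
def determine_asil(severity: int, exposure: int, controllability: int) -> str:
    """Map ISO 26262 S/E/C class numbers to an ASIL (hypothetical helper).

    severity: 0-3 (S0-S3), exposure: 0-4 (E0-E4), controllability: 0-3 (C0-C3).
    """
    if not (0 <= severity <= 3 and 0 <= exposure <= 4 and 0 <= controllability <= 3):
        raise ValueError("S/E/C class out of range")
    # A class of 0 (no injuries, incredible exposure, or controllable in general)
    # means no ASIL is assigned: quality management (QM) only.
    if 0 in (severity, exposure, controllability):
        return "QM"
    # The sum shortcut reproduces every cell of the ISO 26262-3 determination table.
    total = severity + exposure + controllability
    return {10: "ASIL D", 9: "ASIL C", 8: "ASIL B", 7: "ASIL A"}.get(total, "QM")


# The worst case (S3: life-threatening, E4: high exposure, C3: hard to control):
print(determine_asil(3, 4, 3))
```

Only the single combination S3/E4/C3 reaches ASIL-D, which is why it is reserved for the most hazardous situations an autonomous vehicle must handle.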

To date, automotive sensor systems have typically placed a microcontroller (MCU) alongside each individual sensor to pre-process its output before the data is passed to the vehicle’s hub.

A new car is currently thought to have between 60 and 100 sensors feeding its hubs, and analysts expect that number to double by 2020 as the fully autonomous vehicle moves closer to reality. The sensor technologies involved include radar, LIDAR, vision and others.

Mentor argues that pre-processing data in an MCU at each source imposes unnecessary latency on the final analysis, and that under such a distributed processing model, power consumption will grow as sensors proliferate. Together, these constitute a roadblock to full ASIL-D autonomy.

Real-time autonomous vehicle data analysis

The DRS360 architecture is therefore based on collecting only raw data from edge sensors. This removes the need for edge MCUs, cutting latency and keeping power consumption within a 100W target envelope. Eliminating all those distributed MCUs also reduces cost.
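
The power side of Mentor’s argument is essentially arithmetic: in a distributed design every added sensor brings its own pre-processing MCU, so that part of the budget grows linearly with sensor count, while a raw-data design pays only for the central hub. The sketch below makes the comparison explicit against the 100W envelope. It is a deliberately simplified model: the per-MCU and hub wattages are hypothetical placeholders, not Mentor or vendor figures, and it ignores the sensors’ own draw and any growth in hub load with data volume. Only the 100W envelope and the 60-100 sensor range come from the article.

```python
POWER_ENVELOPE_W = 100.0  # target envelope Mentor quotes for DRS360


def distributed_power_w(n_sensors: int, mcu_w: float, hub_w: float) -> float:
    """Hub plus one pre-processing MCU per sensor: grows linearly with n_sensors."""
    return hub_w + n_sensors * mcu_w


def centralized_power_w(hub_w: float) -> float:
    """Raw data only, no edge MCUs: the hub is the only processing load counted."""
    return hub_w


def fits_envelope(power_w: float, envelope_w: float = POWER_ENVELOPE_W) -> bool:
    return power_w <= envelope_w


# Hypothetical figures, chosen only to show the scaling trend.
MCU_W = 0.5               # assumed draw of one edge MCU
DISTRIBUTED_HUB_W = 60.0  # assumed hub draw when it receives pre-processed data
CENTRAL_HUB_W = 90.0      # assumed (larger) hub draw when it fuses raw data

for n in (60, 100, 200):  # today's range and a doubled sensor count
    d = distributed_power_w(n, MCU_W, DISTRIBUTED_HUB_W)
    c = centralized_power_w(CENTRAL_HUB_W)
    print(f"{n} sensors: distributed {d:.0f} W (fits: {fits_envelope(d)}), "
          f"centralized {c:.0f} W (fits: {fits_envelope(c)})")
```

With these placeholder values, the distributed design leaves the envelope as soon as the sensor count passes 80, whereas the centralized figure does not depend on sensor count at all in this model.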

The raw sensor outputs are fed into a hub processing unit in the vehicle that performs what Mentor calls “centralized raw data fusion”. This relies on neural networks and machine-learning algorithms, which Mentor says enable Level 5/ASIL-D autonomy (Figure 1).

Figure 1. Centralized raw data fusion architecture for an autonomous vehicle (Mentor)
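
Mentor does not publish DRS360’s fusion algorithm, and its neural-network approach is not reproduced here. As a simpler stand-in for the “centralized raw data” idea, the sketch below uses classical Bayesian log-odds updates to fuse raw returns from several sensors into one shared occupancy grid: every sensor writes into the same central model instead of sending pre-processed object lists. The class, grid size, cell size and per-sensor hit probabilities are all hypothetical, and for brevity only occupied cells are updated; a full inverse sensor model would also mark cells along each beam as free.

```python
import math


class OccupancyGrid:
    """Square 2-D grid centred on the vehicle; each cell holds log-odds of occupancy."""

    def __init__(self, size_cells: int = 100, cell_m: float = 0.5):
        self.size_cells = size_cells  # hypothetical: 100 x 100 cells
        self.cell_m = cell_m          # hypothetical: 0.5 m per cell (covers +/-25 m)
        # Log-odds of 0.0 corresponds to p = 0.5, i.e. "unknown".
        self.log_odds = [[0.0] * size_cells for _ in range(size_cells)]

    def _cell(self, x_m: float, y_m: float):
        """Convert vehicle-frame metres to grid indices, or None if off the grid."""
        half = self.size_cells // 2
        i = math.floor(x_m / self.cell_m) + half
        j = math.floor(y_m / self.cell_m) + half
        if 0 <= i < self.size_cells and 0 <= j < self.size_cells:
            return i, j
        return None

    def update(self, points, p_hit: float):
        """Fuse one sensor's raw (x, y) returns; p_hit is how far that sensor is trusted."""
        delta = math.log(p_hit / (1.0 - p_hit))
        for x_m, y_m in points:
            cell = self._cell(x_m, y_m)
            if cell is not None:
                i, j = cell
                # Independent evidence adds in log-odds space.
                self.log_odds[i][j] += delta

    def probability(self, x_m: float, y_m: float) -> float:
        cell = self._cell(x_m, y_m)
        if cell is None:
            return 0.5  # off the grid: unknown
        i, j = cell
        return 1.0 / (1.0 + math.exp(-self.log_odds[i][j]))


grid = OccupancyGrid()
grid.update([(10.2, 1.1)], p_hit=0.7)                # hypothetical radar return
grid.update([(10.3, 1.2), (20.0, -3.0)], p_hit=0.9)  # hypothetical LIDAR returns
print(round(grid.probability(10.2, 1.1), 3))         # prints 0.955
```

The radar and LIDAR returns land in the same cell, so the fused estimate (about 0.955) is more confident than either sensor alone (0.7 and 0.9), which is the benefit a shared central model offers over fusing separately pre-processed outputs.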

“With access to a high-resolution model of the vehicle’s surrounding environment, our perception algorithms make decisions faster and with more processing efficiency than other ADAS/AD solutions,” Mentor says.

“And our use of advanced neural networking caps DRS360 power consumption at just 100W.”

CPU load is also kept in check because raw data is processed in real time only within “the region of interest”.
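
The article does not say how DRS360 defines its region of interest. Purely to illustrate why restricting processing to an ROI bounds the work done per frame, the sketch below keeps only the raw points that fall inside a hypothetical axis-aligned box in vehicle coordinates, so later, more expensive stages see only those points. The `Point` and `RegionOfInterest` types, the box dimensions and the point format are assumptions.

```python
from typing import Iterable, List, NamedTuple


class Point(NamedTuple):
    x_m: float  # forward distance from the vehicle, metres
    y_m: float  # lateral offset, metres (left positive)
    z_m: float  # height, metres


class RegionOfInterest(NamedTuple):
    """Hypothetical axis-aligned box in vehicle coordinates."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z_min: float
    z_max: float

    def contains(self, p: Point) -> bool:
        return (self.x_min <= p.x_m <= self.x_max
                and self.y_min <= p.y_m <= self.y_max
                and self.z_min <= p.z_m <= self.z_max)


def crop_to_roi(points: Iterable[Point], roi: RegionOfInterest) -> List[Point]:
    """Keep only raw points inside the ROI; the rest skip the heavy processing stages."""
    return [p for p in points if roi.contains(p)]


# Hypothetical ROI: 50 m ahead, 10 m wide, from just below road level to 3 m high.
roi = RegionOfInterest(0.0, 50.0, -5.0, 5.0, -0.5, 3.0)
frame = [Point(12.0, 1.0, 0.5), Point(-8.0, 0.0, 0.2),
         Point(30.0, 20.0, 1.0), Point(45.0, -4.5, 2.0)]
print(crop_to_roi(frame, roi))
```

Here only two of the four points survive the crop; the point behind the vehicle and the one far to the side are never passed downstream, so the cost of later stages scales with the ROI rather than with the full sensor field of view.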

Mentor’s launch version of DRS360 uses the Xilinx Zynq UltraScale+ MPSoC, a device that combines ARM processor cores with FPGA programmable logic. Mentor says the platform has also been designed to run on SoCs based on both x86 and ARM processor architectures.

Mentor says DRS360 also incorporates headroom for future advances in AI and machine learning.