Virtual prototyping case study focuses on address mapping, clocking and QoS in DDR memory interface optimisation

How do you optimise a generic DDR memory controller to deliver a specified performance?

A case study that follows up an earlier white paper on optimising DDR memory subsystems answers that question by applying virtual-prototyping techniques to the address mapping, clock frequency, and Quality of Service (QoS) configuration of a DDR controller.

The case study takes a generic mobile application processor SoC as its subject, modelling the performance of the SoC and the workload of the application running on it.

The SoC has a multi-ported DDR3 memory subsystem, four bus nodes, and multiple bus masters representing two CPU clusters, DMA, GPU, and an I/O cluster with a camera interface and two LCD controllers. Traffic is modelled as a set of flows generated by the bus masters. In the scenario studied, one CPU handles high-priority tasks for users, while it and a second CPU handle background tasks, and the attached camera, GPU, and LCD perform time-critical tasks to capture, process and display an image.

The case study uses Synopsys’ Platform Architect as a tool within which to assemble a performance model of the SoC, drawing on library elements for features such as bus masters, AXI interconnects, and a generic multi-port memory controller, which models the memory subsystem including port-handler, arbiter, scheduler, protocol controller, and DDR memory.

Platform Architect is also used to model trace-based elastic workloads and provide deadline analysis. Each traffic flow is described as a sequence of reads and writes with particular transaction attributes, such as burst size, commands, addresses, and byte enables. The performance requirements of individual and concatenated traffic flows can be specified using timer commands.

This approach leads to an elastic workload model that captures the intended transaction sequence and throughput per flow and the dependencies between flows, as well as responding realistically to contention and starvation in the interconnect and memory subsystem.
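As a rough illustration of what an elastic flow with a deadline means, the following Python sketch models a flow as a list of transactions and checks whether it finishes within its time budget. It is not Platform Architect's format or API. The class names, the fixed per-transaction latency, and the numbers in the example are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Transaction:
    command: str       # "read" or "write"
    address: int       # byte address
    burst_bytes: int   # payload size of the burst


@dataclass
class Flow:
    name: str
    transactions: List[Transaction]
    deadline_ns: float  # time budget for the whole sequence (hypothetical)


def flow_duration_ns(flow, latency_ns, bandwidth_bytes_per_ns):
    """Elastic execution: each transaction starts only after the previous
    one completes, so extra latency stretches the whole flow."""
    total = 0.0
    for txn in flow.transactions:
        total += latency_ns + txn.burst_bytes / bandwidth_bytes_per_ns
    return total


def meets_deadline(flow, latency_ns, bandwidth_bytes_per_ns):
    return flow_duration_ns(flow, latency_ns, bandwidth_bytes_per_ns) <= flow.deadline_ns


# Hypothetical camera flow: 100 write bursts of 64 bytes, 5000 ns budget.
camera = Flow(
    name="camera_capture",
    transactions=[Transaction("write", 0x1000 + 64 * i, 64) for i in range(100)],
    deadline_ns=5000.0,
)

uncontended = meets_deadline(camera, latency_ns=20.0, bandwidth_bytes_per_ns=4.0)
contended = meets_deadline(camera, latency_ns=40.0, bandwidth_bytes_per_ns=4.0)
```

In this toy model the flow meets its deadline at 20 ns per-transaction latency and misses it at 40 ns. A deadline analysis reports the same kind of outcome, although a real simulation derives the latency from contention rather than taking it as an input.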

The case study goes on to describe how the SoC performance model and the workload model were combined to run multiple simulations, with a variety of parameters, to optimise the memory subsystem.

This work included efforts to tailor the address-mapping strategy to the traffic scenario, to find the lowest-cost DDR3 speed-bin parts that deliver the necessary performance, and to tune the overall memory subsystem to meet QoS requirements.

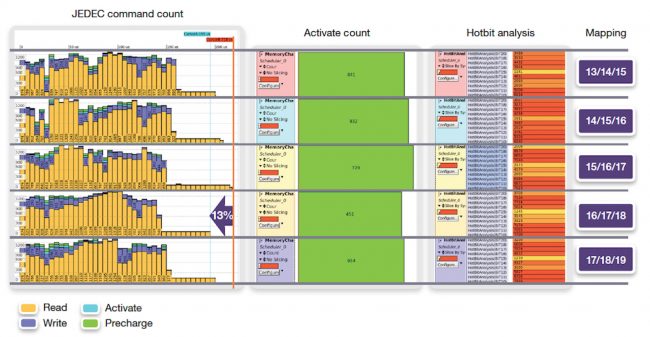

The study describes techniques for analysing the mapping of AXI address bits to memory bits for the traffic scenario, using Platform Architect to provide visualisations of the impact of various strategies, such as Figure 1.

Figure 1 Analysing various address mapping optimisation strategies (Source: Synopsys)
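To make concrete why address mapping matters, the sketch below decodes addresses into row, bank and column fields under two candidate layouts and compares the row-buffer hit rate for two interleaved sequential streams. The field widths, layout names, stream base addresses and the simple open-page model are all assumptions chosen for illustration. They are not taken from the case study.

```python
def decode(address, layout):
    """Split an address into named fields.
    `layout` lists (name, width_in_bits) starting from the least significant bit."""
    fields = {}
    for name, width in layout:
        fields[name] = address & ((1 << width) - 1)
        address >>= width
    return fields


def row_hit_rate(addresses, layout):
    """Open-page model: an access hits if its bank already has the same row open."""
    open_rows = {}
    hits = 0
    for address in addresses:
        f = decode(address, layout)
        if open_rows.get(f["bank"]) == f["row"]:
            hits += 1
        open_rows[f["bank"]] = f["row"]
    return hits / len(addresses)


# Two hypothetical layouts, listed from the LSB upwards.
ROW_BANK_COL = [("column", 10), ("bank", 3), ("row", 14)]  # bank bits just above column
BANK_ROW_COL = [("column", 10), ("row", 14), ("bank", 3)]  # bank bits at the top

# Two sequential streams in different memory regions, strictly interleaved.
stream_a = [0x0000000 + 64 * i for i in range(16)]
stream_b = [0x1000000 + 64 * i for i in range(16)]
traffic = [addr for pair in zip(stream_a, stream_b) for addr in pair]

rate_row_bank_col = row_hit_rate(traffic, ROW_BANK_COL)
rate_bank_row_col = row_hit_rate(traffic, BANK_ROW_COL)
```

With these particular streams, the first layout places both streams in the same bank but in different rows, so every access forces a row change. The second layout places them in separate banks, so each stream keeps its row open after the first access. Which layout wins depends entirely on the traffic, which is why the mapping is tuned against the actual scenario.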

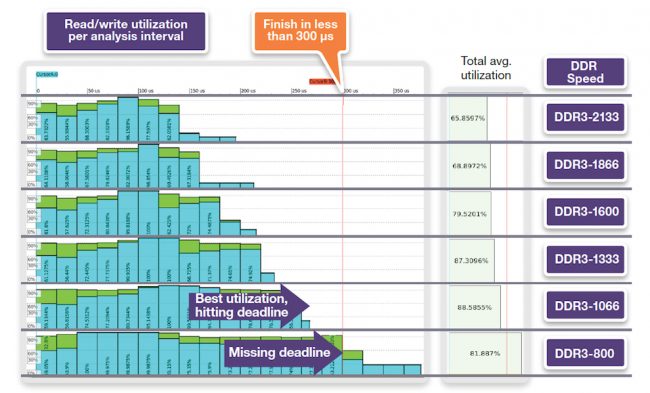

The tool was also used to visualise the trade-off between minimising DDR clock frequency (which saves power and cost) and meeting performance requirements, as shown in Figure 2.

Figure 2 Trading off the speed of DDR3 memory with meeting system deadlines (Source: Synopsys)
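A first-order version of this trade-off can be estimated before any simulation is run. The sketch below picks the slowest standard DDR3 speed bin whose usable bandwidth covers a required figure. The bus width, the efficiency factor and the required bandwidth are hypothetical inputs. The case study itself relies on simulation of deadlines rather than on this kind of peak-bandwidth arithmetic.

```python
# Standard DDR3 speed bins, expressed as data rate in mega-transfers per second.
DDR3_SPEED_BINS = {
    "DDR3-800": 800,
    "DDR3-1066": 1066,
    "DDR3-1333": 1333,
    "DDR3-1600": 1600,
    "DDR3-1866": 1866,
    "DDR3-2133": 2133,
}


def usable_bandwidth_mb_s(data_rate_mt_s, bus_width_bytes, efficiency):
    """Peak bandwidth (transfers/s x bytes/transfer) scaled by an assumed
    efficiency factor that stands in for refresh, turnaround and row misses."""
    return data_rate_mt_s * bus_width_bytes * efficiency


def lowest_sufficient_bin(required_mb_s, bus_width_bytes, efficiency):
    """Return the slowest bin that meets the requirement, or None if none does."""
    for name, rate in sorted(DDR3_SPEED_BINS.items(), key=lambda item: item[1]):
        if usable_bandwidth_mb_s(rate, bus_width_bytes, efficiency) >= required_mb_s:
            return name
    return None


# Hypothetical inputs: 32-bit data bus, 70% efficiency, 3500 MB/s required.
choice = lowest_sufficient_bin(required_mb_s=3500, bus_width_bytes=4, efficiency=0.7)
```

An estimate like this only narrows the candidates. Whether a given bin actually meets per-flow deadlines under contention is what the simulation in Figure 2 is meant to show.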

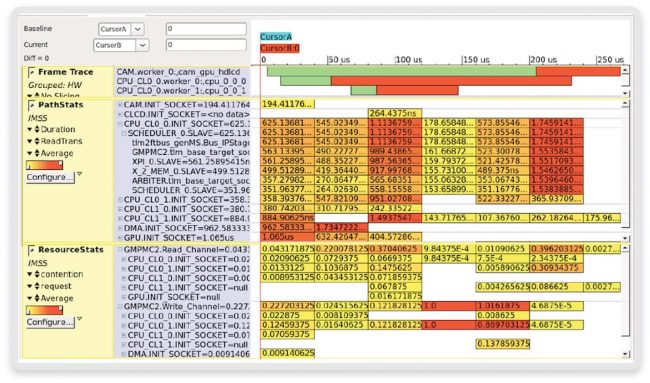

The case study goes on to describe how the tool can be used to ensure that optimising the choice of DDR3 memory speed bin does not clash with meeting QoS goals. A further simulation results view (in Figure 3) shows the deadline trace of the combined real-time traffic and the two high-priority CPU tasks for the DDR3-1066 memory configuration. It shows that all three of these QoS-related deadlines are violated in the configuration analysed.

Figure 3 Detailed analysis of deadlines (top), transaction duration (middle), and memory-scheduler contention (bottom) (Source: Synopsys)

The figure also shows (middle) a Path Statistics chart, with a heat map of the average read transaction duration per initiator over time. This shows that transactions can be delayed in the port interface while waiting for the memory arbiter, but that most of the delay occurs during the final step inside the memory scheduler. The resource statistics view at the bottom of the figure shows the read and write queues inside the memory scheduler, revealing total contention and the contribution from each initiator.

The case study then uses a similar approach to explore options for optimising QoS. These include changing the speed of the DDR3 memory used, adjusting how different types of traffic are mapped to QoS classes, using a bigger content-addressable memory (CAM) to increase the size of the command array, and providing separate input queues.

The combination of five design parameters (speed bin, CPU priority, real-time task priority, CAM size and number of ports) yields 48 parameter combinations, demonstrating the value of having a tool such as Platform Architect that can sweep through a set of scenarios and aggregate the results into a spreadsheet for analysis.
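The kind of sweep described here can be sketched in a few lines of Python. The five parameter names come from the text, but the value sets below are hypothetical, chosen only so that they multiply out to 48 combinations, because the article does not list the values actually used. The `run_simulation` callback is also a placeholder for whatever launches a simulation and returns a result.

```python
import csv
import io
import itertools

# Hypothetical value sets: 3 x 2 x 2 x 2 x 2 = 48 combinations.
PARAMETERS = {
    "speed_bin": ["DDR3-1066", "DDR3-1333", "DDR3-1600"],
    "cpu_priority": ["low", "high"],
    "realtime_priority": ["low", "high"],
    "cam_size": [16, 32],
    "num_ports": [4, 6],
}


def all_combinations(parameters):
    """Return one dict per point in the full Cartesian product of the parameters."""
    names = list(parameters)
    return [dict(zip(names, values))
            for values in itertools.product(*parameters.values())]


def sweep_to_csv(parameters, run_simulation):
    """Run `run_simulation(config)` for every combination and collect the
    results as CSV text that a spreadsheet can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(parameters) + ["result"])
    writer.writeheader()
    for config in all_combinations(parameters):
        writer.writerow({**config, "result": run_simulation(config)})
    return buffer.getvalue()


# Placeholder callback: a real sweep would launch a simulation here.
report = sweep_to_csv(PARAMETERS, run_simulation=lambda config: "not run")
```

Even at this small scale, adding one more two-valued parameter doubles the number of runs, which is the practical argument for automating the sweep and the aggregation of results.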

To read the full case study, including further details of how the modelling, simulation and analysis strategy was implemented, please click here.