Neural networks and vector processors deployed by Ceva for 5G handsets

The increased complexity of 5G cellular compared to its predecessors has led Ceva to combine several approaches in its IP platform for the first wave of handsets to support the New Radio (NR) standard.

Ceva has decided to embrace machine learning, hardware acceleration, specialized instructions and vector processing to provide enough horsepower to support the latency reductions and bandwidth increases that NR is meant to deliver.

“Before, we addressed [cellular standards] with standard DSPs for both basestations and terminals. This is not a complete modem but a subsystem that addresses the specific challenges of 5G,” said Emmanuel Gresset, director of business development at Ceva.

The subsystem is designed to support Release 15, and then Release 16 through a software upgrade. Although the polar and LDPC encoders and decoders used for error detection and correction are implemented in hardware, most of the 5G-specific functions are designed to support software tuning. For example, the control-oriented X2 DSPs, which schedule activity across the PentaG subsystem, have gained instructions that support functions such as channel-state information (CSI) reporting. These instructions are implemented as primitives rather than complete microcoded operations. According to Ceva, the CSI reporting instructions provide a tenfold improvement in performance over standard instructions.

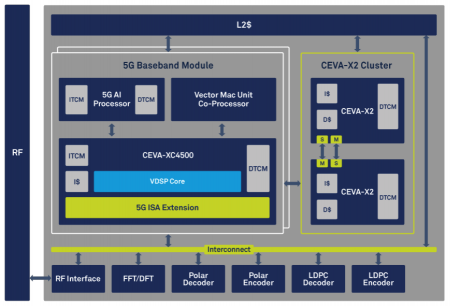

Image: Block diagram of the Ceva PentaG subsystem

The main components of the PentaG subsystem are an extended version of the XC4500 DSP originally developed for LTE handsets, a vectorized 64-MAC unit for estimating channel state and MIMO processing, a cluster of X2 control-oriented DSPs, the error-coding units and a programmable neural-network processor. Gresset said the company found it was better to extend the XC4500 with custom operations rather than attempt to build a lower-power version of the XC12 that was developed for 5G basestations.

The neural-network processor marks a change in the way cellular transceivers are built. It reflects the complexity faced by 5G implementors dealing with the combination of MIMO and beamforming on sub-6GHz and millimeter-wave channels.

“All the technology is enabled by massive MIMO and beamforming. This is efficient only if link adaptation is very well done,” Gresset said. “But it’s a complex task. If the link adaptation is not accurate you will not achieve the throughput and you will consume a lot more power [per bit]. The burden of finding the best parameters for link adaptation is on the UE [handset or terminal].

“That’s why we came up with a neural network-based approach. The AI processor takes as input the channel conditions and it computes the best transmit parameters. It’s a programmable neural network but it’s optimized for link adaptation,” Gresset added. “It can be configured for multiple neural networks potentially. We provide tools for customers to train the networks and generate microcode for the AI processor.

“We looked at traditional, more algorithmic approaches. The [parameter] dimensions are so large that it would require a lot of memory to do that. The complexity increases exponentially.”
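
To make Gresset's memory argument concrete, the sketch below compares the size of an exhaustive lookup table over quantized channel measurements with the parameter count of a small fully connected network, and shows the data flow from channel features to a chosen transmit parameter. It is only an illustration: the article does not disclose the network architecture, input features or output format of Ceva's AI processor, so the layer sizes, the 8 inputs with 16 quantization levels and every function name here are hypothetical. The weights are random and untrained, so the selected index carries no meaning.

```python
import math
import random


def lookup_table_entries(num_inputs, levels_per_input):
    """Entries in an exhaustive table over quantized channel inputs.

    The count grows exponentially with the number of inputs.
    """
    return levels_per_input ** num_inputs


def mlp_parameter_count(layer_sizes):
    """Weights plus biases of a fully connected network."""
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


class TinyMLP:
    """Minimal fully connected network with ReLU hidden layers (untrained)."""

    def __init__(self, layer_sizes, seed=0):
        rng = random.Random(seed)
        self.layers = []
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
            weights = [[rng.uniform(-1.0, 1.0) / math.sqrt(n_in)
                        for _ in range(n_in)]
                       for _ in range(n_out)]
            biases = [0.0] * n_out
            self.layers.append((weights, biases))

    def forward(self, x):
        last = len(self.layers) - 1
        for i, (weights, biases) in enumerate(self.layers):
            x = [sum(w * v for w, v in zip(row, x)) + b
                 for row, b in zip(weights, biases)]
            if i < last:
                x = [max(0.0, v) for v in x]  # ReLU on hidden layers only
        return x


def select_transmit_parameter(model, channel_features):
    """Return the index of the highest-scoring candidate parameter set."""
    scores = model.forward(channel_features)
    return max(range(len(scores)), key=scores.__getitem__)


if __name__ == "__main__":
    # Hypothetical sizes: 8 channel features in, 4 candidate settings out.
    layer_sizes = [8, 16, 16, 4]
    model = TinyMLP(layer_sizes)
    features = [0.1 * i for i in range(8)]  # placeholder channel measurements

    print("table entries:", lookup_table_entries(8, 16))        # 16**8
    print("network parameters:", mlp_parameter_count(layer_sizes))
    print("selected index:", select_transmit_parameter(model, features))
```

With these assumed sizes the table needs 16^8 = 4,294,967,296 entries, while the network holds 484 parameters. Adding a ninth input multiplies the table by 16 but adds only 16 weights to the first layer of the network.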