Arm Cortex-A processor team focuses on formal

Arm is on the way to making formal a fundamental part of its verification strategy for ARM Cortex-A processors as it increases its emphasis on designing high-end processors for safety-critical systems and to kill off bugs as early as possible during each project.

Vikram Khosa, principal engineer in Arm’s processor-design team based in Austin, explained the formal-verification methodology the company is following in an international seminar organized by TV&S in mid-May. “We started late 2013 and did some initial work on the Cortex-A57 and A72. We inherited some homegrown methods and developed some of our own.” The initial infrastructure was built around Cadence Design Systems’ JasperGold, with tools such as Synopsys’ Hector employed for C-to-RTL verification in specific units, the multiplier being one of them.

“Late 2014 we started on a more serious effort on the current generation of ARM Cortex CPUs. Management support was absolutely critical to sign off on this. We got some initial help from Oski Technology to bootstrap. And along the way built up a small dedicated formal team.”

The second formal effort aimed to use formal verification on most of the functional units in the processor. Ultimately, formal will extend to the entire processor subsystem. “For functional safety we are trying to to make it a formal-only verification effort,” Khosa said.

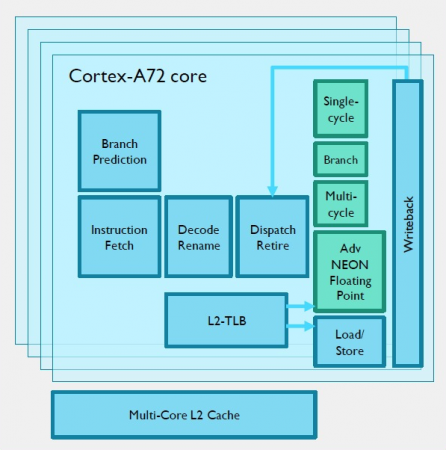

Image The complexity of processor cores such as the Cortex-A72 has helped drive formal adoption

Multiple goals

The verification team had a number of goals in the formal program that stretched from bringing designers into the process with a number of ‘light’ techniques focused on assertions through a number of focused techniques to sign-off proofs. “One of the key sign-off goals was ‘no unexplained fails’,” Khosa said.

To help designers, the team provided a collection of macros to help with the writing of embedded properties, training and demonstrations of tool techniques, and shared traces and environments to help designers debug their blocks. “We want to make everyone formal literate to some degree,” Khosa said.

During the program, Khosa said they found designers split into three broad groups. The majority group developed a rich set of embedded properties as well as some interface properties. “There were pockets of deeper engagement,” Khosa added, with some designers using formal more extensively for bring-up and debug on their own. “Overall formal was perceived as a viable form of verification.”

Bugs were often found ahead of simulation, sometimes because simulation had not reached into the extreme corners. In some cases, bugs were caught because the simulation stimulus that would have triggered them had been turned off accidentally.

Custom flows

For deeper verification, the team created several custom flows either entirely inhouse or in collaboration with tool vendors. They included tools for deep bug-hunting, equivalence checking, primarily to verify the floating-point units, and deadlock hunting. As the program developed, formal became the primary vehicle for verifying the logic in floating-point units.

“Most bugs were found pre-alpha. There was a slow but steady trickle through beta but most were found in alpha,” Khosa said. “There were no surprises [in terms of] bug locations. The memory system tends to be the buggiest area.”

In terms of the percentage of bugs tracked since the end of 2015, those in the memory systems accounted for close to two-thirds of the total. Instruction fetch was a distant second at around 20 per cent.

At the start of the program, the verification team made a number of assumptions of how formal would be used. “We assumed there was no need to craft elaborate stimulus: we could start off relatively unconstrained and build as needed. Also, we assumed some cross-feed with simulation: we help simulation and simulation helps us. The reality was different,” Khosa said.

One issue was that the design of constraints that suit formal do not necessarily mesh well with simulation: there is often a small intersection of properties that offer high value for both formal and dynamic verification. Another issue was that formal’s ability to reach into extreme corners was overkill for designers working to bring up a block. “Debug bandwidth itself became a bit of a bottleneck,” Khosa said.

Verification tradeoffs

Interfaces between blocks could be particularly problematic, becoming hard to constrain and to model effectively as things changed. Elements such as initial value abstractions that allow inconclusive results to be used to get to a useful state faster could prove counterproductive at times, leading to an overly complex web of dependencies. “We had to replace some of them with simpler abstraction models,” Khosa added.

The program exposed a tradeoff in measuring the performance of verification. One issue, Khosa said, is that bug-hunting engines do not contribute to formal coverage. So, the question becomes how much time does the team invest in either to get closer to sign-off. For new projects, the team favors an emphasis on bug-hunting rather than chasing progress in metrics, encouraging designers to write “interesting covers early” and with earlier use of complexity reduction and bug-hunting techniques.

A further tradeoff is between compute resource for formal versus simulation. There were other valuable lessons, such as the need to to identify fertile areas of design that are highly suited to formal verification. Highly complex logic in instruction fetch and dispatch, interactions with memory, and the huge state space of a floating-point unit were key examples. For a follow-up project to help scale up the formal effort, a trace cache in the instruction-fetch unit of a derivative processor design became a candidate for a formal-oriented bring-up process. “What else pays off? Rigorous ground-up planning and early investment in tools and flows.”

Future plans

To move the strategy forward, the team decided formal literacy of some form is vital at all levels, but that flows should be simplified “to where they can be almost pushbutton. We want to move to a point where the formal lead is more decoupled and unit teams are more self-sufficient,” Khosa said.

As part of the revised flow, the Arm aims to make formal a permanent part of unit-level smoke testing as well as regression testing, with rotating set of configurations run each night. But the work is far from done, Khosa said: “We’ve gone a long way and have a long way to go still.”