Triage without tears: improving debug’s most human challenge

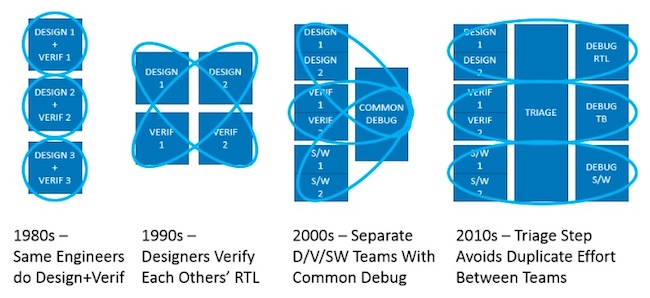

Triage remains one of the design and verification tasks most resistant to automation. Regression tests can deliver results, but deciding which bugs are most important and how to address them inherently requires human decision-making.

Not surprisingly, the triage task has grown in line with design complexity. Debug overall is thought to account for 40% of verification time. Within that, the analysis, categorization, prioritization and assignment of debug tasks can take up 20%-50% of the debug effort. Obviously, an efficient verification team wants to be at the lower end of that range.

Toward a triage checklist

To that end, Gordon Allan, Product Manager for Questa Simulation at Mentor, has put together a useful set of vendor- and tool-neutral tips and techniques to help control the process in “Evolution of Triage: Real-Time Improvements in Debug Productivity”.

The main takeaways lie in Allan’s breakdown of the increasingly complex triage task into four dominant ‘patterns’ and then four models within which techniques can be used to improve these processes.

The patterns are:

i. Triage of a single failing test to disposition corrective action.

ii. Triage of a failing test or failure mode in historical context to disposition longer term corrective action.

iii. Triage of multiple failing tests to disposition resource focus and priority.

iv. Triage to identify systemic failures.

The corresponding models, discussed in greater detail in Allan’s article, are:

A. A four-step categorization of an individual failure, stretching from the failure mode to comparison against a ‘golden reference’.

B. A three-step analysis of multiple failures to identify commonality across groups, beginning with error-message comparison and moving on to the comparison of failure modes.

C. A two-pronged approach to analyzing a greater number of failures that looks for potential root causes common to groups, drawing on both formal and root-cause data from your debug software.

D. An analysis of a single failure across multiple regression runs, drawing on its frequency, whether or not it is sporadic and so on, and applying the results to current and future triage sessions.
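As a rough illustration of the first step in model B, error-message comparison, the sketch below groups failing tests by a normalized form of their first error message. It is not taken from Allan's article: the function names, the masking rules and the sample log lines are all hypothetical, and a real flow would read the messages from your own regression results.

```python
import re
from collections import defaultdict

def normalize(message: str) -> str:
    """Reduce an error message to a signature by masking run-specific details."""
    sig = re.sub(r"0x[0-9a-fA-F]+", "<HEX>", message)  # hex addresses and data
    sig = re.sub(r"\d+", "<N>", sig)                   # timestamps, counts, indices
    return re.sub(r"\s+", " ", sig).strip()            # collapse whitespace

def group_by_signature(failures):
    """Group (test_name, first_error_message) pairs by normalized message."""
    groups = defaultdict(list)
    for test, message in failures:
        groups[normalize(message)].append(test)
    # Largest groups first: these are candidates for a shared root cause.
    return sorted(groups.items(), key=lambda kv: len(kv[1]), reverse=True)

# Hypothetical failures, invented purely for illustration.
failures = [
    ("test_dma_burst", "Error: @ 1200 ns: scoreboard mismatch at addr 0x1f00"),
    ("test_dma_wrap", "Error: @ 3450 ns: scoreboard mismatch at addr 0x0040"),
    ("test_irq_mask", "Error: @ 90 ns: timeout waiting for irq 3"),
]

for signature, tests in group_by_signature(failures):
    print(f"{len(tests)} test(s): {signature} -> {tests}")
```

Grouping on the message text alone is only a starting point. As model B suggests, groups found this way would still need to be checked against the actual failure modes before anyone assumes a common cause.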

“Tools cannot perform the triage role per se,” Allan observes, “but they can help with data gathering, reporting and initial analysis, with the remainder of the analysis being manual based on our experience.

“Part of the effort is having good data at our fingertips during regression analysis and results analysis phases, including a historical context. Next is to develop a precision in the way decisions and factors of interest are communicated to the next person in line to continue with deeper debug.”
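The historical context Allan mentions, along with the frequency and sporadic-or-not questions in model D, can be approximated with very little data. The sketch below is an assumption-laden example rather than anything prescribed by the article: it takes one test's pass/fail outcomes across regression runs and attaches a failure rate and a coarse label. The label names and the rules behind them are hypothetical.

```python
def classify_history(history):
    """Summarize one test's outcomes across regression runs.

    history: list of "pass" / "fail" strings, oldest run first.
    Returns (failure_rate, label). The labels are illustrative only.
    """
    fails = history.count("fail")
    rate = fails / len(history) if history else 0.0
    if fails == 0:
        label = "passing"
    elif fails == len(history):
        label = "consistent"        # has failed in every recorded run
    elif all(r == "fail" for r in history[history.index("fail"):]):
        label = "new-regression"    # passed at first, then failed in every later run
    else:
        label = "sporadic"          # mixes passes and failures over time
    return rate, label

# Hypothetical histories, invented purely for illustration.
histories = {
    "test_dma_burst": ["pass", "pass", "fail", "fail"],
    "test_irq_mask": ["pass", "fail", "pass", "fail"],
    "test_pll_lock": ["fail", "fail", "fail"],
}

for test, history in histories.items():
    rate, label = classify_history(history)
    print(f"{test}: {rate:.0%} failing, {label}")
```

A label like this is the kind of fact that can be handed to the next person in line: a sporadic failure and a new regression usually call for different follow-up, even when they fail at the same rate.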

“Evolution of Triage: Real-Time Improvements in Debug Productivity” is available for immediate download.