Synopsys claims 35 TOPS performance from new family of embedded vision cores

Synopsys has updated its ARC line of processor IP with the launch of the EV7x family of embedded vision processors, which it says will have up to four times the performance of the EV6x generation.

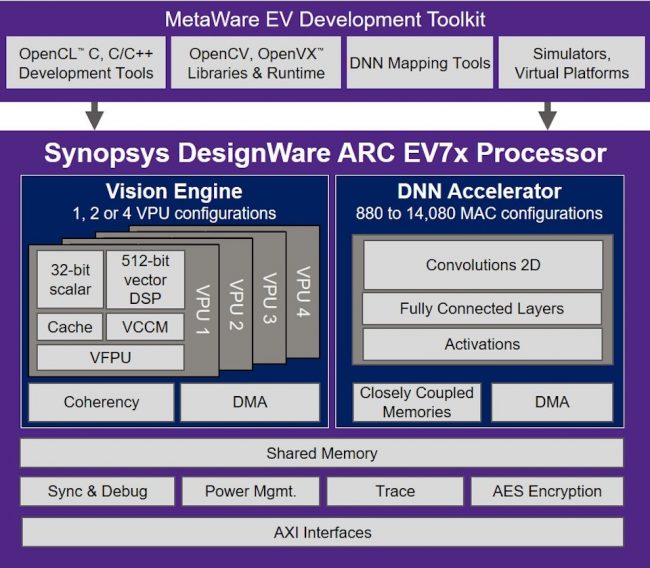

The cores include a Vision Engine with up to four enhanced vector processing units (VPUs) and a deep neural network (DNN) accelerator with up to 14,080 multiply-accumulate units (MACs), for machine learning (ML) and artificial intelligence (AI) edge applications. In this configuration, the core has a theoretical performance of up to 35 TOPS in a 16nm finFET process at typical conditions.
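As a rough sanity check on the headline figure, the sketch below relates MAC count, clock rate, and peak TOPS. It assumes the common convention that one multiply-accumulate counts as two operations, and it attributes the full 35 TOPS to the DNN accelerator alone. Neither assumption is stated by Synopsys, and the function names are illustrative, so the implied clock should be read as an estimate rather than a specification.

```python
def peak_tops(macs: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Peak throughput in tera-operations per second.

    ops_per_mac=2 is an assumed convention (one multiply plus one add).
    """
    return macs * ops_per_mac * clock_hz / 1e12


def implied_clock_hz(tops: float, macs: int, ops_per_mac: int = 2) -> float:
    """Clock rate needed for `macs` units to reach `tops` at peak."""
    return tops * 1e12 / (macs * ops_per_mac)


if __name__ == "__main__":
    clock = implied_clock_hz(35, 14_080)
    # Under the stated assumptions this lands a little above 1.2 GHz.
    print(f"Implied clock: {clock / 1e9:.2f} GHz")
    print(f"Round trip:    {peak_tops(14_080, clock):.1f} TOPS")
```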

The EV7x cores have been designed with architectural enhancements including more granular clock and power gating to cut power consumption. Development tool support includes Synopsys’ MetaWare EV Development Toolkit, which works with common embedded vision standards such as OpenVX™ and OpenCL™ C.

Potential applications include advanced driver assist systems (ADAS), video surveillance, smart home, and augmented and virtual reality.

Figure 1 The ARC EV7x processor core has been designed for embedded vision tasks (Source: Synopsys)

Each of the EV7x VPUs has a 32-bit scalar unit and a 512-bit-wide vector DSP. The vector DSP can be configured for 8-, 16-, or 32-bit operations so it can perform simultaneous multiply-accumulates on different streams of data.
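To make the width trade-off concrete, the following sketch counts how many elements fit in one 512-bit vector at each supported element size. It assumes the vector is fully packed with elements of a single size. The source does not say how many MACs each lane performs per cycle, so only the lane count is computed, and the names are illustrative.

```python
VECTOR_WIDTH_BITS = 512           # from the article
SUPPORTED_ELEMENT_BITS = (8, 16, 32)


def lanes(element_bits: int) -> int:
    """Number of elements that fit in one fully packed vector."""
    if element_bits not in SUPPORTED_ELEMENT_BITS:
        raise ValueError(f"unsupported element width: {element_bits}")
    return VECTOR_WIDTH_BITS // element_bits


if __name__ == "__main__":
    for bits in SUPPORTED_ELEMENT_BITS:
        print(f"{bits:2d}-bit elements: {lanes(bits)} lanes per vector")
```

Halving the element width doubles the number of data streams processed per vector operation, which is why lower-precision modes are attractive for inference workloads.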

The optional DNN accelerator can be configured with between 880 and 14,080 MACs. It has been designed for fast memory access, performance, and power efficiency. The DNN accelerator’s architecture has been tuned to support convolutional neural networks (CNNs), as well as batched LSTMs (long short-term memories) for applications that require time-based results, such as predicting the future path of a pedestrian.

The Vision Engine and the DNN accelerator can work in parallel so, for example, the Vision Engine handles any custom layers of a CNN. Alternatively, the processing power of the two blocks can be used to handle multiple cameras and vision algorithms concurrently.

Synopsys has worked to manage the memory bandwidth challenges of accelerating ML and AI workloads on chip. For example, algorithms can be partitioned with respect to the feature maps, and when the output of one layer of a graph is to be used as the input to the next, these intermediate results can be broadcast over DMA channels to other engines. Similarly, Synopsys is implementing techniques to merge pooling and convolution layer calculations, in a process it calls multilevel layer fusion, to reduce memory accesses. At a less granular level, processes such as simultaneous localization and mapping (SLAM), as used in robotics and autonomous vehicles, are being merged with convolutional neural networks for object recognition and location tasks.
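The general idea behind fusing a convolution with the pooling layer that follows it can be illustrated with a toy one-dimensional example. This is a generic sketch of layer fusion, not Synopsys' implementation: the function names are hypothetical, and the "intermediate values stored" count simply stands in for traffic to a feature-map buffer.

```python
def conv1d(x, k):
    """Valid 1-D convolution (correlation form) of signal x with kernel k."""
    n = len(x) - len(k) + 1
    return [sum(x[i + j] * k[j] for j in range(len(k))) for i in range(n)]


def maxpool1d(y, size=2):
    """Non-overlapping max pooling with window and stride equal to `size`."""
    return [max(y[i:i + size]) for i in range(0, len(y) - size + 1, size)]


def unfused(x, k, size=2):
    """Run the layers separately; the whole conv output is stored first."""
    intermediate = conv1d(x, k)
    return maxpool1d(intermediate, size), len(intermediate)


def fused(x, k, size=2):
    """Compute each pooled output directly; no intermediate map is stored."""
    n = len(x) - len(k) + 1
    out = []
    for start in range(0, n - size + 1, size):
        best = None
        for i in range(start, start + size):
            v = sum(x[i + j] * k[j] for j in range(len(k)))
            best = v if best is None else max(best, v)
        out.append(best)
    return out, 0


if __name__ == "__main__":
    x, k = [1, 3, 2, 5, 4, 6, 0, 7], [1, -1]
    print(unfused(x, k))  # pooled result plus intermediate values stored
    print(fused(x, k))    # same pooled result, nothing stored in between
```

Both paths produce the same pooled output, but the fused version keeps each convolution result only long enough to compare it within its pooling window, which is the kind of saving that reduces memory accesses.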

The size of the largest DNN configuration, with 14,080 MACs, also makes it easier to map large algorithms onto the processor, and therefore reduces the number of external memory accesses needed. Synopsys also suggests that it may be possible to connect up to four of the most highly configured EV7x processor cores over an on-chip network to achieve aggregate performance of 100 TOPS.

Other enhancements to the EV7x family over the EV6x family include an optional AES-XTS encryption engine to protect data passing from on-chip memory to the Vision Engine and DNN accelerator. This could help protect high-value data, such as training datasets and personal biometric data, from being exploited.

The cores are also available in ASIL B and ASIL D compliant versions, known as the ARC EV7xFS parts, to speed up ISO 26262 certification of automotive SoCs. The functional safety-enhanced processors offer hardware safety features, safety monitors, and lockstep capabilities that enable designers to achieve stringent levels of functional safety and fault coverage without significant impact on power or performance.

The supporting MetaWare EV Development Toolkit enables the development of efficient computer vision applications on the EV7x processor’s Vision Engine, as well as automatic mapping and optimization of neural network graphs on the dedicated DNN accelerator. The mapping tools support the Caffe and TensorFlow frameworks, as well as the ONNX neural network interchange format.

Further information

ARC EV7x Embedded Vision Processors, the DNN accelerator option with up to 14,080 MACs, and MetaWare EV software are expected to be available to lead customers in Q1 2020.

The DNN accelerator option with up to 3,520 MACs is available now. For more information on Synopsys ARC EV7x Processor IP: