How cloud computing is now delivering efficiencies for IC design

A Mentor-Microsoft-AMD pathfinder demonstrates the potential benefits of cloud-based physical verification.

The concept and use of shared computing resources have been around since the 1960s. But the term ‘cloud computing’ did not emerge until the 1990s, along with vigorous efforts to standardize, optimize, and simplify the use of virtual computing resources. At this early stage, cloud security structures and processes were often deemed inadequate by the semiconductor industry because of concerns about the protection of intellectual property (IP).

Since then, the companies providing cloud computing have improved security measures to the point where proprietary IP can be adequately safeguarded. Such a level of security, combined with the overwhelming need to increase resources to keep up with the growth in verification runtimes, has led IC design companies to add cloud computing to the processing toolbox.

As IC companies increasingly look to leverage cloud capacity for faster turnaround times on advanced process node designs, they are seeking assurances that running EDA software in the cloud will deliver the same sign-off verification results they know and trust from traditional environments, and allow them to adjust their resource usage to best fit business requirements and market demands.

Mentor, a Siemens Business (Mentor), teamed up with Advanced Micro Devices, Inc. (AMD) and Microsoft Azure (Azure) to evaluate how well the Calibre physical verification platform could be used in the cloud to reduce design closure times by employing more compute resources to get designs to market faster. For a production 7nm design, the team achieved a 2.5X speedup in cycle time. Real-world results from test projects such as this provide the confidence IC companies need to move forward and adopt new processing models.

Let’s look at some key elements of such an effort, with specific reference to this test, which was based on the final metal tape-out database of a production 7nm Radeon Instinct Vega20. It is currently AMD’s largest 7nm design and contains more than 13 billion transistors.

Best practices

Any time new processes are introduced, certain methods will emerge as the optimal means of achieving the desired or intended results. Around this test project, Mentor developed proposed cloud usage guidelines and best practices for running Calibre operations in the cloud. Particular areas of focus included:

- Foundry rule decks;

- Software versions; and

- Cloud server selection.

Foundry rule decks

Design companies should always use the most recent foundry-qualified rule deck to ensure that the most recent best practices for coding are implemented. These decks may include optimizations specifically designed to improve efficiency during remote distributed processing.

Software versions

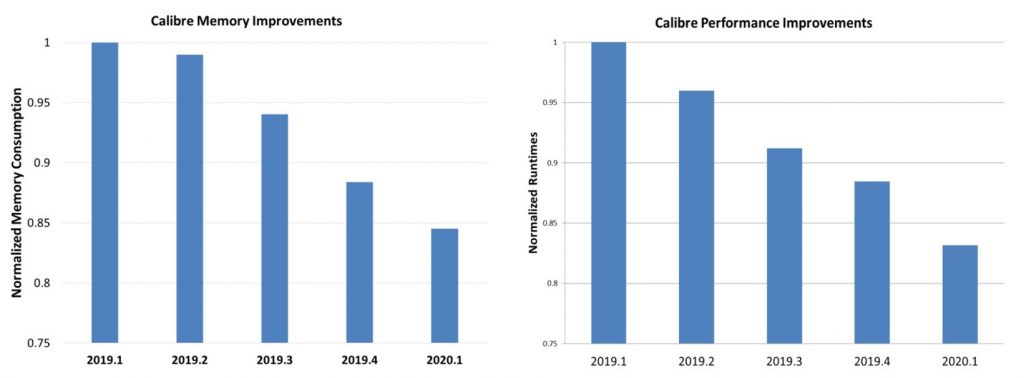

Likewise, companies should always use the most current version of their EDA software to ensure the best performance possible. For instance, because Mentor optimizes and updates the Calibre engines for every Calibre release, using the most current version always ensures optimized runtimes and memory consumption (Figure 1).

Figure 1: Normalized memory usage vs. Calibre release versions (left); Normalized runtime vs. Calibre release versions (right) (Mentor)

Cloud server selection

The market offers a variety of server types for cloud operations, with the “best” selection dependent on the customer’s needs and applications.

For the collaborative project, the team selected the AMD EPYC servers available for the Microsoft Azure public cloud. Each EPYC server type has different core, memory, interface, and performance characteristics, enabling cloud users to select the EPYC server best suited for their applications.

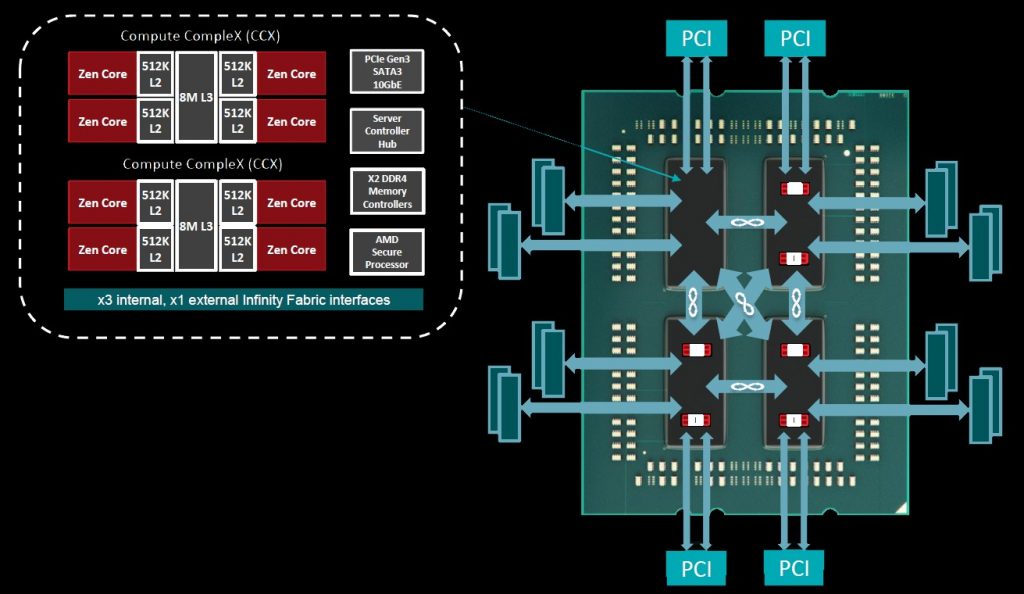

The AMD EPYC architecture (Figure 2) is well suited to massive parallelism: its 32 cores/64 threads per socket support computationally heavy runs. Eight DDR4 memory channels further improve its ability to handle compute-intensive workloads. In addition, the hierarchical cache design, with 8MB of L3 cache per four cores, further boosts computational speed.
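As a quick sanity check on those figures, the short sketch below derives the per-socket totals implied by the numbers quoted above. It is only arithmetic on the article's own values; the variable names are illustrative, and the result assumes every group of four cores has its own 8MB L3 slice.

```python
# Per-socket figures quoted in the article for the AMD EPYC part.
CORES_PER_SOCKET = 32
THREADS_PER_SOCKET = 64
CORES_PER_L3_GROUP = 4   # cores sharing one L3 slice
L3_MB_PER_GROUP = 8      # MB of L3 per group of four cores

# Derived values (assumes every group of four cores has its own 8MB slice).
threads_per_core = THREADS_PER_SOCKET // CORES_PER_SOCKET
l3_groups = CORES_PER_SOCKET // CORES_PER_L3_GROUP
l3_total_mb = l3_groups * L3_MB_PER_GROUP

print(f"threads per core:    {threads_per_core}")
print(f"L3 groups per socket: {l3_groups}")
print(f"total L3 per socket:  {l3_total_mb} MB")
```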

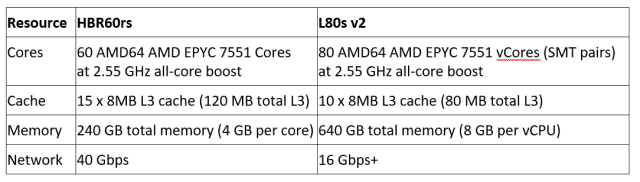

These cloud servers are used to create virtual machines (instances) in the cloud service. The project team determined the two most appropriate Azure instances for Calibre applications are the HB60rs and the L80s v2. Both run on EPYC 7551 processors but with different configurations and functionality.

For example, the HB60rs instance uses the same EPYC 7551 processor as the Lv2 instances, but only 60 of the 64 cores in the two-socket machine are accessible to the instance, and hyperthreading is turned off. That is because the HB series instances are optimized for applications driven by memory bandwidth, such as fluid dynamics and explicit finite element analysis, while the Lv2 instances are designed to support demanding workloads that are storage intensive and require high levels of I/O. Table 1 provides a summary comparison of the attributes for each server type.

EDA in the cloud

Calibre setup

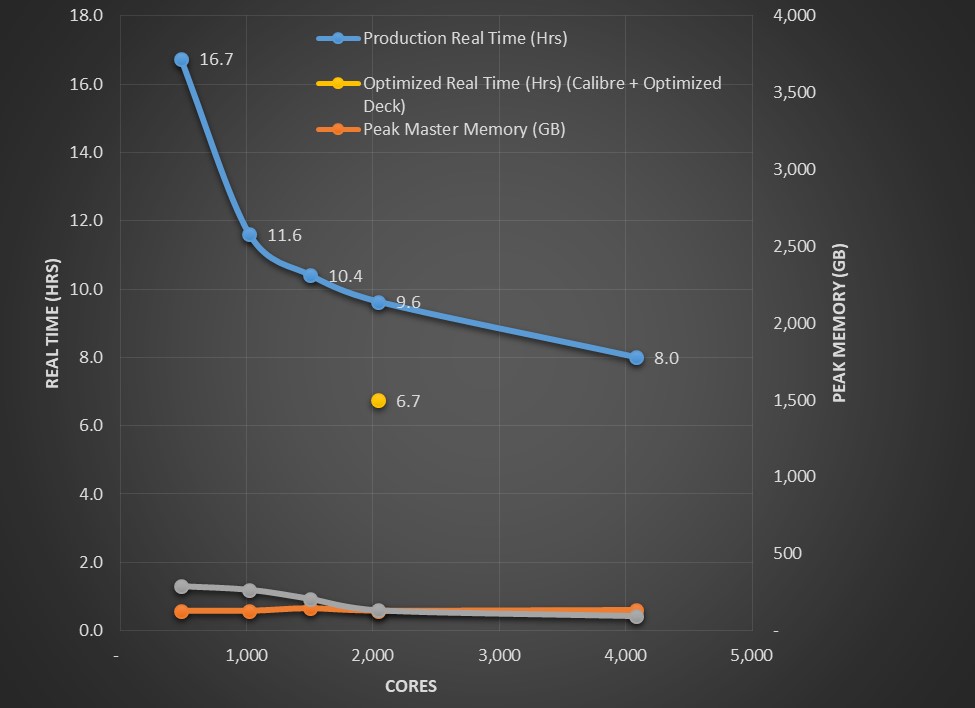

The Calibre 2019.2 release was used with a production version of the foundry deck for the 7nm technology node to perform design rule checking (DRC) on the design. For the Calibre nmDRC run, the team used the Calibre hyper-remote distributed computing capability, which supports up to 4,000 cores (Figure 3).

As in all Calibre distributed computing runs, a master was assigned to manage all other resources used in the run. For the purposes of the collaboration, both the designated master and the remote servers were AMD EPYC 7551 servers with 32 CPU cores and 256 GB of RAM.

Cloud setup

The project team ran all experiments using the AMD EPYC servers for both the master and remote servers, using the following hardware configuration:

- Microsoft Azure HB60rs instances: A single HB60rs master was run in conjunction with 17, 25, 34, and 68 remote HB60rs instances in successive experiments. Each remote instance was dedicated entirely to running Calibre jobs, and the Azure CycleCloud interface was used to invoke and manage the jobs.

- Geographically close servers: All cloud servers on the project were in the Azure region closest to the physical location of the hardware used to initiate and control the cloud usage.

- Assembly: To minimize the latency between initiating a job and actual execution, the design was assembled in the cloud as blocks were ready.
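To relate the instance counts in this configuration to the core counts discussed in the results, the following sketch multiplies each remote instance count by the 60 cores an HB60rs instance exposes. It assumes every remote instance contributes all 60 cores to the Calibre run and excludes the master; the function and variable names are illustrative only.

```python
# Cores exposed by one HB60rs instance, as stated in the article
# (60 of the 64 physical cores, hyperthreading off).
CORES_PER_HB60RS = 60

# Remote instance counts used in the experiments, from the article.
REMOTE_INSTANCE_COUNTS = [17, 25, 34, 68]


def remote_cores(instances: int, cores_per_instance: int = CORES_PER_HB60RS) -> int:
    """Total cores across the remote instances (master excluded).

    Assumes each remote instance dedicates all of its cores to the run.
    """
    return instances * cores_per_instance


core_table = {n: remote_cores(n) for n in REMOTE_INSTANCE_COUNTS}

for n, cores in core_table.items():
    print(f"{n:>3} remote instances -> {cores:>5} cores")
```

Under that assumption, the four configurations correspond to roughly 1K, 1.5K, 2K, and 4K remote cores.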

Results

The results of the collaborative project provided the following performance metrics:

- The speed of the Calibre nmDRC run continued to increase all the way up to 4K cores.

- There is always a ‘knee’ in this scaling curve where the best value for money is achieved. For this design and node, the knee was reached between 1.5K and 2K cores.

- Peak cumulative memory used on the master and remote servers was less than 500GB.

- Peak remote server memory decreased as more cores were added.

In an on-premise Calibre nmDRC run, Mentor normally recommends using 256 cores for full-chip DRC because that is the number of on-site resources most design teams typically have access to during a tape-out. The turnaround time using 256 cores can be as long as 24 hours for a large, complex 7nm design like the AMD Radeon VII/MI60 GPU. Using this number of resources means a team will typically complete only one design iteration per day, which is far slower than what most of today’s time-to-market schedules demand.

By increasing that resource to 2K cores for the test, the team reduced runtime to 12 hours, allowing two iterations per day. Going all the way to 4K cores resulted in a runtime of less than eight hours, and three iterations per day. The experiment clearly demonstrated that combining the power and efficiency of Calibre scaling with a significant increase in the number of available cores enables companies using Calibre software in the cloud to dramatically improve the rate of design closure simply by reducing runtimes.
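The trade-off behind these numbers can be made explicit with a little arithmetic. The sketch below takes the three reported data points and derives iterations per day, speedup over the 256-core baseline, parallel efficiency, and core-hours consumed. Several things here are assumptions rather than figures from the project: "2K" and "4K" are read as 2,000 and 4,000 cores, "less than eight hours" is rounded up to 8 hours, and core-hours are used only as a rough stand-in for cost, not as actual cloud pricing.

```python
import math

# (cores, runtime in hours) as reported in the article.
# Assumptions: "2K"/"4K" read as 2000/4000 cores; "<8 h" taken as 8 h.
RUNS = [(256, 24.0), (2000, 12.0), (4000, 8.0)]


def summarize(runs):
    """Derive simple scaling metrics relative to the first (baseline) run."""
    base_cores, base_hours = runs[0]
    rows = []
    for cores, hours in runs:
        speedup = base_hours / hours
        rows.append({
            "cores": cores,
            "hours": hours,
            # Whole DRC iterations that fit in a 24-hour day.
            "iterations_per_day": math.floor(24 / hours),
            "speedup": speedup,
            # Speedup divided by the increase in cores (1.0 = ideal scaling).
            "efficiency": speedup / (cores / base_cores),
            # Rough proxy for compute consumed per run (not a price).
            "core_hours": cores * hours,
        })
    return rows


summary = summarize(RUNS)

for row in summary:
    print(
        f"{row['cores']:>5} cores: {row['hours']:>4.1f} h, "
        f"{row['iterations_per_day']} iter/day, "
        f"speedup {row['speedup']:.1f}x, "
        f"efficiency {row['efficiency']:.2f}, "
        f"{row['core_hours']:.0f} core-hours"
    )
```

Read this way, each additional daily iteration is bought with a growing number of core-hours, which is why a team would weigh schedule pressure against compute consumed when choosing where to sit on the scaling curve.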

Mentor continuously works to improve Calibre performance, and collaborates with foundries to identify and deploy performance-focused deck optimizations (while ensuring the same or better accuracy). The team ran an additional experiment to see if there was any benefit from using the latest version of Calibre and the latest optimized deck. The results, shown by the optimized real time (yellow dot) in Figure 2, demonstrated that an additional three hours could be saved at the knee of the scaling curve (this occurred at about 2K cores).

Summary

Cloud computing is now accessible and practical for silicon system design. However, like any new technology, whether or not it provides a benefit to your company is a matter of analysis and careful preparation.

This collaboration between Mentor, AMD, and Azure highlights the reductions in time and costs of cloud usage that can be achieved by implementing best practices and usage guidelines for EDA cloud computing. Companies can use these experiences to guide their own EDA-in-the-cloud implementations. By adopting similar strategies and practices, companies can achieve faster overall runtimes, and thereby both reduce their time to market and speed up innovation.

About the author

Omar El-Sewefy is a DRC Technical Lead in the Design to Silicon division of Mentor, a Siemens Business. He received his BS and MS in electronics engineering from Ain Shams University in Cairo, Egypt, and is currently pursuing a PhD in silicon photonics physical verification at Ain Shams University. His industry experience includes semiconductor resolution enhancement techniques (RET), source mask optimization, design rule checking, and silicon photonics physical verification. He may be reached at omarUNDERSCOREelsewefyATmentorDOTcom.