Skeet shooting and design debug

Historically, in-circuit emulation (ICE) was the first mode in which hardware emulators were deployed, and it remains the most popular to this day. In this mode, the emulator is plugged into a socket on the physical target system in place of the yet-to-be-built chip, so that the design-under-test (DUT) mapped inside the emulator can be exercised and debugged with live data.

This admittedly attractive verification approach comes with a bag of issues, though, the most severe being its random nature, that is, its lack of deterministic or repeatable behavior when debugging the DUT. An analogy may help to put the problem into perspective.

Let’s consider skeet shooting, a shooting sport in which a clay target is thrown from a trap to simulate the flight of a bird. And let’s add a twist to make it even more challenging: limit the shooter's vision with a pair of glasses that restrict the eyesight to a narrow window. The shooter must now “guess” the trajectory of the clay target, which changes on every throw. This is exactly the challenge that the verification engineer has to cope with when debugging a chip design in ICE mode. Finding a bug in ICE mode is like trying to hit the clay target while hoping to catch a glimpse of it through that narrow window.

Figure 1 Skeet shooting with constrained vision poses a big challenge to the shooter

A little history may be in order to set the stage. For over 20 years now, surveys have consistently reported that design verification consumes somewhere near 70 per cent of the design cycle. On the bright side, design verification is an activity that more or less can be organized in advance in that it is predictable. But design debugging is a pursuit that cannot be planned ahead because bugs show up unexpectedly in an unknown location, at an unknown time, due to an unknown cause.

It is conceivable that in system-on-chip (SoC) designs with vast amounts of embedded software, some bugs, whether in hardware or software, sit deep in unknown corners of the design that can be unearthed only after long execution. It is entirely possible that it can take billions of clock cycles before coming across a bug.

Even more frustrating is the random nature of debugging, which requires repeated runs before the culprit is found. If a bug isn’t deterministic, that is to say, it cannot be replicated in subsequent runs, the search can turn into a nightmare.

Critical unknowns

When applied to designs of hundreds of millions of gates that require long sequences of billions of verification cycles for debugging, the three critical unknowns (location, time and cause) can considerably delay the schedule of even the most well-thought-out test plan. A three-month delay to a new product in a highly competitive market will cut 33 per cent off its total potential revenues. This is large enough to justify the most expensive verification solution. This brings me to hardware emulation. Hardware emulators shorten execution and debugging time by virtue of their extremely fast performance. In fact, their speedy execution was the reason for devising them.

The concept was simple: check the DUT against the actual physical target system where the yet-to-be-built chip will ultimately be plugged in. No more writing test vectors or testbenches. Let the real world do the job thoroughly and quickly. Supposedly, the real world is better at finding nasty bugs dormant in obscure design areas than any software testbench, isn’t it?

In-circuit debug issues

As it turned out, all that glitters is not gold. For all its alluring promises, debugging a chip design in ICE mode is cumbersome and frustrating for two reasons. First, the emulator must be connected to the target system. For that, a speed adapter is needed to adapt the fast clock speed of the physical target system to the relatively slow clock speed of the emulator, which may run three orders of magnitude slower. Basically, the adapter is a first-in-first-out (FIFO) register that trades functionality and accuracy for performance. A high-speed protocol such as PCIe or Ethernet is downgraded to cope with the intrinsic capacity limitation of the FIFO.
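To make the rate mismatch concrete, here is a minimal sketch in Python that models the adapter as a bounded FIFO sitting between a fast target and a slow emulator. The function name, the FIFO depth of 16 words, and the policy of dropping words that arrive at a full FIFO are illustrative assumptions, not a description of any real speed adapter; only the 1,000x speed ratio comes from the "three orders of magnitude" mentioned above.

```python
from collections import deque


def run_speed_adapter(fifo_depth, speed_ratio, target_cycles, send_every=1):
    """Toy model of a speed adapter (hypothetical, for illustration only).

    The target tries to push one word every `send_every` target cycles.
    The emulator pops one word every `speed_ratio` target cycles.
    Words that arrive while the FIFO is full are counted as dropped.
    Returns (delivered, dropped).
    """
    fifo = deque()
    delivered = 0
    dropped = 0
    for cycle in range(target_cycles):
        # Emulator side: drains one word every `speed_ratio` target cycles.
        if cycle % speed_ratio == 0 and fifo:
            fifo.popleft()
            delivered += 1
        # Target side: offers a word at its own (possibly throttled) rate.
        if cycle % send_every == 0:
            if len(fifo) < fifo_depth:
                fifo.append(cycle)
            else:
                dropped += 1
    return delivered, dropped


# Full-speed target: the FIFO fills almost at once and nearly all words are lost.
full_speed = run_speed_adapter(fifo_depth=16, speed_ratio=1000, target_cycles=10_000)

# Target throttled to the emulator's pace: nothing is lost, but the
# protocol no longer runs at its real-world speed.
throttled = run_speed_adapter(fifo_depth=16, speed_ratio=1000,
                              target_cycles=10_000, send_every=1000)

print(full_speed, throttled)
```

In this toy model the only way to avoid losing traffic is to slow the target down to the emulator's pace, which is the kind of protocol downgrade described above.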

The adapter and connecting cables add physical dependencies and weaknesses to the setup with negative impact on the reliability of the system. Moreover, the setting is limited to only one test case per protocol and it does not allow for corner case testing or any sort of ‘what if’ analysis. And, finally, an ICE mode setting cannot be accessed remotely without onsite help to plug and unplug the target system.

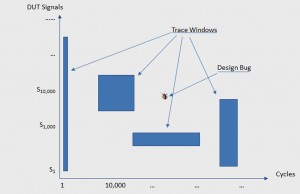

The second drawback, and the most underestimated, is the lack of deterministic behavior, which complicates and prolongs the search for a bug in ICE mode when the target system clocks the design. Tracing a bug in the DUT with hardware emulators in ICE mode requires capturing the activity of each register in the design at full speed, triggered on specific events. In emulators based on custom silicon, each design register is connected to a trace memory without compiling the connection. At run-time, the verification engineer can trade off the number of tracing clock cycles against the number of design registers to be traced.

In commercial FPGA-based emulators, only a limited number of design registers can be connected to a trace memory via the compilation process. Adding or changing a register for tracing requires recompilation of the design, with a rather dramatic increase in design iteration time. In both emulator types the user can increase the tracing depth by decreasing the number of traced registers, or vice versa.
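The depth-versus-width trade-off is simple arithmetic, and a small Python sketch may help to size it. The assumptions here are mine: one bit of trace memory per register per clock cycle, and a hypothetical 1 Gbit trace memory. Real emulators may compress or organize trace data differently.

```python
def trace_depth(trace_memory_bits, traced_registers):
    """Clock cycles that fit in the trace memory, assuming (hypothetically)
    one bit stored per traced register per cycle."""
    if traced_registers <= 0:
        raise ValueError("at least one register must be traced")
    return trace_memory_bits // traced_registers


TRACE_MEMORY_BITS = 2**30  # hypothetical 1 Gbit trace memory

# Wide but shallow: every register of a one-million-register design.
wide = trace_depth(TRACE_MEMORY_BITS, 1_000_000)

# Narrow but deep: only a thousand hand-picked registers.
narrow = trace_depth(TRACE_MEMORY_BITS, 1_000)

print(wide, narrow)  # 1073 cycles versus 1073741 cycles
```

Under these assumptions, tracing everything yields a window of roughly a thousand cycles, a sliver of a run that may last billions of cycles.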

Figure 2 The trace window (cycles x signals) is limited by the memory size in the logic analyzer

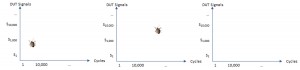

As a result, the user ends up making multiple runs, possibly in the hundreds, to find the debugging window of interest and dump the right waveforms. But the real problem is that each run may detect a bug (the same bug) at different time stamps or, even worse, may not detect any bug at all because of the unpredictable behavior. Remember the skeet shooting example?

Consider the case of an SoC populated with third-party IP. Time and again, an IP core that works in isolation does not work when embedded in the SoC. Debugging such IP deeply embedded in the DUT via the ICE mode may cause countless sleepless nights to the verification team.

Figure 3 In consecutive runs, the same design bug shows up at different time stamps or not at all

The good news is that the emulation world has changed dramatically with the advent of transaction-based co-modeling technology. By removing the physical target system and replacing it with a virtual test environment described at a high level of abstraction via C++/SystemVerilog and connected to the DUT via transactors, an entire set of problems disappears.

Testbench availability

High-level testbenches can be created in SystemVerilog in a fraction of the time required by traditional Verilog, and with fewer errors. Transactors can be purchased off the shelf. Even better, entire test environments targeting specific market applications such as multimedia, networking and storage, inclusive of virtual testbenches and transactors, are available under the brand name of VirtuaLAB from Mentor Graphics to support their Veloce2 emulation platform.

Most important, the design stimulus is now deterministic, thus leading to a faster debugging closure. Transactors provide a smooth transition from hardware description language (HDL) simulation to emulation. Clocks can be stopped and stepped through, ‘what if’ scenarios are now possible and corner cases can be modeled. Nowadays, engineers design chips by writing register transfer level (RTL) code and do not need to be exposed to lab equipment for testing their designs. Remote access is now possible and growing in popularity.
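The value of deterministic stimulus can be illustrated with a toy model in Python. Everything in it is hypothetical: the "DUT" is a loop, the "bug" fires when two consecutive stimulus words happen to be equal, and a seeded pseudo-random generator stands in for a virtual testbench. The point is only that a fixed seed reproduces the failure at the same cycle on every run, whereas an unseeded generator, like live traffic from a physical target, does not.

```python
import random


def first_failure_cycle(stimulus_seed, max_cycles=100_000):
    """Drive a toy DUT with pseudo-random bytes and return the cycle at
    which a hypothetical bug fires, or None if it never does.

    stimulus_seed: an int gives repeatable stimulus; None seeds the
    generator from system entropy, so every run differs.
    """
    rng = random.Random(stimulus_seed)
    previous = None
    for cycle in range(max_cycles):
        word = rng.randrange(256)
        # Hypothetical bug condition: two identical words back to back.
        if word == previous:
            return cycle
        previous = word
    return None


# Deterministic stimulus: the bug shows up at the same cycle every time,
# so the trace window can be positioned on it in the very next run.
run_a = first_failure_cycle(stimulus_seed=42)
run_b = first_failure_cycle(stimulus_seed=42)
print(run_a == run_b)  # True

# Non-repeatable stimulus: the failing cycle generally moves from run to run.
print(first_failure_cycle(None), first_failure_cycle(None))
```

Once the failing cycle is known and stable, the run can be repeated with the trace window placed exactly where it is needed, instead of hunting for it over hundreds of attempts.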

By combining SystemVerilog assertions with transactors, verification engineers can monitor their designs without speed degradation and with unlimited debugging capabilities. This gives them a window into the design as wide as they wish, unconstrained by memory space or time limitations, for quickly zeroing in on a bug. And engineers will find they have a lot less in common with the constrained-vision skeet shooter.

Dr Lauro Rizzatti is a verification consultant and industry expert on hardware emulation. He holds a doctorate in Electronic Engineering from the Università degli Studi di Trieste in Italy and presently divides his time between the US and Europe.
