Harness virtual machines to create an efficient ‘live’ hybrid testbench

This ‘how to’ guide shows how to combine the power of emerging and existing technologies for faster, more comprehensive test.

Ask yourself this question: “Is live traffic the best stimulus for testing RTL models?” Your answer is almost certainly “Yes.” But how we achieve that within a broad verification flow is changing. We need to combine different technologies to get the best test results for both quality and speed. We need to think in terms of hybrid testbenches that combine various modeling technologies.

I want to discuss some key components in these hybrids and then give you an example of how to pull them together.

Looking at the options now available, a good place to start is virtual machine (VM) technology.

VMs as building blocks

Most of you are familiar with VM technologies such as VMware, VirtualBox, QEMU, and KVM. They are used in a wide range of applications, from cloud server droplets that provide web services to alternative ‘guest’ operating systems.

For example, I run openSUSE 12.3 Linux as the host OS on my laptop. When I need to run a Windows-only application, I boot Windows 7 in my VirtualBox VM. Many colleagues do the reverse: they have Windows as the host OS with a VMware VM running Red Hat Linux for Linux-only EDA applications.

VMs can be used to create temporary droplets on cloud service providers to serve specific functions, such as acting as a dedicated web server. They can be ‘spun up’ and destroyed in seconds, yet provide the equivalent functionality, power, and performance of a full-blown workstation running the desired target OS. A good example is digitalocean.com.

For SoC verification, you can harness the same technology to create a virtual platform (VP) that brings VMs into your verification testbench. You then use ‘live traffic’ coming in and out of the VM as real-life stimulus applied to the RTL design-under-test (DUT).

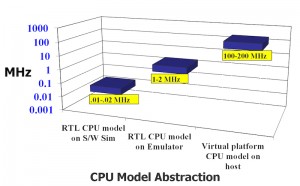

Beyond that, VPs have tremendous speed advantages. A workstation-class 3+ GHz host computer can typically achieve speeds in the 100–200 MHz range for a guest processor platform, such as ARM, and VM-based applications running on it. An RTL model of the same processor runs at 1–2 MHz on an emulator and, worse still, at mere 1/100ths of a MHz on an RTL simulator (Figure 1).

Figure 1 CPU model abstraction (Mentor Graphics)

But this is only the beginning. We can further exploit the advantages VPs offer and match them to the equally important capabilities of other test technologies.

VMs within the hybrid testbench

As noted, hybrid testbenches combine different modeling methodologies within a single testbench. Let’s look at a scenario where you introduce a VP alongside more traditional technologies, such as SystemVerilog (SV) UVM and SystemC models.

I recently developed a working prototype of a hybrid VP testbench that used a dual-core ARM Cortex A9 as the VM guest processor, Mentor Graphics’ Vista product as the VP engine, and the Questa simulator or Veloce emulator to run the RTL portion of the DUT. The configuration booted embedded Linux in just 10 seconds. Even when running the RTL on the simulator, boot-up time remained at 10 seconds because the RTL itself was not active during the boot-up phase.

Had we run an RTL model of the ARM A9 processor platform solely on the emulator, boot time would have been about 1,000 seconds and, I estimate, about another one hundred times that on the simulator (although we did not wait around to find out).
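As a rough cross-check of these boot-time figures, the Python sketch below scales the 10-second VP boot to the slower engines. It is illustrative arithmetic only: it assumes the boot takes the same number of processor cycles on every engine, and it picks single representative speeds (the low end of each range quoted earlier, and 0.01 MHz for the simulator), which are simplifications rather than measured values.

```python
# Representative speeds chosen from the ranges quoted in the article (assumptions).
VP_BOOT_SECONDS = 10.0   # boot time observed on the virtual platform
VP_HZ = 100e6            # low end of the 100-200 MHz VP range
EMULATOR_HZ = 1e6        # low end of the 1-2 MHz emulator range
SIMULATOR_HZ = 0.01e6    # "hundredths of a MHz" on an RTL simulator


def boot_seconds(target_hz: float) -> float:
    """Scale the VP boot time to another engine, assuming an equal cycle count."""
    boot_cycles = VP_BOOT_SECONDS * VP_HZ
    return boot_cycles / target_hz


emulator_boot = boot_seconds(EMULATOR_HZ)
simulator_boot = boot_seconds(SIMULATOR_HZ)

print(f"emulator boot:  {emulator_boot:,.0f} s")
print(f"simulator boot: {simulator_boot:,.0f} s")
```

Under these assumptions the emulator estimate lands on the order of 1,000 seconds and the simulator estimate about one hundred times higher, in line with the figures above.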

Creating a VP and coupling it to an RTL model to harness such compelling advantages is not as difficult as you might imagine.

Established standards can be applied to the task. Among these, TLM-2.0 (formally defined in the IEEE 1666 SystemC LRM) is especially useful. TLM-2.0 can be the basis for a “common connectivity fabric” you can use in a variety of ways to interconnect compliant testbench models.

For example, the Vista VP simulator is based on a SystemC engine that uses TLM-2.0 channels for component interconnects. So for the ARM Cortex A9 design mentioned above, it deploys an abstracted AXI bus to connect all the VP components, based on TLM-2.0 interfaces.
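To make the idea of a transaction-level interconnect concrete, here is a minimal Python stand-in. It is not SystemC and not the Vista API: the `Payload`, `Memory`, and `Bus` classes are hypothetical, and only the `b_transport` name is borrowed from the TLM-2.0 blocking transport interface. In real TLM-2.0 the delay is passed by reference; this sketch returns the updated delay instead.

```python
from dataclasses import dataclass
from enum import Enum


class Command(Enum):
    READ = "read"
    WRITE = "write"


@dataclass
class Payload:
    """Hypothetical stand-in for a TLM-2.0 generic payload."""
    command: Command
    address: int
    data: bytearray
    response_ok: bool = False


class Memory:
    """Target: services a transaction and adds its latency to the delay."""

    def __init__(self, size: int, latency_ns: int = 10) -> None:
        self.storage = bytearray(size)
        self.latency_ns = latency_ns

    def b_transport(self, trans: Payload, delay_ns: int) -> int:
        end = trans.address + len(trans.data)
        if trans.address < 0 or end > len(self.storage):
            trans.response_ok = False
            return delay_ns
        if trans.command is Command.WRITE:
            self.storage[trans.address:end] = trans.data
        else:
            trans.data[:] = self.storage[trans.address:end]
        trans.response_ok = True
        return delay_ns + self.latency_ns


class Bus:
    """Interconnect: routes each transaction to a target by address range."""

    def __init__(self) -> None:
        self.targets = []  # list of (base, size, target)

    def attach(self, base: int, size: int, target: Memory) -> None:
        self.targets.append((base, size, target))

    def b_transport(self, trans: Payload, delay_ns: int) -> int:
        for base, size, target in self.targets:
            if base <= trans.address < base + size:
                # Forward with a target-local address; the data buffer is shared.
                local = Payload(trans.command, trans.address - base, trans.data)
                delay_ns = target.b_transport(local, delay_ns)
                trans.response_ok = local.response_ok
                return delay_ns
        trans.response_ok = False  # no target mapped at this address
        return delay_ns


bus = Bus()
bus.attach(0x1000, 256, Memory(256))

write = Payload(Command.WRITE, 0x1010, bytearray(b"\xde\xad\xbe\xef"))
delay = bus.b_transport(write, 0)

read = Payload(Command.READ, 0x1010, bytearray(4))
delay = bus.b_transport(read, delay)

unmapped = Payload(Command.READ, 0x9000, bytearray(4))
bus.b_transport(unmapped, 0)
```

The point of the sketch is that an initiator sees one function call per transaction, with timing carried as an annotation rather than as clock edges.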

Using cross-language connectivity fabrics, such as Mentor’s open-source UVM-Connect package, TLM-2.0 connections can also cross the boundaries between, say, SystemC and SV-UVM portions of a hybrid testbench.

The VP and the RTL portions of the design run on different time bases: the VP runs at a fast rate, while the RTL runs at a comparatively slow rate. TLM-2.0 allows you to create a ‘speed bridge’ between the VP and the DUT that reconciles the two.
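The following toy model sketches what such a bridge does, under heavy simplification. The `WriteTxn` and `SpeedBridge` names are hypothetical, and the model ignores the separate address, data, and response channels and the handshakes of a real AXI interface. It shows only that one untimed call on the fast side turns into several clock cycles on the slow side.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class WriteTxn:
    """Hypothetical transaction posted by the fast (VP) side."""
    address: int
    data: bytes


class SpeedBridge:
    """Toy bridge: queues whole transactions, drives one data beat per clock."""

    def __init__(self, bus_bytes: int = 4) -> None:
        self.bus_bytes = bus_bytes
        self.pending = deque()   # beats waiting for the slow side
        self.beats_driven = []   # (cycle, address, beat) seen by the slow side
        self.cycle = 0

    def post(self, txn: WriteTxn) -> None:
        """Fast side: split the transaction into beats and return immediately."""
        for offset in range(0, len(txn.data), self.bus_bytes):
            beat = txn.data[offset:offset + self.bus_bytes]
            self.pending.append((txn.address + offset, beat))

    def clock(self) -> None:
        """Slow side: advance one clock and drive at most one beat."""
        self.cycle += 1
        if self.pending:
            address, beat = self.pending.popleft()
            self.beats_driven.append((self.cycle, address, beat))

    def drain(self) -> int:
        """Clock the slow side until the queue is empty; return cycles used."""
        start = self.cycle
        while self.pending:
            self.clock()
        return self.cycle - start


bridge = SpeedBridge(bus_bytes=4)
bridge.post(WriteTxn(0x2000, bytes(range(16))))  # one call on the fast side
cycles_used = bridge.drain()                     # several clocks on the slow side
print(f"1 transaction -> {cycles_used} clock cycles")
```

Decoupling `post` from `clock` is the design choice that matters here: the fast side never waits on individual clock edges, so it only slows down when it actually needs a result from the RTL.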

Further, because TLM-2.0 is built into both SystemC and SV-UVM, the hybrid testbench can be expanded to incorporate a UVM portion that performs the functions at which UVM excels.

This means that you leverage the strengths of the different languages and modeling methodologies within those areas of the testbench where they are most needed.

- The power of SystemC/TLM-2.0:

  - Good interfacing capability and access to Linux host system APIs (invariably written in C). SystemC is a C++ class library, so it gets all this capability as a given.

  - Direct access to host resources, such as disk, networks, device drivers, and X Window System displays.

  - Stimulus via “real” host machine system interfaces and virtual platforms

- The power of SystemVerilog UVM:

  - Constrained random traffic and sequence generation

  - Coverage and scoreboarding facilities
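To illustrate the UVM-side roles in a language-neutral way, the sketch below mimics constrained random generation, functional coverage, and scoreboarding in plain Python. It is not SystemVerilog or UVM code. The address window, the set of legal burst lengths, and the dictionary standing in for the DUT are all assumptions made for the example.

```python
import random
from collections import Counter

WINDOW_BYTES = 0x1000          # hypothetical 4 KB address window
BURST_LENGTHS = (1, 4, 8, 16)  # hypothetical legal burst lengths, in 32-bit words


def constrained_random_txn(rng: random.Random) -> dict:
    """Random write burst: word-aligned, legal length, stays inside the window."""
    length = rng.choice(BURST_LENGTHS)
    last_legal_word = WINDOW_BYTES // 4 - length
    address = rng.randint(0, last_legal_word) * 4
    data = [rng.getrandbits(32) for _ in range(length)]
    return {"address": address, "length": length, "data": data}


class Scoreboard:
    """Tracks expected memory contents and counts read-back mismatches."""

    def __init__(self) -> None:
        self.expected = {}
        self.mismatches = 0

    def record_write(self, address: int, word: int) -> None:
        self.expected[address] = word

    def check_read(self, address: int, word: int) -> None:
        if self.expected.get(address) != word:
            self.mismatches += 1


rng = random.Random(1)   # fixed seed so the run is repeatable
dut_memory = {}          # stand-in for the DUT; a real flow would drive RTL
scoreboard = Scoreboard()
coverage = Counter()     # one coverage bin per burst length

for _ in range(200):
    txn = constrained_random_txn(rng)
    coverage[txn["length"]] += 1
    for i, word in enumerate(txn["data"]):
        addr = txn["address"] + 4 * i
        dut_memory[addr] = word
        scoreboard.record_write(addr, word)

for addr, word in dut_memory.items():
    scoreboard.check_read(addr, word)

coverage_pct = 100.0 * len(coverage) / len(BURST_LENGTHS)
print(f"burst-length coverage: {coverage_pct:.0f}%, mismatches: {scoreboard.mismatches}")
```

In a real hybrid testbench these roles would sit in the SV-UVM portion, with the transactions arriving over TLM connections rather than a Python loop.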

Assembling the hybrid testbench

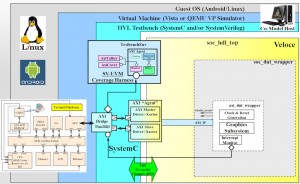

Figure 2 shows a hybrid testbench consisting of an ARM VP running an embedded OS (such as Linux or Android), which is running on a VM (such as Vista or QEMU) that interfaces to a mobile device graphics subsystem, which in turn is running at the RTL abstraction over a TLM <-> RTL AXI bridge.

Figure 2 Hybrid virtual platform testbench (Mentor Graphics)

To complete the hybrid testbench, an SV-UVM based coverage harness is thrown into the mix. UVM-Connect can be used to manage the TLM connections between the SystemC and SV portions of the testbench.

With this platform, we go some way beyond simply using ‘live’ traffic to stress test a DUT. It’s certainly important that we can now generate that traffic using VPs. However, good DUT testing involves a variety of stimulus techniques including, but not limited to, live traffic, constrained random traffic, directed testing, and captured test vector playback. Hybrid testbenches give us the power to combine some of these technologies, either all at once or separately, using a common, configurable test harness. We can then take best advantage of what they each have to offer and thereby set up high-quality test processes.
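One way to picture a common, configurable harness is as a single entry point that accepts any subset of stimulus sources. The Python sketch below is a hypothetical illustration of that idea, not a description of any product. The function names and the tuple-based transaction format are invented for the example, and a live-traffic source from a VP would simply be one more entry in the configuration.

```python
import random


def directed():
    """Hand-written directed test: write a known pattern, then read it back."""
    yield ("write", 0x0, 0xFFFFFFFF)
    yield ("read", 0x0, None)


def playback(vectors):
    """Replay previously captured test vectors unchanged."""
    yield from vectors


def constrained_random(rng, count):
    """Random word-aligned writes inside a hypothetical 256-byte window."""
    for _ in range(count):
        yield ("write", rng.randrange(0, 0x100, 4), rng.getrandbits(32))


def run_harness(sources, apply):
    """Feed every enabled source through the same apply() hook."""
    counts = {}
    for name, source in sources.items():
        counts[name] = 0
        for txn in source:
            apply(txn)
            counts[name] += 1
    return counts


applied = []  # stand-in for driving the DUT
captured = [("write", 0x40, 0x12345678)]
counts = run_harness(
    {
        "directed": directed(),
        "playback": playback(captured),
        "random": constrained_random(random.Random(3), 5),
    },
    applied.append,
)
print(counts)
```

Enabling or disabling a technique is then a matter of adding or removing one entry in the dictionary, while the path to the DUT stays the same.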

About the author

John Stickley is a Verification Technologist at Mentor Graphics.