Near-threshold and subthreshold logic

Engineers working on low-power VLSI design are looking at reducing circuit supply voltages significantly below current levels, to the threshold voltage or even below it. The aim is to take as much advantage as possible of the quadratic relationship between voltage and switching power consumption: power is proportional to CV²f, where C is the switched capacitance, V the supply voltage and f the switching frequency.
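The quadratic dependence on voltage is easy to check numerically. The sketch below is illustrative only: the function name, the capacitance and the clock frequency are hypothetical values chosen for the arithmetic, not figures from any real process.

```python
def switching_power(c_farads, v_volts, f_hertz, activity=1.0):
    """Dynamic switching power, P = activity * C * V^2 * f, in watts."""
    return activity * c_farads * v_volts ** 2 * f_hertz

# Hypothetical block: 1nF of switched capacitance clocked at 100MHz.
nominal = switching_power(1e-9, 1.0, 100e6)
reduced = switching_power(1e-9, 0.5, 100e6)

# Halving the supply at the same frequency cuts switching power about four-fold.
print(f"power ratio: {nominal / reduced:.2f}")
```

In practice the clock frequency also has to fall as the voltage drops, so the power saving is larger still, at the cost of performance.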

As voltage drops, the power required to switch the transistor falls rapidly. However, this reduction is accompanied by a dramatic drop in performance. If the voltage is reduced to below the nominal threshold voltage for a transistor, there is a further problem: a large increase in the share of energy lost through leakage. In effect, subthreshold operation uses the leakage current of the transistor as it begins to turn on to charge the capacitance of the downstream logic. Leakage accounts for a growing share of the energy per operation because the transistor takes so much longer to switch, and leakage current flows throughout that time.

A circuit that might be able to switch at hundreds of megahertz on a deep submicron process may be reduced to sub-megahertz operation at and below the threshold voltage. Some companies have successfully applied subthreshold circuits. Toumaz Technology was created as a spinout from research at Imperial College London, UK, to market ‘smart plasters’ for the healthcare market – an early foray into the Internet of Things (IoT) market now seen as an important target for processors based on subthreshold circuitry.

Subthreshold vs near-threshold logic

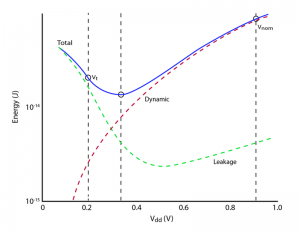

Concern over the tradeoff between performance and leakage, particularly on more advanced processes, has caused designers to focus more on near-threshold than subthreshold logic. By moving the supply voltage to slightly more than the threshold voltage, the effects of leakage can be dramatically curtailed. Research by David Blaauw’s group at the University of Michigan at Ann Arbor, which has worked closely on near-threshold technology with IP supplier ARM, shows an energy minimum close to 0.3V for a typical 32nm process with a threshold voltage of 0.2V, although this shows a significant contribution from leakage current. The crossover between dynamic power and static power consumption is only slightly below the energy minimum, assuming logic activity where 15 per cent of the gates within a block switch each clock cycle.

Figure 1 Energy minimum and contributors to total energy for a 32nm process (Source: University of Michigan)
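The shape of the curve in Figure 1 can be reproduced qualitatively with a toy model. The sketch below is not the Michigan group's model: it assumes an EKV-style current interpolation, a subthreshold slope factor of 1.5, a hypothetical logic depth of 30 gates per cycle, and ignores effects such as DIBL. Only the 0.2V threshold and the 15 per cent activity figure come from the text. Energies are normalized to the gate capacitance, and the sweep starts at 0.1V because the model is meaningless once on and off currents become comparable.

```python
import math

THERMAL_VOLTAGE = 0.026  # kT/q near room temperature, in volts

def drain_current(v_gate, v_th, n=1.5):
    """Normalized drain current: exponential below threshold,
    roughly quadratic above it (EKV-style interpolation)."""
    x = (v_gate - v_th) / (2 * n * THERMAL_VOLTAGE)
    return math.log1p(math.exp(x)) ** 2

def energy_per_cycle(vdd, v_th=0.2, activity=0.15, logic_depth=30):
    """Return (dynamic, leakage) energy per gate per cycle, in units of C."""
    i_on = drain_current(vdd, v_th)
    i_off = drain_current(0.0, v_th)
    dynamic = activity * vdd ** 2
    # Cycle time ~ logic_depth * C * Vdd / I_on, and every gate leaks
    # I_off * Vdd for the whole cycle.
    leakage = logic_depth * vdd ** 2 * i_off / i_on
    return dynamic, leakage

def minimum_energy_voltage(v_lo=0.10, v_hi=1.00, step=0.005):
    """Scan the supply range and return the voltage with the lowest total energy."""
    steps = int(round((v_hi - v_lo) / step))
    candidates = [v_lo + i * step for i in range(steps + 1)]
    return min(candidates, key=lambda v: sum(energy_per_cycle(v)))

v_min = minimum_energy_voltage()
dyn, leak = energy_per_cycle(v_min)
print(f"minimum near {v_min:.3f} V, leakage share {leak / (dyn + leak):.0%}")
```

With these assumed parameters the minimum falls slightly above the threshold voltage, and leakage dominates below it. The exact position depends heavily on the logic depth and activity chosen.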

An older process, such as the 130nm process used by the University of Michigan Subliminal processor designed in the mid-2000s, suffers less leakage. This processor pushed the energy minimum to around 0.05V lower than the nominal threshold voltage of 0.4V. At this point the operating frequency was a few hundred kilohertz. Pushing the voltage up into the near-threshold region at 0.5V increased energy per operation by around 70 per cent compared to subthreshold operation, but frequency increased to 20MHz. Energy consumption, compared to a normal logic circuit for that process operating above 1V, was cut almost seven-fold. Blaauw said in a special session on near-threshold computing at DAC 49 that the energy advantage for 32nm dropped to around four-fold.

In the case of the 130nm Subliminal processor, the normal circuit could run 10 times faster than the near-threshold design, making latency a major issue in any circuit that seeks to replace conventional electronics. However, low-performance, energy-harvesting IoT sensor nodes could realistically make use of the technology by moving to a higher duty cycle.

Researchers have proposed massive parallelism as a way to cut latency without increasing energy demand. Although instantaneous power consumption increases with parallelism, as does circuit cost, total energy should remain approximately constant assuming ideal parallelism. The operation will simply finish more quickly and spend longer in sleep between periods of activity. Unfortunately, few applications exhibit ideal parallelism. One possibility is to use temporary voltage boosts to increase single-thread performance for code that does not replicate well onto multiple cores, falling back closer to the threshold voltage if the workload can be distributed across many processors. If temporary boosting is used, it is possible to deploy more cores for parallelizing suitable code. According to the Michigan group, the number of cores for the Splash-2 benchmark set can almost double from 12 to 20 on a 32nm process.
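The energy argument for parallelism can be sketched in a few lines. This is a simplified model under stated assumptions: energy per operation is fixed, idle cores and interconnect consume nothing, and non-ideal parallelism is represented by a single Amdahl-style serial fraction. The function name and all numbers are hypothetical.

```python
def run_task(ops, cores, freq_hz, energy_per_op_j, serial_fraction=0.0):
    """Return (latency_s, energy_j, average_power_w) for a fixed workload.

    The serial fraction runs on one core; the rest divides evenly across
    all cores. Idle-core leakage and interconnect energy are ignored.
    """
    single_core_time = ops / freq_hz
    latency = single_core_time * (serial_fraction + (1 - serial_fraction) / cores)
    energy = ops * energy_per_op_j
    return latency, energy, energy / latency

# Hypothetical near-threshold cores: 1MHz, 1pJ per operation, one million operations.
for cores in (1, 10):
    latency, energy, power = run_task(1e6, cores, 1e6, 1e-12)
    print(f"{cores:2d} cores: {latency:.2f} s, {energy * 1e6:.2f} uJ, {power * 1e6:.2f} uW")

# With 20 per cent serial code, ten cores deliver well under a ten-fold speedup.
latency_serial, _, _ = run_task(1e6, 10, 1e6, 1e-12, serial_fraction=0.2)
print(f"10 cores, 20% serial: {latency_serial:.2f} s")
```

The ideal case shows constant energy with higher instantaneous power and shorter latency. The serial fraction is what motivates temporary voltage boosts for code that does not parallelize.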

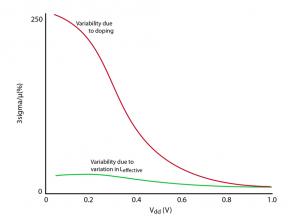

Figure 2 Sources of variability for a 0.3µm-wide planar CMOS device (Source: University of Michigan)

Process variation limits the lowest practical voltage achievable in the near-threshold region. The per-transistor variation in threshold voltage can amount to around 40mV and cause a two-fold variation in frequency close to the threshold voltage. Operating close to the threshold also makes the circuitry very sensitive to IR drop on the supply rails. One way to deal with this issue is to actively monitor circuit operation and adjust the supply voltage dynamically so that logic paths do not suddenly fail if the supply droops too far.
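A dynamic adjustment scheme of this kind amounts to a simple feedback loop. The sketch below assumes a hypothetical on-chip monitor that reports timing slack in picoseconds; the margins, step size and voltage limits are invented for illustration and do not describe any particular design.

```python
def adjust_supply(vdd, slack_ps, low_margin_ps=20.0, high_margin_ps=60.0,
                  step_v=0.01, v_min=0.35, v_max=0.60):
    """One control step: raise Vdd if timing slack is too thin,
    lower it if there is slack to spare, otherwise hold."""
    if slack_ps < low_margin_ps:
        vdd += step_v   # paths are close to failing: add margin
    elif slack_ps > high_margin_ps:
        vdd -= step_v   # excess margin: save energy
    return min(v_max, max(v_min, vdd))

# Hypothetical sequence of monitor readings, including a supply droop.
vdd = 0.45
for slack in (70.0, 65.0, 40.0, 15.0, 10.0, 30.0):
    vdd = adjust_supply(vdd, slack)
    print(f"slack {slack:5.1f} ps -> Vdd {vdd:.2f} V")
```

The dead band between the two margins keeps the regulator from oscillating on every cycle. A real controller would also need to account for regulator settling time.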

Process options

The adoption of finFETs in advanced processes should ameliorate some of the problems with variability. Intel’s work in this area has picked up since the company adopted the finFET architecture and senior circuits researcher Shekhar Borkar has been active in promoting the idea of near-threshold logic for low-power systems. The finFET’s chief advantage is its undoped channel. In 2005, Michigan researchers showed that although the effect of process variability increases significantly as voltage drops close to the threshold voltage, the main contributor to this variation in Ioff is random doping fluctuation (RDF), with the contribution from doping increasing dramatically as channel width reduces.

The finFET’s undoped channel should avoid this sudden increase in threshold variation, leaving variation in the effective length of the transistor channel, fin width and line-edge roughness as the main sources. IMEC has reported that SOI finFETs exhibit lower overall variation, which may encourage the development of specialized versions of the process for ultralow-power applications.

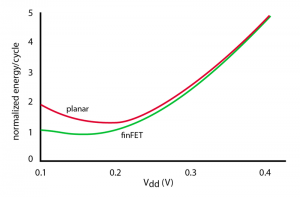

A further advantage of the finFET over planar for near-threshold devices lies in its improved Ion/Ioff ratio. Simulations by Felice Crupi of the University of Calabria with colleagues from IMEC and the universities of Bologna and Siena showed that a finFET-based switch could be more than twice as fast as equivalent planar devices at the threshold voltage. Thanks to leakage improvements, the minimum energy point also shifted to a lower supply voltage.

Figure 3 Lower subthreshold leakage should allow finFETs to operate at a lower voltage than planar CMOS (Source: Crupi et al)

Because it also uses an undoped channel, FD-SOI should offer comparable improvements in variability. Analysis by Steven Vitale and coworkers from MIT showed an improvement in Ion/Ioff similar to that of finFET-based designs. The team proposed manufacturing changes to the transistor to further improve energy usage. As it provides easier access for applying voltage from a back-gate, FD-SOI can also use dynamic body biasing to control leakage and boost switching performance in subthreshold or near-threshold circuits. Such biasing has been used in experimental devices, both planar and FD-SOI.

In 2002 a group from McMaster University in Hamilton, Ontario created a voltage-controlled oscillator that ran from a supply voltage of just 80mV on a 180nm process, a fraction of the process’ nominal supply voltage of 1.8V. The McMaster design made extensive use of both forward and back biasing to allow it to run from its lowest supply voltage up to 1.8V.

At ISSCC 2014, CEA-Leti described a DSP that employed extensive body-biasing to achieve a four-fold increase in clock frequency at 500mV. Although leakage increases due to this boosting, as with planar devices, using back bias when the logic is quiescent can cut leakage dramatically. To allow the voltage to be cut to 450mV, the team used ‘canary circuits’ as active monitors of behavior, working on the basis that canaries close to a particular logic path would, because of the way that local variations now tend to correlate, act as useful proxies. At the same ISSCC, Intel demonstrated a graphics processor based on its 22nm finFET process that used measurements taken during test to tune the operating voltage for a given clock speed.

Architectural changes

As well as promoting a shift to manycore processors, subthreshold and near-threshold circuitry can have less obvious effects on microarchitecture. Memories such as SRAMs suffer reliability issues if operated at too low a voltage, although they can be put into a low-voltage ‘drowsy’ state when not being accessed to save energy.

In practical systems, SRAM arrays will often run at higher voltages than the processor logic and so be able to support faster clock cycles than the logic they serve. This, according to Blaauw at DAC 49, could lead to an inversion in the cache hierarchy where multiple cores share access to the same cache and have their accesses interleaved. However, if designs move toward eight-transistor or ten-transistor SRAM arrays, the need to run the memory at a higher voltage may not emerge.

Borkar has warned that interconnect energy, which does not scale as well as logic energy in these systems, may limit the achievable parallelism and, with it, performance. System-level optimization will be needed to determine the true energy minimum for a given near-threshold system.

There will be further knock-on effects on logic design according to researchers:

- ARM, for example, has put forward for ultra-slow subthreshold logic the idea of using intra-clock retention schemes to cut leakage.

- Intel has used ‘vector flops’, averaging the effects of variation with the help of clock inverters shared between flops in an approach similar to multibit merging.

- Because of variability problems, writes to register-file flops could be problematic. Intel proposed the use of dual-ended transmission gates to increase reliability for these circuits.

- To avoid problems with voltage droop, to which wide multiplexers are prone, it can be better to use encoded muxes rather than one-hot structures.

- The number of transistors in a stack may also need to be limited in general.

On the plus side, subthreshold and near-threshold circuitry may exhibit better long-term reliability simply because it does not suffer the same degree of temperature and current stress that higher-voltage systems do. Conversely, I²R losses will increase proportionally, which could be problematic on longer power rails. The solution may be greater use of local voltage regulation, which will also help with the granularity of providing boost voltages to high-activity blocks.
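The concern over rail losses follows from basic circuit arithmetic. The sketch below assumes, purely for illustration, that a block must be supplied with the same power at a lower voltage, as might happen when many parallel cores replace one fast core. The function name, load power and rail resistance are hypothetical.

```python
def rail_loss(load_power_w, vdd, rail_resistance_ohm):
    """Resistive loss in the supply rail: I^2 * R with I = P / V."""
    current = load_power_w / vdd
    return current ** 2 * rail_resistance_ohm

# Hypothetical 10mW load fed through a 0.1 ohm rail.
for vdd in (1.0, 0.5):
    loss = rail_loss(0.010, vdd, 0.1)
    print(f"Vdd {vdd:.1f} V: rail loss {loss * 1e6:.1f} uW")
```

Under this assumption, halving the voltage doubles the current and roughly quadruples the loss in the rail, which is why regulating the voltage close to the load becomes attractive.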