Using portable stimulus for automotive random error analysis

Functional verification is hard enough. In the automotive context, it takes on additional dimensions. The ISO 26262 automotive functional safety standard specifies two required verification activities: the Systematic and the Random verification flows.

The Systematic verification aspects of ISO 26262 ensure that requirements for the hardware design are specified correctly, fully considered with safety in mind, and that the implementation of these requirements has been wholly and rigorously verified. This poses significant challenges, but still leverages standard verification practices.

The Random verification process ensures that the device continues to operate correctly even if internal components are disturbed by environmental or other effects. It requires new techniques and methodologies that are still evolving.

Safety mechanisms, designed to correct errors introduced during operation of the device mainly by environmental effects, are built into the design itself. Part of the Systematic verification process ensures that these safety mechanisms do not interfere with the correct operation of the device.

In addition, a significant analysis of the device is performed to ensure that it will self-correct in the event of a random error, to a tolerable level of risk. This involves injecting faults at points in the design during the Failure Modes, Effects, and Diagnostic Analysis (FMEDA) verification process, and measuring coverage as the proportion of dangerous faults that are detected. Once tests have been derived for the Systematic phase, they may also be leveraged for the Random analysis process. Being able to reuse the same tests proven at the block level for the full system can save a lot of time and increase reliability.
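
The classification step at the heart of this analysis can be sketched in a few lines of Python. This is a minimal, simplified sketch, not the ISO 26262 hardware metric itself (which weights faults by failure rate): each injected fault is assumed to be labeled safe, detected, or dangerous-undetected by comparing outputs against a fault-free run and checking whether a safety mechanism raised an alarm. The names `Outcome`, `classify`, and `diagnostic_coverage` are hypothetical.

```python
from collections import Counter
from enum import Enum
from typing import Iterable


class Outcome(Enum):
    SAFE = "safe"                                  # outputs unaffected, no alarm
    DETECTED = "detected"                          # a safety mechanism raised an alarm
    DANGEROUS_UNDETECTED = "dangerous_undetected"  # outputs wrong, no alarm


def classify(outputs_match: bool, alarm_raised: bool) -> Outcome:
    """Label one fault-injection run (simplified, hypothetical rules)."""
    if alarm_raised:
        return Outcome.DETECTED
    if outputs_match:
        return Outcome.SAFE
    return Outcome.DANGEROUS_UNDETECTED


def diagnostic_coverage(outcomes: Iterable[Outcome]) -> float:
    """Detected / (detected + dangerous-undetected).

    Simplification: every alarmed fault is counted as a detected dangerous fault.
    """
    counts = Counter(outcomes)
    relevant = counts[Outcome.DETECTED] + counts[Outcome.DANGEROUS_UNDETECTED]
    return counts[Outcome.DETECTED] / relevant if relevant else 1.0


# Each tuple is (outputs matched the fault-free run?, alarm raised?).
runs = [(True, False), (False, True), (False, False), (True, True)]
outcomes = [classify(match, alarm) for match, alarm in runs]
print(f"diagnostic coverage: {diagnostic_coverage(outcomes):.2%}")
```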

Random fault analysis uses tools such as fault simulation, sometimes combined with formal verification, to observe how inserting a fault at a specific location in the design affects device operation. Fault simulation is inherently slow: setting aside any optimizations applied to the fault list, the entire test suite must be simulated once for every potential fault in the design. Special simulators have been developed that can handle multiple faults in parallel or concurrently, but this is not possible with hardware emulation, which is often needed to accelerate the execution of large designs and bring run times down to something reasonable.
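
To see why the cost scales this way, consider a serial fault simulator for a toy netlist. This is an illustrative sketch: the circuit `d = (a AND b) OR e`, the net names, and the `simulate` helper are hypothetical, and only single stuck-at faults are modeled. Every fault gets its own pass over the full test suite, so the number of simulations is the number of faults times the number of tests.

```python
from itertools import product
from typing import Dict, Optional, Tuple

Fault = Tuple[str, int]  # (net name, stuck-at value)
NETS = ["a", "b", "e", "c", "d"]


def simulate(inputs: Dict[str, int], fault: Optional[Fault] = None) -> int:
    """Evaluate d = (a AND b) OR e, forcing one net to a stuck value if a fault is given."""
    def force(net: str, value: int) -> int:
        if fault is not None and fault[0] == net:
            return fault[1]
        return value

    a = force("a", inputs["a"])
    b = force("b", inputs["b"])
    e = force("e", inputs["e"])
    c = force("c", a & b)
    return force("d", c | e)


def serial_fault_simulation(tests):
    faults = [(net, value) for net in NETS for value in (0, 1)]
    golden = [simulate(t) for t in tests]       # one fault-free reference run
    detected = set()
    runs = 0
    for fault in faults:                        # one full test-suite pass per fault
        for test, expected in zip(tests, golden):
            runs += 1
            if simulate(test, fault) != expected:
                detected.add(fault)
    return detected, faults, runs


tests = [dict(a=a, b=b, e=e) for a, b, e in product((0, 1), repeat=3)]
detected, faults, runs = serial_fault_simulation(tests)
print(f"{len(detected)}/{len(faults)} faults detected using {runs} faulty simulations")
```

Even for this five-net circuit, the exhaustive suite costs 80 faulty simulations (10 faults times 8 tests). For a real design with vastly more faults and far longer tests, the same product of faults and tests dominates run time.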

Fault simulation technology has been around for a long time and was in general use in the early 1980s for determining the effectiveness of vector sets for manufacturing test. However, there is a significant difference between fault simulation for manufacturing test and fault simulation for verifying functionality in the presence of faults.

In manufacturing test, the objective is only to demonstrate that the effect of a fault can be observed. As soon as that happens, the simulation run for that fault can usually be terminated, because continuing provides no additional information. When verifying safety, by contrast, the test has to continue until no effect of the fault remains in the design, to ensure continued safe operation after a random error.

In a typical Universal Verification Methodology (UVM) environment, it is impossible to determine when the effect of a fault has cleared, so every test has to run to completion. With a Portable Stimulus Standard (PSS) flow that continuously checks intermediate results, methodologies can be developed that allow tests to terminate earlier, speeding up analysis.
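
The difference between the two stopping rules, and why intermediate checks matter, can be sketched as follows. This is a hypothetical model, not a PSS or UVM API: a test is reduced to a list of checkpoints, each holding the expected (golden) result, the result observed with the fault injected, and whether an alarm fired. It assumes each checkpoint compares all safety-relevant state, so a match after the alarm means the fault's effect has cleared.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Checkpoint:
    golden: int     # expected intermediate result (fault-free run)
    observed: int   # intermediate result with the fault injected
    alarm: bool     # safety mechanism signalled at this checkpoint


def steps_manufacturing(trace: List[Checkpoint]) -> int:
    """Stop as soon as the fault's effect is observable."""
    for i, cp in enumerate(trace):
        if cp.observed != cp.golden:
            return i + 1
    return len(trace)


def steps_safety(trace: List[Checkpoint]) -> int:
    """Stop once an alarm has fired and the state has reconverged with golden.

    Assumes checkpoints cover all safety-relevant state (hypothetical model).
    """
    alarmed = False
    for i, cp in enumerate(trace):
        alarmed = alarmed or cp.alarm
        if alarmed and cp.observed == cp.golden:
            return i + 1
    return len(trace)  # effect never shown to be cleared: run to completion


golden = [1, 2, 3, 4, 5, 6]
observed = [1, 2, 9, 9, 5, 6]
alarms = [False, False, False, True, False, False]
trace = [Checkpoint(g, o, a) for g, o, a in zip(golden, observed, alarms)]
print(steps_manufacturing(trace), steps_safety(trace), len(trace))
```

In this trace, the manufacturing-test rule could stop after three checkpoints and the safety rule after five, while a flow that only checks results at the end of the test would always need all six.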

The total number of faults, even with a simple stuck-at fault model, is huge, which makes it effectively impossible to fault-simulate every one. Many fault lists can be pruned: where it can be proven that if one specific fault is detected, a number of other faults will exhibit the same symptoms, only one fault from that group needs to be analyzed. However, the number of remaining faults is still too large to simulate exhaustively, and the problem is further compounded by the requirement to also assess transient and other fault models.
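
Fault collapsing can be illustrated on a single AND gate. This sketch groups faults by their simulated responses over an exhaustive set of input patterns, which for a circuit this small identifies functionally equivalent faults; production tools typically apply structural equivalence rules instead, and the `and_gate` and `collapse` helpers here are hypothetical.

```python
from collections import defaultdict
from itertools import product
from typing import Dict, List, Optional, Tuple

Fault = Tuple[str, int]  # (net name, stuck-at value)


def and_gate(a: int, b: int, fault: Optional[Fault] = None) -> int:
    """c = a AND b, with an optional single stuck-at fault on net a, b, or c."""
    def force(net: str, value: int) -> int:
        return fault[1] if fault is not None and fault[0] == net else value

    return force("c", force("a", a) & force("b", b))


def collapse(faults: List[Fault]) -> List[List[Fault]]:
    """Group faults whose output responses are identical over all input patterns."""
    patterns = list(product((0, 1), repeat=2))
    classes: Dict[Tuple[int, ...], List[Fault]] = defaultdict(list)
    for fault in faults:
        signature = tuple(and_gate(a, b, fault) for a, b in patterns)
        classes[signature].append(fault)
    return list(classes.values())


faults = [(net, value) for net in "abc" for value in (0, 1)]
for group in collapse(faults):
    print(group)
```

The stuck-at-0 faults on `a`, `b`, and the output `c` all force the output low, so six faults collapse into four classes, and only one representative per class needs to be simulated.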

In the 2000s, technology was developed to assess the quality of testbenches. It was argued that a statistical sample of faults could be used with high confidence, and techniques were developed to find high-quality faults, that is, faults that are hard to detect. If a testbench detected them, that was a good indicator that it would also detect many other, simpler faults. In this way, high confidence could still be achieved from a much smaller total number of simulated faults.
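
One common way to attach a confidence level to a sampled result is a binomial confidence interval on the detection rate. The sketch below uses the Wilson score interval; the choice of interval, the helper names, and the toy fault population are assumptions for illustration, not the specific technique those tools used.

```python
import math
import random
from typing import Callable, Sequence, Tuple


def wilson_interval(detected: int, sampled: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 is about 95%)."""
    if sampled <= 0:
        raise ValueError("need at least one sampled fault")
    p = detected / sampled
    denom = 1 + z * z / sampled
    centre = (p + z * z / (2 * sampled)) / denom
    half = z * math.sqrt(p * (1 - p) / sampled + z * z / (4 * sampled * sampled)) / denom
    return centre - half, centre + half


def estimate_coverage(faults: Sequence[int], is_detected: Callable[[int], bool],
                      n: int, seed: int = 0) -> Tuple[float, float, float]:
    """Simulate only a random sample of n faults and bound the true coverage."""
    sample = random.Random(seed).sample(list(faults), n)
    hits = sum(is_detected(f) for f in sample)
    low, high = wilson_interval(hits, n)
    return hits / n, low, high


# Toy population: 100,000 faults, of which every 100th escapes detection (99% coverage).
population = range(100_000)
point, low, high = estimate_coverage(population, lambda f: f % 100 != 0, n=2_000, seed=1)
print(f"estimated coverage {point:.3f}, 95% interval [{low:.3f}, {high:.3f}]")
```

A sample showing exactly 99% detection does not by itself demonstrate coverage of 99% or more: with 990 of 1,000 sampled faults detected, the lower end of the interval is still below 0.99, so a larger sample or a higher observed detection rate is needed to support that claim.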

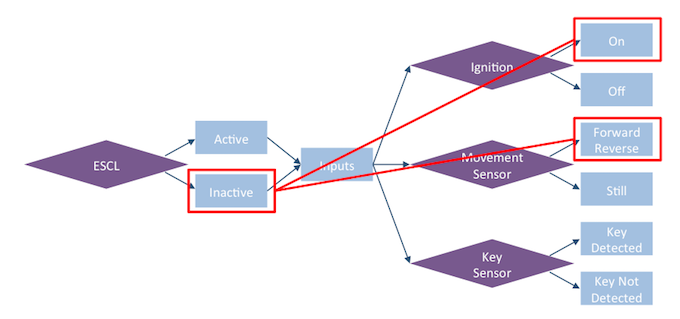

Figure 1: Path constraints/coverage depict which paths are used to generate test content (Breker Verification Systems)

The other side of this is ensuring that the stimulus used for these runs is as compact as possible. This means that every step in every test must be unique, or as close to unique as possible. The better this stimulus is at targeting faults, the more efficient the analysis will be.
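
Stimulus compaction is often approached as a covering problem: keep only the tests that detect faults no other kept test already detects. A minimal greedy sketch follows; the test names and fault sets are made up, and greedy selection is a heuristic that does not guarantee the smallest possible set.

```python
from typing import Dict, List, Set


def compact(tests: Dict[str, Set[str]]) -> List[str]:
    """Greedily pick tests until every fault detected by the full suite is covered."""
    remaining = set().union(*tests.values())
    chosen: List[str] = []
    while remaining:
        # Pick the test that detects the most not-yet-covered faults.
        best = max(tests, key=lambda name: len(tests[name] & remaining))
        chosen.append(best)
        remaining -= tests[best]
    return chosen


suite = {
    "t1": {"f1", "f2", "f3"},
    "t2": {"f2", "f3"},
    "t3": {"f4"},
    "t4": {"f1", "f4"},
}
print(compact(suite))
```

Here `t2` and `t4` add nothing once `t1` and `t3` are kept, so the suite shrinks from four tests to two with the same fault detection.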

PSS has a clear advantage over UVM in this respect. Tests synthesized from a PSS model are based on design intent and can be controlled to focus observations on specific critical functionality. As a result, unlike typical UVM test sets, every stimulus activity causes something important to happen in the design.

Hierarchical composition is another important aspect of a PSS methodology. To achieve an ASIL D safety rating, at least 99% fault coverage must be achieved across the final design. In practice, a design team will perform various analyses at different verification levels, starting at the block level and progressing through subsystem and full-system test, to achieve these results. For example, a team might perform a verification step at the block level to make sure a single fault correctly triggers an alarm signal, leaving rigorous fault analysis that fully exercises the safety mechanisms to the subsystem level. It might then perform a Monte Carlo statistical analysis at the full-system level.

Alternatively, a large number of faults can be run at the block level with the goal of showing that all of them raise an alarm. Then, at the system level, it becomes unnecessary to simulate each block-level fault: because they have all been shown to raise the alarm, they can be collapsed down to any one fault that triggers it.
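
The bookkeeping behind this collapse might look like the sketch below. It assumes, as the argument above does, that at the system level the only thing that matters about a block-level fault is whether it raised the alarm; the function name, block names, and fault labels are hypothetical.

```python
from typing import Dict, List


def system_fault_list(block_results: Dict[str, Dict[str, bool]]) -> List[str]:
    """Build a system-level fault list from block-level alarm results.

    block_results maps block name -> {fault name: did the fault raise the alarm?}.
    """
    system_faults: List[str] = []
    for results in block_results.values():
        alarmed = [f for f, raised in results.items() if raised]
        silent = [f for f, raised in results.items() if not raised]
        if alarmed:
            # Faults shown to raise the alarm are collapsed to one representative.
            system_faults.append(alarmed[0])
        # Faults that never raised the alarm still need individual analysis.
        system_faults.extend(silent)
    return system_faults


blocks = {
    "dma": {"dma.f1": True, "dma.f2": True, "dma.f3": True},
    "crc": {"crc.f1": True, "crc.f2": False},
}
print(system_fault_list(blocks))
```

All of the `dma` block's faults collapse to one representative, while `crc.f2`, which never raised the alarm, still has to be analyzed on its own at the system level.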

The ability to reuse the same stimulus, checkers, and coverage models at all levels saves time in crafting tests, so that you can better target near-exhaustive fault analysis and compare coverage across abstraction levels.

It should be noted that Portable Stimulus also improves the ISO 26262 Systematic flow. While modeling requirements for verification is normally difficult, PSS allows a relatively clean mapping from requirements to test scenarios, so coverage is easy to assess, especially if Breker’s Path Coverage overlay is applied. Simplifying completion of the classic V-Model in this way saves time and increases accuracy.

Tools will continue to be enhanced to reduce the total number of faults that must be simulated, improve the quality of the stimulus, and increase the performance of fault simulation technology. None of this will be possible using traditional brute-force techniques. Significant progress has been made in all of these areas. Some of it builds on the technology of the past; other pieces are new.

Adnan Hamid is co-founder and CEO of Breker Verification Systems, and the inventor of its core technology. He has more than 20 years of experience in functional verification automation. He received his Bachelor of Science degree in Electrical Engineering and Computer Science from Princeton University, and an MBA from the University of Texas at Austin.
