Streaming Scan Network technology delivers ‘no compromise’ DFT for AI designs

A new technique is especially efficient for AI chips with modular, tiled design strategies leveraging multiple instantiations of the same cores.

The market for AI chips is booming and moving fast. There are more than 100 startups and established semiconductor companies racing to capture portions of the emerging segment. For semiconductor companies designing AI chips, getting to market fast is a primary project requirement. There are no second chances if you miss your window in this environment.

Getting these large, complex AI chips taped out on time requires skillful engineering, plus design flows and tools that support common AI design features. A tight engineering plan includes design-for-test (DFT) that can exploit AI chip regularity, support tiled designs, and offer a streamlined implementation flow that eliminates iterations.

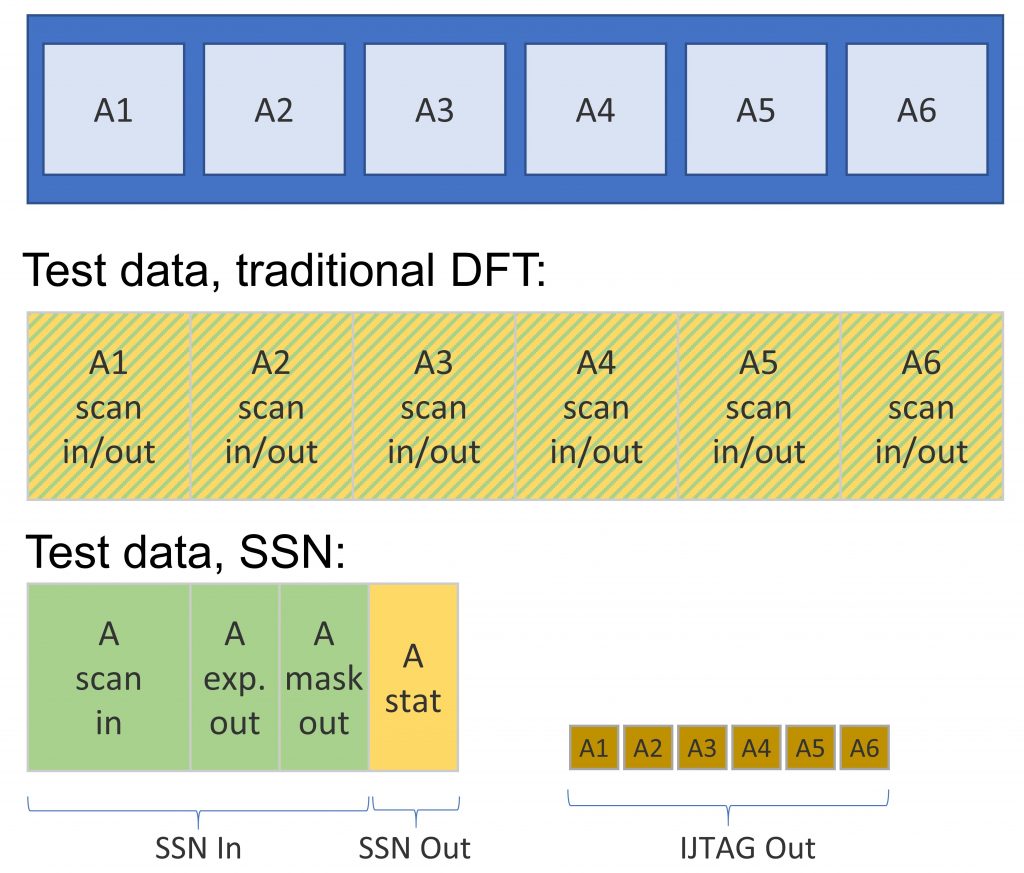

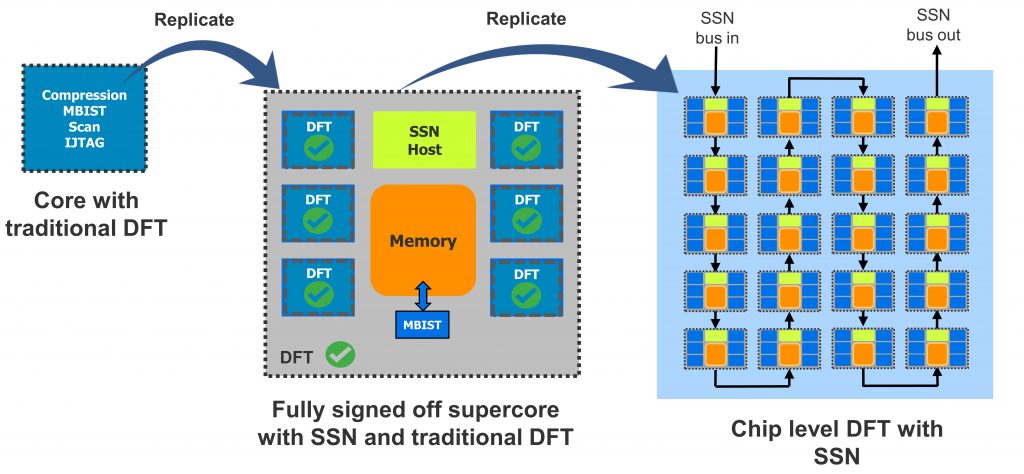

Many AI designs are modular, use tiling, and often have many instances of the same cores (Figure 1). The modular design style is ideal for applying hierarchical, bottom-up DFT flows. A true bottom-up flow is now possible with a new bus-based scan data distribution architecture developed by the Tessent DFT team at Siemens EDA.

Figure 1. Streaming Scan Network is an ideal DFT solution for AI designs that are modular, use tiling, and have many instances of the same cores (Siemens EDA).

What makes AI designs different?

Although ICs in the AI segment serve different purposes and have different implementations, many have a few characteristics in common. These include:

- A large design with billions of gates;

- A large number of replicated processing cores;

- The use of leading-edge process nodes and implementation styles such as tiled designs with abutment; and

- A design schedule under intense time-to-market pressure.

The enormous size and complexity of AI chips can lead to unreasonable DFT planning and implementation effort. The problem is rooted in the use of traditional approaches to moving scan test data from chip-level pins to core-level scan channels.

Traditionally, core-level scan channels are connected to chip-level pins through the use of a pin-multiplexing (mux) network. This works fine for smaller designs, but becomes problematic as the number of cores grows, the levels of hierarchy increase, and designs become more complex. It presents barriers to efficiently testing cores in parallel to save on time and cost. Challenges include:

- Limited available IOs for scan test;

- Limited channels at the core level;

- Test configurations that have been fixed during the design process; and

- Potential routing congestion due to additional scan channels.

In a hierarchical approach, DFT engineers typically allocate a fixed number of scan channels to each core early in the flow, usually the same number for each core. This is the easiest approach, but it can waste bandwidth because the cores grouped together for testing can have different scan chain lengths and pattern counts. You can reduce wasted bandwidth and save test time by reallocating scan resources once you know the required data per core, but doing so involves reconfiguring compression, rerouting the scan channels, and regenerating patterns. Is the additional effort worth the savings in test time? As things stand, each DFT team must grapple with these tradeoffs.
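To make the tradeoff concrete, the Python sketch below uses a deliberately simplified model that is an assumption, not anything taken from a real DFT tool: each core's total scan data (in bits) is fixed, that data is spread evenly across the core's scan channels, and a group of cores tested together finishes only when its slowest core finishes. The function names, the data volumes, and the greedy reallocation rule (give each spare channel to whichever core is currently the bottleneck) are all hypothetical.

```python
import math
from typing import List

def group_shift_cycles(data_bits: List[int], channels: List[int]) -> int:
    """Shift cycles for cores tested concurrently: each core needs
    ceil(data / channels) cycles, and the group waits for the slowest core."""
    return max(math.ceil(d / c) for d, c in zip(data_bits, channels))

def reallocate_channels(data_bits: List[int], total_channels: int) -> List[int]:
    """Hypothetical greedy reallocation: start every core at one channel,
    then hand each remaining channel to the current bottleneck core."""
    alloc = [1] * len(data_bits)
    for _ in range(total_channels - len(data_bits)):
        bottleneck = max(range(len(data_bits)),
                         key=lambda i: math.ceil(data_bits[i] / alloc[i]))
        alloc[bottleneck] += 1
    return alloc

# Made-up per-core scan data volumes (bits) for three cores in one test group
data = [1_200_000, 600_000, 300_000]
fixed = group_shift_cycles(data, [4, 4, 4])   # same channel count for every core
tuned_alloc = reallocate_channels(data, 12)   # same 12 channels, redistributed
tuned = group_shift_cycles(data, tuned_alloc)
print(fixed, tuned_alloc, tuned)
```

In this model, an equal 4/4/4 split of 12 channels takes 300,000 shift cycles, while a 7/3/2 split of the same 12 channels takes 200,000. In a pin-mux flow, though, acting on that result means reconfiguring compression, rerouting channels, and regenerating patterns.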

But what if you didn’t have to make these kinds of tradeoffs? The next revolution in DFT tools eliminates the challenges of the pin-mux approach by decoupling the core-level DFT requirements from the chip-level test delivery resources.

The Streaming Scan Network approach

Our new approach to distributing scan test data across an SoC — called Streaming Scan Network (SSN) — reduces both DFT effort and test time, while offering full support for tiled designs and optimization for identical cores. The SSN approach is based on the principle of decoupling core-level test requirements from chip-level test resources by using a high-speed synchronous bus to deliver packetized scan test data to the cores.

The number of scan channels per core is independent of the width of the SSN bus, the number of scan channels at chip level, and the number of cores in the design. Delivering test data in this way simplifies planning and implementation and allows core grouping to be defined later in the flow, during pattern retargeting rather than during the initial design. The SSN architecture is flexible — the bus width is determined by the number of scan pins available — and eases routing congestion and timing closure because it eliminates top-level test mode muxing. That last feature also makes it ideal for abutted tile-based designs.

Part of the SSN architecture is the use of core-level host nodes that generate the DFT signals locally. The host nodes ensure that the right data is picked up from the SSN bus and sent to the scan inputs of the core, and that the output data is placed back onto the bus. Each node knows what to do and when to do it based on a simple configuration step leveraging IJTAG (IEEE 1687) infrastructure. With the SSN approach, the determination as to which groups of cores will be tested together and which will be tested sequentially is configurable, not hardwired. The configuration is done as a setup step once per pattern set; once it is done, all the data on the SSN bus is payload.
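The per-pattern-set configuration step can be pictured as a small planning function. The Python sketch below is a hypothetical model, not the IJTAG procedure or any Tessent data format: given each core's scan-channel count (in the cores' fixed order along the bus) and the group of cores chosen for a pattern set, it computes the packet size and the slice of each packet that every host node should pick up. It assumes each active core receives exactly as many bits per packet as it has scan channels; the core names and channel counts are invented.

```python
from typing import Dict, List, Optional, Tuple

Slice = Optional[Tuple[int, int]]  # (offset within packet, width), or None if idle

def configure_hosts(channels: Dict[str, int],
                    group: List[str]) -> Tuple[int, Dict[str, Slice]]:
    """Hypothetical setup step run once per pattern set.

    channels: scan channels per core, in the cores' fixed order along the bus.
    group:    cores to be tested concurrently by this pattern set.
    Returns the packet size and each host's slice of every packet."""
    packet_size = 0
    slices: Dict[str, Slice] = {}
    for core, width in channels.items():
        if core in group:
            slices[core] = (packet_size, width)  # starts where the previous slice ended
            packet_size += width
        else:
            slices[core] = None                  # host stays idle for this pattern set
    return packet_size, slices

# Four hypothetical cores; same hardware, two different groupings
cores = {"A": 5, "B": 4, "C": 6, "D": 3}
print(configure_hosts(cores, ["A", "B"]))
print(configure_hosts(cores, ["B", "C", "D"]))
```

The two calls produce different packet layouts (9 bits, then 13 bits) for the same set of cores, which is the sense in which grouping is a configuration choice rather than a hardwired one.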

What is packetized scan test data delivery?

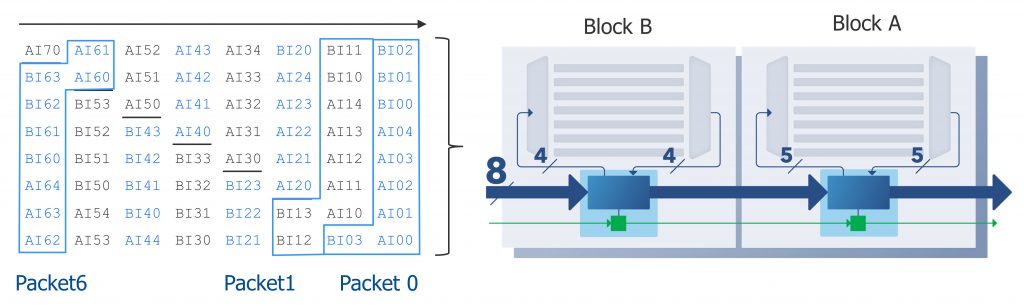

As an example, take a design in which two cores are to be tested concurrently using SSN, as shown in Figure 2. Block A has 5 scan channels and Block B has 4 scan channels. A packet is the total amount of data needed to perform one shift cycle across both cores, so the packet size in this example is 9 bits. However, there are 16 pins available for scan test (8 inputs, 8 outputs), so the SSN bus is 8 bits wide.

Figure 2. Testing two blocks at the same time. With Streaming Scan Network, the packet size is 9 bits, delivered on an 8-bit bus (Siemens EDA).

The table on the left side of Figure 2 shows how the data is streamed through the synchronous SSN bus to the cores. It will take two SSN bus cycles to deliver all the data required to perform one shift cycle in both cores. Note that the bit location of the data corresponding to each core changes (rotates) for each packet. The host nodes know where the data corresponding to that core resides on the bus and when to generate local DFT signals, including pulsing the core shift clock.
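The rotation follows from packing 9-bit packets onto an 8-bit bus. The Python sketch below assumes the packets are streamed back to back with no idle bits, with Block A taking the first 5 bits of each packet and Block B the next 4; the exact bit ordering in the figure's table may differ. The function name is hypothetical.

```python
from typing import List, Tuple

def bus_positions(packet: int, offset: int, width: int,
                  packet_size: int, bus_width: int) -> List[Tuple[int, int]]:
    """(bus_cycle, bus_bit) for each bit a core consumes from one packet,
    assuming packets are streamed back to back with no gaps."""
    positions = []
    for j in range(offset, offset + width):
        g = packet * packet_size + j          # global bit index in the stream
        positions.append((g // bus_width, g % bus_width))
    return positions

PACKET, BUS = 9, 8                            # 5 + 4 scan channels, 8-bit SSN bus
for p in range(3):
    print(p, "A:", bus_positions(p, 0, 5, PACKET, BUS),
          "B:", bus_positions(p, 5, 4, PACKET, BUS))

# Bus lane of Block A's first bit in each packet: it moves one lane per packet
print([bus_positions(p, 0, 5, PACKET, BUS)[0][1] for p in range(8)])
```

Under this assumption, every packet straddles two bus cycles, Block A's first bit moves one lane per packet, and eight packets (72 bits) fill exactly nine bus cycles before the layout repeats.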

How Streaming Scan Network reduces test time and test data volume

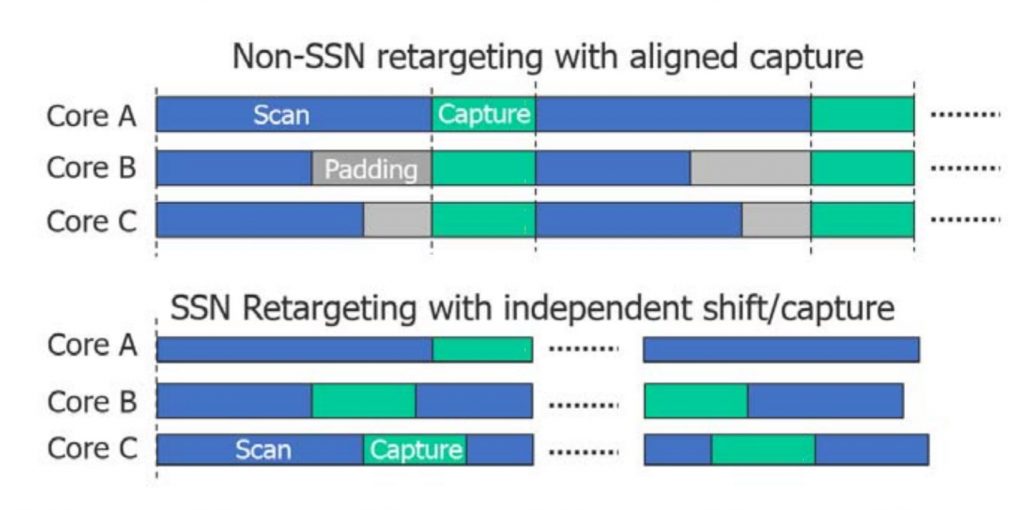

SSN contains several capabilities to reduce test time and test data volume. One is independent shift and capture. In many retargeting schemes, the capture cycles of all the affected cores must be aligned. If multiple cores are shifting concurrently (Figure 3) and they have different scan chain lengths, the cores with shorter chains need to be padded so that all cores perform capture at the same time. With SSN, the host nodes are programmed so that each core can shift independently, but capture occurs concurrently once all the cores have completed scan load/unload.

Figure 3. When capture cycles must be aligned, some cores need padding, which is a waste of data and test time (Siemens EDA).
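A back-of-the-envelope calculation shows how much data aligned capture can waste. The Python sketch below assumes that with aligned capture every core is shifted as many times as the longest chain in the group, so shorter cores receive filler bits on each of their channels, whereas with independent shift each core is shifted only as many times as its own chain length. The chain lengths are invented for illustration.

```python
from typing import List, Tuple

Core = Tuple[int, int]  # (scan_channels, chain_length)

def useful_bits_per_pattern(cores: List[Core]) -> int:
    """Bits each core actually needs per scan load (independent shift)."""
    return sum(ch * length for ch, length in cores)

def padding_bits_per_pattern(cores: List[Core]) -> int:
    """Filler bits added when all cores must capture on the same cycle."""
    longest = max(length for _, length in cores)
    return sum(ch * (longest - length) for ch, length in cores)

# Hypothetical: Block A (5 channels, chains of 100), Block B (4 channels, chains of 60)
cores = [(5, 100), (4, 60)]
useful = useful_bits_per_pattern(cores)
padding = padding_bits_per_pattern(cores)
print(useful, padding, f"{padding / (useful + padding):.1%} of the aligned data is padding")
```

With these numbers, 160 of the 900 bits delivered per pattern (about 18%) would be padding under aligned capture.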

SSN also performs bandwidth tuning. Rather than providing as many bits as there are core-level scan channels per packet, SSN can allocate fewer bits to a core that requires less data overall. For a core that has fewer patterns or shorter scan chains, less data is allocated per packet, and this better distributes the data across the cores and ultimately reduces test time.
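Bandwidth tuning can be framed as a small search problem. The Python sketch below is an illustrative model, not the algorithm SSN tools actually use: each core gets between 1 bit and its scan-channel count per packet (a core given fewer bits than it has channels simply shifts less often), a core finishes after ceil(data / bits) packets, and total bus time is the packet count times the packet size divided by the bus width. The exhaustive search only scales to a handful of cores, and the data volumes are made up.

```python
import itertools
import math
from typing import List, Sequence, Tuple

def bus_cycles(data_bits: Sequence[int], alloc: Sequence[int], bus_width: int) -> int:
    """Bus cycles to stream every core's data, given bits per packet per core."""
    packets = max(math.ceil(d / a) for d, a in zip(data_bits, alloc))
    return math.ceil(packets * sum(alloc) / bus_width)

def tune_bandwidth(data_bits: List[int], channels: List[int],
                   bus_width: int) -> Tuple[int, Tuple[int, ...]]:
    """Try every allocation from 1 bit up to each core's channel count
    and keep the one with the fewest bus cycles."""
    best = None
    for alloc in itertools.product(*(range(1, c + 1) for c in channels)):
        cycles = bus_cycles(data_bits, alloc, bus_width)
        if best is None or cycles < best[0]:
            best = (cycles, alloc)
    return best

# Hypothetical: a 5-channel core needing 1,000,000 bits and a 4-channel core needing 200,000
data, channels = [1_000_000, 200_000], [5, 4]
print(bus_cycles(data, (5, 4), 8))       # one bit per scan channel in every packet
print(tune_bandwidth(data, channels, 8))
```

Here, giving the 4-channel core one bit per packet (so it shifts every fourth packet) cuts bus time from 225,000 to 150,000 cycles, because both cores now finish on the same packet and the packet shrinks from 9 to 6 bits.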

SSN is a scalable method for testing any number of identical cores with a constant amount of test data and test time, making it an excellent fit for many AI designs. For identical cores, the compare circuitry is included in each host node. A fixed amount of data is then provided to any number of identical cores: scan input, expect data, and mask data. That allows SSN to compare the results inside each core. The accumulated status across all identical cores is then shifted out on the SSN bus. A pass/fail bit per core is also captured in the host and scanned out through IJTAG.

When identical cores are treated as heterogeneous cores, the scan input and output data has to be replicated for each core. With SSN, a fixed set of data is used to test any number of identical cores (Figure 4).
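The compare-in-host idea can be sketched in a few lines of Python. This is a hypothetical behavioral model, not the actual host-node logic: each host compares its own core's scan-out bits against the broadcast expect data wherever the mask marks a bit as "care," keeps a sticky per-core fail flag (standing in for the pass/fail bit read out through IJTAG), and contributes to one combined status for the bus. The data-volume function assumes identical cores need scan-in, expect, and mask data once, while heterogeneous treatment needs scan-in and scan-out per core; it ignores the small status output.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class HostNode:
    """Hypothetical compare logic inside the host node of one identical core."""
    failed: bool = False  # sticky per-core pass/fail bit, read out via IJTAG

    def compare(self, scan_out: List[int], expect: List[int], mask: List[int]) -> bool:
        # mask bit 1 = care (compare this bit); 0 = don't-care (ignore it)
        mismatch = any(m == 1 and o != e for o, e, m in zip(scan_out, expect, mask))
        self.failed = self.failed or mismatch
        return mismatch

def shift_identical_cores(hosts: List[HostNode], core_outputs: List[List[int]],
                          expect: List[int], mask: List[int]) -> bool:
    """Broadcast one expect/mask slice to every host and return the combined
    status for the bus (True if any core mismatched on this shift)."""
    results = [host.compare(out, expect, mask) for host, out in zip(hosts, core_outputs)]
    return any(results)

def bits_per_shift(n_cores: int, channels: int, identical: bool) -> int:
    """Simplified data-volume model (the status output is ignored)."""
    if identical:
        return 3 * channels          # scan-in + expect + mask, sent once for all cores
    return 2 * channels * n_cores    # scan-in and scan-out replicated for each core

hosts = [HostNode() for _ in range(3)]
status = shift_identical_cores(
    hosts,
    core_outputs=[[1, 0, 0, 1], [1, 1, 1, 1], [1, 0, 1, 1]],
    expect=[1, 0, 1, 1],
    mask=[1, 1, 0, 1],
)
print(status, [h.failed for h in hosts])
print(bits_per_shift(8, 4, identical=True), bits_per_shift(8, 4, identical=False))
```

In this model, with 4 channels per core the identical-core scheme needs 12 bits per shift whether there are 2 cores or 200, while treating 8 copies as heterogeneous needs 64. For a single core it costs more (12 versus 8), so the savings start at two or more copies.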

Summary

SSN addresses key DFT challenges seen in AI chips. By reducing the DFT planning and implementation effort, SSN takes DFT out of the critical path while still optimizing test time and cost, especially for large designs and in the presence of many identical cores. SSN was developed in collaboration with several leading semiconductor companies. We presented a paper with Intel at the International Test Conference 2020 that describes the technology and shows some key results of Intel’s validation of SSN. Compared to a pin-muxed solution, Intel saw a 43% reduction in both test data volume and test cycles. Steps in the design and retargeting flow were 10x to 20x faster with SSN.

About the author

Geir Eide is the director, product management for the Tessent Design-for-Test software products at Siemens Digital Industries Software. A 20-year veteran of the IC DFT and test industry, Geir has worked with leading semiconductor companies and given technical presentations and seminars on DFT, test, and yield learning throughout the world. Geir earned his MS in Electrical and Computer Engineering from the University of California at Santa Barbara, and his BS in microelectronics from the University of South-Eastern Norway.