Rethinking SoC verification

The complexity of a modern SoC design, which may incorporate multiple interface protocols, tens of power and clock domains, hundreds of IP blocks, millions of lines of code and more than a billion gates, has created a vast verification challenge.

Verification engineers need tools with greater performance and capacity to address these large designs, which also need to be verified in more ways: for their power-management behavior, analog performance, interactions with software, and so on.

Designers currently use combinations of point tools to address these challenges, leading to duplicate steps, proliferating databases, and inconsistent debug environments. Making these tools work together costs time and resources, and undermines productivity. Integrating the results of these tools into standard sign-off flows can also be a challenge.

Synopsys has introduced Verification Compiler to tackle these issues by integrating the software capabilities, technology, methodologies and verification IP (VIP) needed to verify current and future advanced SoCs.

Better static and formal performance

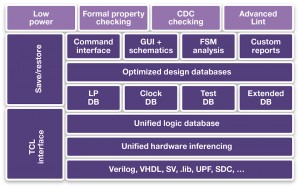

The first step in tackling the verification of very complex SoCs is to increase the performance of static and formal verification tools, to enable earlier bug detection and prevention. It is also important to increase their capacity, so that they can be applied at full-chip level rather than only at block level. Verification Compiler has three to five times the performance and capacity of the previous generation of tools.

The various SoC verification engines used in the tool share a common front-end compiler, coverage and debug interfaces. This unification reduces set-up overhead and gives users greater visibility into all the techniques being applied during verification. This consistent reporting and visualization helps in complex verification cases that combine formal or static techniques with dynamic simulation.

Low-power SoC verification

Verifying that the power-management strategies designed into a chip have been implemented correctly usually starts with a specification of the intended power design. The next step is to ensure that all the tools in the flow can model that intention accurately.

However, there are problems with doing this. When the definition of power intent was standardized, it did not take into account that some nets connecting to power-management circuitry would have analog, rather than pure digital, sources. Think of a smartphone, whose touchscreen sensor produces an analog output that must be able to start the device's power-on sequence. Handling such analog inputs to power-management systems correctly has required advances in mixed-signal simulation to enable accurate modeling.
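To make the analog-to-digital boundary concrete, here is a minimal Python sketch of how a sampled sensor voltage might be turned into a digital wake request using a comparator with hysteresis. The function name, thresholds and sample values are hypothetical illustrations, not part of any Synopsys tool or power-intent standard; a real mixed-signal simulation would model the analog circuit itself rather than a list of pre-sampled values.

```python
def analog_to_wake(samples, v_high=0.9, v_low=0.6):
    """Convert sampled sensor voltages (volts) into a digital wake signal.

    Hypothetical comparator with hysteresis: the wake request asserts when the
    voltage rises above v_high and deasserts only when it falls below v_low.
    """
    state = 0
    wake = []
    for v in samples:
        if state == 0 and v > v_high:
            state = 1  # rising crossing: request power-on
        elif state == 1 and v < v_low:
            state = 0  # falling crossing: release the request
        wake.append(state)
    return wake

print(analog_to_wake([0.1, 0.95, 0.8, 0.7, 0.5, 0.92]))
```

With hysteresis, a voltage that dips only slightly after crossing the upper threshold does not toggle the wake request. This is the kind of behavior a power-aware simulation has to capture when an analog source drives power-management logic.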

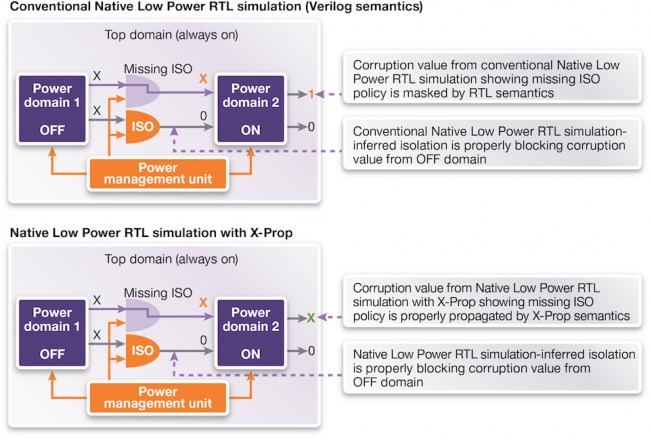

Similarly, when a smartphone turns on, a complex sequence of events occurs in hundreds of IP blocks so that it wakes up properly. Reset simulations are often run at gate level to check the way that actual hardware resolves X states. This approach is increasingly impractical for large SoCs, yet working at RTL can mask bugs by being overly optimistic about the way X values are propagated. The issue has become critical as power management has become more aggressive, meaning that devices spend more time going in and out of low-power states that leave signals in uncertain conditions.
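The RTL optimism described above can be illustrated with a small Python model of a 2:1 multiplexer whose select is unknown. In Verilog, an `if` whose condition evaluates to X is treated as false, so RTL simulation silently takes the else branch. The second function applies one common X-propagation convention (the output is known only if both data inputs agree). It is a sketch, not a description of Verification Compiler's actual X-propagation semantics, and the function names are hypothetical.

```python
# Hypothetical 4-state-style model: "0" and "1" are known values, "X" is unknown.
X = "X"

def rtl_if_mux(sel, a, b):
    # Mirrors Verilog 'if (sel) y = a; else y = b;': an X condition is
    # treated as false, so an unknown select silently selects b.
    return a if sel == "1" else b

def xprop_mux(sel, a, b):
    # One common X-propagation convention: with an unknown select, the output
    # is known only when both data inputs agree; otherwise it is X.
    if sel == "1":
        return a
    if sel == "0":
        return b
    return a if a == b else X

# Select left unknown, e.g. after power-up (hypothetical scenario).
for a, b in [("0", "0"), ("1", "0")]:
    print(f"a={a} b={b} rtl={rtl_if_mux(X, a, b)} xprop={xprop_mux(X, a, b)}")
```

With a = 1 and b = 0, the RTL-style model reports a clean 0 while the X-aware model reports X: the kind of discrepancy that can hide a missing isolation cell.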

In Verification Compiler, X-propagation simulation can be run simultaneously in a native low-power and mixed-signal co-simulation environment. The various verification engines involved can share coverage data and be debugged simultaneously, allowing for more robust verification. A common understanding of power intent, the automatic generation of low-power assertions, and advanced modeling techniques (such as X-propagation analysis) help provide a better debug view of a design's behavior under power management (see Figure 1).
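As an illustration of what automatically generating low-power assertions from power intent can mean, the Python sketch below turns a simplified isolation description into SystemVerilog assertion text. The `IsolationStrategy` record, the `_iso` suffix for isolated signals and the assertion labels are all hypothetical conventions invented for this example; the record is loosely inspired by isolation strategies in power-intent formats but is not UPF syntax, and it does not show how Verification Compiler generates its assertions.

```python
from dataclasses import dataclass, field

@dataclass
class IsolationStrategy:
    # Hypothetical, simplified power-intent record (not UPF syntax).
    domain: str                                   # power domain whose outputs are isolated
    enable: str                                   # isolation enable signal
    clamp: int                                    # value isolated outputs must hold: 0 or 1
    outputs: list = field(default_factory=list)   # outputs crossing the boundary
    clock: str = "clk"                            # sampling clock for the generated checks

def isolation_assertions(s):
    """Emit one SystemVerilog assertion per isolated output: while isolation
    is enabled, the isolated copy of the output must equal the clamp value."""
    if s.clamp not in (0, 1):
        raise ValueError("clamp must be 0 or 1")
    return [
        f"a_iso_{s.domain}_{out}: assert property "
        f"(@(posedge {s.clock}) {s.enable} |-> ({out}_iso == 1'b{s.clamp}));"
        for out in s.outputs
    ]

pd_cpu = IsolationStrategy("pd_cpu", "iso_en_cpu", 0, ["irq", "ack"])
for line in isolation_assertions(pd_cpu):
    print(line)
```

Deriving the checks from the same description that drives implementation is the point: if the power intent changes, the assertions are regenerated rather than edited by hand.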

Figure 1 The impact of missing isolation cells on X propagation can be masked by RTL semantics (Source: Synopsys)

Clock-domain crossing validation

Low-power designs often call for multi-voltage, adaptive-frequency architectures, which make it more difficult to ensure that signals crossing between clock and power domains remain stable. For example, the isolation enable for every element at the boundary of a power domain should either be generated in the source domain or be synchronized to it. This requirement means that the static tools traditionally used for CDC must also share data with low-power static-checking tools to achieve accurate validation. These static tools also need far greater capacity to make these checks at the SoC level.
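The isolation-enable rule above lends itself to a simple static check. The Python sketch below assumes the netlist has already been reduced to two hypothetical lookup tables: the domain whose logic drives each enable net, and the domain (if any) that each net is synchronized into. It flags isolation cells whose enable satisfies neither condition. Real CDC and low-power static tools derive this information from the design and its power intent, and apply many more rules; the cell, net and domain names here are invented.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class IsoCell:
    name: str
    source_domain: str  # domain whose outputs this cell isolates
    enable: str         # net driving the isolation enable

def check_isolation_enables(cells, driver_domain, synced_into):
    """Return names of isolation cells whose enable is neither driven from the
    source domain nor synchronized into it.

    driver_domain: enable net -> domain of the logic driving it (hypothetical)
    synced_into:   enable net -> domain it is synchronized into, if any
    """
    violations = []
    for cell in cells:
        native = driver_domain.get(cell.enable) == cell.source_domain
        synced = synced_into.get(cell.enable) == cell.source_domain
        if not (native or synced):
            violations.append(cell.name)
    return violations

cells = [
    IsoCell("iso_irq", "pd_cpu", "iso_en_a"),  # enable generated in pd_cpu
    IsoCell("iso_ack", "pd_cpu", "iso_en_b"),  # from always-on, but synchronized
    IsoCell("iso_dat", "pd_gpu", "iso_en_c"),  # from always-on, unsynchronized
]
driver_domain = {"iso_en_a": "pd_cpu", "iso_en_b": "pd_aon", "iso_en_c": "pd_aon"}
synced_into = {"iso_en_b": "pd_cpu"}
print(check_isolation_enables(cells, driver_domain, synced_into))
```

Only `iso_dat` is reported, because its enable comes from another domain without passing through a synchronizer. The check needs both clock-crossing (synchronizer) and power-domain information, which is why the two kinds of static tool must share data.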

Figure 2 Verification Compiler's integrated approach to static and formal verification (Source: Synopsys)

Verification Compiler has a high-capacity, integrated front-end compiler with common coverage that enables the full chip to be verified, and next-generation static and formal applications to share data (see Figure 2). All of these activities use the same configuration as simulation and other verification activities.

A design is compiled once, and then targeted for static and formal checks to identify bugs related to CDC, low power, connectivity, and other scenarios. The same set-up can also be used to run dynamic simulations on the scenarios requiring them, while leveraging common coverage and debug across all the verification engines. This consolidation means verification engineers can run these checks without having to set up a new flow, catching scenarios that may have gone untested in a flow made up of multiple point tools.

Integrated debug

Verification Compiler also features many advanced debug capabilities. The ability to interactively debug a simulation as it runs helps identify issues more quickly than debugging after the fact. It is also possible to find the root cause of constraint conflicts and perform ‘what-if’ analyses of various values for a random variable within the same debug interface.
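The idea behind locating the root cause of a constraint conflict can be shown with a deliberately naive Python sketch: a single 4-bit random variable, a handful of named constraints, and a brute-force search for the smallest subset of constraints that has no solution. A what-if helper then lists which constraints a chosen value would violate. The constraint names are hypothetical, and production constraint solvers use far more efficient techniques than enumerating every value and every subset.

```python
from itertools import combinations

DOMAIN = range(16)  # a hypothetical 4-bit random variable x

# Hypothetical named constraints on x, as a testbench might declare them.
constraints = {
    "c_even":  lambda x: x % 2 == 0,
    "c_small": lambda x: x < 4,
    "c_large": lambda x: x > 10,
    "c_nz":    lambda x: x != 0,
}

def solutions(names):
    """All values of x that satisfy every constraint in names."""
    return [x for x in DOMAIN if all(constraints[n](x) for n in names)]

def minimal_conflict(names):
    """Smallest subset of constraints with no solution, or None if satisfiable."""
    for k in range(1, len(names) + 1):
        for subset in combinations(names, k):
            if not solutions(subset):
                return subset
    return None

def what_if(x, names):
    """Constraints that a candidate value of x would violate."""
    return [n for n in names if not constraints[n](x)]

names = list(constraints)
print(minimal_conflict(names))  # the conflicting subset of constraints
print(what_if(12, names))       # constraints violated if x were 12
```

Here the search reports that `c_small` and `c_large` cannot both hold, and that choosing 12 would violate only `c_small`. Narrowing a failed randomization down to a small conflicting set, rather than the full list of constraints, is what makes this kind of debug useful.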

The same integrated approach also makes it easier to trace unknown values through a power-up sequence using X-propagation analysis in a power-aware simulation, without having to run a simulation to completion.

The integrated debugger should also make it simpler to find bugs in testbenches, by using transaction-level visualization of dynamic objects in the debugger's waveform window while stepping through the simulation cycle-by-cycle.

RTL can be verified alongside object code running on an embedded processor in the SoC, enabling users to verify the processor and the hardware simultaneously.

The fact that Verification Compiler has a common front end, offers native traversal of the design hierarchy, and can compress dump data should also make it easier to debug large designs efficiently.

Integration with static technology and awareness of VIP in the debugger allows a user to abstract the visualization of their chip to a higher level, for more efficient analysis of issues at the protocol level.

Verification Compiler’s constraint engine is also tuned to work well with its VIP library. The engine includes integrated debug solutions for VIP to enable protocol- or transaction-level analysis with the rest of the testbench.

The VIP library can be precompiled and then linked to the rest of the simulation, saving compilation time and disk space at the SoC level.

Conclusion

Verification Compiler has been developed to make it easier to find bugs in the design of very large, complex SoCs. It is built upon best-in-class verification engines which have been tuned to handle cross-functional tasks such as CDC and low-power verification, all of which run within a common debug and visualization environment. Verification Compiler enables concurrent verification strategies that make it easier to understand complex design behaviors, especially those that cross between different verification domains.

Combined with improved capacity and performance, and including the latest protocol and memory VIP, Verification Compiler makes the verification of today’s complex SoCs easier and quicker.

Author

Rebecca Lipon is the senior product marketing manager for the functional verification product line at Synopsys. Prior to joining the marketing team, Rebecca was an Applications Engineer at Synopsys working on UVM/VMM adoption, VCS, VIP, static and formal verification deployments. Rebecca has more than 10 years of experience in the semiconductor industry and has held verification engineering roles at SGI and ATI. Rebecca holds a BS from MIT in Electrical Engineering and Computer Science.

Company info

Synopsys Corporate Headquarters 700 East Middlefield Road Mountain View, CA 94043 (650) 584-5000 (800) 541-7737 www.synopsys.com
