It’s time to embrace objective-driven verification

Consider the Wall Street controversy over High Frequency Trading (HFT). Set aside its ethical (and legal) aspects. Concentrate on the technology. HFT exploits customized IT systems that allow certain banks to place ‘buy’ or ‘sell’ stock orders just before rivals, sometimes just milliseconds before. That tiny advantage can make enough difference to the share price paid that HFT users are said to profit on more than 90% of trades.

Now look back to the early days of electronic trading. Competitive advantage then came down to how quickly you adopted an off-the-shelf, one-size-fits-all e-trading package.

Banking has long been at computing’s cutting edge. What HFT illustrates today is a progressive shift in the strategy it uses to develop systems from tool-based (‘We have bought an e-trading system’) to objective-driven (‘Make our e-trades the fastest and most profitable’).

As I said, I want to set aside the fair/unfair debate around HFT, and take it simply as a high-profile illustration of how Wall Street’s approach to IT is evolving. Banks are continuously developing other systems based on objective-driven thinking. My point is that we can draw important lessons for SoC design from this overall shift because we are moving – and need to move – in the same direction toward objective-driven verification. Our version is, thankfully, less controversial, but we should still follow the trend more aggressively.

‘Objective-driven verification’ defined

What do we mean by ‘objective-driven’? At a high level, the mindset of the system architect has changed: The architect has gone from identifying useful tools and deploying them in isolation to starting with a pre-defined goal that is achieved through a customized synthesis of available tools and methods.

Going deeper, one can identify two triggers:

- A recognition that systemic tasks have become so complex it is very unlikely that you can fully realize them using a single raw tool, or even a few. Multiple tools and techniques must be combined and used in a fuller context.

- A deeper understanding of the inner workings of complex systems that allows architects to isolate the processes and cause-effect relationships relevant to their objectives.

These triggers describe IT trends in logic verification as well as in banking.

The ‘system’ in verification is the SoC. The raw tools are, first, simulation, but also static-timing analysis and formal analysis. This coarse-grain raw-tool model has had a healthy run of around 25 years, but SoC complexity has now caught up with and overtaken it.

Objective-driven verification begins with that deeper understanding of the SoC architecture and the processes involved in putting it together. The objectives themselves emerge from today’s greater knowledge of failure modes and hard-to-achieve verification goals.

The model moves away from treating logic verification as monolithic. It focuses instead on specific goals. For each, we now know that custom solutions are more effective. Objective-driven verification rewards us with a much deeper, much cheaper process.

Raw tools play a role but have become interchangeable and commoditized. The productivity of an SoC design group is no longer determined by the use of a particular simulator. Rather, productivity and the viability of the design depend on how well the group adopts objective-driven solutions.

The value today therefore resides in a layer that sits on top of the commoditized raw tools. That layer embeds deep knowledge of the different failure modes in a structured workflow. This is where your big verification dollars need to be spent.

This shift disrupts a logic verification business model long based on selling raw tools. Nevertheless, the assertion that future growth will come from objective-driven verification is already well illustrated in two specific instances.

Objective-driven verification is already here

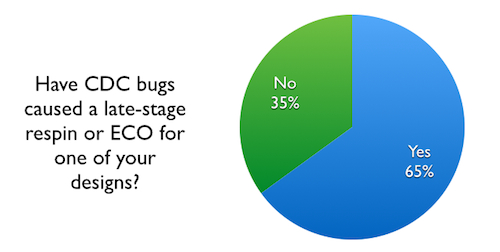

Take verification for failures caused by asynchronous clock-domain crossing (CDC). Until recently, it entailed manual design review and the use of specialized synchronizer library cells in simulation. You bought a fast simulator and then pounded stimuli onto the special cell-equipped model. This worked for crossings up to, say, the dozens. But as they grew in number and complexity, the approach broke down. Asynchronous-crossing failures increased alarmingly.

In response, SoC designers, aided by vendors like Real Intent, have carved out asynchronous CDC as a distinct objective-driven verification task. They have adopted dedicated solutions and workflows that address the problem to sign-off. Objective: “There will be no failures caused by asynchronous crossings.”

Real Intent’s asynchronous CDC solution stack illustrates an objective-driven verification process. It starts with a first-principles understanding of the failure modes. Around that is built a synergy of structural analysis methods, formal analysis methods and simulation hooks. A workflow then guides the user through an iterative chip-environment setup and the progressive refinement of verification results until full-chip sign-off is achieved.
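To make the structural-analysis ingredient concrete, here is a minimal sketch in Python. It is not Real Intent’s algorithm or any vendor’s method. The `Flop` record, the `direct` flag and the toy netlist are all hypothetical, and the only pattern it recognizes is the textbook two-flop synchronizer. It shows the kind of check such a layer starts from: find every flop-to-flop path that changes clock domain, then flag those that do not land on a recognized synchronizer.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Flop:
    """Hypothetical, heavily simplified view of one register in a netlist."""
    name: str
    clock: str            # clock domain of this flop
    fanin: tuple = ()     # names of the flops that drive its D input
    direct: bool = True   # True if D is wired straight from a flop, no logic

def find_crossings(flops):
    """Return (source, destination) pairs whose clock domains differ."""
    by_name = {f.name: f for f in flops}
    crossings = []
    for dst in flops:
        for src_name in dst.fanin:
            if by_name[src_name].clock != dst.clock:
                crossings.append((src_name, dst.name))
    return crossings

def is_two_flop_synchronized(dst_name, flops):
    """True if the destination is the first stage of a two-flop synchronizer:
    driven directly by exactly one flop, and feeding exactly one flop in the
    same domain, again with no logic in between."""
    by_name = {f.name: f for f in flops}
    dst = by_name[dst_name]
    if not dst.direct or len(dst.fanin) != 1:
        return False
    consumers = [f for f in flops if dst_name in f.fanin]
    if len(consumers) != 1:
        return False
    second = consumers[0]
    return second.clock == dst.clock and second.direct and len(second.fanin) == 1

def unsynchronized_crossings(flops):
    """Crossings that do not land on a recognized two-flop synchronizer."""
    return [c for c in find_crossings(flops)
            if not is_two_flop_synchronized(c[1], flops)]

# Toy netlist: one properly synchronized crossing and one that is not.
netlist = [
    Flop("a_reg", "clkA"),
    Flop("sync1", "clkB", fanin=("a_reg",)),
    Flop("sync2", "clkB", fanin=("sync1",)),
    Flop("b_reg", "clkB", fanin=("sync2",)),
    Flop("bad_reg", "clkB", fanin=("a_reg", "b_reg"), direct=False),
]

violations = unsynchronized_crossings(netlist)
print(violations)
```

On this toy netlist the only violation reported is the path from `a_reg` to `bad_reg`. A real solution has to go much further (formal checks on data stability, reconvergence, glitch analysis, simulation hooks), which is exactly why the surrounding workflow matters.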

This workflow component shows that objective-driven verification goes beyond simply a rediscovery of the ‘point tool’. Context, relationships with other ‘objectives’ and their solutions, relevance to the overall goal, and even the UI play subtle but important roles that they did not play in the point-tool era.

Every SoC taped out today goes through an explicit asynchronous CDC sign-off based on a dedicated static solution of this type. However, I would note that the workflows associated with different solutions are materially different and lead to measurably different levels of productivity and quality of final results.

Objective-driven verification is also becoming the norm in X propagation. Logic simulation has long been an imperfect tool here: It can still incorrectly turn a deterministic value into an X, or an X into a deterministic value. The second effect is worse because it can mask bugs, giving false confidence in the chip’s correctness.
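The two simulation effects described above can be reproduced on a single 2-to-1 multiplexer. The sketch below is a toy three-valued model in Python, not the semantics of any particular simulator, and all function names are hypothetical. `mux_gates` evaluates the mux as AND/OR/NOT gates, `mux_if_else` mimics an RTL-style `if`/`else` that treats an unknown condition as false, and `mux_exact` enumerates both possible values of an unknown select to give the answer real hardware could produce.

```python
X = "X"  # unknown value in this toy three-valued logic

def and3(a, b):
    if a == 0 or b == 0:
        return 0          # a controlling 0 wins even if the other input is X
    if a == 1 and b == 1:
        return 1
    return X

def or3(a, b):
    if a == 1 or b == 1:
        return 1          # a controlling 1 wins even if the other input is X
    if a == 0 and b == 0:
        return 0
    return X

def not3(a):
    return X if a == X else 1 - a

def mux_gates(sel, a, b):
    """Gate-level mux: (sel AND a) OR (NOT sel AND b)."""
    return or3(and3(sel, a), and3(not3(sel), b))

def mux_if_else(sel, a, b):
    """RTL-style if/else: anything other than a known 1 takes the else branch."""
    return a if sel == 1 else b

def mux_exact(sel, a, b):
    """Reference answer: try both values of an unknown select.
    Only the select is enumerated; a and b are passed through as given."""
    candidates = (0, 1) if sel == X else (sel,)
    results = {a if s == 1 else b for s in candidates}
    return results.pop() if len(results) == 1 else X

# Deterministic value wrongly turned into X: both data inputs are 1,
# so the output is 1 whatever the select is, yet the gate model reports X.
pessimistic = mux_gates(X, 1, 1)   # X
actual_same = mux_exact(X, 1, 1)   # 1

# X wrongly turned into a deterministic value: data inputs differ,
# so the output is truly unknown, yet the if/else model reports 0.
optimistic = mux_if_else(X, 1, 0)  # 0
actual_diff = mux_exact(X, 1, 0)   # X

print(pessimistic, actual_same, optimistic, actual_diff)
```

The second pair is the dangerous one: the model reports a clean 0 where the hardware could produce either value, so a test that checks the output can pass while the bug stays hidden.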

These insidious failures make it imperative that SoC design teams deploy objective-driven verification to catch them early. The same template applies as for asynchronous CDC: Synergistic structural and formal analysis with simulation hooks are joined to an intuitive and iterative workflow. This delivers progressively better results.

The list of high-value goals to which we can apply objective-driven verification is getting longer. The broader concept is spreading quickly out from Wall Street’s deep-pocketed IT pioneers. And importantly for SoC design, objective-driven verification techniques for asynchronous CDC and X-effects already demonstrate a value you can – well – take to the bank.