Machine learning overcomes library challenges at the latest process nodes

From 16nm, new complexities hinder .lib file characterization and verification, but machine learning now offers an efficient way to manage them.

Advanced process nodes below 16nm/12nm and other specialized process technologies offer significant advantages in power, performance and area (PPA) for leading-edge SoC/IC designs.

Depending on product direction and competitive requirements, some design teams may adopt the latest process node for each new design, whereas others may use the same process node as a platform for launching several designs. Whichever approach is taken, moving to a newer process node almost always presents library and design teams with a new set of considerations.

When moving to 16nm/12nm and below, library and digital teams face challenges such as increased library cell count; broader process, voltage and temperature (PVT) corner coverage; and more complex .lib data, such as variation modeling. These new considerations have drastically increased the SPICE simulation requirements of library characterization and made it harder to achieve sufficient verification coverage across all .lib data. These issues raise the risk of tapeout delays and silicon failure.

Fortunately, machine learning methods can accelerate library characterization and provide comprehensive verification. These methods help design teams build workflows for characterizing, validating and integrating advanced node .libs into their latest digital methodologies.

What has changed for .libs at newer nodes

Increased cell count and complexity

As one of the building blocks of digital IC/SoC designs, library models based on the Liberty standard have steadily become larger and more complex at each new process node. Libraries today consist of an increasing number of specialized cells that aim to provide better power, performance, or footprints, as well as more specialized functionality.

From a power/performance optimization standpoint, two examples are the more common use of body-bias cells, which can dynamically adjust threshold voltage, and the provision of more versions of the same cell with different threshold voltages, from which digital implementation tools pick the optimal threshold-voltage cell set for the design’s needs. Both approaches increase the complexity and size of .libs.

More process, voltage, and temperature corners

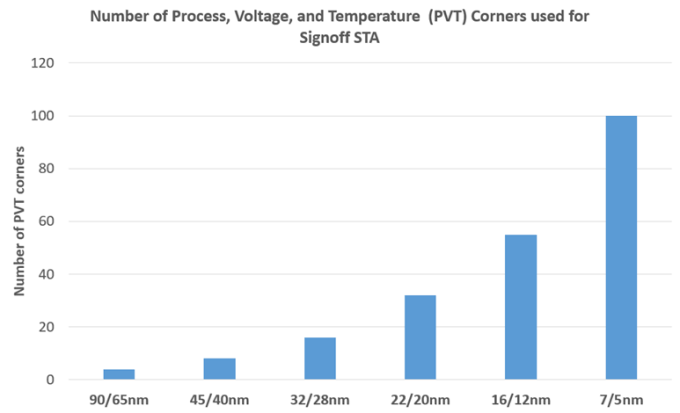

The increased impact of PVT on power and performance has increased the number of PVT corners required for performing static timing analysis (STA) on advanced node designs by an order of magnitude.

For example, while designs at 40nm and larger process nodes normally require fewer than a dozen PVT corners, 7nm/5nm designs may require 100 or more for signoff STA (Figure 1). In the implementation stage, multi-mode, multi-corner (MMMC) optimization has also become a necessity to reduce timing and power differences between implementation-stage and signoff STA.

Figure 1. Approximate number of PVTs used for signoff STA per process technology node (Source: Siemens EDA)
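The order-of-magnitude jump follows from the way corner counts multiply across axes. The sketch below enumerates the full cross-product of a few process, voltage and temperature values; all of these values are hypothetical and not taken from any real signoff plan, and in practice teams select a subset of combinations. Adding a single value on any one axis multiplies the total.

```python
from itertools import product

# Hypothetical corner axes, for illustration only; real signoff corner lists
# come from the foundry and the design's operating conditions.
process = ["ss", "tt", "ff", "sf", "fs"]
voltage = [0.675, 0.75, 0.825, 0.9]    # supply voltages in volts
temperature = [-40, 0, 25, 85, 125]    # junction temperatures in degrees C

# Every combination of the three axes is a candidate PVT corner.
corners = [
    f"{p}_{v:.3f}v_{t}c"
    for p, v, t in product(process, voltage, temperature)
]

print(len(corners), "corners, e.g.", corners[0])
```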

Variation modeling in Liberty Variation Format

The Liberty Variation Format (LVF) is an extension of the .lib specification and has become the industry-standard format for including on-chip variation (OCV) modeling information in .libs. Besides being widely used to encapsulate OCV information, it has been ratified by the Liberty Technical Advisory Board, an IEEE-ISTO program with members from the semiconductor and EDA industries.

By capturing variability for each cell and timing arc, LVF provides the accuracy and granularity required to facilitate design closure against aggressive PPA targets while also meeting stricter timing and power requirements that account for OCV.

In addition, LVF moments enable library characterization teams to model the mean shift, standard deviation and skewness of timing and power characteristics, adding another layer of statistical modeling accuracy.
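As a rough illustration of what these three moments describe, the sketch below computes them from Monte Carlo samples of a single delay table entry. It assumes mean shift is measured relative to the nominal (no-variation) value and uses the standardized third central moment for skewness; the function name and the synthetic samples are hypothetical, and the exact Liberty attribute definitions should be taken from the LVF specification rather than from this code.

```python
import numpy as np

def lvf_moments(samples, nominal):
    """Summarize Monte Carlo delay samples for one table entry (hypothetical helper).

    Assumes mean shift is relative to the nominal (no-variation) value and
    skewness is the standardized third central moment (population estimate).
    """
    samples = np.asarray(samples, dtype=float)
    mean = samples.mean()
    std = samples.std(ddof=1)              # sample standard deviation
    centered = samples - mean
    skew = np.mean(centered**3) / np.mean(centered**2) ** 1.5
    return {"mean_shift": mean - nominal, "std_dev": std, "skewness": skew}

# Synthetic, right-skewed delay samples (ps) whose median sits at a 20 ps nominal.
rng = np.random.default_rng(0)
samples = 20.0 + rng.lognormal(mean=0.0, sigma=0.5, size=5000) - 1.0
print(lvf_moments(samples, nominal=20.0))
```

A symmetric distribution would report near-zero skewness and mean shift, which is roughly what a single sigma value implicitly assumes; the extra moments matter when the delay distribution is lopsided, as it is here.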

For advanced process nodes, LVF .libs are mandatory for signoff STA because OCV has an increased impact on design timing and power. At 7nm, for example, OCV may impact timing and power numbers by 50%-100% of the original value.

LVF adds complexity to .libs and increases .lib characterization runtime by about 5x-10x. Library characterization and verification teams must take this into account when working with advanced process nodes.

Library characterization, verification and integration challenges

The additional considerations for advanced node .libs outlined above present new challenges for library characterization and verification teams, as well as digital design and signoff teams.

An exponential increase in simulations

For library characterization, increases in cell count and PVT count, together with the inclusion of LVF data, result in an exponential increase in the number of simulations required to characterize a given .lib set.

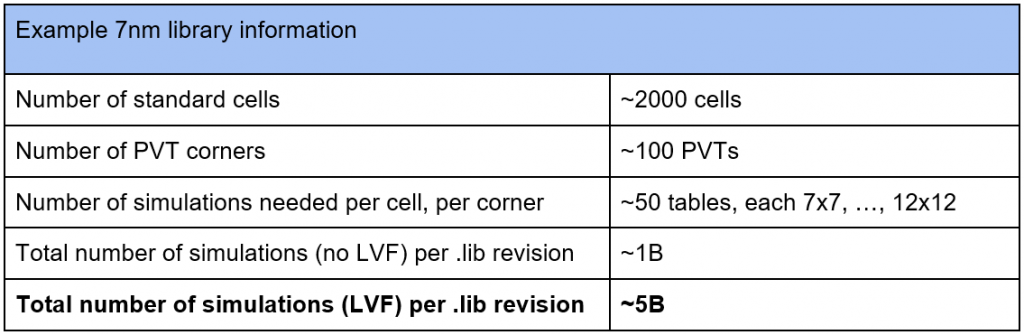

Table 1. Approximate number of SPICE simulations needed to characterize an example 7nm .lib (Source: Siemens EDA)

Table 1 shows the approximate number of simulations required to characterize an example 7nm foundation IP .lib across all PVTs. This workload translates into days, and potentially a couple of weeks, of SPICE simulation runtime, even on a reasonably large compute cluster of around 1,000 CPUs.

Moreover, these numbers apply to characterizing just a single revision of that .lib. Each new IP library typically undergoes 5-10 revisions before reaching production-ready status. While digital design teams can often start with early versions of the .lib, getting to .lib production-readiness remains a key schedule bottleneck when working at a new process node.
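The multiplicative growth described above can be sketched as a back-of-envelope estimate. Every input below (cell count, arcs per cell, table size, per-simulation runtime) is a hypothetical placeholder rather than a Table 1 figure; the LVF overhead is modeled crudely as a runtime multiplier, and perfect parallel scaling across CPUs is assumed.

```python
def characterization_estimate(cells, arcs_per_cell, points_per_table,
                              pvt_corners, lvf_factor, sec_per_sim, cpus):
    """Back-of-envelope SPICE workload for one .lib revision (hypothetical model).

    Returns (simulation-equivalents, wall-clock days), assuming every factor
    multiplies the workload and the job scales perfectly across `cpus`.
    """
    sims = cells * arcs_per_cell * points_per_table * pvt_corners * lvf_factor
    days = sims * sec_per_sim / cpus / 86_400
    return sims, days

# Hypothetical inputs, not taken from Table 1.
sims, days = characterization_estimate(
    cells=1_500, arcs_per_cell=20, points_per_table=8 * 8,
    pvt_corners=100, lvf_factor=5, sec_per_sim=1.0, cpus=1_000)

print(f"{sims:,} simulation-equivalents, ~{days:.1f} days per revision")
for revisions in (5, 10):   # revision range cited in the text
    print(f"{revisions} revisions: ~{days * revisions:.0f} days")
```

With these placeholder inputs the estimate comes to about 11 days per revision, and the 5-10 revisions mentioned above multiply that again. Doubling any single factor doubles the total, which is why growth on several axes at once compounds so quickly.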

Meanwhile, for purely SPICE-based characterization methodologies, the growing size and complexity of .libs means that simulation runtime requirements are expected to keep outpacing gains in compute performance and resource availability.

Hard-to-find .lib inaccuracies and errors increase tapeout risks

All static timing analysis engines rely on accurate .libs with sufficient coverage of the spread of PVT corners targeted by the design. However, increases in .lib size and complexity at advanced process nodes have made it more difficult to fully verify .libs.

LVF data in particular is hard to verify because current SPICE-based methodologies offer no straightforward way to reproduce a golden reference value for comparison.

STA for advanced process node designs has smaller error margins than STA at more mature nodes, mainly due to more competitive design specs and less historical manufacturing and post-silicon data. Also, because designs or top-level blocks now contain hundreds of millions of cell instances, any inaccuracy in the .lib for a given cell has a more significant impact on the design’s overall timing.

Ultimately, the true cost of errors and inaccuracies in .libs is design-closure schedule delays and silicon failures requiring re-spins. At advanced process nodes, a comprehensive verification strategy for .libs is therefore both more important and more difficult to achieve.

Integrate machine learning to address advanced node .lib challenges

The explosion in .lib data quantity and complexity at advanced process nodes may raise many challenges for traditional characterization and verification, but the defining characteristics of advanced node .lib datasets also make them exactly the kind of problem that machine learning (ML) methods are well equipped to handle. More PVTs, larger tables and, in general, more variations of library components, with parameters that can be correlated to results, all help ML methods produce more accurate results.

How machine learning accelerates .lib characterization

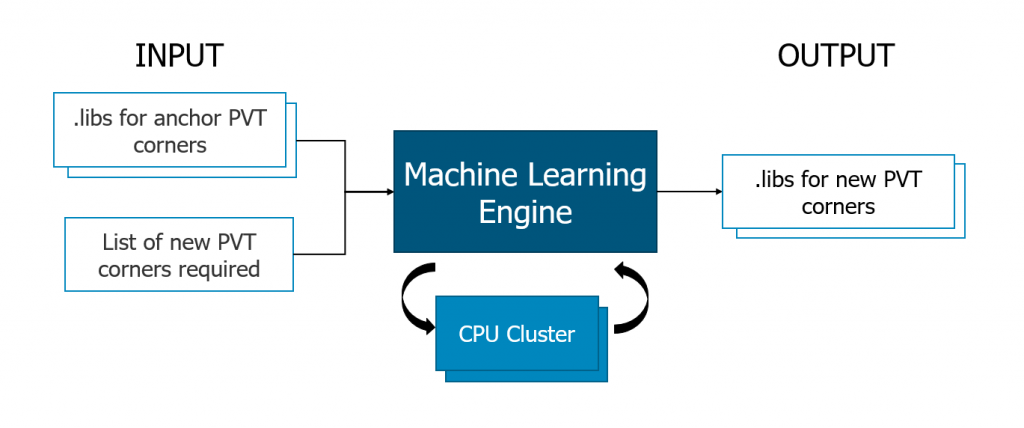

The main concept behind ML-accelerated .lib characterization is the use of SPICE-characterized .lib data points as anchor data to generate other .lib data points using machine learning techniques (Figure 2).

For advanced process node libraries, this means significant runtime savings because of the large number of PVT corners to be characterized. After an initial minimum set of .libs is SPICE-characterized, the remaining PVT corners can be generated using ML at a fraction of the runtime of SPICE characterization.
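A minimal sketch of the anchor idea, assuming a single table entry whose value varies smoothly with supply voltage and temperature: fit a model to the SPICE-characterized anchor corners, then evaluate it at a corner that was never simulated. Ordinary least-squares regression on a hand-picked, hypothetical feature set stands in here for the far more capable ML models a production tool would use; it is not the Solido method. The synthetic "SPICE" data deliberately lies inside the model's feature space so that the example can check itself.

```python
import numpy as np

def features(v, t):
    """Hypothetical feature map: low-order terms in supply voltage v (V) and temperature t (C)."""
    v = np.atleast_1d(np.asarray(v, dtype=float))
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return np.column_stack([np.ones_like(v), 1 / v, 1 / v**2, t, t / v])

def fit_from_anchors(v, t, values):
    """Least-squares fit of one .lib table entry across the anchor corners."""
    coeffs, *_ = np.linalg.lstsq(features(v, t), np.asarray(values, dtype=float), rcond=None)
    return coeffs

def generate_corner(coeffs, v, t):
    """Predict the same table entry at a corner that was never simulated."""
    return features(v, t) @ coeffs

def synthetic_spice_delay(v, t):
    # Stand-in for SPICE results (ps); not real data.
    return 4.0 + 3.0 / v + 6.0 / v**2 + 0.01 * t + 0.015 * t / v

# Anchor corners: 3 voltages x 3 temperatures = 9 hypothetical SPICE runs.
vv, tt = np.meshgrid([0.675, 0.75, 0.9], [-40.0, 25.0, 125.0])
v_anchor, t_anchor = vv.ravel(), tt.ravel()
coeffs = fit_from_anchors(v_anchor, t_anchor, synthetic_spice_delay(v_anchor, t_anchor))

# Generate an unsimulated corner, e.g. 0.825 V / 85 C.
predicted = generate_corner(coeffs, 0.825, 85.0)[0]
print(f"predicted {predicted:.3f} ps, reference {synthetic_spice_delay(0.825, 85.0):.3f} ps")
```

Real .lib data is noisier and less well-behaved than this, which is why the choice of model, and the number and placement of anchor corners, matter in practice.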

Digital design and signoff teams that obtain .libs from other sources, such as in-house library teams or external IP vendors, can also benefit from this flow. Starting from the original set of PVTs supplied by the library provider, digital teams can produce the additional PVTs required for their specific design conditions. This gives them precise PVT coverage without having to replicate the library provider’s characterization environment.

How machine learning accelerates .lib verification

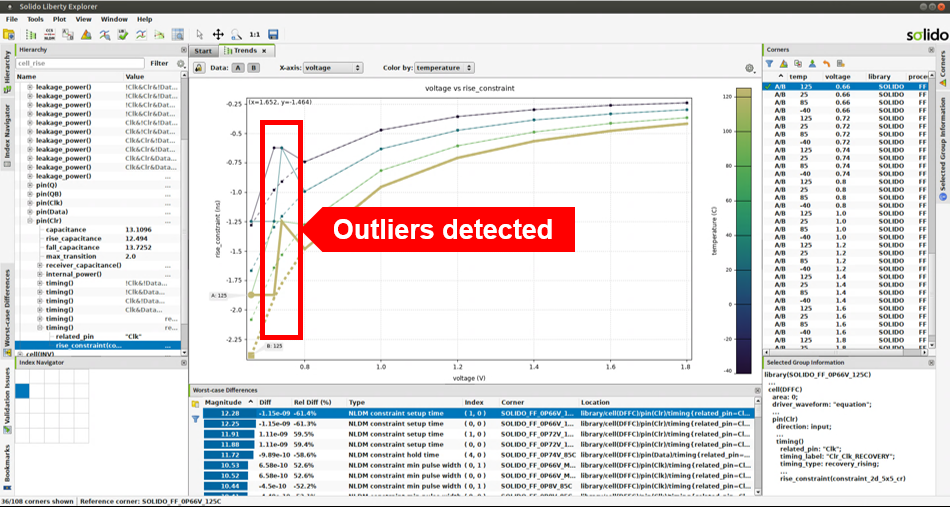

Another use case for ML methods is .lib verification. The same technology used to produce a new .lib can also provide ‘expected values’ for existing data points across a full set of PVTs, giving the user an accurate re-validation of each table entry in the .libs (Figure 3).

The large amount of data in advanced process node .libs becomes an advantage in this situation too because it helps construct an accurate representation of the .lib characterization results.
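The verification use case can be sketched in the same spirit: for each corner, refit on the remaining corners and compare the stored value with the model's expected value. This leave-one-corner-out check, the feature set and the 2% tolerance are illustrative assumptions, not the Solido Analytics algorithm, and the data is synthetic.

```python
import numpy as np

def features(v, t):
    """Hypothetical feature map in supply voltage v (V) and temperature t (C)."""
    return np.column_stack([np.ones_like(v), 1 / v, 1 / v**2, t, t / v])

def flag_suspect_corners(v, t, values, rel_tol=0.02):
    """Leave-one-corner-out check: refit on all other corners, predict the
    held-out corner, and flag it if the stored value deviates by more than rel_tol."""
    v, t, values = (np.asarray(a, dtype=float) for a in (v, t, values))
    suspects = []
    for i in range(len(values)):
        others = np.arange(len(values)) != i
        coeffs, *_ = np.linalg.lstsq(features(v[others], t[others]), values[others], rcond=None)
        expected = (features(v[i:i + 1], t[i:i + 1]) @ coeffs)[0]
        if abs(values[i] - expected) > rel_tol * abs(expected):
            suspects.append(i)
    return suspects

def synthetic_delay(v, t):
    # Stand-in for characterized .lib values (ps); not real data.
    return 4.0 + 3.0 / v + 6.0 / v**2 + 0.01 * t + 0.015 * t / v

vv, tt = np.meshgrid([0.675, 0.75, 0.825, 0.9], [-40.0, 0.0, 25.0, 125.0])
v, t = vv.ravel(), tt.ravel()
stored = synthetic_delay(v, t)
print("clean data:", flag_suspect_corners(v, t, stored))
stored[5] *= 1.05          # inject a 5% error at one corner (0.75 V, 0 C)
print("with injected error:", flag_suspect_corners(v, t, stored))
```

A single large error can also nudge the expected values computed for neighboring corners, so a practical flow would rank deviations and use more robust models rather than rely on a fixed threshold alone.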

ML also makes it feasible to validate LVF data. Since LVF data consists of sigma values for each table entry, using SPICE optimization or brute-force Monte Carlo analysis to revalidate LVF table values is impractical, because millions of table values require validation. The ML approach to verification is data-driven and is not constrained by the long runtimes needed to reproduce results.
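A quick calculation shows why brute-force Monte Carlo does not scale to LVF revalidation. Under a normal-distribution assumption, the standard error of a sample standard deviation is roughly σ/√(2(n−1)), so pinning a single sigma value down to 1% takes about 5,000 samples for that one entry. The 2 million entry count below is a hypothetical stand-in for "millions of table values", and each sample is assumed to cost one SPICE simulation.

```python
import math

def mc_samples_for_sigma(precision_pct):
    """Monte Carlo samples per table entry so that the standard error of the
    sample standard deviation is at most precision_pct percent of sigma.

    Normal-theory approximation: SE(s) ~= sigma / sqrt(2 * (n - 1)),
    so n >= 1 + 5000 / precision_pct**2 (precision_pct given in percent).
    """
    return 1 + math.ceil(5000 / precision_pct ** 2)

table_values = 2_000_000   # hypothetical number of LVF sigma entries across all PVTs
for pct in (5, 2, 1):
    n = mc_samples_for_sigma(pct)
    print(f"{pct}% precision: {n:,} samples/entry -> {n * table_values:,} simulations")
```

Even at a loose 5% precision, this assumed workload runs to hundreds of millions of simulations for verification alone, on top of characterization.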

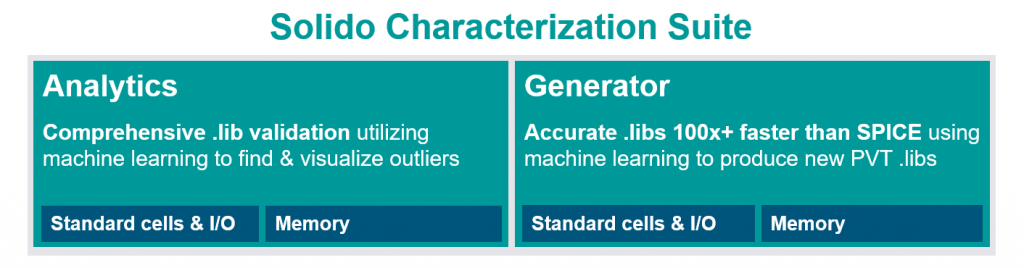

Machine learning in the Solido Characterization Suite

Siemens’ Solido Characterization Suite (Figure 4) utilizes ML methods to verify and accelerate the characterization of .libs. It consists of Solido Generator, which accelerates .lib characterization by 100x compared to SPICE characterization, and Solido Analytics, which provides a comprehensive verification solution for all .lib data, including NLDM, CCS, and LVF with moments.

Both Generator and Analytics incorporate the ML methods discussed above and provide a solution for handling .lib requirements across all digital methodologies, including those leveraging advanced process node libraries.

For more information on this, please visit the section on the Solido Characterization Suite on the new Siemens EDA website.

Summary

Advanced process nodes offer design teams power, performance and area benefits that can provide a competitive edge for SoC/IC designs. However, .lib models for these nodes, a key component in STA, have become significantly larger and more complex.

ML methods can be integrated into library characterization and verification to address the exponentially increasing SPICE simulation requirements and .lib verification challenges of advanced process node .libs.

Siemens’ Solido Characterization Suite is a machine learning-enabled library characterization and verification solution that takes advantage of the large datasets and high complexity of .libs to accelerate library characterization by 100x compared to SPICE, and provide a comprehensive solution for verifying correctness and accuracy of .libs.

Wei-Lii Tan is a Senior Product Manager at Siemens EDA.