Let there be no misunderstanding: Verifying CXL cache coherency

How to work with the Compute Express Link and protocols such as MESI to maintain cache coherency.

To achieve higher performance in multiprocessor systems, each processor usually has its own cache. Processors and co-processors must therefore work together efficiently, sharing memory while caching copies of the shared data. Cache coherence refers to keeping the data in these caches consistent. Maintaining cache coherency is a formidable technical challenge, and it is one that the Compute Express Link (CXL) addresses.

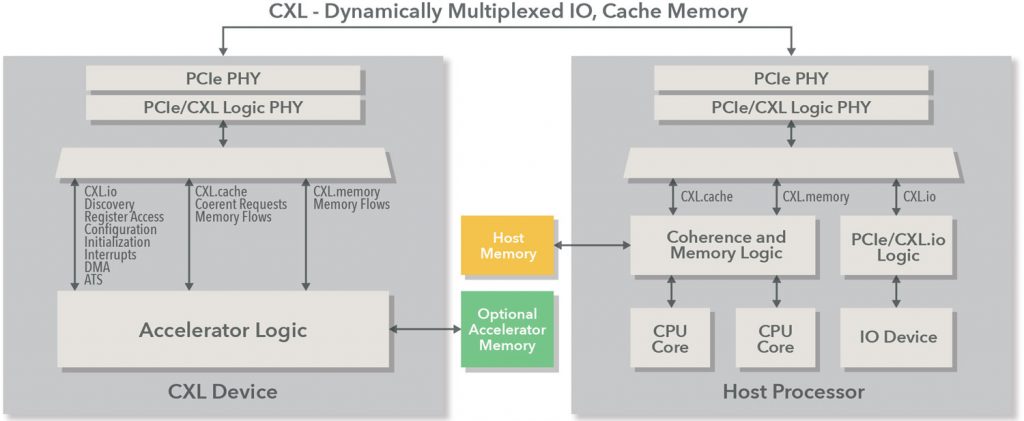

CXL is based on the PCI Express (PCIe) 5.0 and 6.0 physical layer infrastructure, using PCIe electricals and standard PCIe form factors for the add-in card. It enables high-bandwidth, low-latency connectivity between the host processor and devices such as accelerators, memory buffers, and smart I/O devices.

CXL helps to enable high-performance computational workloads by supporting heterogeneous processing and memory systems with applications in artificial intelligence, machine learning, communications, and high-performance computing.

CXL maintains a unified, coherent memory space between the CPU (host processor) and any memory on the attached CXL device, allowing both the CPU and device to share resources for higher performance and reduced software stack complexity.

Why is cache coherency required?

Since each core has its own cache, the copy of the data in a cache may not always be the most up-to-date version. For example, imagine a dual-core processor in which each core has brought the same block of memory into its private cache, and then one core writes a value to a location in that block. When the second core reads that location from its cache, it will not see the most recent value unless its cache entry has been invalidated or updated. A coherence policy is therefore needed to update or invalidate the second core's copy; otherwise, the second core reads stale data and produces incorrect results.
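To make the stale-read problem concrete, here is a minimal Python sketch of two write-back caches with no coherence mechanism at all. The class name `PrivateCache` and the dictionary standing in for main memory are illustrative assumptions, not part of any real cache design.

```python
# Toy model of two private write-back caches with NO coherence protocol.
memory = {0x40: 0}  # shared main memory: address -> value


class PrivateCache:
    """A toy cache that never snoops the bus and is never invalidated."""

    def __init__(self):
        self.lines = {}  # address -> locally cached value

    def read(self, addr):
        if addr not in self.lines:       # miss: fetch the block from memory
            self.lines[addr] = memory[addr]
        return self.lines[addr]          # hit: return the local (possibly stale) copy

    def write(self, addr, value):
        self.read(addr)                  # write-allocate: bring the block in first
        self.lines[addr] = value         # update only this core's copy


core0, core1 = PrivateCache(), PrivateCache()
core0.read(0x40)                           # both cores cache the same block
core1.read(0x40)
core0.write(0x40, 42)                      # core 0 writes a new value
print(core0.read(0x40), core1.read(0x40))  # prints "42 0": core 1 reads stale data
```

Nothing in this model ever tells core 1 that its copy is out of date, which is exactly the gap a coherence protocol closes.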

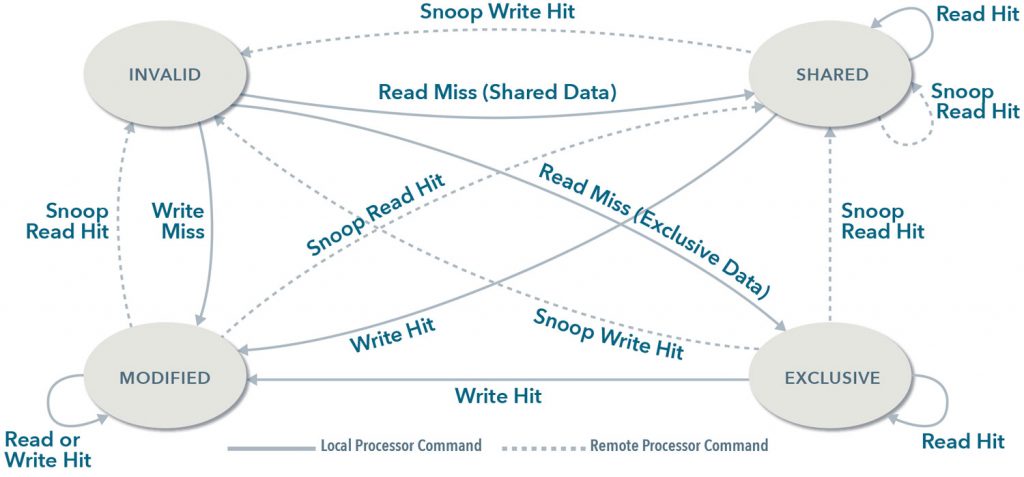

Multiprocessor systems use various cache coherence protocols. One of the most common is MESI, an invalidation-based protocol named after the four states that a cache block can have:

- Modified: The cache block is dirty with respect to the shared levels of the memory hierarchy; that is, it differs from the copy in memory. The core that owns the cache holding the ‘modified’ data can make further changes at will.

- Exclusive: The cache block is clean with respect to the shared levels of the memory hierarchy, and no other cache holds a copy. If the owning core wants to write to the data, it can change the state to ‘modified’ without consulting any other cores.

- Shared: The cache block is clean with respect to the shared levels of the memory hierarchy, and other caches may also hold copies, so the block is read-only. A core may read a block in the ‘shared’ state at any time; however, to write it, the core must first invalidate the copies in the other caches to gain exclusive ownership, after which the write moves the block to the ‘modified’ state.

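- Invalid: The cache block does not hold valid data, either because the block was never brought into the cache or because it has been invalidated. It must be fetched again before it can be used.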
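The properties that distinguish the four states can be captured in a few lines. This Python sketch encodes only what the list above states (validity, dirtiness, and whether a write needs to consult other cores); the `Mesi` enum and its property names are illustrative assumptions.

```python
from enum import Enum


class Mesi(Enum):
    """The four MESI states of a cache block, with the properties described above."""
    MODIFIED = "M"
    EXCLUSIVE = "E"
    SHARED = "S"
    INVALID = "I"

    @property
    def valid(self):
        # Only INVALID holds no usable data.
        return self is not Mesi.INVALID

    @property
    def dirty(self):
        # Only MODIFIED differs from the shared levels of the memory hierarchy.
        return self is Mesi.MODIFIED

    @property
    def writable_without_bus(self):
        # In M and E this cache holds the only valid copy,
        # so the owning core can write without consulting other cores.
        return self in (Mesi.MODIFIED, Mesi.EXCLUSIVE)


for state in Mesi:
    print(f"{state.value}: valid={state.valid}, dirty={state.dirty}, "
          f"write_without_bus={state.writable_without_bus}")
```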

State transitions are driven by local memory accesses and by bus snooping activity. When several caches share specific data and a processor modifies the shared data’s value, the change must be propagated to all the other caches that hold a copy. Bus snooping provides this notification: when a transaction that modifies a shared cache block appears on the bus, every snooper checks whether its cache holds a copy of that block. If it does, that copy must be invalidated, or flushed first if it is dirty, to ensure cache coherency.
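Write-invalidate snooping can be sketched as a small Python model. This is the classic shared-bus textbook scheme under simplifying assumptions (one value per block, atomic bus transactions, hypothetical class names `Bus` and `SnoopingCache`), not the CXL.cache message protocol.

```python
class Bus:
    """A shared bus that broadcasts each cache's requests to every other cache."""

    def __init__(self, memory):
        self.memory = memory   # address -> value
        self.caches = []

    def attach(self, cache):
        self.caches.append(cache)

    def broadcast_read(self, requester, addr):
        # Build the full list first so EVERY other cache snoops (no short-circuit).
        hits = [c.snoop_read(addr) for c in self.caches if c is not requester]
        return any(hits)       # True if some other cache keeps a copy

    def broadcast_invalidate(self, requester, addr):
        for c in self.caches:
            if c is not requester:
                c.snoop_invalidate(addr)


class SnoopingCache:
    """Toy write-back, write-invalidate cache whose lines hold [state, value]."""

    def __init__(self, bus):
        self.bus = bus
        self.lines = {}
        bus.attach(self)

    def state(self, addr):
        return self.lines[addr][0] if addr in self.lines else "I"

    def read(self, addr):
        if self.state(addr) == "I":
            # Miss: snoopers flush any dirty copy to memory before we read it.
            shared = self.bus.broadcast_read(self, addr)
            self.lines[addr] = ["S" if shared else "E", self.bus.memory[addr]]
        return self.lines[addr][1]

    def write(self, addr, value):
        if self.state(addr) in ("S", "I"):
            # Other copies may exist: invalidate them before writing.
            self.bus.broadcast_invalidate(self, addr)
        self.lines[addr] = ["M", value]   # no bus traffic was needed if already M or E

    def snoop_read(self, addr):
        if self.state(addr) == "I":
            return False
        if self.state(addr) == "M":
            self.bus.memory[addr] = self.lines[addr][1]   # flush dirty data
        self.lines[addr][0] = "S"
        return True

    def snoop_invalidate(self, addr):
        if self.state(addr) == "M":
            self.bus.memory[addr] = self.lines[addr][1]   # flush before dropping
        if addr in self.lines:
            self.lines[addr][0] = "I"


memory = {0x40: 0}
bus = Bus(memory)
caches = [SnoopingCache(bus) for _ in range(3)]
for c in caches:
    c.read(0x40)                          # all three copies end up Shared
caches[0].write(0x40, 42)                 # snoopers invalidate their copies
print([c.state(0x40) for c in caches])    # ['M', 'I', 'I']
print(caches[1].read(0x40))               # 42, flushed to memory by cache 0
print([c.state(0x40) for c in caches])    # ['S', 'S', 'I']
```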

Figure 2 shows the state-transition diagram for this protocol and how the cache states transition on receiving commands from the local and remote processors.
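The same transitions can be written down as a lookup function. The sketch below uses common textbook names for local processor events (`PrRd`, `PrWr`), snooped remote events (`BusRd`, `BusRdX`), and bus actions (`BusRdX`, `BusUpgr`, `Flush`); these names are assumptions chosen for illustration and may differ from the labels in Figure 2 and from CXL opcode names.

```python
def next_state(state, event, others_have_copy=False):
    """Return (next_state, bus_action) for one cache line under MESI.

    state: 'M', 'E', 'S' or 'I'
    event: 'PrRd' / 'PrWr' (local processor) or 'BusRd' / 'BusRdX' (snooped remote)
    others_have_copy: only matters for a read miss (selects E vs. S)
    """
    if event == "PrRd":
        if state == "I":
            return ("S" if others_have_copy else "E"), "BusRd"
        return state, None                 # read hit in M, E or S
    if event == "PrWr":
        if state in ("M", "E"):
            return "M", None               # E -> M is a silent upgrade
        if state == "S":
            return "M", "BusUpgr"          # invalidate the other copies first
        return "M", "BusRdX"               # I: read-for-ownership
    if event == "BusRd":
        if state == "M":
            return "S", "Flush"            # supply the dirty data
        if state in ("E", "S"):
            return "S", None
        return "I", None
    if event == "BusRdX":
        if state == "M":
            return "I", "Flush"
        return "I", None
    raise ValueError(f"unknown event {event!r}")


print(next_state("I", "PrRd", others_have_copy=True))  # ('S', 'BusRd')
print(next_state("M", "BusRd"))                        # ('S', 'Flush')
```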

Addressing cache coherency challenges

Since data in each cache can be modified locally, the risk of using invalid data is high. Therefore, you must provide a mechanism that manages when and how changes can be made. Cache-coherent systems are high-risk design elements: they are challenging to design and even more challenging to verify. Being able to confidently sign off that your system is cache coherent is a key verification challenge.
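One common way to make coherence sign-off checkable is to assert invariants on every cache line at every step. The sketch below checks the single-writer/multiple-reader invariant for MESI plus a data-value rule (clean copies must match memory). The function name `check_line` and its inputs are hypothetical; a real checker would sample them from the testbench.

```python
def check_line(states, values, memory_value):
    """Check one address across all caches; return a list of violation messages.

    states: MESI letter per cache ('M', 'E', 'S' or 'I')
    values: data value held by each cache (ignored for 'I')
    memory_value: value currently in main memory
    """
    errors = []
    owners = [i for i, s in enumerate(states) if s in ("M", "E")]
    sharers = [i for i, s in enumerate(states) if s == "S"]
    # Single writer: at most one cache may own the line in M or E.
    if len(owners) > 1:
        errors.append(f"multiple owners in M/E: caches {owners}")
    # Single writer OR multiple readers, never both at once.
    if owners and sharers:
        errors.append(f"owner(s) {owners} coexist with sharers {sharers}")
    # Clean states (E, S) must match memory; only M may differ.
    for i, (s, v) in enumerate(zip(states, values)):
        if s in ("E", "S") and v != memory_value:
            errors.append(f"cache {i} is clean ({s}) but holds {v}, memory holds {memory_value}")
    return errors


print(check_line(["M", "I", "I"], [42, 0, 0], 0))  # []
print(check_line(["M", "S", "I"], [42, 0, 0], 0))  # one violation: owner coexists with a sharer
```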

Another challenge in verifying a CXL cache-based design is that the specification defines a vast range of request and response types, which combine with an equally vast number of possible cache-state combinations. Every combination and permutation must be verified thoroughly. And although the specification defines the logical behavior of activity on the bus, the sequencing and timing of accesses to shared cache lines must also be verified accurately.
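A quick count shows how fast this space grows. The sketch below crosses a request type, the requesting cache, and the MESI state of every cache, then filters out state vectors that MESI forbids. The five opcode names are an illustrative subset of CXL.cache device-to-host requests, and the model ignores snoops, responses, ordering, and timing, so the real space is far larger.

```python
from itertools import product

# Illustrative subset only: a few CXL.cache device-to-host request opcode names.
# The full set of request, snoop and response types is defined in the CXL specification.
REQUESTS = ("RdShared", "RdOwn", "RdCurr", "CleanEvict", "DirtyEvict")
STATES = ("M", "E", "S", "I")


def legal(states):
    """MESI rule: either no owner, or exactly one M/E owner and no S copies."""
    owners = sum(s in ("M", "E") for s in states)
    return owners == 0 or (owners == 1 and "S" not in states)


def scenarios(num_caches, legal_only=False):
    """Yield every (request, requesting cache, per-cache state vector) combination."""
    for req, requester, states in product(REQUESTS, range(num_caches),
                                          product(STATES, repeat=num_caches)):
        if not legal_only or legal(states):
            yield req, requester, states


for n in (1, 2, 3, 4):
    total = sum(1 for _ in scenarios(n))
    valid = sum(1 for _ in scenarios(n, legal_only=True))
    print(f"{n} caches: {total} combinations, {valid} with legal state vectors")
```

The raw count grows as 5 · n · 4^n, so even this toy cross reaches 5,120 combinations at four caches before any timing variation is considered.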

Creating and managing thousands of individual test cases is not feasible within realistic schedule constraints. A wide range of predefined stimuli is needed to achieve high coverage against your compliance goals.
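One widely used alternative to hand-writing directed tests is constrained-random stimulus: a seeded generator produces varied but reproducible operation sequences. The sketch below is a generic illustration, not how QVIP generates its stimuli; the function name, the pool of "hot" addresses, and the write ratio are assumptions.

```python
import random


def random_sequence(seed, length, num_cores=4, hot_lines=(0x00, 0x40, 0x80), write_ratio=0.3):
    """Return a reproducible constrained-random list of (core, op, addr) tuples.

    Constraints (illustrative assumptions):
      * addresses come from a small pool of 'hot' lines so cores contend for them;
      * roughly write_ratio of the operations are writes.
    """
    rng = random.Random(seed)          # private generator: same seed, same sequence
    sequence = []
    for _ in range(length):
        core = rng.randrange(num_cores)
        op = "write" if rng.random() < write_ratio else "read"
        addr = rng.choice(hot_lines)
        sequence.append((core, op, addr))
    return sequence


# Each seed in a regression stands in for one hand-written directed test.
for seed in range(3):
    print(seed, random_sequence(seed, 4))
```

Keeping the address pool small is the design choice that matters here: it forces cores to collide on the same lines, which is where coherence bugs tend to hide.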

The major requirements for CXL cache verification are:

- A Verification Plan with stimuli: A variety of stimuli for achieving verification-completeness goals

- Checks: Complete protocol checking with cache coherency checking at every stage of verification

- Debug Mechanism: An efficient debug mechanism to reduce verification cycle time

- Coverage: A detailed coverage map for measuring verification completeness
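Coverage can be measured by recording which (state, event) pairs have been exercised, much like a cross of two coverpoints in a SystemVerilog covergroup. This Python sketch assumes textbook event names (`PrRd`, `PrWr` for local accesses; `BusRd`, `BusRdX` for snooped remote requests); the class name `TransitionCoverage` is hypothetical.

```python
from itertools import product

STATES = ("M", "E", "S", "I")
EVENTS = ("PrRd", "PrWr", "BusRd", "BusRdX")


class TransitionCoverage:
    """Cross coverage of (current state, event) bins for one cache."""

    def __init__(self):
        self.hits = {bin_: 0 for bin_ in product(STATES, EVENTS)}

    def sample(self, state, event):
        self.hits[(state, event)] += 1      # unknown bins raise KeyError

    def percent(self):
        covered = sum(1 for n in self.hits.values() if n > 0)
        return 100.0 * covered / len(self.hits)

    def holes(self):
        """Bins never hit: the scenarios still missing from the regression."""
        return [bin_ for bin_, n in self.hits.items() if n == 0]


cov = TransitionCoverage()
for state, event in [("I", "PrRd"), ("E", "PrWr"), ("M", "BusRd"), ("S", "BusRdX")]:
    cov.sample(state, event)
print(f"{cov.percent():.1f}% covered, {len(cov.holes())} holes")  # 25.0% covered, 12 holes
```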

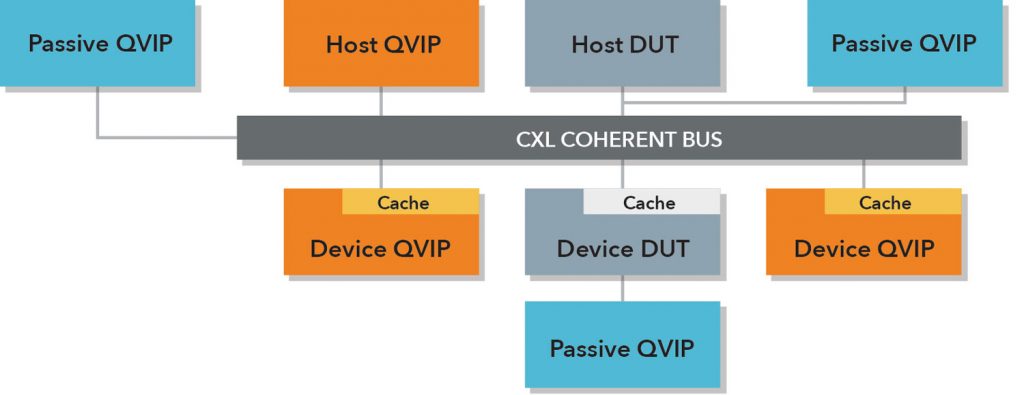

Questa Verification IP for CXL (Figure 3) meets these requirements. It is a comprehensive solution for exhaustive verification of CXL-based IP and SoC products, providing the flexibility to create and cover all possible verification scenarios. It includes ready-to-use verification components and exhaustive stimuli to increase productivity and accelerate verification signoff.

Intelligent modeling allows the Questa Verification IP (QVIP) to mimic either host or device behavior, depending on which end of the CXL interconnect the DUT occupies. QVIP can also be hooked up as a passive component that monitors the bus and provides verification capabilities such as checkers, coverage, and loggers.
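A passive monitor only observes: it drives nothing, but it logs every transaction and flags any that break the protocol. Below is a minimal Python sketch of that idea for per-line MESI transitions, using Python's standard `logging` module. The class name `PassiveMonitor`, the event names, and the legal-transition table are illustrative assumptions rather than QVIP's interface.

```python
import logging


def build_legal():
    """Map (state, event) -> set of states a line may legally move to."""
    legal = {}
    for s in "MESI":
        # A read hit keeps the state; a read miss goes to E or S depending on sharers.
        legal[(s, "PrRd")] = {"E", "S"} if s == "I" else {s}
        legal[(s, "PrWr")] = {"M"}                             # any completed write ends in M
        legal[(s, "BusRd")] = {"I"} if s == "I" else {"S"}     # remote read: keep a shared copy
        legal[(s, "BusRdX")] = {"I"}                           # remote write: invalidate
    return legal


LEGAL = build_legal()


class PassiveMonitor:
    """Observes transitions without driving anything; logs each one and records violations."""

    def __init__(self):
        self.log = logging.getLogger("mesi_monitor")
        self.violations = []

    def observe(self, cache_id, event, before, after):
        self.log.info("cache %d: %s %s -> %s", cache_id, event, before, after)
        if after not in LEGAL[(before, event)]:
            msg = f"cache {cache_id}: illegal {event} transition {before} -> {after}"
            self.violations.append(msg)
            self.log.error(msg)


logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
monitor = PassiveMonitor()
monitor.observe(0, "PrRd", "I", "E")   # legal: read miss with no other sharers
monitor.observe(1, "BusRd", "M", "S")  # legal: dirty owner flushes and shares
monitor.observe(2, "PrWr", "S", "E")   # illegal: a completed write leaves the line in M
print(monitor.violations)
```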

Further reading

The whitepaper Purging CXL cache coherency dilemmas digs deep into the importance of maintaining CXL cache coherency in high-performance multiprocessor system designs, and demonstrates how Questa Verification IP for CXL makes it easier to achieve maximum verification productivity and design quality in CXL-based IP and SoCs.

About the authors

Nikhil Jain is a Principal Engineer in the IC Verification IP team at Siemens EDA, specializing in the development of CXL, Memories, and Ethernet verification IP. He received his BTech degree in Electronics and Communication from GGSIPU University Delhi in 2007.

Gaurav Manocha is a Member Consulting Staff at Siemens EDA. He has nine years of experience in verification IP and testbench development. He received his Bachelor’s Degree in Electronics and Communication from ITM University India. In his current role, he supports the deployment of CXL and PCIe Verification IP at Siemens EDA.