Introduction to the Compute Express Link (CXL) protocols

A look at the key protocols that make up the Compute Express Link (CXL) standard for connecting CPUs and accelerators in heterogeneous computing environments.

Compute Express Link (CXL) is an open interconnect standard for enabling efficient, coherent memory accesses between a host, such as a CPU, and a device, such as a hardware accelerator, that is handling an intensive workload.

A consortium to enable this new standard was launched recently, at the same time that CXL 1.0, the first version of its interface specification, was released.

CXL is expected to be implemented in heterogeneous computing systems that include hardware accelerators for artificial intelligence, machine learning, and other specialized tasks.

This article focuses on the CXL protocols.

The CXL standard defines three protocols that are dynamically multiplexed together before being transported via a standard PCIe 5.0 PHY at 32GT/s.

The CXL.io protocol is an enhanced version of a PCIe 5.0 protocol that can be used for initialization, link-up, device discovery and enumeration, and register access. It provides a non-coherent load/store interface for I/O devices.

The CXL.cache protocol defines interactions between a host and a device, allowing attached CXL devices to efficiently cache host memory with extremely low latency using a request and response approach.

The CXL.mem protocol provides a host processor with access to the memory of an attached device using load and store commands, with the host CPU acting as a master and the CXL device acting as a subordinate. This approach can support both volatile and persistent memory architectures.

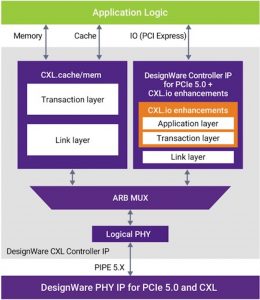

Figure 1 shows that the CXL.cache and CXL.mem protocols are combined and share a common link and transaction layer, while CXL.io has its own link and transaction layer.

Figure 1 Block diagram of a CXL device showing PHY, controller and application (Source: Synopsys)

The data from the three protocols is dynamically multiplexed by the arbitration and multiplexing (ARB/MUX) block before being handed to the PCIe 5.0 PHY for transmission at 32GT/s. The ARB/MUX arbitrates between requests from the two CXL link layers (CXL.io and CXL.cache/mem) and then multiplexes the requested data according to the arbitration results. Arbitration follows a weighted round-robin scheme whose weights are set by the host. The ARB/MUX also handles power-state transition requests from the link layers, merging them into a single request to the physical layer so that it powers down in an orderly way.
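The weighted round-robin idea can be illustrated with a small Python sketch. This is a generic model of the technique, not the arbitration logic defined by the CXL specification: the function name `weighted_round_robin`, the queue contents, and the weights of 1 and 2 are all hypothetical values chosen for illustration.

```python
from collections import deque


def weighted_round_robin(queues, weights):
    """Yield (source, item) pairs in weighted round-robin order.

    In each round, a source may send up to weights[source] items
    before the arbiter moves on to the next source.
    """
    if any(w < 1 for w in weights.values()):
        raise ValueError("every weight must be at least 1")
    pending = {name: deque(items) for name, items in queues.items()}
    while any(pending.values()):
        for name, weight in weights.items():
            for _ in range(weight):
                if not pending[name]:
                    break  # this source has nothing left to send
                yield name, pending[name].popleft()


# Hypothetical traffic waiting at the two link layers.
queues = {
    "CXL.io": ["io0", "io1"],
    "CXL.cache/mem": ["cm0", "cm1", "cm2", "cm3"],
}
# Hypothetical host-programmed weights: cache/mem gets twice the share.
weights = {"CXL.io": 1, "CXL.cache/mem": 2}

order = list(weighted_round_robin(queues, weights))
for source, item in order:
    print(source, item)
```

With these assumed weights, each round grants one CXL.io item followed by two CXL.cache/mem items, so the heavier-weighted link layer gets twice the share of the link without starving the other.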

CXL transports data via fixed-width 528-bit flits, each made up of four 16-byte slots plus a two-byte CRC (4 x 16 + 2 = 66 bytes = 528 bits). Slots are defined in various formats and can be dedicated to either the CXL.cache protocol or the CXL.mem protocol. The flit header defines the slot formats and carries information that enables the transaction layer to route data to the intended protocols.
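The flit arithmetic can be checked with a short Python sketch. It models only the sizes given above. The helper `build_flit`, the placement of the CRC after the slots, its byte order, and the placeholder CRC value are assumptions made for illustration; no real CXL CRC is computed and no slot format is modeled.

```python
SLOT_BYTES = 16      # each slot is 16 bytes
SLOTS_PER_FLIT = 4   # four slots per flit
CRC_BYTES = 2        # two-byte CRC

FLIT_BYTES = SLOTS_PER_FLIT * SLOT_BYTES + CRC_BYTES  # 4 x 16 + 2
FLIT_BITS = FLIT_BYTES * 8


def build_flit(slots, crc):
    """Concatenate four 16-byte slots and a 2-byte CRC value.

    The CRC is supplied by the caller; appending it last and using
    little-endian byte order are assumptions of this sketch.
    """
    if len(slots) != SLOTS_PER_FLIT:
        raise ValueError("a flit carries exactly four slots")
    if any(len(slot) != SLOT_BYTES for slot in slots):
        raise ValueError("each slot must be exactly 16 bytes")
    return b"".join(slots) + crc.to_bytes(CRC_BYTES, "little")


# Four dummy slots filled with distinct byte values, plus a placeholder CRC.
flit = build_flit([bytes([i]) * SLOT_BYTES for i in range(4)], crc=0xBEEF)

print(FLIT_BYTES, FLIT_BITS, len(flit))
```

Of the 66 bytes in each flit, 64 carry slot data, so the CRC accounts for roughly 3% of the flit.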

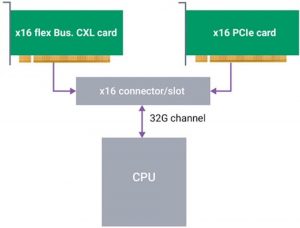

Since CXL uses the PCIe 5.0 PHY and electricals, it can plug into a system anywhere that PCIe 5.0 could be used. It does so through Flex Bus, a flexible high-speed port that can be statically configured to support either PCIe or CXL. Figure 2 shows an example of the Flex Bus link.

Figure 2 The Flex Bus Link supports Native PCIe and/or CXL cards (Source: Synopsys)

This approach enables a CXL system to take advantage of PCIe retimers. However, CXL is currently defined as a direct-attach CPU link only, so it cannot take advantage of PCIe switches. If switching is added to the CXL standard, the industry will have to build CXL switches.

Further information

Introduction to CXL device types

Author

Gary Ruggles, senior product marketing manager, Synopsys