Emulation for AI: Part One

AI companies of all sizes are looking to develop their own silicon as they face increasing demands for performance, market fragmentation that demands application-specific algorithm-to-hardware solutions, and pressure to reduce power consumption even as the AI processing load surges. The first article in this two-part series describes how emulation is playing an important role in enabling this shift into silicon. The second looks at how emulation’s capabilities matched the silicon design objectives of high-profile AI startup Wave Computing in creating a dataflow processing unit (DPU).

There has been a surge in the design of application-specific silicon for artificial intelligence (AI) systems. The trend poses interesting challenges in terms of overall system refinement, validation and verification. One of the keystone technologies being used to address those is emulation.

To understand emulation’s increasing use here – which spreads across household-name system design companies, hitherto software/algorithm-focused AI specialists, and startups – we need to understand why some companies are choosing not to port AI offerings to off-the-shelf hardware.

Three dynamics stand out:

- The increasing demands being placed on AI systems in terms of data volumes and performance.

- The fragmented nature of the AI/Internet of Things universe.

- The power consumption concerns mounting in step with AI’s deployment. Standard chips are already burning a lot of energy.

According to a model developed by Mentor, the market leader in emulation, that design technology addresses the resulting challenges across three main ‘pillars’ (needless to say, there are other emulator suppliers, but these concepts can be applied to the market generally):

- Scalability: These are big, multi-billion-gate designs, capable of operating either in isolation or stacked. The pre-silicon platform must match that capacity while allowing the design (hardware and software) to run at a realistic speed, so that benchmark results and refinements can be trusted.

- Virtualization: AI applications are new and various. An optimized silicon design for any one of them is likely to be a first generation design that will start as a model with no preceding hardware generation as reference. Development requires a pre-silicon platform that can handle a predominantly (possibly entirely) virtual model of the design.

- Determinism: Application-specific AI silicon is only one part of a system alongside, primarily, software and algorithms. All ideally need to be developed, refined and verified simultaneously. That kind of work can only take place if the hardware design maps to a pre-silicon platform in exactly the same way for every iteration when any benchmarks, software and algorithms are run on it.

Let’s now take a closer look at how these factors relate to one another, starting with the move toward AI ASICs.

Off-the-shelf or custom?

This question has faced developers of electronic systems for decades. Here is why it is now AI’s turn.

Performance and data volume

Cisco projects that annual global IP traffic will rise to 4.8ZB by 2022 from 1.5ZB in 2017, and that by the end of the forecast period machine-to-machine (M2M) connections will account for 51% of all networked devices and connections.

Data is the fuel for AI, ML and DL. The more you can get, the better the analysis and decision-making will be. But it also stands to reason that the more data you get, the greater the processing workload. Because the global data volume is going to carry on scaling at a rapid rate beyond 2022 – fueled by new use-cases and the increasing deployment of 5G – you need silicon and systems that can scale at the same rate.
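To put "scale at the same rate" into numbers, the annual growth implied by the two Cisco figures quoted above can be worked out directly. This is a minimal sketch that assumes simple compounding over the five years from 2017 to 2022; the function and variable names are illustrative only.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.10 == 10%)."""
    return (end / start) ** (1 / years) - 1


# Figures quoted from the Cisco forecast, in zettabytes.
traffic_2017_zb = 1.5
traffic_2022_zb = 4.8

growth = cagr(traffic_2017_zb, traffic_2022_zb, years=2022 - 2017)
print(f"Implied annual growth in IP traffic: {growth:.1%}")
```

Under that assumption the implied growth is roughly 26% a year, which is the pace the underlying silicon and systems would have to match.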

But this is not the only performance issue.

AI decision-making cannot follow one model. Some applications may be ‘dumb’ at the edge but ‘smart’ in the cloud. Others, most obviously those that are safety-critical like autonomous driving, will need to have a lot of intelligence at the edge to minimize latency.

Alternatively, consider retailing or the stock market. Already AI and advanced data analytics are being used in both fields. Retailers manage food and clothing, with world-class companies swapping fast- and slow-moving items in and out of stores on a daily basis. On Wall Street, high-frequency trading allows some funds to secure greater profit by placing trades seconds or even fractions of a second before their rivals.

These players want to use increasing data volumes and world-class AI platforms to make their systems yet more responsive and profitable. Because of the competitive advantage they provide, some see a value in making the systems proprietary.

Fragmentation

Any process that can be better automated, partially or in whole, through the use of AI represents a potential use-case. The Internet of Things is therefore an umbrella term for an incredibly wide range of markets, so wide that it should be self-evident that there almost certainly never can be a one-size-fits-all solution. Algorithms for, say, e-health are very different from those for stocktaking.

This is another reason why some companies are shifting toward adding their own hardware to existing AI systems, or including it from the ground up. But once you decide to optimize at the whole-system level, the fragmentation being seen in AI tends to dictate that the silicon on which those algorithms will run diverge from standard parts to maximize performance.

ASIC is arguably being rewritten to mean “algorithm-specific” as much as “application-specific”.

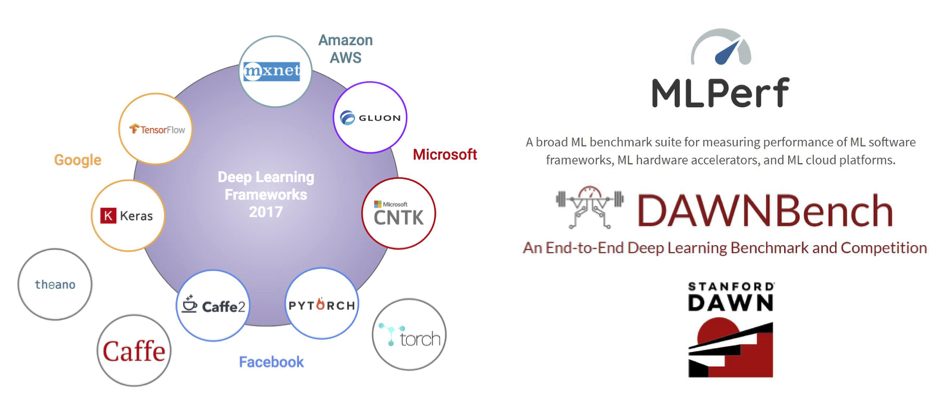

At the same time, because many of these markets are nascent, there are virtually no legacy hardware designs or IP blocks that can be reused or leveraged. There is also little in the shape of standards to form a hardware starting point beyond performance targets. There are frameworks (e.g., TensorFlow, Caffe) and benchmarks (e.g., MLPerf, DAWNBench), but they are mainly algorithm- or software-centric, with some nods to hardware accelerator performance.

Power

Server farms have long been under pressure to reduce power consumption, particularly those operated by the FAANG (Facebook/Apple/Amazon/Netflix/Google) and BAT (Baidu/Alibaba/Tencent) groupings, as well as those of Microsoft because of its position in cloud computing.

This environmental pressure was always likely to spill over into AI. A recent story in the UK newspaper The Guardian illustrated that by focusing on the power consumption levels related to cryptocurrency mining. It cites a number of cities that are already looking to constrain such activity.

In response, processors that are more tuned to particular tasks – and this need not only be for applications but also the structure of the data to be processed – offer one way of burning less energy.

And the big farm operators themselves want to cut their electricity bills. This is a bottom-line issue too.

Beyond that, though, there is the trend toward more processing and analysis at the edge, frequently using battery-powered devices. Here, the same constraints apply as for cellphones.

Emulation for AI

Let’s look at how closely emulation maps to the design objectives of those companies that are designing their own AI silicon alongside software and algorithms.

Some are big players like the FAANGs and BATs and some are existing semiconductor companies. They may go for the customer-owned tooling model, though ASIC will also be an option. Others are start-ups for which ASIC is likely to be the better option. The distinction does not matter much here. Emulation plays the same role in either model.

The key thing to consider is that these are very much system designs. Much of the complexity resides in the alignment of the system’s different hardware, software and algorithmic components.

In pure hardware design terms, the silicon is comparatively straightforward in that an AI chip will largely comprise multiple instantiations of a highly optimized core. The parallelism that suits AI – and which has given graphics processing companies a head start with their proprietary IP – is relatively well understood. And while getting the network-on-chip right can be tricky, there is likely to be little, if any, mixed-signal content to complicate things.

With that in mind, let’s consider Mentor’s three pillars of Emulation for AI in their system context.

Scalability

Although there is a lot of instantiation, AI chips are big with gate counts in the billions. To emulate the design, and allow algorithm optimization and software co-design, you need a pre-silicon platform with similar capacity. Mentor’s Veloce Strato platform currently scales up to 15 billion-gate designs. It has the capacity.

As such an emulator scales, it continues to deliver the performance that allows the system team to run tasks within leading AI frameworks and undertake benchmarking against MLPerf or DAWNBench. Those results – both in terms of system optimization and power consumption – have value because you can run the software on the hardware at megahertz clock speeds, as opposed to being in the tens of hertz on an FPGA platform.
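The practical weight of that clock-speed gap is easy to see with some back-of-envelope arithmetic. The sketch below is illustrative only: the workload size of one billion design clock cycles is a hypothetical figure, and 1 MHz and 10 Hz are simply taken as representative of "megahertz" and "tens of hertz".

```python
def wall_clock_seconds(cycles: int, clock_hz: float) -> float:
    """Time needed to execute `cycles` design clock cycles at `clock_hz`."""
    return cycles / clock_hz


WORKLOAD_CYCLES = 1_000_000_000  # hypothetical benchmark length, not a measured value
MEGAHERTZ_PLATFORM_HZ = 1_000_000  # assumed: platform running the design at 1 MHz
TENS_OF_HERTZ_PLATFORM_HZ = 10     # assumed: platform running the design at 10 Hz

fast = wall_clock_seconds(WORKLOAD_CYCLES, MEGAHERTZ_PLATFORM_HZ)
slow = wall_clock_seconds(WORKLOAD_CYCLES, TENS_OF_HERTZ_PLATFORM_HZ)

print(f"At 1 MHz: {fast / 60:.1f} minutes")
print(f"At 10 Hz: {slow / 86_400:.0f} days")
print(f"Speed-up: {slow / fast:,.0f}x")
```

With these assumed numbers, the same run that finishes in under 20 minutes at megahertz speeds would take years at tens of hertz, which is why only the faster platform makes benchmark-driven iteration realistic.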

An emulator also offers sufficient pre-silicon gate capacity whether the target is a system based on monolithic hardware or stacked silicon. This satisfies the two development strategies AI chip developers are using. And importantly, a high gate-count emulator can cope with the kind of rapid growth in system size that will meet AI’s ever-advancing performance targets.

Virtualization

Because of the lack of any real hardware legacy for most of this generation of AI players, the pre-silicon platform must be one that can take a model – say, C, SystemC or testbench – and run it.

Emulation freed itself from the lab some time ago and can host such models. It offers options beyond in-circuit emulation that drew more explicitly on tags from legacy designs.

Virtualization then plays a key role in bringing together the different parts of the design process. By either using a cloud-based emulator or locating it on an internal corporate network, software engineers and algorithm developers at one location can work on what their hardware colleagues have designed at another.

Optimization for a specific target is quicker and easier.

Determinism

Emulators offer greater visibility than many other pre-silicon environments. As a result, they are known for their use in debug and hardware/software co-optimization. This is an even more crucial step during the development of AI systems, where algorithms are continuously tuned.

In that context, determinism in the emulator plays an important role. With a complete system as the target, it is essential that each time the hardware is mapped to the emulation platform, it is exactly the same. Links between it and the algorithms that are intended to run on the final silicon need to be maintained at each stage in the design process.

This is nothing new if you have worked on earlier designs that also demanded a high degree of determinism. It is also a key weakness of FPGA-based development strategies: as the mapping there is not deterministic, how can the algorithm team be sure that its various tweaks will ultimately run on what emerges from the fab?
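What "mapped in exactly the same way for every iteration" means can be illustrated with a toy model. The sketch below is not how any real emulator compiler works; the block names, gate counts and the `map_design` placement rule are all hypothetical. It only shows the property that matters: given the same design, the mapping step makes the same decisions every time, and a fingerprint of the result can be compared between runs.

```python
import hashlib
import json


def map_design(blocks: dict[str, int], boards: int) -> dict[str, int]:
    """Toy placement: largest block first, onto the least-loaded board."""
    load = [0] * boards
    placement = {}
    # Sort by (-size, name) so ties are always broken the same way,
    # regardless of the order in which blocks were listed.
    for name, gates in sorted(blocks.items(), key=lambda kv: (-kv[1], kv[0])):
        board = load.index(min(load))  # lowest-numbered board wins ties
        placement[name] = board
        load[board] += gates
    return placement


def fingerprint(placement: dict[str, int]) -> str:
    """Stable hash of a placement, suitable for comparing two runs."""
    canonical = json.dumps(placement, sort_keys=True).encode()
    return hashlib.sha256(canonical).hexdigest()


# Hypothetical design: block name -> relative size.
design = {"core_0": 40, "core_1": 40, "noc": 25, "dma": 10}
shuffled = dict(reversed(list(design.items())))  # same design, different listing order

first = fingerprint(map_design(design, boards=2))
second = fingerprint(map_design(shuffled, boards=2))
print("Mappings identical:", first == second)
```

If the placement rule depended on listing order, a random seed or timing, the two fingerprints could differ, and the algorithm team could no longer assume that results from one iteration carry over to the next.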

Taken together, these three pillars answer the challenges posed by the development of integrated AI systems. They give designers the ability to refine platforms for performance and power.

They have the capacity and performance to run designs against benchmarks and enable iterative refinement. That refinement can then extend to meeting designers’ end-use targets within an increasingly fragmented space.

Meanwhile, emulators also have a long history of use for power optimization at the system level by offering visibility into its closely aligned components.

Paul Dempsey is editor-in-chief of Tech Design Forum
