Emulation for AI: Part Two

The first part of this feature discussed the trends causing AI companies to move from generic processors to their own ASICs or toward the use of still more focused platforms. This second article looks at what led Wave Computing, a pioneer in dedicated AI silicon, to adopt emulation during the development of its ‘dataflow processing unit’.

Scalability is key to artificial intelligence (AI). According to OpenAI, deep neural networks (DNNs) are doubling their performance demands every three-and-a-half months, compared to the 18-month doubling period associated with Moore’s Law.
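To put the two doubling periods side by side, the short Python sketch below compounds each over a few time horizons. It assumes clean exponential growth, which is a simplification, and the function name `growth_factor` is purely illustrative. The two doubling periods are the figures quoted above.

```python
def growth_factor(months: float, doubling_period_months: float) -> float:
    """Multiplicative growth after `months`, assuming a fixed doubling period."""
    return 2.0 ** (months / doubling_period_months)


DNN_DOUBLING_MONTHS = 3.5     # figure quoted in the article for DNN demands
MOORE_DOUBLING_MONTHS = 18.0  # figure quoted in the article for Moore's Law

if __name__ == "__main__":
    for horizon in (12, 18, 36):
        dnn = growth_factor(horizon, DNN_DOUBLING_MONTHS)
        moore = growth_factor(horizon, MOORE_DOUBLING_MONTHS)
        print(f"{horizon:>2} months: DNN demand x{dnn:,.1f}, Moore's Law x{moore:,.2f}")
```

On these assumptions, demand grows by more than a factor of ten in a single year, while an 18-month doubling period delivers well under a factor of two over the same period.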

At the same time, some applications are already beginning to strain the GPU-based AI processors that dominate AI silicon today. An analysis by Moor Insights & Strategy (MI&S) cites the examples of reinforcement learning for visual recognition and natural language processing as fields “where accelerators sometimes struggle with more than a few nodes”. Fragmentation in AI use-cases is thought likely to provide further examples.

Wave Computing and the AI dataflow processing unit

Wave Computing is a high-profile Silicon Valley AI startup. It recently closed an $86M venture capital funding round and has open-sourced the MIPS processor instruction set architecture, which it acquired in 2018. Its main IP, however, is a dataflow processing unit (DPU), the basis for chips which it is now offering under early access for AI applications at the server, enterprise and edge levels (a further reflection of how AI processing is itself being distributed as applications proliferate).

The DPU has been designed to harmonize with the dataflow graph of a DNN. In a paper commissioned by Wave, MI&S describes the core processor architecture:

“The company’s implementation of a dataflow architecture seems to present an elegant alternative to train and process DNNs for AI, especially when models require a high degree of scaling across multiple processing nodes. Instead of building fast parallel processors to act as an offload math acceleration engine for CPUs, this dataflow machine directly processes the flow of data of the DNN itself. The CPU is only used as a pre-processor to initiate runtime, not as a workload scheduler, parameter server, or code/data organizer.”

To address scalability, Wave has developed a technique that combines data parallelism and model parallelism. Data parallelism applies the whole model on every thread, with different parts of the input spread across the processing resource. Model parallelism presents the same data throughout but splits the model itself across the resource. The benefits of model parallelism come when the model cannot be accommodated by a single accelerator.
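The difference between the two schemes can be sketched in a few lines of Python. This is a toy, sequential simulation under stated assumptions, not Wave's implementation. The 'model' is just a list of simple functions, the 'workers' are loop iterations rather than real processors, and all names (`run_layers`, `data_parallel`, `model_parallel`) are hypothetical.

```python
from typing import Callable, List, Sequence

Layer = Callable[[float], float]


def run_layers(layers: Sequence[Layer], x: float) -> float:
    """Push one input through a sequence of layers."""
    for layer in layers:
        x = layer(x)
    return x


def data_parallel(layers: Sequence[Layer], batch: Sequence[float], n_workers: int) -> List[float]:
    """Every worker holds the full model; the batch is sharded across workers."""
    outputs = [0.0] * len(batch)
    for worker in range(n_workers):
        shard = batch[worker::n_workers]  # this worker's slice of the input
        outputs[worker::n_workers] = [run_layers(layers, x) for x in shard]
    return outputs


def model_parallel(layers: Sequence[Layer], batch: Sequence[float], n_workers: int) -> List[float]:
    """Every worker holds one contiguous stage of the model; all data visits every stage."""
    stage_size = max(1, -(-len(layers) // n_workers))  # ceiling division
    stages = [layers[i:i + stage_size] for i in range(0, len(layers), stage_size)]
    activations = list(batch)
    for stage in stages:  # each stage would live on a different worker
        activations = [run_layers(stage, x) for x in activations]
    return activations


# Toy four-layer model, for illustration only.
def double(x: float) -> float: return x * 2
def add_one(x: float) -> float: return x + 1
def square(x: float) -> float: return x * x
def minus_three(x: float) -> float: return x - 3


LAYERS = [double, add_one, square, minus_three]
BATCH = [1.0, 2.0, 3.0, 4.0, 5.0]

if __name__ == "__main__":
    print(data_parallel(LAYERS, BATCH, n_workers=2))
    print(model_parallel(LAYERS, BATCH, n_workers=2))
```

Both functions return the same results. What changes is what each worker has to hold: the entire model in the first case, only a slice of it in the second, which is why model parallelism matters once a model outgrows a single accelerator.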

Then, to allow its silicon to be stacked, Wave has used a distributed signal manager to deliver data more efficiently to each processing unit, together with a combination of novel interconnect (which enables the signal management) and memory. MI&S says of this:

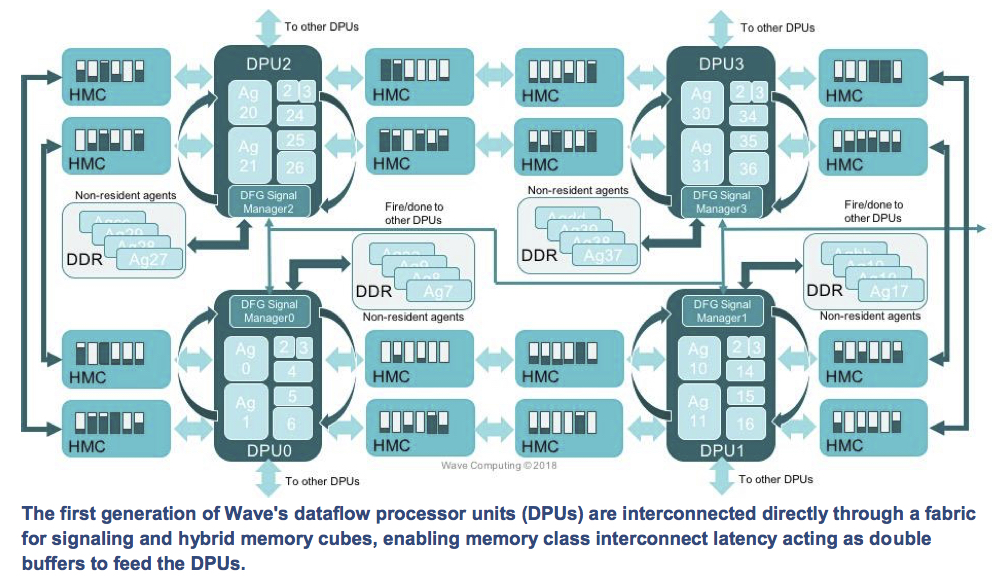

“The DPUs are interconnected directly with each other over a fabric (used for signaling “Fire” and “Done”) and through dual ported Hybrid Memory Cubes (HMC), which act both as fast memory and as shared data buffers between the DPUs. This allows shared double buffering to improve scalability by keeping the critical data close to the processors.

“The bisection bandwidth of this approach is impressive, which supports [Wave’s] scale-up and scale-out thesis, delivering up to 7.25TB/s per second. The company suggests that this would translate to approximately 300GB/s of user data on average, since much of the bandwidth is consumed by the movement of feature map data moving between the agents in the DPUs.”
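The gap between the two bandwidth figures in that passage is easier to appreciate as a ratio. The sketch below is back-of-the-envelope arithmetic only. It assumes decimal units (1TB = 1,000GB), and the constant and function names are illustrative.

```python
BISECTION_TB_PER_S = 7.25   # peak bisection bandwidth quoted by MI&S
USER_DATA_GB_PER_S = 300.0  # average user-data bandwidth suggested by Wave
GB_PER_TB = 1000.0          # assumption: decimal units


def user_data_fraction(user_gb_per_s: float, bisection_tb_per_s: float) -> float:
    """Share of the bisection bandwidth that carries user data."""
    return user_gb_per_s / (bisection_tb_per_s * GB_PER_TB)


if __name__ == "__main__":
    fraction = user_data_fraction(USER_DATA_GB_PER_S, BISECTION_TB_PER_S)
    print(f"User data: {fraction:.1%} of bisection bandwidth")
    print(f"Other traffic (e.g. feature maps): {1 - fraction:.1%}")
```

On those figures, user data accounts for roughly 4% of the peak, with the rest available for traffic such as the feature map movement MI&S describes.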

A block diagram for a four-DPU board is shown in Figure 1. MI&S has produced a white paper that takes a more detailed look at Wave’s architecture.

Figure 1. How AI dataflow processing units are interconnected through a bespoke fabric (Wave Computing)

Wave Computing and emulation

“These are very big chips,” says Jean-Marie Brunet, marketing director of Mentor’s emulation business. “There are billions of gates – we’re typically looking at three-to-four billion gates right now for AI – and according to its specification there are about 16,000 processing units on the main Wave chip.”

Wave shifted from an FPGA-based development strategy to emulation – and specifically to Mentor’s Veloce emulation platform – largely because of the scalability required by that silicon size.

“With the demands on AI now, there are two ways you can scale: You put more hardware into the stack or you increase the capacity of the chip,” says Brunet, “and those both create capacity issues for FPGA.

“But another aspect for Wave was that before they decided to really push on these DPUs, the software they were running on FPGA prototypes was end-user software, not performance software. When you move into your own performance software, you need a platform on which to run the right AI benchmarks – MLPerf and others. So, you now need many more metrics – power and performance – and visibility.

“Then, so much of this was new – there was no legacy – that for both the software and the hardware there was a real need for virtualization. You don’t have a previous design you can just plug into ICE [in-circuit emulation]. It’s all about models. The other issue with virtualization is that you can give highly distributed teams access to the platform and ensure concurrency of the hardware and software. [Wave has offices in the US, China, Taiwan, Sri Lanka and the Philippines]”

Scalability and virtualization form two of what Brunet sees as the three ‘pillars’ supporting the case for emulation in AI, with determinism as the third.

“And again, that is something that made Wave a good example – once they moved from end-user software to their own, they needed a platform to which the design would map exactly in the same way for each iteration. If you don’t have that and you’re looking to make architectural tradeoffs, then you’re wasting your time.”

A building emulation market in AI

If one takes Wave as emblematic of a trend, then things look good for emulation in the AI business. According to AI analyst Cognilytica, venture capital funding for AI startups reached $17B in 2017 and almost certainly exceeded that figure in 2018. The money is out there to add silicon NRE to AI’s traditional foundations in algorithms and software.

And the traditional silicon and system companies are getting involved. Companies like Google and Facebook have in-house projects, Nvidia is heavily committed (and itself responding to both the scalability and fragmentation issues) as is Intel. Qualcomm set up a $100M corporate venturing fund targeting AI at the end of 2018, and Wave itself has partnered with Broadcom for the development of its 7nm generation.

“And there are no real standards, so it is a bit like the Wild West, and that is obviously good for us,” says Brunet. “I think there are a couple of analogies you can look at.

“If you go back about 25 years ago and look at networking, it started that software was the key thing, and you put a few CPUs in a workstation. But then people realized that the power and the performance weren’t there and they would have to do their own hardware designs. It was expensive to start with, but soon the economies of scale kicked in. It’s much the same in AI now.

“The other more recent one to look at is what happened when we didn’t have EUV and we started with double-patterning, then multi-patterning (double, triple, quadruple). There was no single approach. Like with AI, we had to support them all.”

There is the issue that these will often be originally software-led companies coming to AI, but here Brunet is phlegmatic.

“If you start with the big systems companies, then obviously they already have silicon expertise in-house, so they know about emulation. They know about doing very big, very complex chips and what emulation can do,” he says. “The startups are having to bring in engineering expertise, who also bring an understanding of emulation with them.

“The basic hardware arguably isn’t as complex as some other designs. But these are big chips and they have to scale. If you look at the trends: If a company wins a socket they know that the AI or ML or DL system is going to scale and scale rapidly, so they have to be able to scale with it.

“There is an incredibly strong argument that emulation has a place here.”

Paul Dempsey is editor-in-chief of Tech Design Forum
