The shape of system design and verification in 2016

2016 marks the 20th anniversary of the term Electronic System Level (ESL), introduced by Gary Smith in 1996. Where are we now? And how will developments this year push the frontiers of practical ESL design?

The EDA industry has worked relentlessly for decades to raise the level of abstraction, allowing earlier and more efficient design entry as well as verification. Over the past two decades, ESL has earned a somewhat mixed reputation, perhaps because expectations were set wrongly. Still, an argument can be made that both verification and design entry have moved upwards significantly, and 2016 will push this trend even further.

The 1970s were dominated by layout-driven entry: complexity was low at the time. In the 1980s we arrived at the gate level. The 1990s saw abstraction move up to the register transfer level (RTL), first for verification and then, with logic synthesis, for automated implementation. When the term ESL was introduced, the expectation seemed to be that the move upwards would be as complete as the two previous elevations, from layout to gates and from gates to RTL, and would produce a single new form of representation from which implementation was automated. However, both the role of software and the breathtaking rise in complexity have thrown a wrench into all this, causing a fragmented move upwards.

The 1990s introduced some important trailblazer projects. For example, Cadence’s VCC, spearheaded by EDA pioneer Alberto Sangiovanni-Vincentelli, introduced the methodology of function-architecture co-design, recognizing the role of software and fully separating the entry of “function” and “architecture”, with a mapping in between. At DAC 2001, Cadence showed how the move of a functional unit from hardware to software could be implemented automatically and traced all the way to layout.

Cadence VCC, although an important trailblazer, came and went. Does this mean that the race to higher-level abstraction stopped and ESL was unsuccessful? Absolutely not. IP reuse increased productivity enough to keep chip design costs from becoming prohibitive. On the verification side, high-level verification languages (HVLs) such as e, Vera, and Superlog paved the way for SystemVerilog to automate verification. SystemC was introduced as a design and verification language above signal-level RTL.

With the introduction of the TLM-2.0 APIs in 2008, SystemC became the backbone for integrating transaction-level models into virtual platforms. Software as part of chip development completely changed the equation and became the dominant cost factor for complex designs. The industry has been striving towards a so-called “shift left” to allow continuous, agile integration of hardware and software earlier and earlier every year.

State-of-the-art design today: IP creation

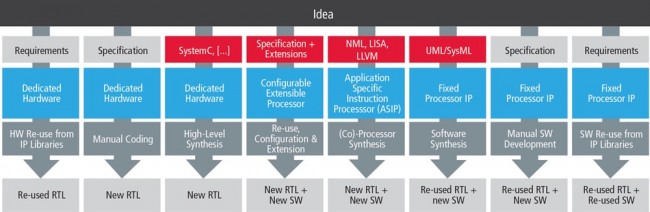

So where is the EDA industry today and what will 2016 bring? In IP creation, users have the choice of eight approaches to bring new functions into their design, as shown in Figure 1. Six of the options (including simply re-using IP) use higher levels of abstraction. First, users can simply re-use a hardware block if they are readily available as hardware IP.

Figure 1 Eight block implementation options

There is a healthy IP market that continues to grow. As a second option, they can manually implement the function in hardware as a new block starting with RTL, using the “good old way”, so to speak. The same two options exist on the software side, where users can manually implement software and run it on a standard processor or re-use software blocks if they are readily available as software IP – these are options seven and eight.

In addition, the four remaining IP creation options also split into hardware and software. As a third choice, users can use high-level synthesis (HLS) to create hardware as new blocks from a high-level description such as SystemC. Several companies use this method as the only way to create new IP. At Cadence, we are aware of 1000-plus such tapeouts using what is now our Stratus product line. On the software side, designers can use software automation to create software from a system model in UML, SysML, or MathWorks Simulink, and run it on a standard processor: that’s choice six.

To implement new functionality as a hardware-software combination, designers can use an extensible and configurable processor core. Automation is based on a higher-level processor description; this is block implementation choice four.

Finally, as implementation choice five, users can create an application-specific instruction-set processor (ASIP) together with its associated software, using automated tools. LISA and nML are common description languages for this purpose. Variations on both approaches exist, such as automatically creating co-processors from C code that is profiled on a standard processor.

Guess what? With the exception of manual implementation, the eight options shown in Figure 1 all effectively raise the level of abstraction.
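The tally behind that claim can be written down as a small Python sketch. The numbering follows the text; the `BlockOption` type, its field names, and the assignment of "seven" to manual software and "eight" to software re-use are assumptions made for illustration, since the article only names the two together.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BlockOption:
    """One of the eight block implementation options (hypothetical model)."""
    number: int
    domain: str   # "hardware", "software", or "hardware+software"
    method: str
    manual: bool  # True when the block is hand-coded at the lowest entry level


OPTIONS = [
    BlockOption(1, "hardware", "re-use hardware IP", False),
    BlockOption(2, "hardware", "manual implementation starting with RTL", True),
    BlockOption(3, "hardware", "HLS from a high-level description", False),
    BlockOption(4, "hardware+software", "extensible/configurable processor core", False),
    BlockOption(5, "hardware+software", "ASIP with associated software", False),
    BlockOption(6, "software", "software automation from a system model", False),
    # Assumed order for seven and eight; the text lists them as a pair.
    BlockOption(7, "software", "manual software on a standard processor", True),
    BlockOption(8, "software", "re-use software IP", False),
]


def raises_abstraction(option: BlockOption) -> bool:
    """Per the article, every option except manual implementation qualifies."""
    return not option.manual


raised = [o.number for o in OPTIONS if raises_abstraction(o)]
print(raised)       # [1, 3, 4, 5, 6, 8]
print(len(raised))  # 6
```

Six of the eight entries pass the check, which matches the count given when Figure 1 is introduced.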

State-of-the-art design today: IP integration

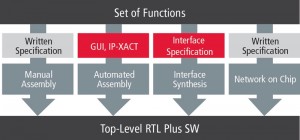

Now facing a “sea of functions”, the other step in the design flow centers on IP integration. Here, users have four options to connect all the blocks together, as shown in Figure 2, regardless of whether they were re-used or built as described above. First, users can connect blocks manually.

Second, they can automatically assemble the blocks using auto-assembly front ends like the ARM Socrates tool with interconnects that are auto-generated by tools such as ARM AMBA Designer, Sonics, or Arteris. One can call that design “correct by construction”, but automating the integration of these designs with appropriate verification environments is becoming more and more important. Third, users can synthesize protocols for the interconnect from a higher level protocol description and, finally, they can use a fully programmable NoC that determines connections completely at runtime.

Figure 2 Four IP assembly options

As with IP creation, with the exception of full manual assembly, all these methods can be considered abstractions above pure RTL coding. Although it took 20 years to reach the point where each of these options is in common use, the industry has indeed moved up in abstraction for both IP creation and IP assembly. However, this was achieved in a more fragmented way than some may have expected. A universal executable specification from which everything can be derived simply has not emerged, which arguably was one of the flaws that contributed to VCC’s demise.
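A rough way to picture this fragmentation is to count the pairings of creation and assembly options. The Python sketch below assumes that any of the eight creation options can be combined with any of the four assembly options, which is how the text describes them; the short labels are shorthand invented for this illustration, and the count is not a measurement of real design flows.

```python
from itertools import product

# Label -> whether the option raises abstraction above manual coding.
CREATION = {
    "re-use hardware IP": True,
    "manual RTL": False,
    "HLS": True,
    "extensible processor": True,
    "ASIP": True,
    "software automation": True,
    "manual software": False,
    "re-use software IP": True,
}

ASSEMBLY = {
    "manual connection": False,
    "auto-assembly with generated interconnect": True,
    "protocol synthesis": True,
    "programmable NoC": True,
}

# Every (creation, assembly) pairing for a single block.
pairings = list(product(CREATION, ASSEMBLY))

# Pairings in which both steps work above manual coding.
fully_abstract = [(c, a) for c, a in pairings if CREATION[c] and ASSEMBLY[a]]

print(len(pairings))        # 32
print(len(fully_abstract))  # 18
```

Even for a single block there are 32 distinct paths, 18 of which stay above manual coding in both steps. No single one of them plays the role that one universal executable specification was once expected to play.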

2016 and beyond

Looking forward into 2016 and beyond, it is first important to understand the market backdrop. Our future will have billions of devices as edge nodes that sense data of every kind, from heartbeats to temperatures, pressure, and location, to name just a few. The number of design starts is still predicted to increase in the more advanced nodes below 90nm. However, not all designs require that complexity or the drive to smaller geometry nodes.

There is room for smaller designs at the edge nodes that might not require the latest bleeding-edge technologies. These devices will always be connected to gateways aggregating data and sending it over complex networks into clouds, where on equally complex servers data can be analyzed in every way possible.

From a system design and verification flow perspective, flows are bifurcating in 2016 into very complex designs – mobile, server, networking and gateways for what is “buzz-worded” the internet of things (IoT) – and less complex designs for edge nodes, such as the sensors in our fitness trackers.

In addition, design flows will simply become more application-specific. For example, in sensor designs for IoT edge nodes, analog/mixed-signal capabilities and the specific need to address functional safety in mission-critical applications (automotive, military/aerospace, and healthcare) will become far more critical than the shift left that enables earlier software development, as required in mobile and IoT gateways.

Looking back to the IP creation techniques, in 2016 the balance of manual coding and HLS will further tip towards HLS as it continues to gain mainstream adoption and grows further. Re-use is already gradually moving upwards from single IP blocks to subsystems of processors combined with peripheral IP blocks and interconnects, all ready to go with software executing on them. Good examples are the Tensilica sub-systems in the area of dataplane networking and multimedia.

The balance of hardware and software is hard to predict in general terms, but development efforts and lifecycles, especially for complex chips, will let the share of software grow further. Bottom line: design flows will bifurcate into complex flows that require automation for assembly and for building verification environments, and simpler flows in which edge-node designs can be assembled in a point-and-click style from, let’s say, fewer than 20 IP components and sub-systems.

Horizontal and vertical flow integration

Functional verification has touched hardware-software systems in many ways, combining dynamic engines for RTL simulation, emulation, and FPGA-based prototyping with formal verification, which, like HLS, has become mainstream. Horizontal integration of these engines was kicked off by Cadence in 2011, with the introduction of the System Development Suite.

Market window pressures, exacerbated by brand exposure for quality and reliability, will further increase the need for integration between the engines in 2016. Hybrid combinations of RTL engines with TLM virtual platforms will enable further shifts left, as will integration of debug, verification IP, and verification management. Coupling of these engines will further strengthen horizontal integration. In addition, the industry is approaching a pivotal point in making stimulus truly portable across all the engines. Work is under way in the Accellera Portable Stimulus Working Group (PSWG) to allow this, together with the extension of verification reuse into the post-silicon domain.

Despite this drive towards higher levels of abstraction, we will also see further vertical integration of the front-end flows with classic RTL-down implementation flows. We have already witnessed this with combinations like emulation with power estimation, as well as performance analysis with the interconnect workbench tools. There is also much more to come in the area of annotating implementation data back into the front-end flows.

Conclusion

System-level design and verification have become reality, albeit a somewhat different reality than originally assumed. Specifically, the fragmentation of flows described above, caused by complexity and software, suggests that we are still quite a way from a single description of hardware-software systems from which automation can derive the implementation, as happened at the gate level and the register transfer level in the 1980s and 1990s. The need for such a description may or may not be there. We probably won’t answer that question in 2016, but it will keep EDA interesting for years to come.

About the author

Frank Schirrmeister is group director of product marketing for the System Development Suite at Cadence Design Systems.