The principles of functional qualification

Functional logic errors remain a significant cause of project delays and re-spins. One of the main reasons is that two important aspects of verification environment quality—the ability to propagate the effect of a bug to an observable point and the ability to observe the faulty effect and thus detect the bug—cannot be analyzed or measured. The article describes tools that use a technique called mutation-based testing to achieve functional qualification and close this gap.

Functional verification consumes a significant portion of the time and resources devoted to a typical design project. As chips continue to grow in size and complexity, designers must increasingly rely on dedicated verification teams to ensure that systems fully meet their specifications.

Verification engineers have at their disposal a set of dedicated tools and methodologies for automation and quality improvement. In spite of this, functional logic errors remain a significant cause of project delays and re-spins.

One of the main reasons is that two important aspects of verification environment quality—the ability to propagate the effect of a bug to an observable point and the ability to observe the faulty effect and thus detect the bug—cannot be analyzed or measured. Existing techniques, such as functional coverage and code coverage, largely ignore these two issues, allowing functional errors to slip through verification even where there are excellent coverage scores. Existing tools simply cannot assess the overall quality of simulation-based functional verification environments.

This paper describes the fundamental aspects of functional verification that remain invisible to existing verification tools. It introduces the origins and main concepts of a technology that allows this gap to be closed: mutation-based testing. It describes how SpringSoft uses this technology to deliver the Certitude Functional Qualification System, how it seeks to fill the “quality gap” in functional verification, and how it interacts with other verification tools.

Functional verification quality

Dynamic functional verification is a specific field with specialized tools, methodologies, and measurement metrics to manage the verification of increasingly complex sets of features and their interactions. From a project perspective, the main goal of functional verification is to get to market with acceptable quality within given time and resource constraints, while avoiding costly silicon re-spins.

At the start of a design, once the system specification is available, a functional testplan is written. From this testplan, a verification environment is developed. This environment has to provide the design with the appropriate stimuli and check if the design’s behavior matches expectations. The verification environment is thus responsible for confirming that a design behaves as specified.

The current state of play

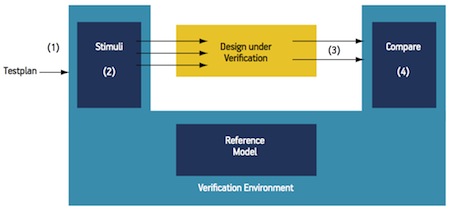

A typical functional verification environment can be decomposed into the following components (Figure 1):

- A testplan that defines the functionality to verify

- Stimuli that exercise the design to enable and test the defined functionality

- Some representation of expected operation, such as a reference model

- Comparison facilities that check the observed operation versus the expected operation

Figure 1

The four aspects of functional verification

Source: SpringSoft

If we define a bug as some unexpected behavior of the design, the verification environment must plan which behavior must be verified (testplan), activate this behavior (stimuli), propagate this behavior to an observation point (stimuli), and detect this behavior if something is “not expected” (comparison and reference model).

The quality of a functional verification environment is measured by its ability to satisfy these requirements. Having perfect activation does not help much if the detection is highly defective. Similarly, a potentially perfect detection scheme will have nothing to detect if the effects of buggy behavior are not propagated to observation points.

Code coverage determines if the verification environment activates the design code. However, it provides no information about propagation and detection abilities. Therefore, a 100% code coverage score does not measure if design functionality is correctly or completely verified. Consider the simple case in which the detection part of the verification environment is replaced with the equivalent of “test=pass.” The code coverage score stays the same, but no verification is performed.
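The “test=pass” case can be made concrete with a small sketch. It is not taken from any real testbench: the toy saturating adder, the injected bug, the branch names, and both checkers are assumptions made purely for illustration. Coverage is recorded by hand as the set of branches the stimuli execute.

```python
# Illustrative sketch only: a toy "design" and two checkers (all names hypothetical).

def design(a, b, covered):
    """4-bit saturating adder; `covered` records which branches executed."""
    total = a + b
    if total > 15:
        covered.add("saturate")
        return 15
    covered.add("pass_through")
    return total

def buggy_design(a, b, covered):
    """Same design with a bug: saturates to 14 instead of 15."""
    total = a + b
    if total > 15:
        covered.add("saturate")
        return 14
    covered.add("pass_through")
    return total

STIMULI = [(1, 2), (9, 9)]
ALL_BRANCHES = {"saturate", "pass_through"}

def real_checker(a, b, observed):
    """Compares the observed output with a reference model."""
    return observed == min(a + b, 15)

def always_pass_checker(a, b, observed):
    """The equivalent of 'test=pass': never looks at the output."""
    return True

def run(dut, checker):
    """Apply every stimulus, then report (all checks passed, branch coverage)."""
    covered = set()
    results = [checker(a, b, dut(a, b, covered)) for a, b in STIMULI]
    return all(results), len(covered) / len(ALL_BRANCHES)

print(run(buggy_design, real_checker))         # bug is flagged, coverage is full
print(run(buggy_design, always_pass_checker))  # bug is missed, coverage is still full
```

Both runs report full branch coverage, yet only the run with the real checker fails on the buggy design. The coverage figure alone cannot tell the two environments apart.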

Functional coverage encompasses a range of techniques that can be generalized as determining whether all the important areas of functionality have been exercised by the stimuli. In this case, “important areas of functionality” are typically represented by points in the functional state space of the design or critical operational sequences. Although functional coverage is an important measure, it provides no means of establishing whether the verification environment detects unexpected or inappropriate operations. The result is a metric that provides good feedback on how well the stimuli cover the operational universe described in the functional specification, but is a poor measure of the quality and completeness of the verification environment.

Clearly, there is a lack of adequate tools and metrics to track the progress of verification. Current techniques provide useful but incomplete data to help engineers decide if the performed verification is sufficient. Indeed, determining when to stop verification remains a key challenge. How can a verification team know when to stop when there is no comprehensive, objective measure that considers all three portions of the process—activation, propagation, and detection?

Mutation-based testing

Functional qualification exhaustively analyzes the propagation and detection capacities of verification environments, without which functional verification quality cannot be accurately assessed.

Mutation-based testing originated in the early 1970s in software research. The technique aims to guide software testing toward the most effective test sets possible. A “mutation” is an artificial modification in the tested program, induced by a fault operator. The Certitude system uses the term “fault” to describe mutations in RTL designs.

A mutation is a behavioral modification; it changes the behavior of the tested program. The test set is then modified in order to detect this behavior change. When the test set detects all the induced mutations (or “kills the mutants” in mutation-based nomenclature), the test set is said to be “mutation-adequate.” Several theoretical constructs and hypotheses have been defined to support mutation-based testing.
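As a minimal sketch of these ideas in software terms, the example below mutates a two-input maximum function by swapping its comparison operator. The function, the three mutants, and both test sets are hypothetical and chosen only to show a mutant surviving one test set and being killed by an augmented one.

```python
import operator

def make_max_of(compare):
    """Build a max-of-two function around the given comparison."""
    def max_of(a, b):
        return a if compare(a, b) else b
    return max_of

# The original program uses "greater than".
original = make_max_of(operator.gt)

# Each mutant swaps the comparison for another one (a hypothetical fault operator).
mutants = {
    "gt->lt": make_max_of(operator.lt),
    "gt->eq": make_max_of(operator.eq),
    "gt->ne": make_max_of(operator.ne),
}

# A test is (inputs, expected output).
weak_tests = [((1, 2), 2)]
strong_tests = weak_tests + [((2, 1), 2)]

def killed(mutant, tests):
    """A mutant is killed if at least one test observes a wrong output."""
    return any(mutant(*args) != expected for args, expected in tests)

def surviving(tests):
    """Names of the mutants that no test in the set kills."""
    return sorted(name for name, m in mutants.items() if not killed(m, tests))

print(surviving(weak_tests))    # one mutant survives the single-test set
print(surviving(strong_tests))  # none survive: this set is mutation-adequate
```

The surviving mutant points directly at the missing test: the first test set never presents a case in which the first argument is the larger one.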

One of the basic assumptions of mutation-based testing is that “if the [program] contains an error, it is likely that there is a mutant that can only be killed by a testcase that also detects this error” [1]. A test set that is mutation-adequate is better at finding bugs than one that is not [2]. Mutation-based testing therefore has two uses:

- It can assess/measure the effectiveness of a test set to determine how good it is at finding bugs.

- It can help in the construction of an effective test set by providing guidance on what has to be modified and/or augmented to find more bugs.

Significant research continues to concentrate on the identification of the most effective group of fault types [3]. Research also focuses on techniques aimed at optimizing the performance of this testing methodology. The optimization techniques that have been developed include selective mutation [4], randomly selected mutation [5], and constrained mutation [6].
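In broad strokes, two of these optimizations reduce cost by qualifying fewer faults: selective mutation restricts the set of fault operators, and randomly selected mutation samples a fraction of all candidate faults. The sketch below illustrates only that general idea; the fault list, the operator names, and the helper functions are hypothetical and are not drawn from the cited papers or from any tool.

```python
import random

# Hypothetical candidate faults: (fault operator, source line) pairs.
CANDIDATES = [
    ("negate_condition", 10), ("negate_condition", 42),
    ("swap_operator", 11), ("swap_operator", 17), ("swap_operator", 58),
    ("stuck_at_zero", 12), ("stuck_at_zero", 30), ("stuck_at_zero", 31),
]

def selective(candidates, keep_operators):
    """Selective mutation: keep only faults produced by a chosen subset of operators."""
    return [c for c in candidates if c[0] in keep_operators]

def random_subset(candidates, fraction, seed=0):
    """Randomly selected mutation: keep a random fraction of the candidate faults."""
    count = max(1, round(len(candidates) * fraction))
    # A seeded generator makes the sample reproducible from run to run.
    return random.Random(seed).sample(candidates, count)

print(len(selective(CANDIDATES, {"negate_condition", "swap_operator"})))
print(len(random_subset(CANDIDATES, 0.25)))
```

Either subset is cheaper to simulate than the full list, at the price of a less complete picture of the test set.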

Certitude

The Certitude functional qualification technology was created using the principles of mutation-based testing and the knowledge acquired through years of experimentation in this field and in digital logic verification. It has been production-proven on numerous functional verification projects with large semiconductor and systems manufacturers. The generic fault model, adapted to digital logic, has been refined and tested in extreme situations, resulting in the Certitude fault model. Dedicated algorithms have been developed and implemented to improve performance when mutation-based methodologies are used to measure and improve functional verification.

The basic principle of injecting faults into a design in order to check the quality of certain parts of the verification environment is known to verification engineers. Verifiers occasionally resort to this technique when they have a doubt about their test bench and there is no other way to obtain feedback. In this case of handcrafted, mutation-based testing, the checking is limited to a very specific area of the verification environment that concerns the verification engineer.

Expanding this manual approach beyond a small piece of code would be impractical. By automating this operation, Certitude enables the use of mutation-based analysis as an objective and exhaustive way to analyze, measure, and improve the quality of functional verification environments for complex designs.

Certitude provides detailed information on the activation, propagation, and detection capabilities of verification environments, identifying significant weaknesses and holes that have gone unnoticed by classical coverage techniques. The analysis of the faults that do not propagate or are not detected by the verification environment points to weaknesses in the stimuli, the observability and the checkers.
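The distinction between faults that are not activated, not propagated, and not detected can be sketched with a toy model. This is an illustration under stated assumptions, not a description of how Certitude works internally: the design, the four fault sites, the single stimulus, and the checker (which deliberately ignores the parity output) are all hypothetical.

```python
# Toy model (hypothetical): classify injected faults by how far their effect travels.

def dut(sel, a, b, fault=None, executed=None):
    """Small design with four fault sites; `fault` names the site to corrupt."""
    executed = executed if executed is not None else set()
    if sel:
        executed.add("add")
        y = (a - b) if fault == "add" else (a + b)
    else:
        executed.add("and")
        y = (a | b) if fault == "and" else (a & b)
    executed.add("out")
    if fault == "out":
        y = 0                      # output forced to zero
    executed.add("parity")
    p = y % 2
    if fault == "parity":
        p = 1 - p                  # parity bit inverted
    return {"y": y, "p": p}

STIMULI = [(1, 3, 0)]              # a single test: sel=1, a=3, b=0

def checker(stim, outputs):
    """Compares only `y` with the expected value; the parity output is never checked."""
    sel, a, b = stim
    expected_y = a + b if sel else a & b
    return outputs["y"] == expected_y

def classify(fault):
    activated = propagated = detected = False
    for stim in STIMULI:
        executed = set()
        good = dut(*stim)
        bad = dut(*stim, fault=fault, executed=executed)
        activated |= fault in executed        # faulty code was exercised
        propagated |= bad != good             # some output differs from the fault-free run
        detected |= not checker(stim, bad)    # the environment reported a failure
    if not activated:
        return "non-activated"
    if not propagated:
        return "non-propagated"
    if not detected:
        return "non-detected"
    return "detected"

for site in ("and", "add", "parity", "out"):
    print(site, classify(site))
```

In this model each result suggests a different remedy: the non-activated fault calls for a stimulus with `sel=0`, the non-propagated fault for operands where addition and subtraction differ, and the non-detected fault for a checker that also compares the parity output.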

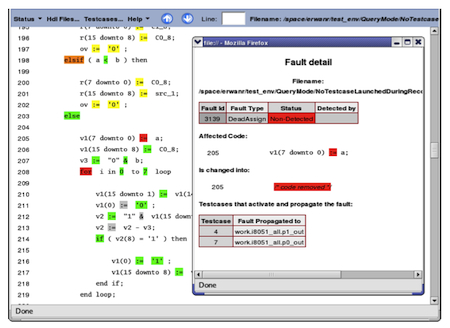

Certitude enables users to efficiently locate weaknesses and bugs in the verification environment and provides detailed feedback to help correct them. An intuitive, easy-to-use HTML report gives complete and flexible access to all results of the analysis (Figure 2). The report shows where faults have been injected in the HDL code and the status of these faults, and it provides easy access to details about any given fault. The original HDL code is presented with colorized links indicating where faults have been qualified by Certitude. Usability is enhanced by a Tcl shell interface.

Figure 2

Example of Certitude HTML report

Source: SpringSoft

Certitude is tightly integrated with the most common industry simulators. It does not require modification to the organization or execution of the user’s existing verification environment. It is fully compatible with current verification methodologies such as constrained random stimulus generation and assertions.

Bibliography

[1] A.J. Offutt. “Investigations of the Software Testing Coupling Effect”. ACM Trans Soft Eng and Meth, Vol 1, No 1, pp 5-20, 1992.

[2] A.J. Offutt and R.H. Untch. “Mutation 2000: Uniting the Orthogonal”. Mutation 2000: Mutation Testing in the Twentieth and the Twenty First Centuries, pp 45-55, San Jose, CA, 2000.

[3] M. Mortensen and R.T. Alexander. “An Approach for Adequate Testing of Aspect Programs”. 2005 Workshop on Testing Aspect Oriented Programs (held in conjunction with AOSD 2005), 2005.

[4] A.J. Offutt, G. Rothermel and C. Zapf. “An Experimental Evaluation of Selective Mutation”. 15th International Conference on Software Engineering, pp 100-107, Baltimore, MD, 1993.

[5] A.T. Acree, T.A. Budd, R.A. DeMillo, R.J. Lipton, and F.G. Sayward. “Mutation Analysis”. Technical Report GIT ICS 79/08, Georgia Institute of Technology, Atlanta GA, 1979.

[6] A.P. Mathur. “Performance, Effectiveness and Reliability Issues in Software Testing”. 15th Annual International Computer Software and Applications Conference, pp 604-605, Tokyo, Japan, 1991.

[7] R.A. DeMillo, R.J. Lipton and F.J. Sayward. “Hints on Test Data Selection: Help for the Practicing Programmer”. IEEE Computer, 11(4): pp 34-43, 1978.
