A matter of timing

We talked to Mentor Graphics CEO Wally Rhines about the solutions that already exist to combat increasing design complexity.

By 2018, the semiconductor industry will be designing chips with 40 billion transistors and between five and 10 billion gates. By 2025, it will be working with more than 100 billion transistors. It seems like only yesterday that engineers were scratching their heads with both admiration and confusion at the first one-billion-transistor designs, yet a mere eight years from now, we will need to achieve improvements in design efficiency of at least an order of magnitude—if not greater—to deal with still more complexity.

That is the view of Wally Rhines, CEO of Mentor Graphics, as he outlined it at his company’s recent user group meeting in Santa Clara. And, as the leader of one of the ‘big three’ EDA vendors, Rhines also had some answers for the User2User (U2U) audience. You see, 40 billion transistors by 2018 should actually arrive bang on schedule.

“The adoption of EDA tools is actually a very slow process,” Rhines explained. “If you look at the time from when a good solid production version of some new EDA tool or methodology hits the market to when it becomes mainstream, it’s also about eight years.”

His U2U address went on to consider four areas where minimum 10X improvements are necessary:

- system-level (ESL) design creation;

- functional verification;

- physical design and verification; and

- embedded software.

The challenges each of these stages represents today will be familiar to any project manager.

When one talks about the rate at which NREs are escalating for application-specific devices and system-on-chips (SoCs), the mantra, “It’s the software, stupid” is never far away. It is now commonplace for the number of software engineers to outnumber their hardware counterparts on major designs.

Meanwhile, as Rhines himself later noted, ESL has already become a prerequisite for all leading semiconductor companies rather than a competence confined largely to Europe and Japan.

“The Europeans, for their part, tended to be ESL pioneers because their electronics industry has been driven more by systems companies and system expertise as opposed to the US industry which has developed out of component companies moving upward,” he said.

One obvious touchstone here would be the way in which Germany’s Infineon Technologies and NXP Semiconductors of the Netherlands were born out of larger conglomerates, Siemens and Philips respectively.

“What’s happening now is that an SoC is becoming a true system and the US companies that used to design chips now find they are also designing systems. To do that, you have to go to the system level,” said Rhines.

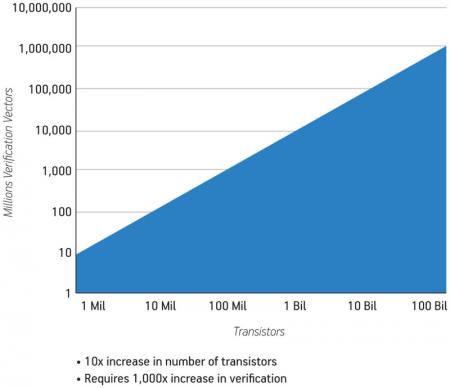

And then there is verification. It was Rhines himself who raised the potential threat posed by ‘endless’ verification in a DVCon speech only two years ago. At U2U he presented a forecast in which a 10X increase in transistor count requires a 1,000X improvement in the verification process (Figure 1).

Figure 1. The verification gap
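As a rough illustration of what the Figure 1 forecast implies, the short sketch below works through the arithmetic. It assumes that verification effort scales as a simple power law of transistor count. That is our simplifying assumption for illustration, not something Rhines stated, and the function names are hypothetical.

```python
import math


def implied_exponent(size_ratio: float, effort_ratio: float) -> float:
    """Return k such that effort_ratio == size_ratio ** k.

    Assumes (for illustration only) a power-law relationship between
    transistor count and verification effort.
    """
    return math.log(effort_ratio) / math.log(size_ratio)


def projected_effort_ratio(size_ratio: float, exponent: float) -> float:
    """Project the growth in verification effort for a given growth in size."""
    return size_ratio ** exponent


# Figures quoted from the forecast: 10X transistors -> 1,000X verification.
k = implied_exponent(10, 1000)

# Under the same assumed power law, what would merely doubling the
# transistor count imply for verification effort?
doubling = projected_effort_ratio(2, k)

print(f"implied exponent: {k:.2f}")
print(f"effort growth for a 2X design: {doubling:.1f}X")
```

Under that assumption the exponent comes out at roughly three, so even a doubling in design size would imply about an eightfold increase in verification effort. This is one way to see why incremental tool improvements alone would not close the gap.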

Add to that the need for new place and route strategies, largely required to achieve manufacturability on submicron processes (though they tend to occur every two or three process nodes anyway), and you can see why many engineers might ask themselves, “How on earth do we get there?” Rhines’ answer though—going back to that eight-year adoption pattern—was that in many respects we are already there. The tools exist today.

He explained that at the system level, it is possible to raise abstraction towards the transaction-level, to work with models on that basis and exploit a variety of associated design and modeling standards already.

In the embedded software domain, a similar pattern also emerges. Re-usable blocks and open standards are obviating the bespoke driver and middleware effort once required.

“I think the embedded software development and verification business had for some time been much like electronics design was in the 1960s and 1970s,” Rhines said later. “There was a lot of unique code. Every chip had its own drivers and protocol stacks and you redeveloped them for each processor. There was very little reuse, very little automated verification, very little standardization. There were no standard operating systems, except for PCs. Now you have Linux. You have Android. You have MeeGo. A whole slew of available standard operating systems.

“It’s standardization, reuse and automation that are making that embedded software investment more affordable because they have to. Otherwise, every man, woman and child becomes an independent software developer to get it all done.”

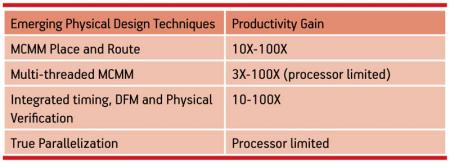

And as Figure 2 and Figure 3 show, Rhines broadly quantified the efficiencies available from a range of existing techniques in verification and physical design. In short, his view is that the future is not merely bright, it is known.

However, the question then arises: why does it take so long for companies to adopt these technologies, given the pressure to pack more transistors onto each generation of a design, to integrate more functionality within an SoC worthy of the name, and to continue to drive down the cost-per-function while driving up volumes? The answer appears to be a combination of human nature and naked economics.

“In general, people do not adopt new methodologies until they have to. If they can get the job done with the methodology they have in place, they will do so,” said Rhines. “We have to maintain continuous contact with the customer so that when the time comes that the existing methodology breaks, they have one they can migrate to.

“Even then it’s painful. There’s training. There’s the broader process of understanding a new tool. There’s infrastructure, models, libraries. And a really important fact here is that the time when a methodology breaks is never ideal. It’s likely that the user will already be behind schedule because they have been trying to work with something that can’t do the job.”

Rhines added that this perennial state of affairs has possibly had more influence on tool adoption and strategies in recent times than the global recession has.

“You go through economic cycles but interestingly the amounts companies spend on EDA don’t vary that much because the number of designers stays relatively constant,” said Rhines. “When there’s a recession, you shut down manufacturing. You might lay off administration and marketing staff. But you keep your designers designing because you know some day that recession is going to be over and the one thing you have to have then are products—everything else you can start back up.”

A further factor is the time it takes not merely to introduce a new tool to market but for it to propagate its way through a company and then the wider design community.

“The initial money spent on the software may not be that much. If you’re a start-up, we offer bargain-level prices to get you started and those increase as you grow and are more able to pay the normal price. But even with an established user the initial outlay might not be that much,” Rhines explained.

“In early usage, you don’t run that many copies. There’s a period when you’re getting the infrastructure in place, doing a first design with the new methodology and working out bugs. Then there’s the question of do people like it.

“Ultimately, what you get is something that develops, in a way, like nuclear fission. One design group might talk to another design group and then that gets them interested in the tool, and that process repeats itself until all of a sudden everyone wants to adopt the software and you have this massive ramp in demand. Then, with start-ups, even if one company fails, the designers will go somewhere else and take their preferences with them, so that adds to the process as well.”

Rhines does nevertheless note that, in the immediate short term, the industry, including his own company, is still playing its cards close to its chest. He cited recent reports of allocation and tightness in the supply chain despite the increasing signs of recovery.

“There are a lot of companies looking to see what the sustainable numbers will be,” said Rhines, considering contrasts between different silicon markets and specific reports of microcontroller shortages.

“Semiconductor companies that sold into the wireless handset market or into MP3 players experienced an almost unprecedented drop caused by a total liquidation of demand throughout the supply chain. They are currently experiencing strong demand as the supply chain is rebuilt but their concerns are largely about how strong the overall consumer spending picture is to make that resurgence sustainable.

“Then the companies in the microcontroller industry have a broader range of things to look at; they touch on a lot of basic areas of the global economy. For them, sales in the automotive space have been weak, industrial sales have been relatively weak and then they have other markets beyond that. And, on top of that, you have to put another inventory swing.

“So, they have even greater uncertainty as to what will be sustainable through the recovery. Demand might be growing, but their customers are not yet hiring people or making big bets on growth in the economy. They’re delaying those commitments as long as possible which means that they’re keeping supply much tighter than the consumer electronics market.”

Mentor itself recorded growth of 2% in 2009. “Even though Mentor was the only EDA company that, in the calendar year just ended, grew, we didn’t grow that much and we’re reluctant to add substantial increased expense. We’re certainly being cautious in our spending until we also see a more sustainable strength across our customer base,” said Rhines.

So where might those more positive economic signs come from? Tools for next-generation design appear to be available, there is a story to tell about them, and there is a timescale for their adoption (albeit an apparently more leisurely one than the EDA vendors might like).

To finish on a positive note, some green shoots might be emerging in the start-up sector.

“The fabless semiconductor industry has been relatively weak for the last two years, not surprisingly,” said Rhines. “But there are still a significant number of start-ups being funded. The actual rate in 2008 was within 20% of the average for the decade, despite the fact that the last part of the year was a disaster. And 2009 was also down but companies were still being formed.

“Something that’s particularly interesting, though, is that a lot of start-ups are being formed in the emerging economies that aren’t as traceable as the venture capital-funded ones in the US, Europe and Israel. There are hundreds of companies in China with negligible revenue right now, but which have the potential over time, because of their domestic market, to become significant to the business.”