Maximizing the benefits of continuous integration with simulation

Embedded systems developers are turning to agile techniques and continuous integration. To make these processes possible, you need an environment that supports easy access to virtual prototypes throughout the life cycle.

Software is becoming more important than ever in embedded systems. It offers an opportunity for competitive differentiation and can significantly increase revenue and bring it in sooner. Companies are often unwilling to wait a year or two for new or updated software, so there is growing pressure to release faster.

The way developers work is evolving fast. Market observation shows that new practices such as agile development, continuous integration, continuous deployment and cross-functional teams are being established for the development of embedded software. Embedded systems development is, however, very different from developing a web or IT-based application. Target hardware does not always lend itself to these new development methodologies, but there are approaches that can be adapted to the embedded development life cycle.

Most of the challenges fall into three categories: access, collaboration and automation. Let’s investigate the first of these: access, meaning the availability of the hardware required to move to continuous integration and testing. Continuous integration is often seen as a way to achieve faster success in embedded development, but attempting it can expose hardware limitations that make the technique difficult to deploy, and those limitations need to be addressed.

Lifecycle issues

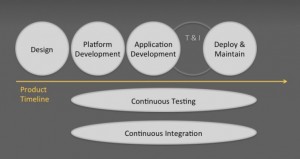

Due to market needs, the traditional lifecycle – design, platform development, application development, test and integration, deployment and maintenance – is changing. Test and integration is now a continuous and ongoing activity that needs to happen throughout the product lifecycle: it occurs during the development of a product and after deployment when the product is in the maintenance phase.

Figure 1 Product life cycle and deployment testing

Software is also often updated in deployed products, so it is continuously tested and integrated. As a result, the traditional post-development test and integration phase is changing, and in some cases disappearing. Integration testing has not been eliminated entirely, but it has largely been folded into quality assurance (QA), which is less about running integration and basic tests and more about ensuring that proper testing has been done before the product ships. There are two opposing views here. In the first, production quality is part of the continuous test and integration system, as the final step in that process, so the end goal of continuous integration is production-quality software. In the second, QA is a dedicated phase, with its own activities and equipment, that comes after and is separate from the continuous test and integration system.

Continuous integration

There are a number of basic drivers behind continuous testing and integration. The technique can provide faster feedback to developers; allow the system to be built and tested piece by piece, avoiding big-bang integration and the big failures that come with it; reduce the waiting time to test systems; find errors in the smallest possible context; and find errors that are peculiar to each level of integration.
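The idea of building and testing the system piece by piece, and reporting a failure in the smallest context that exposes it, can be sketched as a staged pipeline. This is a minimal, hypothetical Python illustration: the stage names and the `Stage` and `run_pipeline` names are assumptions made for this example, and the lambdas stand in for real test suites.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Stage:
    """One integration level, e.g. unit, subsystem or full system (hypothetical)."""
    name: str
    tests: list[Callable[[], bool]] = field(default_factory=list)


def run_pipeline(stages: list[Stage]) -> tuple[bool, Optional[str]]:
    """Run stages from smallest to largest scope.

    Returns (True, None) if everything passes, otherwise (False, name) for the
    first failing stage; later, larger stages are not run at all.
    """
    for stage in stages:
        # all() stops at the first failing test within the stage.
        if not all(test() for test in stage.tests):
            return False, stage.name
    return True, None


# Usage: the lambdas are placeholders for real test suites.
stages = [
    Stage("unit", [lambda: True, lambda: True]),
    Stage("subsystem", [lambda: False]),  # placeholder for a failing integration test
    Stage("system", [lambda: True]),
]
print(run_pipeline(stages))  # (False, 'subsystem')
```

Because the full-system stage never runs here, the failure is reported at the subsystem level, where it is cheapest to diagnose.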

Continuous integration is often difficult to achieve because access to scarce, often fragile target hardware is limited. This leads to long test latencies, which make bugs more expensive to fix. When tests take a long time to run, feedback is slow and regressions can creep back in. And when testing is limited to only two levels, typically unit tests and full-system tests, bugs at the intermediate integration levels are more likely to go uncaught.

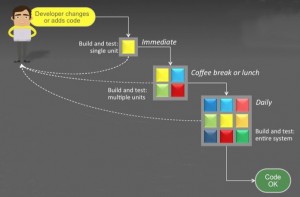

Figure 2 Typical time frames of continuous practices

Target hardware may be too costly to provide to every developer, or it may simply not be available. Developers may have to wait for access to a test lab or to specific equipment, wait for results to be reported back from a test lab, or lose time to setup and configuration changes once they get lab time. All of these cost velocity, flow of thought and momentum.

The access bottleneck also makes it impossible to scale out test and integration, and it can cause quality problems if limited hardware access leads developers to shrink their test matrix to what is possible rather than what should be tested. This in turn can cause late-stage integration problems that continuous integration could have avoided.

Hardware and software labs

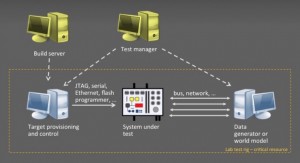

A typical hardware lab involves: a system-under-test; a target-provisioning PC that pulls code from a build server and programs and drives the system; and a data generator/real-world model (either a standard PC or specialized hardware); all coordinated by a test manager server.

Figure 3 The hardware test lab

The hardware test lab is essential for final QA. It is used to test what is shipped, and a simulator cannot replace this hardware. However, the lab has drawbacks. It is difficult and expensive to maintain. It is a limited resource, so integration tests run less often than they should. Complete automation is hard to achieve. Only a limited set of intermediate system configurations is available, because the hardware is typically rigged for system-level or unit tests rather than for intermediate steps. And the lab requires various subsystem setups that are often hard to come by. As a result, testing is often shaped by the available hardware rather than by what developers ideally want to test.

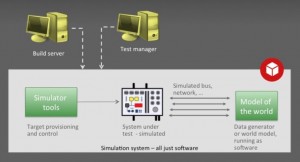

When simulating, the system-under-test and real-world model are replaced with a simulation, and the provisioning and service is replaced with simulation functions. This transforms the whole test lab into a software program, which can be run on any PC, encapsulated and shared. It turns a hardware management problem into a software management problem without changing the tests.

Figure 4 The simulation test lab
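The point that the tests themselves do not change can be illustrated with a minimal Python sketch, assuming the test is written against a small target interface. Everything here is hypothetical: `Target`, `SimulatedTarget`, `load`, `send_input` and `read_output` are invented names (not a Simics API), and a plain Python function stands in for the compiled software image.

```python
from typing import Callable, Optional, Protocol

# Stand-in for a compiled software image: maps an input sample to an output.
Firmware = Callable[[int], int]


class Target(Protocol):
    """What a test needs from a target, physical or simulated (hypothetical)."""

    def load(self, firmware: Firmware) -> None: ...
    def send_input(self, value: int) -> None: ...
    def read_output(self) -> int: ...


class SimulatedTarget:
    """Software replacement for the system-under-test, provisioning PC and data generator."""

    def __init__(self) -> None:
        self._firmware: Optional[Firmware] = None
        self._output = 0

    def load(self, firmware: Firmware) -> None:
        # Replaces "program the board" on the provisioning PC.
        self._firmware = firmware

    def send_input(self, value: int) -> None:
        # Replaces the data generator: feed a value and run the loaded software on it.
        if self._firmware is None:
            raise RuntimeError("no software loaded")
        self._output = self._firmware(value)

    def read_output(self) -> int:
        return self._output


def run_test(target: Target, firmware: Firmware) -> bool:
    """The test only uses the Target interface, so it does not care what backs it."""
    target.load(firmware)
    expected = {0: 0, 10: 20, 200: 255}  # input -> expected output
    for given, want in expected.items():
        target.send_input(given)
        if target.read_output() != want:
            return False
    return True


def saturating_double(x: int) -> int:
    """Hypothetical software under test: double the input, clamped to 255."""
    return min(2 * x, 255)


print(run_test(SimulatedTarget(), saturating_double))  # True
print(run_test(SimulatedTarget(), lambda x: 2 * x))    # False: 200 -> 400, not 255
```

A hardware-lab adapter would implement the same three methods by programming the physical board and driving the data generator, so `run_test` would not need to change.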

Simulation provides flexibility. With a simulated test lab, any PC can be any testing target and the test setup is just software. Tests can be run as soon as they are available; testing targets can be switched immediately; test setups can easily be scaled up and down to fit each integration step; old setups are easier to maintain because they are now just software; and capacity can be surged onto one particular system when needed, without leaving dedicated resources standing idle the rest of the time. Additionally, adding a lab setup is as easy as adding a new server.
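Because each test setup is just software, a test matrix can be fanned out across as many simulated targets as the available machines allow. The sketch below is a hypothetical illustration: the configuration axes and the failure rule inside `run_config` are invented placeholders for booting a simulated target and running a suite, and `max_workers` is the knob that scales the virtual lab up or down.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

# Hypothetical configuration axes for the test matrix.
BOARDS = ("board-a", "board-b")
CORES = (1, 2, 4)
OS_VERSIONS = ("v1", "v2")

Config = tuple[str, int, str]


def run_config(config: Config) -> tuple[Config, bool]:
    """Placeholder for booting a simulated target with `config` and running
    the suite. The failure rule below is invented purely for illustration."""
    _board, cores, os_version = config
    passed = not (os_version == "v1" and cores == 4)
    return config, passed


def run_matrix(workers: int) -> dict[Config, bool]:
    """Run every configuration; `workers` scales the virtual lab up or down."""
    matrix = list(product(BOARDS, CORES, OS_VERSIONS))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, whatever order they finish in.
        return dict(pool.map(run_config, matrix))


results = run_matrix(workers=4)
failures = [cfg for cfg, ok in results.items() if not ok]
print(len(results), failures)
# 12 [('board-a', 4, 'v1'), ('board-b', 4, 'v1')]
```

In practice `run_config` would most likely launch a separate simulator process, so a thread pool that simply waits on those processes is a reasonable choice; the results are the same whatever the worker count.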

World simulation

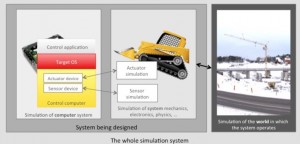

A simulation factoring in the outside world that the system interacts with is particularly important for embedded control systems. This can be achieved by simulating the control computer and running the whole software stack upon it, using the same software as on the real system. The software intended for the target system talks to simulated I/O devices using the normal drivers, and the simulated I/O devices talk to a simulation of the controlled system. This is the system that the control computer is part of, and it contains simulators for the actuators and sensors. Finally, this system simulation interfaces to a model of the world in which the system operates.

Figure 5 Building an integrated simulation system
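The layering described above (software, simulated I/O devices, system simulation with sensors and actuators, world model) can be sketched as a closed loop. This is a deliberately simplified, hypothetical Python model of a tank-level controller: the tank, the register semantics and all numbers are assumptions, the I/O device and sensor/actuator layers are collapsed into one class each, and `control_step` plays the role of the target software that would really run on a simulated processor through its normal drivers.

```python
from dataclasses import dataclass


@dataclass
class TankWorld:
    """World model: water level in a tank, in litres (all values assumed)."""
    level: float = 50.0
    outflow: float = 1.0  # litres drained per step

    def step(self, inflow: float) -> None:
        self.level = max(0.0, self.level + inflow - self.outflow)


class LevelSensor:
    """Simulated sensor device: exposes the tank level as an integer register."""

    def __init__(self, world: TankWorld) -> None:
        self.world = world

    def read_register(self) -> int:
        return int(self.world.level)  # truncated, like a coarse ADC reading


class ValveActuator:
    """Simulated actuator device: writing 1 opens the inlet valve, 0 closes it."""

    FLOW_WHEN_OPEN = 3.0  # litres per step

    def __init__(self) -> None:
        self.register = 0

    def write_register(self, value: int) -> None:
        self.register = value

    def flow(self) -> float:
        return self.FLOW_WHEN_OPEN if self.register == 1 else 0.0


def control_step(sensor: LevelSensor, valve: ValveActuator, setpoint: int) -> None:
    """Stand-in for the target software: it only sees device registers."""
    level = sensor.read_register()
    valve.write_register(1 if level < setpoint else 0)


def simulate(steps: int, setpoint: int = 40) -> list[int]:
    world = TankWorld()
    sensor = LevelSensor(world)
    valve = ValveActuator()
    readings = []
    for _ in range(steps):
        control_step(sensor, valve, setpoint)  # simulated computer runs the software
        world.step(valve.flow())               # actuator output drives the world model
        readings.append(sensor.read_register())
    return readings


levels = simulate(50)
print(levels[:12])  # [49, 48, 47, 46, 45, 44, 43, 42, 41, 40, 39, 41]
```

With these assumptions the level settles into a 39 to 41 litre band around the setpoint of 40, which a test can check directly without any physical tank.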

In fact, there could be dozens of system and world models, all interacting with each other and with the simulated computer system. In a typical mechanical system, the system and environment simulations already exist as part of a model-based or simulation-based workflow. In a networking system, the world model tends to be the only model, as no mechanical system is involved.

Simulating the control computer board is certainly the hardest part of solving the access problem, as it requires simulating the processor, I/O devices, storage and networking, among other functions. Once that is done, however, it is easy to add multiple boards to a single simulation setup, which makes the approach well suited to networked, rack-mounted and distributed systems. The simulator also provides the automation features needed to build the virtual lab, which is more than just hardware; it is hardware under simulation control.
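Adding more boards to one simulation setup can be illustrated with a small, hypothetical Python model in which several simulated boards pass a token around a ring over a simulated network, stepped in lockstep. `Board`, `SimulatedNetwork` and the one-step link latency are assumptions for this sketch, not features of any particular simulator; the point is that going from 4 boards to 64 is a change of one argument, and that every run is deterministic.

```python
from collections import deque


class Board:
    """Hypothetical simulated board running a tiny 'forward the token' program."""

    def __init__(self, board_id: int, n_boards: int) -> None:
        self.next_id = (board_id + 1) % n_boards  # ring topology
        self.inbox: deque[str] = deque()
        self.tokens_seen = 0

    def step(self) -> list[tuple[int, str]]:
        """Handle at most one message; return (destination, payload) pairs to send."""
        if not self.inbox:
            return []
        payload = self.inbox.popleft()
        self.tokens_seen += 1
        return [(self.next_id, payload)]


class SimulatedNetwork:
    """Several boards in one simulation, advanced in lockstep."""

    def __init__(self, n_boards: int) -> None:
        self.boards = [Board(i, n_boards) for i in range(n_boards)]

    def run(self, steps: int) -> None:
        for _ in range(steps):
            # Step every board first, then deliver: a message sent in one
            # step is only seen by its receiver in the next step.
            outgoing = []
            for board in self.boards:
                outgoing.extend(board.step())
            for dest, payload in outgoing:
                self.boards[dest].inbox.append(payload)


net = SimulatedNetwork(4)
net.boards[0].inbox.append("token")
net.run(8)
print([b.tokens_seen for b in net.boards])  # [2, 2, 2, 2]
```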

Overall, simulation provides a solution that is more stable, dependable and flexible. Augmenting physical hardware with a simulation-based test lab makes it easy to equip developers with the systems they need, both for spontaneous testing and for testing as part of continuous integration. It saves time, because there is no wait for access to hardware, and prepared setups and configurations can be used immediately. Limits on lab configurations are removed, so developers can test what they really need to test and obtain faster feedback. It is also possible to increase quality and scale out testing without necessarily increasing costs. Late-stage integration bugs and emergencies can be reduced, helping projects and products to be delivered on time and on budget.

Access to physical hardware has traditionally limited the effectiveness of continuous integration and testing, but technology such as Simics can enable new software practices, because it delivers much greater availability of target systems and high flexibility when continuously testing and integrating embedded system software.

About the authors

Jakob Engblom is product line manager for Simics at Wind River and Eva Skoglund is senior marketing manager for Simics at Wind River.