Cache-coherency checks call on portable stimulus

Portable stimulus and formal verification provide the means to handle the challenge of verifying cache-coherent SoC interconnects.

Of all the verification headaches that face the verification engineer, cache coherency lies close to the head of the list. It’s not just one complex state machine, it’s a bunch of state machines. And in the era of heterogeneous computing, they are not likely to even be replicants of the same state machine.

In a February DVClub seminar organized by T&VS at locations around Europe, Cadence director of product management Tom Anderson, said in order to even capture cache-coherency bugs “a specific series of events have to happen: and all of a sudden you get the wrong value”.

Those events call for multiple cache controllers to be running at once, each with its own set of vectors. Multiprocessor software tests run on top of the virtual hardware can expose errors in cache coherency as it provides the kind of workload needed. But Anderson pointed out: “If you are running an app on top of an operating system, it is almost impossible to go back and determine if the problem is a cache-coherency bug or not.”

Software verification limits

Robert Fredieu, verification architect at Mentor Graphics, said software in itself is often designed to avoid cache-coherency problems. “True memory sharing is relatively rare outside of the operating system. Even with multicore applications that use shared data, software writers have an interest in making data exchanges rare.”

The large number of software writers creating code for ARM-based systems also tends to make them conservative in terms of how their software approaches shared memory.

Software writers used to working on machines based on x86 architectures have the benefit of strong memory ordering. ARM has typically used a more relaxed form of ordering stores to main memory from cache that allows them to proceed out of order. It means that, without the software writers taking special care about when writes are performed, programs can wind up reading the wrong values back from memory. Barrier instructions force the write queue to be emptied in order at the cost of performance.

“Barriers tend to get overused by software engineers,” Fredieu said.

As a result, software-based verification is more likely to miss situations where the write ordering is not what the hardware designers intended. “To do the tests, you need to run lots of loops and do a lot of careful synchronization to keep the processors aligned. If you don’t, you won’t see the overlaps. Plus, you need a test that’s supposed to fail [without the barrier]. Everything that works without the barrier will also work with the barrier. That is often the most difficult thing to deal with,” Fredieu explained.

Unanticipated cache behavior can also lead to performance issues, Fredieu says. Although the behavior is not incorrect from a verification point of view, it’s undesirable. It is something that scale-out computer users are encountering as their use of multicore and heterogeneous computing expands. Snoop activity and asymmetric loading of processors can result in unwanted problems. Even the physical location of a shared cache with respect to the different processors can lead to some receiving better service from the cache than others. If the processors need to synchronize at the end of a set of parallel loops, that is not going to translate into poor performance.

As Fredieu outlined, diagnosing cache issues is not easy to do with regular software. Anderson recommended a combination of formal and simulation-based techniques. “You really want to verify formally if you can,” he said. “You take the state transition diagram and transform it into assertions, then check the behaviours.”

Full-chip checks

The problem for cache-coherency verification, however, is that formal works well for individual controllers but it may still allow errors introduced at the full-chip level to go undetected. “We believe formal should be complemented by full-chip simulation to ensure that the cores are connected correctly. Lots of things can go wrong at the full-chip level. You start with intra-cluster tests and then inter-cluster. You want software that can create various concurrency scenarios.

“There are a lot of variations of what can happen on-chip. Power states introduce an additional layer of complexity. You have to make sure that as you make transitions between power states that the cache coherency remains correct. It is difficult for a human to write these tests.”

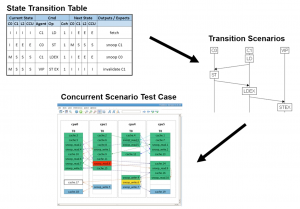

Image Portable stimulus allows multiple copies of state machines to be assembled into verification scenarios (Source: Breker)

That is where Accellera’s portable stimulus initiative comes in. “Portable stimulus may not be the best term: portable testing may be better. But the overall point is to automate the generation of tests,” said Anderson.

Cadence’s offering in this area is the Perspec System Verifier, which will ultimately be made compatible with the Accellera standard once it is published. “The language we use is similar to that in the [proposed] standard.” Anderson said. “Customers tell us they see a 10x productivity gain over writing tests by hand. There is a startup cost associated with creating the framework but once you’ve got that the productivity improvements kick in.

“You start off with a high-level model and then the tool decomposes that into use-cases,” Anderson said.

Typically, the use-cases are prepared in a spreadsheet. The data from the spreadsheet drives the generation of the different scenarios based on core stimulus packages. For example, a use-case might be three processors with overlapping memory where two of the cores are powered down and back up again. The tests check that the power cycling does not corrupt memory.

Breker Verification Systems CEO Adnan Hamid said cache coherency verification is “the poster child for portable stimulus”.

Graph-based verification

Portable stimulus makes it relatively simple to translate the state-transition models of individual cache controllers into tests that exercise a number of them at once. “The graph-based models of portable stimulus allow us to walk through the models to build a series of tests,” Hamid said. “For example, CPU1 performs a load. CPU0 stores to that same address. CPU1 then performs a load-exclusive and then the verification IP performs a store-exclusive. You build up tests like these and then get many of them to run concurrently [in the simulator]. You can traverse the graph many times with different combinations of scenarios.”

Although the combinations of scenarios leads to a big state space overall, Hamid said the numbers are tractable for EDA tools if not people. “You have maybe a million entries because not all possible combinations are legal.”

Hamid said his company’s TrekApp tool can, for an architecture such as ARM v8, perform many different cache checks, such as cache-line evictions and accesses that cross cache-line boundaries. “We can get all the prefetch buffers and queues to fill and test those as well as situations like power scaling,” he added. “Cache coherency is a deep, deep problem. But we can use algorithmic generation to create the tests that are needed.”