Using sequential equivalence to verify clock-gating strategies

Sequential equivalence checking can be used to show that a block of sequential logic produces the same output for the same inputs after it has been modified by optimization techniques such as clock gating or register re-timing.

Effective verification involves using a variety of tools throughout the design elaboration process to capture different classes of bug, ideally as early as possible in the design cycle.

Formal verification complements established techniques by providing an exhaustive analysis of the state space of a design, rather than relying on, for example, the directed test strategies of simulation. It can be used at a very early stage to catch basic bugs quickly. It’s also good at finding corner cases for which a verification engineer may not have thought to develop a test.

Formal techniques are also good at clearing away entire classes of design issue before you need to start running simulations. Automatic property checking can be applied to finite state machines. Code coverage analysis can help find unreachable parts of the design, which otherwise would be untestable. Connectivity checking can ensure, for example, that buses are wired up correctly.

Figure 1 The various apps available as part of VC Formal (Source: Synopsys)

Synopsys’s VC Formal tool is made up of a series of ‘apps’, which each address different formal verification strategies such as connectivity checking, coverage analysis, and register verification. It also includes assertion IP for standard bus protocols such as Arm AMBA, AXI, and AHP, so you don’t have to write assertions to check that such buses have been implemented properly.

Sequential equivalence checking

Sequential equivalence checking can be used to show that a block of sequential logic produces the same output for the same inputs after it has been modified by optimization techniques such as clock gating or register re-timing.

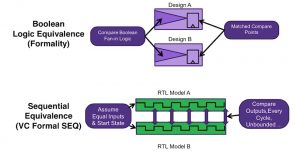

There are two main types of equivalence checks. The first checks that the functionality of the gate-level description of a design created by synthesis matches that of the RTL from which it was derived. The second checks for sequential equivalence between two RTL models, to check that if they each start from the same state with the same inputs they will both create the same outputs on every clock cycle.

Combinational vs sequential equivalence

Combinational equivalence checking is widely used to compare RTL with its synthesized netlist, and requires there to be a one-to-one mapping of registers between the two models. It works best when the state elements remain the same and the only changes are in the combinational logic, for example, through the addition of buffers.

If the state elements cannot be mapped between these two models, then combinational checking equivalence will fail. In such situations, sequential equivalence checking is a better fit, because it only requires the outputs to be equivalent.

Sequential equivalence checking is useful to check that the logical function of an RTL design hasn’t been changed when clock gating has been applied to it to reduce power.

It’s also useful to check that the ‘before’ and ‘after’ versions of the RTL are equivalent if a design has been optimized in a way that removes some of the sequential elements.

The third situation in which sequential equivalence checking is useful is when RTL has been modified, by features being added or removed, and you want to check that the original functionality works as before.

The fourth application of sequential equivalence is to check what happens if the design includes some non-resettable flip-flops. If this is the case, then each time the design is initialized those flops will be in an unknown X state. Sequential equivalence checking shows whether the design will work in the same way, independent of these X values.

Clock gating

Clock gating saves power by turning off the clock to areas of a design that are not being used on that clock cycle. It can be applied to blocks and sub-blocks, and is usually implemented using a latch or flip-flop.

Tools that check the Boolean equivalence of logic don’t have the capacity to compare a clock-gated RTL model with the original RTL. Traditional simulation is time consuming, needs someone to author a testbench, and is unlikely to be exhaustive.

Figure 2 Two approaches to equivalence checking (Source: Synopsys)

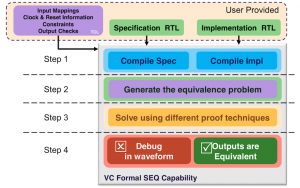

VC Formal has sequential-equivalence capabilities for verifying power-optimized RTL against its golden RTL.

Figure 3 Sequential equivalence checking flow in VC Formal (Source: Synopsys)

The flow begins with RTL for the two versions of the design. The mapping information is used by VC Formal to create assumptions that say the primary inputs are driven with the same set of values, and assertions on the primary outputs to check for equivalence.

The next step is to compile both designs, generate the equivalence problem, and then put the designs into the same known state. The VC Formal engine then works through the assertions that have been generated to check the equivalence of the primary outputs.

If any of the expected equivalence between the two sets of outputs are absent, VC Formal SEQ will show the reason for this in a waveform viewer.

Convergence

Formal techniques are powerful, but not infallible – especially given the very large state spaces that the tool can be asked to explore. VC Formal automatically creates properties based on the mappings at the output. There is no guarantee that these properties will converge (i.e. come to a conclusion) on the first run: some will be proven, some will be falsified, and some will be inconclusive.

This happens because of the complexity of some of the properties. If a property has a lot of memories, counters, and FIFOs in its cone of influence, or has a very large sequential depth, it may not converge within a practical run-time. VC Formal SEQ can help users understand why properties aren’t converging, so that they can apply more advanced techniques that will lead to convergence or, at least, put tighter boundaries on the property.

These techniques include creating internal equivalent points, black boxing, setting cut points and using abstractions.

Internal equivalence

VC Formal SEQ can break down a hard problem into smaller pieces, by creating ‘internal equivalent points’ that lie within the cone of influence of the parent proof and then creating an abstracted model.

The tool then works on these internal equivalent points, either proving or falsifying them. When all the internal equivalent points have been proven, then the parent proof is also proven.

Understanding the equivalence checking process

VC Formal SEQ works with the Verdi debug user interface, through which users can see how a design is being decomposed into internal equivalent points and watch whether these smaller elements of the design are converging or not.

The ‘orchestration view’ in this interface help users to understand the progress of convergence of internal equivalent points, and therefore which part of the proof is particularly complex. It’s then possible to use other techniques to overcome the issue. These might include turning an internal equivalent point into a constraint for the main design, and then trying to prove the internal equivalent point as a separate job. Users can also choose to cut parts of the logic out of a design, or simply put it into a black box.

The user interface provides a comprehensive view of the decomposition and proving process, all the way down to color-coding individual registers in the design based on whether they have been proven, falsified or found to be inconclusive.

Tracking the success of the sequential equivalence checking process also demands a strong sense of the complexity of the design, in terms of how many flops, latches, memories, FIFOs and counters it includes. VC Formal SEQ can generate a complexity report for both the original and power-optimized version of the design, and show the mapping between them. The tool can also generate a complexity report for individual properties.

Changing the effort level

VC Formal SEQ can apply several different levels of effort which can be adjusted on the fly. Users can also keep an eye on the likely success of a sequential equivalence job by checking system resources such as memory limits and the number of parallel jobs that are being run.

Using abstraction

Users can abstract memories, multipliers, adders, counters and other such features to simplify sequential equivalence checking. VC Formal SEQ will then automatically map these abstracted points between two designs to avoid the chances of false failures.

If a design has many uninitialized registers, users can over-constrain the environment by forcing some of them to a known value. Users can also analyse the complexity report to understand which parts of a design (such as large memories) can be restated as black boxes. Once part of a design has been hidden in a black box, VC Formal will create assertions to check whether the inputs to that black box are the same in both versions of the design. It also adds mapping constraints on the output of the black box to avoid any kind of false failures.

Signoff

VC Formal SEQ has a designer view, which offers insights into internal equivalent points but lacks the abstractions of the orchestration view. This means that a failure seen in the designer view is a true failure. VC Formal will generate counterexamples for these internal equivalent point failures.

It may be the case that not all the internal points in two versions of a design are expected to be equivalent, due to some optimizations, although the outputs are still expected to be equivalent. Users can ensure that these equivalent points are falsified, to rule out the need for over-constraint and to speed up debug for primary failures.

If some properties remain inconclusive even after applying all the advanced techniques described, users may want to sign off on them based on the bound depth. The question is, what bound depth is appropriate?

VC Formal can do a bounded coverage analysis, based on a structural code-coverage matrix such as line, condition, toggle and FSM. The maximum bound depth is then set to the property bound depth by VC Formal and these code-coverage goals, which are in the cone of influence of the property, are run by VC Formal. If all the code-coverage goals are met within the maximum bound depth, then this ensures that the bound depth reached by the property is good enough to sign off. Otherwise, users need to rethink the property.

Alternatively, VC Formal can do an over-constrained analysis based on the same code-coverage matrix. It looks at the assumptions and runs the code-coverage goals, and then generates an exclusion file. The excluded element will be annotated as such to help users understand whether the unreachable code has any constraints.

Conclusions

Formal techniques are powerful, and sequential equivalence checking is a particularly appropriate way to apply them to check that a design will work the same way after a clock-gating strategy has been applied. However, the technique is not a magic wand, and needs to be applied with care and insight if it is going to deliver the best results.

Authors

Kiran Vittal is product marketing director, static & formal verification, Synopsys.

Neelabja Dutta is staff corporate applications engineer, formal verification, Synopsys.