Emulation overcomes the five main IoT and networking verification challenges

More protocols, multibillion-gate designs, minimized power, burgeoning software and, for networking, hundreds of switch and router ports emphasize the need for scalable, virtualized emulation.

Massive verification is needed to design the products and networks that make up the Internet of Things (IoT) and networking ecosystem. These are large, complex designs. They feature huge amounts of software and must meet stringent low-power requirements. As such, they require an enormous number of verification cycles to fully exercise and debug.

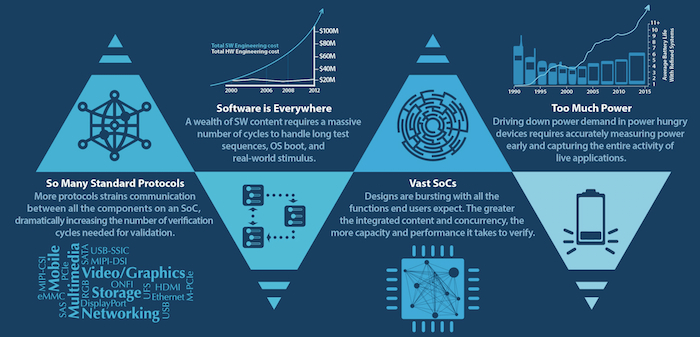

Five characteristics of IoT and network designs have the most impact on the verification task. Four are common to both: more protocols per chip, larger and more complex designs, lower power usage requirements, and more software. Networking systems then add a fifth challenge: the growing number of switch and router ports.

Only emulation has the capacity, speed, and capabilities that allow you to complete so much verification efficiently. Yet we also need to think carefully about how emulation itself should now be used. Traditional in-circuit emulation (ICE) cannot accommodate the exploding number of Ethernet ports that networking companies use in their systems. Nor is it practical for testing the multitude of interface protocols found on IoT devices.

We need to move beyond traditional ICE toward software-centric, virtual emulation.

First we’ll look at the challenges, then their solutions.

Primary challenges of IoT and networking designs

1. More protocols per chip

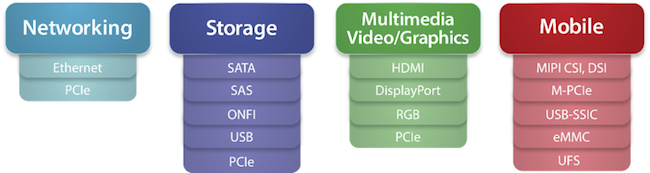

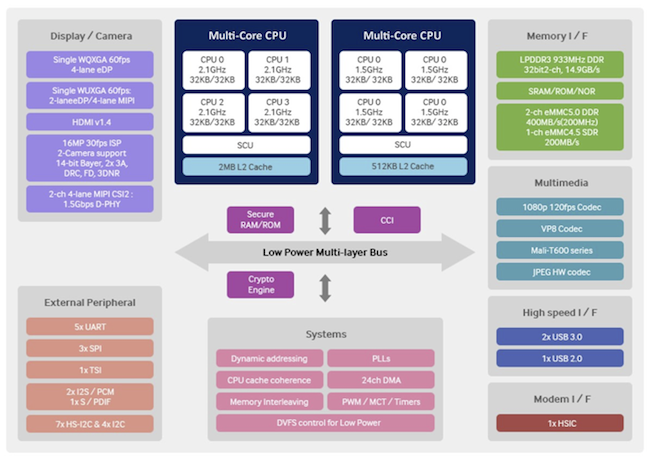

Because more applications and functions need to connect to a network at the same time, the number of protocols per device is increasing. For example, mobile phones need to connect to storage on the cloud, stream video and audio, run various kinds of apps, and send and receive phone calls and texts.

The protocols needed to do all this can be complex in themselves. But because the protocols communicate and interact so heavily with one another and with the rest of the design, they also generate many tricky scenarios and corner cases that must be checked and, often, debugged.

Cramming these protocols onto a single SoC significantly increases hardware complexity. The more protocols there are communicating internally and externally on a chip, the more verification cycles are needed to validate the design.
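To give a rough sense of how quickly protocol interactions multiply, the sketch below simply enumerates the pairwise combinations among the protocols on a chip. The protocol list is illustrative only, and counting pairs is an assumption made for this example: real corner cases also involve three-way interactions and timing, so this is a lower bound rather than a verification plan.

```python
from itertools import combinations

def interaction_pairs(protocols):
    """Return every unordered pair of protocols that could interact."""
    return list(combinations(sorted(protocols), 2))

# Illustrative protocol mix (an assumption, not taken from a real SoC).
protocols = ["Ethernet", "HDMI", "PCIe", "USB", "Wi-Fi"]
pairs = interaction_pairs(protocols)

print(f"{len(protocols)} protocols -> {len(pairs)} pairwise interactions")
for n in (5, 10, 20):
    # n protocols give n * (n - 1) / 2 pairs, so growth is quadratic.
    print(n, "protocols:", n * (n - 1) // 2, "pairs")
```

Doubling the protocol count roughly quadruples the number of pairs, which is one way to read the claim that each added protocol demands more verification cycles.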

2. Larger designs

Greater protocol integration increases complexity and size. So do a widening array of functions, multiple processors, and embedded software. Consumers want products that can handle ever more content. These devices must offer integrated digital, audio, voice, and data that is always on and always connected.

The greater the amount of integrated content, the more concurrency there will be. This results in harder-to-verify systems that can contain billions of gates. The need for us to rethink verification is self-evident.

Figure 3. More integration, multi-functionality, multi-processors, and embedded software increase complexity (Mentor Graphics)

3. Lower power consumption

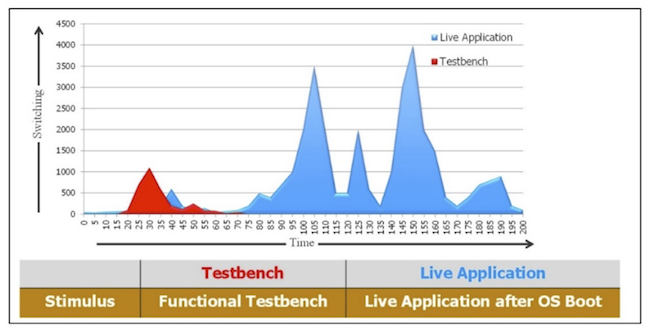

Design decisions need to consider power, area, and performance. The IoT places particular emphasis on power. This makes system-level power analysis and management key activities.

Verification solutions that provide accurate power analysis early in the flow can help guarantee that you make design decisions that do lower consumption significantly. The accuracy of such an analysis depends upon measuring power in the context of the applications that will run on the final SoC. This is where traditional testbench-based verification falls short. We need a better way of delivering analysis that matches the needs of IoT designers.

Figure 4. Digital simulation testbenches cannot capture accurate power measurements (Mentor Graphics)

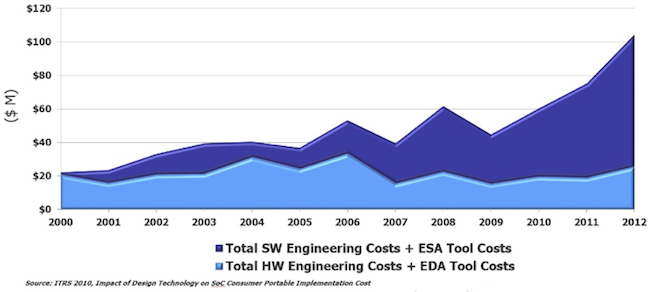

4. Greater software content

Traditional software verification running on a software simulator is losing steam. Simulation is too slow to fully stress test an SoC running billions of cycles or frames of data. If designers are entirely or even just heavily dependent on simulation, they have to compromise on functionality. They cannot fully understand what is really happening in their system or SoC because they cannot generate and isolate the corner cases that could cause a problem in the field.

Also, more software content increases SoC development costs, given the rule of thumb that a project needs between five and ten software engineers for every hardware engineer. The time your software engineers spend on verification needs to be made as efficient as possible.

Your coding team needs a verification solution that has the performance to boot the OS and run the software applications on the target hardware well before hardware prototypes are available. This solution must also have the capacity and speed to exercise the billions of cycles required to fully verify software.
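A back-of-the-envelope calculation shows why throughput dominates when billions of cycles are involved. The sketch below is only arithmetic; the workload size and both execution rates are assumptions chosen for illustration, not measured figures for any simulator or emulator.

```python
def wall_clock_seconds(cycles: int, cycles_per_second: float) -> float:
    """Time needed to execute `cycles` design clock cycles at a given rate."""
    return cycles / cycles_per_second

# All three numbers below are illustrative assumptions.
CYCLES = 5_000_000_000      # e.g. an OS boot plus an application run
SIM_HZ = 100.0              # assumed software-simulation rate for a large SoC
EMU_HZ = 1_000_000.0        # assumed emulation rate

sim_days = wall_clock_seconds(CYCLES, SIM_HZ) / 86_400
emu_hours = wall_clock_seconds(CYCLES, EMU_HZ) / 3_600
speedup = EMU_HZ / SIM_HZ

print(f"simulation: {sim_days:.1f} days")
print(f"emulation:  {emu_hours:.2f} hours")
print(f"speed-up:   {speedup:,.0f}x")
```

Under these assumed rates the same workload moves from well over a year of simulation to an afternoon on the emulator. Substituting your own measured rates gives a quick feasibility check for a given software test.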

5. Increasing network switch and router activity

The IoT is driving networking advances because consumers want more bandwidth, more multimedia, more video, and more audio streaming. Networking companies must therefore offer higher bandwidths, higher performance computing, and more content-focused applications. Ethernet and other networking standards are being extended and/or introduced to address these needs with faster networking protocols and more ports to improve network service.

For example, the enormous networking configurations required to meet demand compel developers of newer network chips to put thousands of Ethernet ports on a single SoC. It is already nearly impossible to provide connections for all of these ports in a hardware test environment. Therefore, networking companies need a way of exercising their designs in software.

Emulation-led verification for IoT Networks

Today’s hardware and software require an enormous amount of verification, and the task is getting harder, not easier. The pressure to deliver products within shortening deadlines is also intensifying. So how can emulation help you manage these pressures? Why is it becoming a ‘must have’ component of verification?

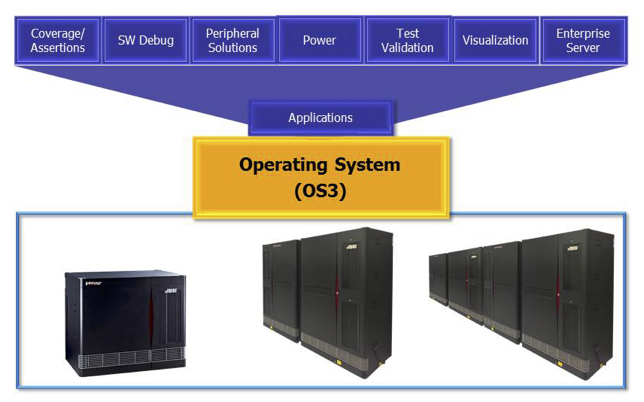

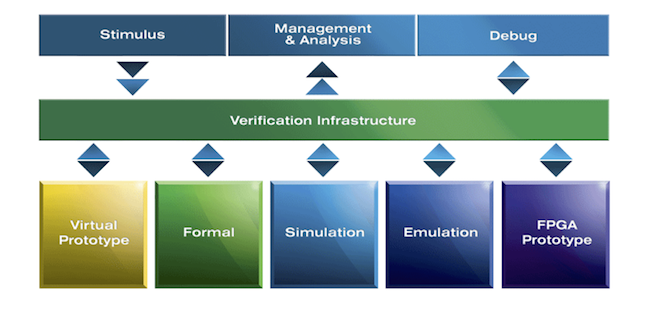

The Mentor Graphics emulation team determined that the best way to improve the verification of complex IoT and networking systems was to have its Veloce platform replicate the way IoT companies approach design. That approach involves disconnecting the hardware and operating system (OS) from the applications that run on phones and other products. It makes sense because end-users care about the apps they want to use and want them to run on whatever OS or device they have.

So, the Veloce emulation platform has been tailored to allow designers to test designs using Veloce applications in the same way. The verification applications (for example, coverage, software debug, and low power) are independent of the operating system (OS). Thus, the Veloce OS provides an interface to the emulator for Veloce verification applications or for applications developed either internally or by third parties. Because Veloce OS is compatible with any generation or model of the Veloce emulation hardware family, users benefit from seamless adoption from one platform to the next, preserving investments and supporting scalability as an owner’s performance and capacity needs increase.

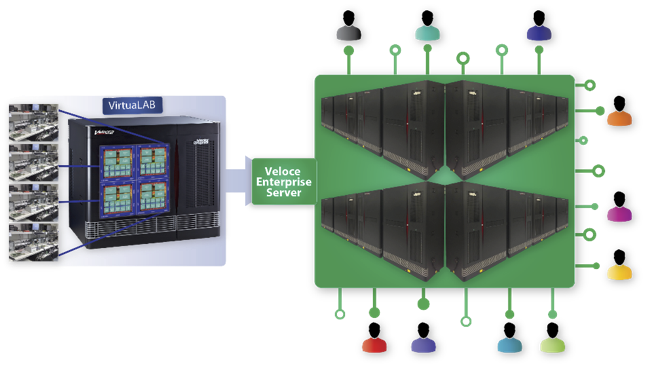

The Veloce OS also supports the Enterprise Server, which optimizes resource utilization and provides job queuing and prioritization using load-sharing management software. The Enterprise Server allows users to submit jobs from their desktops to Veloce emulation resources housed in datacenters anywhere in the world. It supports the concurrent use of Veloce emulation across multiple projects, teams, users, and use modes, and it decides where to allocate one or more projects so that the Veloce resources are used as efficiently as possible. The result is worldwide, 24/7 access to datacenter-friendly emulation capacity.
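The queuing and prioritization idea can be illustrated with a small priority queue. This is a generic sketch, not the Enterprise Server's scheduler or API: the `EmulationQueue` class, its method names, and the gate-count capacity model are all hypothetical, and real load-sharing software weighs many more factors.

```python
import heapq
import itertools
from dataclasses import dataclass, field

@dataclass(order=True)
class Job:
    priority: int                       # lower number = more urgent
    seq: int                            # submission order, breaks ties (FIFO)
    name: str = field(compare=False)
    gates: int = field(compare=False)   # emulator capacity the job needs

class EmulationQueue:
    """Hypothetical shared queue in front of one emulator's gate capacity."""

    def __init__(self, capacity_gates: int):
        self.free_gates = capacity_gates
        self._heap = []
        self._counter = itertools.count()

    def submit(self, name: str, gates: int, priority: int) -> None:
        heapq.heappush(self._heap, Job(priority, next(self._counter), name, gates))

    def dispatch(self) -> list:
        """Start jobs in priority order; smaller jobs may backfill spare capacity."""
        started, deferred = [], []
        while self._heap:
            job = heapq.heappop(self._heap)
            if job.gates <= self.free_gates:
                self.free_gates -= job.gates
                started.append(job.name)
            else:
                deferred.append(job)    # does not fit now, keep it queued
        for job in deferred:
            heapq.heappush(self._heap, job)
        return started

queue = EmulationQueue(capacity_gates=1_000_000_000)
queue.submit("nightly-regression", gates=600_000_000, priority=2)
queue.submit("power-analysis", gates=500_000_000, priority=1)
queue.submit("smoke-test", gates=300_000_000, priority=2)
started = queue.dispatch()
print(started)
```

Here the urgent power-analysis job starts first, the regression job waits because it no longer fits, and the smaller smoke test backfills the remaining capacity. Backfilling is a design choice of this sketch; a strict-priority policy would stop at the first job that does not fit.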

But all of this would be for nothing were the peripherals and protocols required to exercise a design not themselves software-based. This is why Mentor developed VirtuaLAB. It has already changed the way SoC-based emulation is performed, and has paved the way for the Enterprise Server capability, transforming the lab environment into a datacenter that requires only the emulator and workstations to execute the software versions of the protocol models. Since VirtuaLAB uses the same protocol IP and software stacks as the ICE hardware solutions, it delivers the same functionality as traditional ICE-based verification, giving users the accuracy of hardware, and the flexibility and repeatable results available with software.

Figure 7. VirtuaLAB Enterprise Server provides 24/7 global access to multiple users and projects (Mentor Graphics)

But then there are further benefits. Compared to ICE, these are substantial:

- You get better reliability because the emulation system eliminates the external hardware and cabling that often introduce faults.

- You raise productivity by leveraging an efficient multi-user environment and an ability to remotely re-configure VirtuaLAB models by merely changing their compile parameters, rather than swapping in/out a tangle of external hardware chassis and cables.

- You reduce overall cost because you can deploy reliable, low-cost workstations to execute software models rather than attaching hardware, including expensive testers.

- You lift your ROI by moving emulation out of the lab and into the datacenter, where it can be used 24/7 by multiple teams, worldwide, just like your server farm.

- You achieve higher quality results because of the greater debug visibility offered into software-based solutions and because designers have access to software protocol checkers and analyzers that are hard to use in a physical environment.

Let’s look at how the Veloce software-based environment specifically addresses the five technological challenges faced by IoT and network developers.

1a. Protocol solutions

Software solutions make it easier to get accurate, repeatable results, in part because hardware solutions can give different results even with the same stimulus: the results depend on the state in which the hardware comes up. Thus, having a software-based environment for the protocols in a design is very important. Veloce offers protocol solutions for multiple market segments with a battery of solutions that provide host/peripheral models, protocol exerciser/analyzers, and software debug connections.

2a. Large designs

As design size continues to increase, emulation capacity must keep pace. Veloce meets this challenge with a scalable emulation platform. Customers may initially find a Veloce Quattro adequate. The Quattro handles up to 256 million gates per system and up to 16 users. The next model up is the billion-gate Veloce Maximus that supports up to 64 users. After that, the Double Maximus system takes capacity to two billion gates and 128 users. All Veloce models and generations use the same OS, run the same applications, and are fully backwards-compatible. This ensures scalability and preserves existing investments as you grow your emulation capacity.
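The capacity tiers quoted above can be captured in a small lookup that picks the smallest model able to hold a design and its concurrent users. The gate and user limits come from the text; the `EmulatorModel` type and `smallest_fit` function are hypothetical names used for illustration, and a real sizing exercise would also consider memory, I/O, and utilization headroom.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class EmulatorModel:
    name: str
    max_gates: int
    max_users: int

# Limits as quoted in the text, ordered from smallest to largest.
MODELS = [
    EmulatorModel("Veloce Quattro", 256_000_000, 16),
    EmulatorModel("Veloce Maximus", 1_000_000_000, 64),
    EmulatorModel("Veloce Double Maximus", 2_000_000_000, 128),
]

def smallest_fit(design_gates: int, concurrent_users: int) -> Optional[EmulatorModel]:
    """Return the smallest model meeting both limits, or None if none does."""
    for model in MODELS:
        if design_gates <= model.max_gates and concurrent_users <= model.max_users:
            return model
    return None

choice = smallest_fit(design_gates=300_000_000, concurrent_users=8)
print(choice.name if choice else "no single system fits")
```

Because either limit can force a step up, a modest design shared by many users may need a larger system than its gate count alone suggests.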

3a. Low power

Veloce is ideal for low-power analysis because it provides the degree of accuracy that can only be achieved by running a design in the context of real applications.

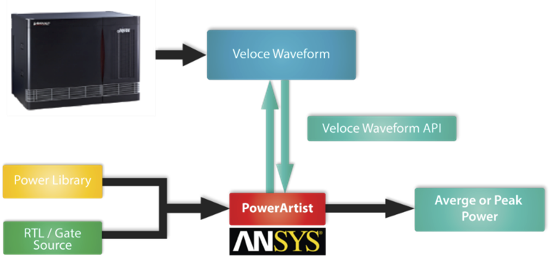

Veloce has the speed and capacity to boot the OS and run the billions of cycles required to fully exercise software applications running on the target hardware, even when that hardware has billions of gates. Mentor has created a dynamic waveform API flow for direct connections to power analysis tools that are integrated right into the Veloce emulator. Integration with industry-leading, third-party power analysis tools, such as ANSYS, allows customers to get accurate power numbers early in the design flow. Now they can make intelligent decisions about power, area, and performance.

No other emulation system offers all this, making the Veloce Power Application software much more accurate than any other low power solution.
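To illustrate why power numbers depend on the application actually running, the sketch below estimates dynamic power per time window from switching activity, using the standard approximation that each signal transition dissipates about ½·C·V² of energy. It is not the Veloce Power Application or any vendor flow: the function names, net names, capacitances, and toggle counts are invented for this example, and leakage and short-circuit power are ignored.

```python
from typing import Dict, List, Tuple

def window_power_watts(toggles: Dict[str, int], cap_farads: Dict[str, float],
                       vdd_volts: float, window_seconds: float) -> float:
    """Average dynamic power in one window: sum of 0.5*C*V^2 per toggle, over time."""
    energy_joules = sum(
        0.5 * cap_farads[net] * vdd_volts ** 2 * count
        for net, count in toggles.items()
    )
    return energy_joules / window_seconds

def peak_window(windows: List[Dict[str, int]], cap_farads: Dict[str, float],
                vdd_volts: float, window_seconds: float) -> Tuple[int, float]:
    """Return (index, watts) of the window with the highest dynamic power."""
    powers = [window_power_watts(w, cap_farads, vdd_volts, window_seconds)
              for w in windows]
    index = max(range(len(powers)), key=powers.__getitem__)
    return index, powers[index]

# Invented example data: two nets observed over two 1-microsecond windows.
CAPS = {"clk": 2e-15, "data_bus": 4e-15}           # farads per net (assumed)
ACTIVITY = [
    {"clk": 2000, "data_bus": 0},                  # idle: only the clock toggles
    {"clk": 2000, "data_bus": 500},                # application moving data
]
index, watts = peak_window(ACTIVITY, CAPS, vdd_volts=1.0, window_seconds=1e-6)
print(f"peak in window {index}: {watts * 1e6:.1f} microwatts")
```

The two windows share the same clock activity, yet the second draws more power because of the data traffic the application generates. A testbench that never reproduces that traffic would miss the peak.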

4a. Software debug

Software embedded on a chip must be verified at the same time as the hardware. Emulation is as good at debugging as simulation, and it is thousands and, for larger designs, even millions of times faster. Veloce includes a number of solutions giving software engineers what they need to debug their embedded software.

For live interactive debug with emulation, Veloce offers Virtual Probes. These provide a virtual connection to a software debugger, obviating the need for hardware JTAG probes. This not only eliminates some inherent problems with JTAG probes, but also takes advantage of the Enterprise Server. However, interactive debug uses valuable connection time on the hardware even when emulation is stopped during active debugging. Running zero hardware cycles per second while debugging an issue is an expensive way to use an emulator. Thus, interactive debugging should only be used when absolutely necessary.

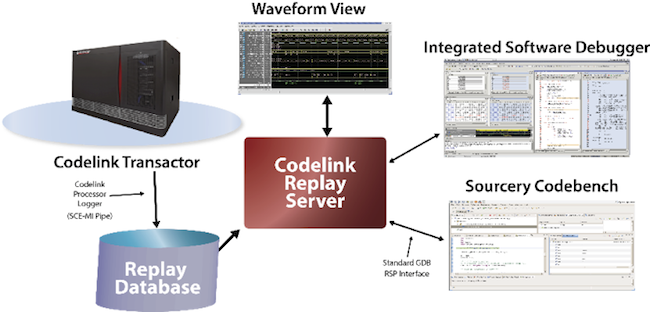

When it is not, Veloce Codelink supports offline and re-playable debugging. Codelink provides the debug capabilities that standard software developer tools provide, including correlation between the code running in a software debugger and where it appears in the hardware waveform. With Codelink software, multiple databases are generated by the emulator, and these databases are used offline for the debug session. This is a very productive environment, freeing up the emulator for other tasks and other users while software debug is performed offline.
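The idea of correlating software execution with hardware time from a recorded database can be sketched generically. The code below is not Codelink's database format or API: `ReplayDatabase`, its inputs, and the sample trace are hypothetical, and it assumes the emulator run has already produced a time-stamped program-counter trace plus a symbol table.

```python
import bisect
from typing import List, Optional, Tuple

class ReplayDatabase:
    """Hypothetical offline store: a program-counter trace plus a symbol table."""

    def __init__(self, pc_trace: List[Tuple[int, int]],
                 symbols: List[Tuple[int, str]]):
        # pc_trace holds (time_ns, pc); symbols holds (start_address, function_name).
        trace = sorted(pc_trace)
        table = sorted(symbols)
        self._times = [t for t, _ in trace]
        self._pcs = [pc for _, pc in trace]
        self._starts = [addr for addr, _ in table]
        self._names = [name for _, name in table]

    def function_at(self, time_ns: int) -> Optional[str]:
        """Which function was executing at a given waveform time?"""
        i = bisect.bisect_right(self._times, time_ns) - 1
        if i < 0:
            return None                 # before the first recorded sample
        j = bisect.bisect_right(self._starts, self._pcs[i]) - 1
        return self._names[j] if j >= 0 else None

# Invented trace for illustration only.
db = ReplayDatabase(
    pc_trace=[(0, 0x1000), (100, 0x1004), (200, 0x2000)],
    symbols=[(0x1000, "boot"), (0x2000, "main")],
)
print(db.function_at(150))
print(db.function_at(200))
```

Because every lookup runs against recorded data, any number of engineers can step back and forth through the same run without holding the emulator.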

5a. Switch and router ports

Hundred- or thousand-port designs have so many connections to hardware, all requiring cabling, that it is no longer feasible to verify network switch and router designs in an ICE environment. Furthermore, a 128-port Ethernet design, for example, can be hundreds of millions or even billions of gates in size.

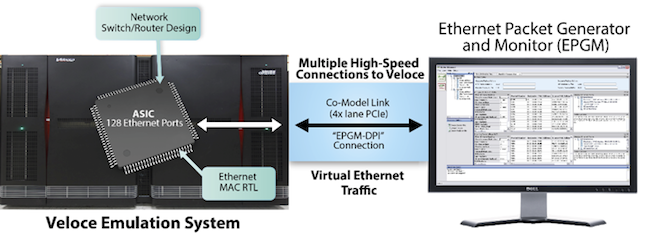

Veloce VirtuaLAB overcomes the obstacles of a hardware environment by moving most of the test environment into software, and it runs on the scalable Veloce platform that can handle up to two billion gates. Additionally, the VirtuaLAB protocol solution satisfies the key objectives that a network switch or router company has when verifying a chip: packet latency, bandwidth, packet loss, out-of-order sequences, and traffic analysis.

In a typical VirtuaLAB environment, an SoC is loaded into the Veloce emulator. The emulator is connected to the user environment on a workstation through one or more software connections. These connections enable the engineer to interact with the DUT running in emulation. In the case of Ethernet, virtual Ethernet traffic is generated by the VirtuaLAB Ethernet Packet Generator and Monitor (EPGM) application running on the workstation. The EPGM generates the tests and provides visibility, analysis, and user control of the Ethernet traffic.
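The measurements listed above (latency, bandwidth, packet loss, and out-of-order delivery) can be derived from simple transmit and receive logs. The sketch below shows that arithmetic in generic form. It is not the EPGM application or its API: the `analyze` function, the log layout, and the sample data are assumptions made for illustration, and duplicate packets are not handled.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass
class TrafficStats:
    sent: int
    received: int
    lost: int
    out_of_order: int
    mean_latency_ns: float
    throughput_bps: float

def analyze(sent: Dict[int, Tuple[int, int]],
            received: List[Tuple[int, int]]) -> TrafficStats:
    """sent maps seq -> (tx_time_ns, size_bytes); received lists (seq, rx_time_ns) in arrival order."""
    latencies, out_of_order, highest_seq, rx_bytes = [], 0, -1, 0
    for seq, rx_time_ns in received:
        tx_time_ns, size_bytes = sent[seq]
        latencies.append(rx_time_ns - tx_time_ns)
        rx_bytes += size_bytes
        if seq < highest_seq:
            out_of_order += 1           # arrived after a later-numbered packet
        else:
            highest_seq = seq
    lost = len(sent) - len({seq for seq, _ in received})
    if not latencies:
        return TrafficStats(len(sent), 0, lost, 0, 0.0, 0.0)
    span_ns = max(t for _, t in received) - min(t for t, _ in sent.values())
    throughput = rx_bytes * 8 / (span_ns * 1e-9) if span_ns > 0 else 0.0
    return TrafficStats(len(sent), len(received), lost, out_of_order,
                        sum(latencies) / len(latencies), throughput)

# Invented logs: four 100-byte packets sent, three arrive, one out of order.
tx_log = {0: (0, 100), 1: (10, 100), 2: (20, 100), 3: (30, 100)}
rx_log = [(0, 50), (2, 80), (1, 90)]
stats = analyze(tx_log, rx_log)
print(stats)
```

In a software-based environment both logs come from the same time base, which makes this kind of comparison straightforward to repeat across hundreds of virtual ports.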

Enterprise emulation and the IoT

The IoT and the increased networking infrastructure needed to support it extend existing verification challenges and present new ones. If companies are to bring products to these markets both in a timely way and after fully exercising their designs against errors, they need the workhorse that such complex tasks require. Only emulation fulfills that role.

With its Enterprise Verification Platform (EVP), Mentor Graphics has therefore developed a family of emulation solutions specifically aimed at the IoT, with Veloce VirtuaLAB at the core. This solution is more flexible, provides more visibility, and scales with the increasing capacity and complexity of IoT and network system designs. VirtuaLAB supports higher productivity and increases design quality. It also delivers many of ICE’s traditional capabilities – but without all those additional cables and hardware units.

Because all of these capabilities and technologies are based on the idea of enterprise emulation, all of the emulation resources reside in a data center and can be accessed remotely, 24 hours a day, by multiple teams, users, and projects. The VirtuaLAB environment delivers software and hardware verification across all IoT markets, providing high-speed verification solutions for multiple protocols, complex designs, accelerated low-power applications, and hardware-software debug.

Further reading

For a still deeper dive into this important topic, you can also read this white paper: ‘SoC verification for the Internet of Things’.

About the author

Richard Pugh has 30 years of experience in electronic design automation, working in IP, ASIC, and SoC verification across roles in application engineering, product marketing, and business development at ViewLogic, Synopsys, and Mentor Graphics. He is currently the Product Marketing Manager for Mentor’s Emulation Division.

Richard holds an MSc in Computer Science and Electronics from University College London and an MBA from Macquarie Graduate School of Management, Sydney.