Understanding DDR SDRAM memory choices

This article explains which form of DRAM memory is best for your SoC application, comparing DDR variants, types of DIMM, mobile and low-power versions, graphics memory and 3D stacks.

Memory performance is critical to system performance in many applications. The most common choice for main memory is double data-rate synchronous dynamic random-access memory (DDR SDRAM, or DRAM for short), because it is dense, has low latency and high performance, offers almost infinite access endurance, and draws little power.

The Joint Electron Device Engineering Council (JEDEC) has defined several categories of DRAM by their power, performance, and area requirements. This article provides an overview of these standards, to help SoC designers choose the right memory for their application.

DDR DRAM basics

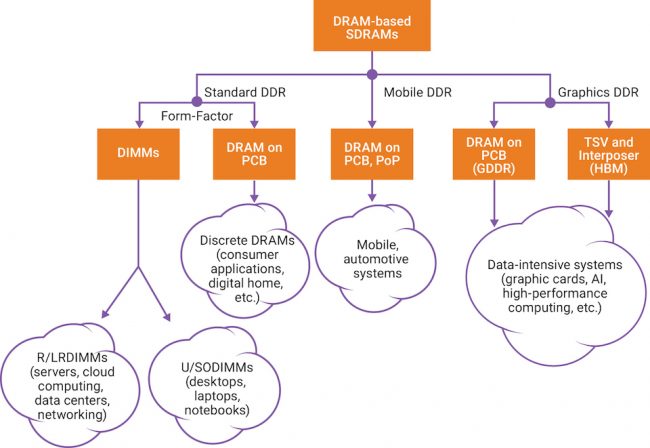

DDR DRAMs can be used as discrete devices, or in dual in-line memory modules (DIMM). JEDEC has defined three categories of DRAM, as follows:

- Standard DDR is designed for use in servers, cloud computing, networking, laptop, desktop, and consumer applications. It supports wide channel widths, high densities, and multiple form factors. DDR4 has been the most popular standard in this category since 2013; DDR5 devices are in development.

- Mobile DDR is designed for use in mobile and automotive applications, which need low power operation. It supports narrower channel widths than standard DDR, and several low-power operating states. The standard today is LPDDR4, and LPDDR5 devices are in development.

- Graphics DDR is designed for use in applications that need very high data throughput, such as graphics processing, data centre acceleration, and AI. This category of DDR includes graphics DDR (GDDR) and high bandwidth memory (HBM).

All three categories use the same storage technology, but each has architectural features such as data rates, channel widths, host interfaces, electrical specifications, I/O termination schemes, and/or power states, that are tailored for particular applications. Figure 1 shows JEDEC’s three categories of DRAM standards.

Figure 1 JEDEC’s standards for application-specific DDR memory

Here are more details of each form of DDR memory.

Standard DDR

The most popular variant of DDR is DDR4, which offers:

- Data rates up to 3200Mbit/s, vs DDR3 operating at up to 2133Mbit/s

- Lower operating voltage of 1.2V, compared to 1.5V in DDR3 and 1.35V in DDR3L

- Higher performance through the use of bank groups

- Lower power thanks to data-bus inversion facilities

- More reliability, availability, and serviceability (RAS) features, such as post-package repair and cyclic redundancy checks (CRC) on data

- Higher capacities, thanks to die densities of up to 16Gbit
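
To put these data rates in context, peak theoretical bandwidth is the per-pin data rate multiplied by the bus width. The following minimal sketch assumes a 64bit data bus (the non-ECC DIMM width); the function name `peak_bandwidth_gbyte_s` is illustrative only. Sustained bandwidth in a real system is lower, because of refresh, bank conflicts, and bus turnarounds.

```python
def peak_bandwidth_gbyte_s(data_rate_mbit_s: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in Gbyte/s (1 Gbyte = 10**9 bytes)."""
    total_mbit_s = data_rate_mbit_s * bus_width_bits  # all data pins together
    return total_mbit_s / 8 / 1000                    # Mbit/s -> Mbyte/s -> Gbyte/s


# Assumption: a 64bit data bus, as on a non-ECC DIMM.
BUS_WIDTH_BITS = 64

for name, rate in (("DDR3", 2133), ("DDR4", 3200)):
    bandwidth = peak_bandwidth_gbyte_s(rate, BUS_WIDTH_BITS)
    print(f"{name} at {rate}Mbit/s: {bandwidth:.1f}Gbyte/s peak")
```

Under these assumptions, a DDR4 channel at 3200Mbit/s peaks at 25.6Gbyte/s.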

Standard DRAMs support data-bus widths of 4 (x4), 8 (x8), or 16 (x16) bits. Server, cloud, and data-centre applications use x4 DRAMs because they allow a higher density on DIMMs and enable RAS features that cut application downtime due to memory failures. The x8 and x16 DRAMs are less expensive and are commonly used in desktops and notebooks.

DDR5, under development at JEDEC, will increase data rates to 4800Mbit/s, at an operating voltage of 1.1V. DDR5 adds features such as voltage regulators on each DIMM, better memory refresh schemes, updated memory architectures to use the data channels more effectively, the addition of error-correcting codes, larger bank groups to boost performance, and support for greater memory capacities.

DDP, QDP, 3DS DRAMs

Multiple DRAM dies are often co-packaged to increase density, using dual-die (DDP) or quad-die (QDP) packages to put two or four memory ranks in one package.

This technique is hard to extend beyond four dies, because connecting all the memory ranks in parallel increases the load on the command/address (C/A) and data (DQ) lines. Instead, multiple DRAM dies, each representing a memory rank, are stacked in a package with through-silicon vias (TSVs) providing the interconnections. The bottom die then acts as an electrical buffer for the C/A and DQ wires, so the TSV DRAM stack only presents one load to the host’s C/A and DQ wires. This allows TSV DRAMs to have eight or even 16 stacked memory ranks. TSV DRAMs are also known as 3D-stacked (3DS) DRAMs. A DIMM based on several TSV DRAMs can support hundreds of gigabytes of memory.
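
The "hundreds of gigabytes" figure can be checked with simple arithmetic. This sketch combines numbers quoted in this article (16Gbit dies, x4 devices, 8- or 16-high stacks) with two assumptions of its own: a 64bit data bus filled by one 3DS package per x4 slot, and ECC devices left out of the usable total. The function name is hypothetical.

```python
def dimm_data_capacity_gbyte(die_gbit: int, dies_per_stack: int,
                             data_bus_bits: int = 64,
                             device_width_bits: int = 4) -> int:
    """Usable (non-ECC) DIMM capacity in Gbyte for one 3DS package per device slot."""
    packages = data_bus_bits // device_width_bits      # packages needed to fill the data bus
    total_gbit = die_gbit * dies_per_stack * packages  # every die in every stack
    return total_gbit // 8                             # Gbit -> Gbyte


# Assumption: 16Gbit dies in x4 packages on a 64bit data bus.
for height in (8, 16):
    print(f"{height}-high stacks: {dimm_data_capacity_gbyte(16, height)}Gbyte")
```

With these inputs, 8-high stacks give 256Gbyte and 16-high stacks give 512Gbyte per DIMM.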

DIMM types

DIMMs are printed circuit boards that carry multiple packaged DRAMs and support 64bit or 72bit databus widths, the latter to enable eight error-checking and correction (ECC) bits to protect against single-bit errors.
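
As a rough illustration of how device width relates to DIMM bus width, the sketch below counts how many DRAM devices of a single width are needed to fill a 64bit or 72bit bus, and works out the ECC overhead. The helper name `devices_per_rank` is an assumption for this example, and real modules may mix device widths or differ in other ways.

```python
from typing import Optional


def devices_per_rank(bus_width_bits: int, device_width_bits: int) -> Optional[int]:
    """Devices needed to fill the bus, or None if the widths do not divide evenly."""
    if bus_width_bits % device_width_bits != 0:
        return None
    return bus_width_bits // device_width_bits


for bus in (64, 72):
    for width in (4, 8, 16):
        count = devices_per_rank(bus, width)
        label = "not an even fit" if count is None else f"{count} devices"
        print(f"{bus}bit bus, x{width}: {label}")

# Eight ECC bits protect 64 data bits.
ecc_overhead = (72 - 64) / 64
print(f"ECC overhead: {ecc_overhead:.1%}")
```

A 72bit bus built from x4 devices needs 18 of them, and the eight ECC bits add 12.5% to the data width.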

Because servers need to access terabytes of memory, they often support two or three DIMMs on each memory channel. This increases the loading on the shared C/A and DQ signals, and so demands extra buffering on each DIMM.

- Registered DIMMs (R-DIMMs) only buffer the C/A wires

- Load-reduced DIMMs (LR-DIMMs) buffer both the C/A and DQ wires

Both types usually have a 72bit databus width to enable ECC.

- Unbuffered DIMMs (UDIMMs) are mainly used in cost- and power-conscious applications and only have one DIMM per channel

All three types share the same form factor. Small-outline DIMMs (SODIMMs) are roughly half the size of regular DIMMs.

Mobile DDR

Mobile DDR DRAMs, also called low-power DDR (LPDDR) DRAMs, have several features that reduce their power consumption. They tend to have fewer memory devices on each channel and shorter interconnects, so they can run faster than standard DRAMs.

LPDDR DRAM channels are typically just 16 or 32bit wide.

LPDDR5/4/4X DRAMs

LPDDR4 can run at up to 4267Mbit/s at 1.1V. LPDDR4X is identical to LPDDR4, but saves power by reducing the I/O swing to 0.6V.

LPDDR4/4X DRAMs usually provide two independent x16 channels, each with its own C/A pins. This gives greater flexibility in how the memory is connected to the host or SoC. LPDDR4/4X DRAMs usually have at most two ranks per channel.

LPDDR4/4X DRAMs also offer several ways to configure the dies to achieve the required densities for the two channels. In the simplest option, each die supports both channels in a x16 configuration, so that two such dies can be co-packaged to create a dual-ranked, dual-channel DRAM. Higher densities can be achieved with dies that support both channels in a byte-mode (x8) configuration. Four such dies can be co-packaged to give a dual-ranked, dual-channel topology. These byte-mode LPDDR4/4X DRAMs enable higher-density parts, for example by combining four 8Gbit dies to produce a 32Gbit device, with each DRAM die supporting only one byte from each channel.
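
The two packaging options can be compared with a small model. This is a sketch under stated assumptions: every die serves both channels, a rank needs enough dies to fill a x16 channel, and the standard dies are taken to be 8Gbit (the article only quotes that density for the byte-mode example). The function name `package_topology` is hypothetical.

```python
CHANNEL_WIDTH_BITS = 16  # each LPDDR4/4X channel is x16


def package_topology(die_count: int, die_gbit: int, bits_per_channel_per_die: int):
    """Return (ranks per channel, package density in Gbit) for dual-channel dies."""
    dies_per_rank = CHANNEL_WIDTH_BITS // bits_per_channel_per_die
    ranks = die_count // dies_per_rank
    return ranks, die_count * die_gbit


# Two standard (x16 per channel) dies; 8Gbit per die is an assumption.
print(package_topology(die_count=2, die_gbit=8, bits_per_channel_per_die=16))

# Four byte-mode (x8 per channel) 8Gbit dies, as in the example above.
print(package_topology(die_count=4, die_gbit=8, bits_per_channel_per_die=8))
```

Both packages come out as dual-ranked, but the byte-mode package holds 32Gbit against 16Gbit, because twice as many dies share each rank.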

LPDDR4/4X DRAMs can be used as individual parts or in a package-on-package configuration. Quad-channel x64 DRAMs are also available.

LPDDR5, under development at JEDEC, is expected to run at up to 6400Mbit/s. It will have more power-saving and RAS features, including a deep-sleep mode.

Connectivity options with LPDDR4/4X DRAMs

The simplest way of connecting to a dual-channel, dual-ranked LPDDR4 DRAM is for the host to run the two DRAM channels independently, by sending separate C/A and chip-select lines to these two channels. This configuration enables the host to use each channel in different power states, to achieve either performance or power efficiency.

The host can also operate the two x16 channels as one 32bit-wide channel, or as one 16bit-wide channel with twice as many ranks. In the first case, the host operates the DRAM as a x32 channel and sends one pair of C/A signals to both x16 DRAM channels.

In the second option, the host operates the two x16 DRAM channels as one x16 channel, by sending one pair of C/A signals to both DRAM channels and connecting the DQ[15:0] signals from the two DRAM channels together. The result is a single x16, quad-ranked channel. Although this approach doubles the memory density of the channel, it can limit performance by loading the C/A and DQ wires.
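
The three wiring options described above can be summarised as the channel count, width, and rank count seen by the host. The sketch below is illustrative only: the class `HostView` and the labels "independent", "lockstep x32", and "serial x16" are names chosen for this example, not JEDEC terminology. It shows that the total storage is the same in each case and only its arrangement changes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostView:
    channels: int    # channels the host controller sees
    width_bits: int  # DQ width of each of those channels
    ranks: int       # ranks per channel

    def width_times_ranks(self) -> int:
        """Channels x width x ranks; constant for a given DRAM package."""
        return self.channels * self.width_bits * self.ranks


# A dual-channel, dual-ranked LPDDR4 package, wired three ways.
OPTIONS = {
    "independent": HostView(channels=2, width_bits=16, ranks=2),
    "lockstep x32": HostView(channels=1, width_bits=32, ranks=2),
    "serial x16": HostView(channels=1, width_bits=16, ranks=4),
}

for name, view in OPTIONS.items():
    print(f"{name}: {view.channels} channel(s), x{view.width_bits}, "
          f"{view.ranks} ranks, product {view.width_times_ranks()}")
```

The serial option is the only one that raises the rank count on a channel, which is why it adds loading on the C/A and DQ wires.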

Graphics standards

The next two sections explain the GDDR and HBM memory architectures, which are designed for use in high-throughput applications.

GDDR

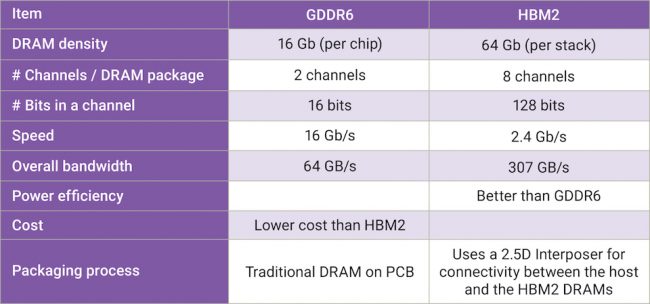

GDDR DRAMs use a point-to-point standard that can support data rates of 8Gbit/s (GDDR5) and 16Gbit/s (GDDR6).

GDDR5 DRAMs are 32bit wide and are always used as discrete parts. They can be configured to operate in either x32 mode or x16 mode. GDDR5X targets a transfer rate of 10 to 14Gbit/s per pin, almost twice that of GDDR5. This is made possible by using a greater level of prefetch than GDDR5 DRAMs, and by having 190, rather than 170, pins per chip.

GDDR6 supports data rates of up to 16Gbit/s, at 1.35V, compared to 1.5V in GDDR5. GDDR6 DRAMs have two channels, each 16bit wide, which can service multiple graphics program threads simultaneously.

HBM/HBM2

GDDR memories use high data rates and narrow channels to achieve high throughput. HBM memories solve the same problem using multiple independent channels and much wider datapaths of 128bit per channel, operating at data rates of 2.4Gbit/s. As a result, HBM memories provide high throughput at lower power and in much less board area than GDDR memories.
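
The trade-off between fast, narrow interfaces and slower, wide ones is easy to quantify. This sketch uses the per-pin rates and channel widths quoted in this article; the figure of eight channels per HBM stack is an assumption that the article does not state, and the function name is illustrative.

```python
def throughput_gbyte_s(pin_rate_gbit_s: float, width_bits: int, channels: int = 1) -> float:
    """Theoretical peak throughput in Gbyte/s across all channels."""
    return pin_rate_gbit_s * width_bits * channels / 8


# GDDR6 device: two 16bit channels at 16Gbit/s per pin.
gddr6_device = throughput_gbyte_s(16, 16, channels=2)

# One 128bit HBM channel at 2.4Gbit/s per pin.
hbm_channel = throughput_gbyte_s(2.4, 128)

# Assumption (not from this article): eight such channels per HBM stack.
hbm_stack = throughput_gbyte_s(2.4, 128, channels=8)

print(f"GDDR6 device: {gddr6_device:.1f}Gbyte/s")
print(f"HBM channel:  {hbm_channel:.1f}Gbyte/s")
print(f"HBM stack:    {hbm_stack:.1f}Gbyte/s")
```

Even at a much lower per-pin rate, the wide HBM interface delivers several times the peak throughput of a single GDDR6 device under these assumptions.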

HBM2 DRAMs stack up to eight DRAM dies, plus an optional base die, and interconnect them using TSVs and micro-bumps. Commonly available densities include 4 or 8Gbyte per HBM2 package. As HBM2 DRAMs have thousands of data wires, interposers are used to connect the memory and host, shortening the routing and therefore enabling faster connectivity.

HBM2 has architectural features that boost performance and reduce bus congestion, including a ‘pseudo channel’ mode, which splits every 128bit channel into two semi-independent 64bit sub-channels that share the channel’s row and column command buses while executing commands individually. Increasing the number of channels in this way also increases overall effective bandwidth, by avoiding restrictive timing parameters to activate more banks per unit time. RAS features include optional ECC support for enabling 16 error detection bits per 128 bits of data.

Figure 2 Comparing two forms of graphics memory (Source: Synopsys)

Summary

System designers have lots of ways to achieve the memory performance, power, and area their application needs. JEDEC has defined three major categories of DDR memory: standard DDR to address high-performance and high-density applications such as servers; mobile DDR (or LPDDR) for low-cost, low-power applications such as mobile and automotive devices; and graphics DDR for data-intensive applications requiring very high throughput. JEDEC has further defined GDDR and HBM as the two standards for graphics DDR.

Synopsys offers silicon-proven PHY and controller IP, supporting the latest DDR, LPDDR, and HBM standards. Synopsys is also an active member of the JEDEC work groups, driving the development and adoption of the standards. Synopsys also has memory interface IP that can be configured to meet the exact application requirements of an SoC.

Author

Vadhiraj Sankaranarayanan is a senior technical marketing manager at Synopsys.