Making sense of cloud EDA

How to carry out a sensible analysis of cloud EDA’s potential, so you get the right tools and computational resources to deliver increasingly complex designs.

Cloud computing feels like it has been around for eons, except, it seems, in the EDA industry. That is a bit strange, because most EDA technology, like the core Calibre functionality from Siemens Digital Industries Software, has been cloud-ready for years. It turns out there were some pretty good reasons for this, the first being intellectual property (IP) security. Semiconductor IP is, in a very real sense, a significant portion of the value of a design company or foundry. Schematics, models, layouts, process information, foundry rule decks, and design checks all contain the knowledge, expertise, and data that distinguish one company from another. The risk of losing control over their IP was a huge barrier to cloud computing for both semiconductor companies and foundries.

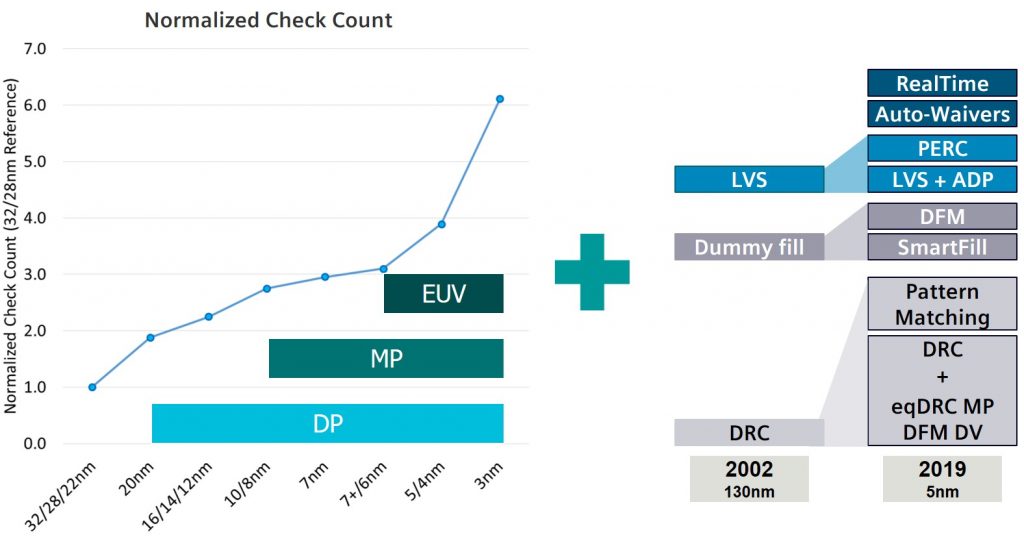

As long as companies could handle their compute requirements with on-site hardware, there was little urgency to adopt potentially risky alternative strategies. However, with each new process node, companies saw check counts and check operations grow, driving up the overall requirements for both compute resources and time (Figure 1). The capital investment required to continuously expand and maintain those resources, combined with growing latency during periods of high demand when teams competed for access, started to make the future look less certain. This spurred greater interest in more efficient and cost-effective ways of accessing large quantities of compute resources.

Figure 1. Advances in verification technology and growth in the number and complexity of rule checks have contributed to an increase in computational requirements (Siemens EDA)

Importantly, cloud providers responded with enhanced and expanded security to allay IP security concerns. This freed companies to explore and adopt cloud processing as a means of maintaining competitive tapeout schedules while adjusting their resource usage to better fit their business requirements and market demands. As a result, the focus has been able to shift to defining and implementing best practices that maximize productivity and efficiency, starting with defining the value of cloud computing to your company.

The value of cloud EDA

Companies considering the addition of cloud computing to their design flows must first understand where and how they will benefit. Most companies we have already worked with initially assumed the value would come from a lower cost of ownership — that is, they would save money because they would no longer have to buy and maintain all the compute resources they needed to meet their maximum computing requirements. Instead, they could simply add extra capacity only when needed. A reasonable assumption, it would seem.

The reality proved somewhat different. In practice, it turns out that a lower cost of ownership was not the major benefit of cloud EDA. Instead, it was the time saved. By eliminating roadblocks to the acquisition of sufficient compute resources, companies discovered that teams were able to complete more design iterations per day, resulting in less time spent in physical verification, reliability checking, simulation, design for manufacturing (DFM) optimizations, and so on. Spending less time in these stages of the design and verification flow meant they got to market faster.

This time saved is particularly valuable for design companies because more than half of all IC design development programs have schedule slips. The last stage before delivering a design to the foundry is sign-off physical verification, so that team is always under tremendous time pressure, not only to complete this final step as quickly as possible, but also to make up for any earlier schedule slips. Everyone is watching when the design program is already behind. That pressure and exposure generate very real leverage to claw back as much time as possible.

What does that mean? If you’re planning to incorporate cloud computing, you need to clearly understand the economics of combining cloud resources with your existing resources to ensure you’re optimizing your cost of ownership. That calculation starts with determining your current on-premises compute resource use patterns, so you can effectively evaluate the impact and cost of adding cloud computing access.

For example, if your on-premises usage is relatively low, you may want to optimize your internal allocation and/or scheduling processes before considering cloud computing. If your on-premises usage is high, but teams are mostly meeting their schedules, then you have two options: either you use cloud computing only when there are big spikes in demand, or you evaluate whether the addition of cloud computing would allow teams to compress their schedules and deliver designs earlier.
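The triage described above can be sketched as a small decision helper. This is only an illustration: the 50% and 85% utilization thresholds, the function name, and the input format are assumptions made for the example, not figures from Siemens, and the "schedules not met" branch is an extrapolation beyond the two cases discussed in the text.

```python
from statistics import mean

# Hypothetical thresholds; tune them to your own environment.
LOW_UTILIZATION = 0.50
HIGH_UTILIZATION = 0.85


def recommend_strategy(utilization_samples, schedules_met):
    """Suggest a next step from on-premises utilization samples (0.0 to 1.0)."""
    if not utilization_samples:
        raise ValueError("need at least one utilization sample")
    average = mean(utilization_samples)
    if average < LOW_UTILIZATION:
        # Low usage: fix allocation and scheduling before buying cloud time.
        return "optimize internal allocation and scheduling first"
    if average >= HIGH_UTILIZATION and schedules_met:
        # High usage, schedules mostly met: the two options from the text.
        return "use cloud for demand spikes, or evaluate schedule compression"
    if average >= HIGH_UTILIZATION:
        # Assumption beyond the text: high usage and slipping schedules.
        return "add cloud capacity to recover schedule"
    return "monitor usage; revisit as demand grows"


print(recommend_strategy([0.20, 0.30, 0.40], schedules_met=True))
print(recommend_strategy([0.90, 0.95], schedules_met=True))
```

In practice, the samples would come from your own scheduler or license-server logs, and a simple average may hide the short spikes that matter most, so a percentile-based measure could be a better fit.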

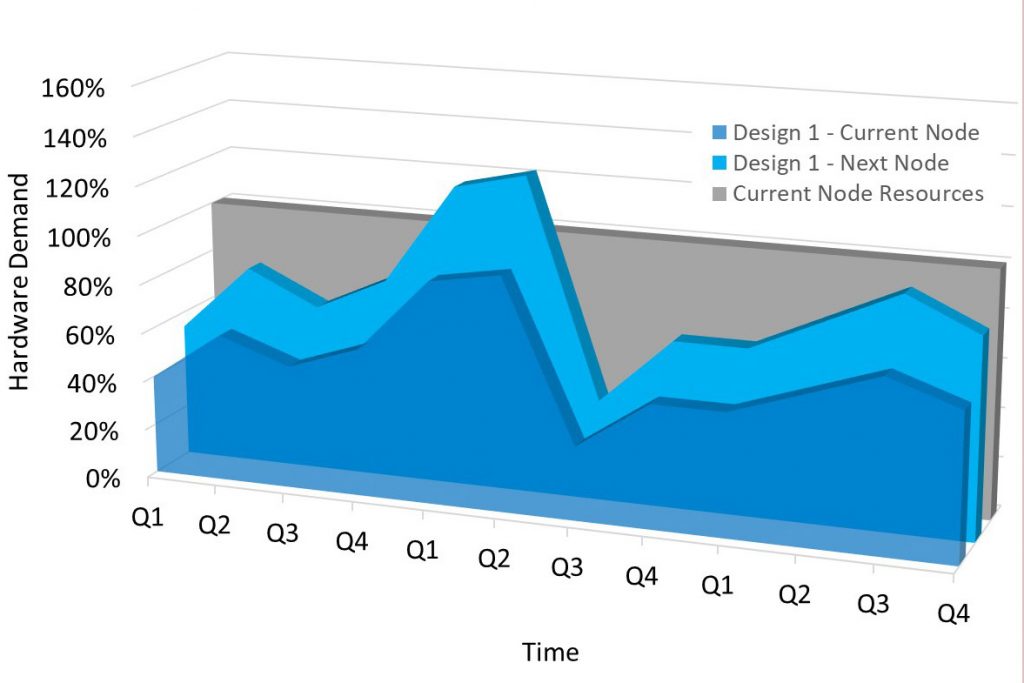

Unexpected resource demand can emerge from several sources. For example, moving the same type of design to a new node often increases the total amount of compute needed, which may then exceed available in-house resources (Figure 2). If you are not planning ahead for these transitions, you may be caught off guard when schedules start to stall.

Figure 2. Moving the same design type to a new node drives up the need for compute resources (Siemens EDA)

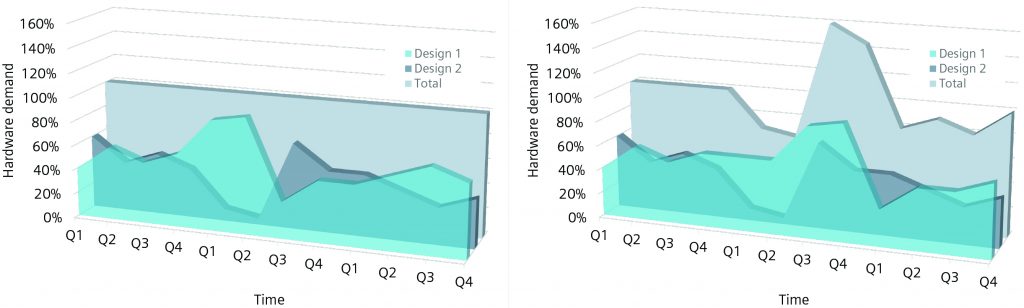

Companies also typically have multiple project teams, all competing for the same resources. As long as the resource demands are spread out, the available in-house capacity will be sufficient. Most companies expect and plan for such phased tapeouts—that is, the peak resource demand of one team will fall into the resource demand ‘valleys’ of the others. However, one small delay on any one team can cause overlaps in these peak utilization periods, at which point resource demand exceeds supply (Figure 3).

Figure 3. As long as competing resource demands do not exceed supply, companies can use in-house resources. When peak usage overlaps, companies may need external resources (Siemens EDA)
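The overlap effect shown in Figure 3 can be checked with a few lines of arithmetic. The sketch below assumes each team's demand is given as cores needed per planning period (for example, per week). The team names, demand numbers, and the 1,000-core capacity are invented for illustration.

```python
def find_shortfalls(team_demand, capacity):
    """Return {period: cores_short} for periods where total demand exceeds capacity."""
    shortfalls = {}
    # zip(*...) walks the per-team demand lists period by period.
    for period, demands in enumerate(zip(*team_demand.values())):
        total = sum(demands)
        if total > capacity:
            shortfalls[period] = total - capacity
    return shortfalls


def delay(demand, periods_late):
    """Shift a demand profile later by whole periods, keeping its length."""
    shifted = [0] * periods_late + list(demand)
    return shifted[:len(demand)]


capacity = 1000  # hypothetical in-house cores
planned = {
    "team_a": [200, 800, 200, 100],
    "team_b": [100, 200, 800, 200],
}
# Team A slips by one period, so its peak now lands on Team B's peak.
slipped = {**planned, "team_a": delay(planned["team_a"], 1)}

print(find_shortfalls(planned, capacity))  # {}
print(find_shortfalls(slipped, capacity))  # {2: 600}
```

With the planned, phased profile, demand never exceeds supply. A one-period slip by a single team creates a 600-core shortfall in period 2, which is the kind of gap that external resources would need to cover.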

There is another factor in the value equation: economies of scale. For example, the Calibre nmPlatform is designed to scale to massive numbers of resources, which can significantly compress verification runtimes and tapeout schedules. Cloud computing offers access to a practically unlimited resource pool. A company must determine the market value of, say, moving from two design iterations per day to four, to decide if adding cloud resources to get to tapeout faster is financially desirable, and whether it wants to do that for just one, many, or even all of its projects.
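One rough way to frame that decision is to compare the value of the days saved against the extra cloud spend. The following is a minimal sketch under simplifying assumptions: a fixed number of iterations is needed to reach tapeout, each day saved has a constant market value, and cloud cost is a flat daily rate. Every number and name here is hypothetical.

```python
import math


def days_to_close(iterations_remaining, iterations_per_day):
    """Whole days needed to finish the remaining design iterations."""
    return math.ceil(iterations_remaining / iterations_per_day)


def net_benefit(iterations_remaining, base_rate, cloud_rate,
                value_per_day, cloud_cost_per_day):
    """Value of days saved minus cloud spend over the shortened schedule."""
    base_days = days_to_close(iterations_remaining, base_rate)
    cloud_days = days_to_close(iterations_remaining, cloud_rate)
    days_saved = base_days - cloud_days
    return days_saved * value_per_day - cloud_days * cloud_cost_per_day


# Hypothetical inputs: 40 iterations left, 2 per day in house, 4 per day with cloud.
benefit = net_benefit(
    iterations_remaining=40,
    base_rate=2,
    cloud_rate=4,
    value_per_day=50_000,       # assumed market value of reaching tapeout a day sooner
    cloud_cost_per_day=10_000,  # assumed additional cloud spend per day
)
print(benefit)  # 400000
```

A positive result suggests the added cloud capacity pays for itself for that project. Running the same calculation per project gives a first indication of whether to apply cloud resources to one, many, or all of them.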

Best practices

Once a company has made the decision to add cloud computing to its design and verification flow, the next step is to ensure that usage is as efficient and cost-effective as possible. First is the selection of a cloud provider and your cloud environment configuration. Then, you need to establish cloud usage guidelines that ensure you maximize the value of those cloud resources within your company.

Cloud providers

Running EDA applications in the cloud is very different from running a word processor or spreadsheet program in the cloud. Scaling efficiently to thousands of CPUs/cores takes the right setup. Not all cloud providers have the same experience in enabling high performance computing (HPC) applications like EDA software. When you evaluate a cloud provider, its expertise in and experience with HPC applications should be a strong consideration.

One factor in the selection of a cloud provider is the types of server they offer. Each type has different core, memory, interface, and performance characteristics, with the “best” selection dependent on your needs and applications. For example, some servers are designed to support demanding workloads that are storage-intensive and require high levels of I/O, while others are optimized for applications driven by memory bandwidth, such as fluid dynamics and explicit finite element analysis. Understanding your computing needs will help you select the server and provider best suited to your requirements.

EDA support

Because most fabless companies are not looking to move all their EDA compute to the cloud (just the small percentage that covers surge demand, or runs where tapeout speed is the highest priority), understanding your specific EDA-in-the-cloud options is crucial. Some EDA companies provide an integrated solution that requires the use of their proprietary cloud. Others, like Siemens Digital Industries Software, support an open solution that allows you to choose your cloud service supplier, whether that is private or public.

Cloud configuration and usage

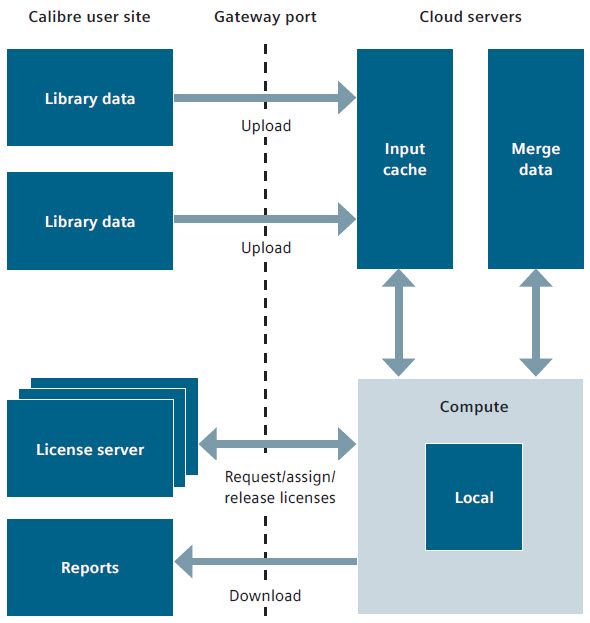

Advanced node designs might be tens, if not hundreds, of gigabytes, so companies should scale the amount of compute they actually run in the cloud as a function of factors such as the node they are using, the type of physical verification they are running, and the size of their die. Defining and structuring how teams should communicate with the cloud, how and when they move data out into the cloud, and how they assemble jobs out in the cloud will all contribute toward more efficient and effective cloud computing.

Specific best practices depend on the EDA software your company uses. For the Calibre nmPlatform, cloud best practices include such options as:

- Cloud server location: By choosing geographically close cloud servers, you can reduce network latency. Cache-based systems also improve machine performance.

- Foundry rule decks: Always using the most recent foundry-qualified rule deck will ensure that the latest coding best practices are implemented.

- EDA software: Because Siemens optimizes the Calibre engines with every release, using the most current version of Calibre software ensures optimized runtimes and memory consumption.

- Hierarchical filing: Implementing a hierarchical filing methodology, in which a design is sorted into cells that are later referenced in the top levels of the design, significantly reduces data size and shortens final sign-off runtimes.

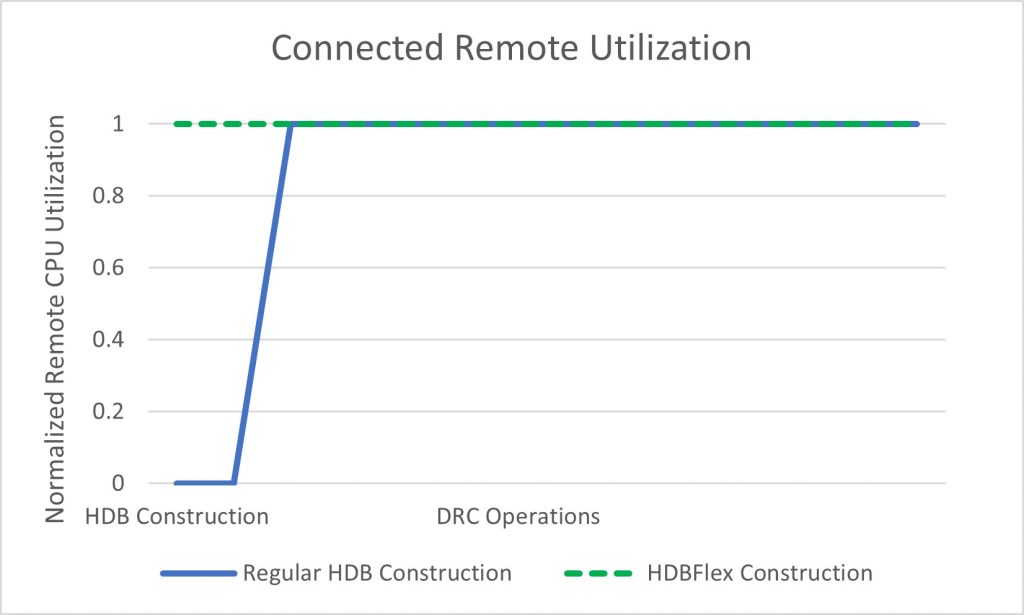

- Hierarchical construction mode: Using the Calibre HDBflex construction mode substantially reduces the real time that Calibre MTflex remotes are idle during the creation of a hierarchical database (HDB) (Figure 4). During HDB construction, the Calibre HDBflex process connects only to the primary hardware, so the majority of the construction takes place in multi-threading (MT) only mode, and it does not connect to remotes until the later stages of construction. This eliminates idle resource time. Minimizing HDB construction time through this targeted server utilization enables the Calibre nmPlatform to use the larger DRC operation resources more efficiently.

- Staged uploads: Uploading each block separately as it is available, along with standard cells and IPs, then uploading the top level, minimizes upload time. By uploading in stages, you avoid any bottlenecks. You can then use the Calibre DESIGNrev interface in the cloud to assemble all the data (Figure 5).

Figure 4. CPU utilization as a percentage of total acquired CPUs. The Calibre HDBflex process only connects to the primary server during HDB construction, ensuring that CPU utilization is sustained at 100% throughout the entire flow (Siemens EDA)

Figure 5. Uploading blocks and routing separately, then combining the data in the cloud server, minimizes both upload time and potential bottlenecks (Siemens EDA)
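The benefit of staged uploads can be illustrated with a simplified timing model. The sketch assumes a single upload link that transfers one item at a time, and it represents each block by the hour it becomes available and the hours it takes to upload. It does not use any Calibre interface, and the block data is made up for the example.

```python
def monolithic_finish(blocks):
    """Finish time if everything is uploaded only after the last block is ready."""
    last_ready = max(ready for ready, _ in blocks)
    return last_ready + sum(hours for _, hours in blocks)


def staged_finish(blocks):
    """Finish time if each block is uploaded as soon as it is ready (serial link)."""
    clock = 0
    for ready, hours in sorted(blocks):
        # Wait for the block if the link is free before the block is ready.
        clock = max(clock, ready) + hours
    return clock


# (hour the block is ready, hours needed to upload it); the last entry is the top level.
blocks = [(0, 2), (3, 2), (6, 1), (8, 1)]

print(monolithic_finish(blocks))  # 14
print(staged_finish(blocks))      # 9
```

In this toy case, staging the uploads finishes five hours earlier because most of the transfer time overlaps with the time spent waiting for later blocks. Only the top level remains to be uploaded once the design is complete.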

Cloud EDA means opportunity

Design teams are always under pressure to get to market as fast as possible. Because physical verification happens at the end of the design cycle, any schedule slips put enormous pressure on verification teams to complete more design iterations per day to achieve tapeout as quickly as possible. At the same time, competition for limited resources within the company can further delay design tapeouts.

Cloud EDA gives companies the opportunity to reduce time to market and speed up innovation while maintaining or lowering operating costs. With the advent of strong security measures for both technology and physical resources, the semiconductor industry is on its way to an environment where cloud computing for EDA will be as ubiquitous as the foundry is today for IC wafer manufacturing.

As IC companies look to leverage further cloud capacity for faster turnaround times, especially on advanced process node designs, having a clear understanding of the options, the benefits and the costs of cloud EDA can help them make the decision that best supports their needs and resources. Once the decision is made to adopt cloud EDA computing, the availability of proven technology, flexible use models and guidance through best practices can help companies obtain the maximum benefit for their investment.

Further reading

For more information, you can review these white papers:

Calibre in the cloud: Unlocking massive scaling and cost efficiencies

EDA in the cloud—Now more than ever

Siemens, AMD, and Microsoft collaborate on EDA in the cloud

About the authors

Michael White is the senior director of physical verification product management for Calibre Design Solutions at Siemens EDA, a part of Siemens Digital Industries Software. Prior to Siemens, Michael held various product marketing, strategic marketing, and program management roles for Applied Materials, Etec Systems, and the Lockheed Skunk Works. He received a B.S. in System Engineering from Harvey Mudd College, and an M.S. in Engineering Management from the University of Southern California.

Omar El-Sewefy is a senior product engineer for Calibre Design Solutions at Siemens EDA. Omar received his BS and MS in electronics engineering from Ain Shams University in Cairo, Egypt, and is currently pursuing his Ph.D. at Ain Shams University in silicon photonics physical verification. Omar’s extensive industry experience includes semiconductor resolution enhancement techniques, source mask optimization, design rule checking, and silicon photonics physical verification.