The evolution of lint

It’s tempting to see lint in the simplest terms: ‘I run these tools to check that my RTL code is good. The tool checks my code against accumulated knowledge, best practices and other fundamental metrics. Then I move on to more detailed analysis.’

It’s an inherent advantage of automation that it allows us to see and define processes in such a straightforward way. It offers control over the complexity of the design flow. We divide and conquer. We know what we are doing.

Yet linting has evolved and continues to do so. It now covers more than code checking. We began by verifying the ‘how’ of the RTL, but we have moved on to the ‘what’ and the ‘why’. Today we use linting to identify and confirm the intent of the design.

A lint tool such as our own Ascent Lint is now a component of early-stage functional verification rather than, as was once the case, a precursor to it.

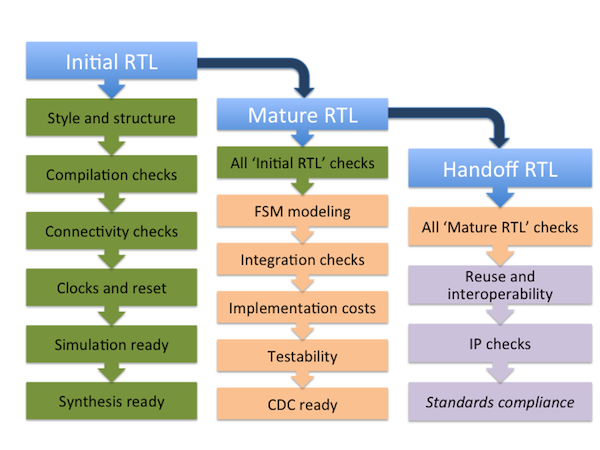

At Real Intent, we have developed this three-stage process for verifying RTL:

- Initial RTL: Requirements may still be evolving. Design checks at this stage ensure that regressions and build failures are caught early.

- Mature RTL: Our concerns now revolve around issues such as modeling and its costs, simulation-synthesis mismatches and FSM complexity. The focus is on higher-order aspects of freeze-ready RTL that might affect design quality. These ‘mature RTL’ checks establish the conditions necessary for downstream interoperability.

- Handoff RTL: By this late stage, checks are geared towards compliance with industry standards or internal conventions to allow easy integration and reuse.

Applying those three stages to lint tools gives us the following diagrammatic representation of how they can be applied.

Linting plays an increasing role in this process because better tools now use policy files that are appropriate to, and adaptable for, each of the three stages. They still detect coding errors, but they also check that the design intent is being satisfied. We can use linting to begin the deep semantic analysis that designs now need as their complexity grows.
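One way to picture stage-appropriate, adaptable policies is as rule sets that grow as the RTL matures, with per-project waivers layered on top. The following is a minimal Python sketch under stated assumptions: the rule names, the cumulative stage model and the `active_rules` helper are all hypothetical and do not reflect Ascent Lint's actual policy file format.

```python
# Hypothetical rule identifiers for illustration only; these are NOT
# Ascent Lint rule names.
STAGE_POLICIES = {
    "initial": {"SYNTAX_ERROR", "UNDRIVEN_SIGNAL", "MULTIPLE_DRIVERS"},
    "mature": {"SIM_SYNTH_MISMATCH", "FSM_UNREACHABLE_STATE", "LATCH_INFERRED"},
    "handoff": {"NAMING_CONVENTION", "HEADER_COMMENT", "PORT_ORDER"},
}
STAGE_ORDER = ["initial", "mature", "handoff"]


def active_rules(stage: str, waived: frozenset = frozenset()) -> set:
    """Return the rules enabled at `stage`.

    Assumption: each stage keeps every rule from the earlier stages and adds
    its own; rules in `waived` are then removed for this project.
    """
    if stage not in STAGE_POLICIES:
        raise ValueError(f"unknown stage: {stage!r}")
    enabled = set()
    for s in STAGE_ORDER[: STAGE_ORDER.index(stage) + 1]:
        enabled |= STAGE_POLICIES[s]
    return enabled - set(waived)


# Mature-stage policy with latch inference waived for this (hypothetical) project.
print(sorted(active_rules("mature", waived=frozenset({"LATCH_INFERRED"}))))
# ['FSM_UNREACHABLE_STATE', 'MULTIPLE_DRIVERS', 'SIM_SYNTH_MISMATCH', 'SYNTAX_ERROR', 'UNDRIVEN_SIGNAL']
```

Treating the stages as cumulative is a design choice made for the sketch; a real flow might instead relax some early checks once they have been signed off.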

Lint as a semantic analysis platform for verification

Semantic analysis is an approach we might more readily associate with tools run after linting, such as those that analyze clock domain crossings or troublesome X states. If we treat linting as early-stage functional verification rather than simple code checking, it becomes natural for the linter to undertake such analysis too, addressing issues in the relationships between design elements as early as possible.
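To show what a relationship-based check looks like, as opposed to a line-by-line code check, here is a deliberately simplified Python sketch of a clock-domain-crossing rule. It assumes a toy design model in which each flop records its clock domain and whether it is a recognized synchronizer stage; the `Flop` class and `find_unsynchronized_crossings` function are hypothetical, and production CDC analysis involves far more (synchronizer recognition, reconvergence, reset domains).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flop:
    name: str
    clock: str             # clock domain that drives this flop
    is_sync: bool = False  # True if this flop is a recognized synchronizer stage


def find_unsynchronized_crossings(flops, edges):
    """Flag flop-to-flop paths whose source and destination sit in different
    clock domains, unless the destination is a synchronizer stage.

    `flops` maps flop names to Flop objects; `edges` is an iterable of
    (source_name, dest_name) pairs describing data paths between flops.
    """
    violations = []
    for src, dst in edges:
        a, b = flops[src], flops[dst]
        if a.clock != b.clock and not b.is_sync:
            violations.append((src, dst, a.clock, b.clock))
    return violations


# Toy design: cfg_q (clk_a) feeds a synchronizer and, separately, a plain flop in clk_b.
flops = {
    "cfg_q": Flop("cfg_q", "clk_a"),
    "sync1": Flop("sync1", "clk_b", is_sync=True),
    "data_q": Flop("data_q", "clk_b"),
}
edges = [("cfg_q", "sync1"), ("cfg_q", "data_q")]
print(find_unsynchronized_crossings(flops, edges))
# [('cfg_q', 'data_q', 'clk_a', 'clk_b')]
```

The check inspects no single line of RTL in isolation. It reasons about how two elements relate, which is the kind of analysis the paragraph above describes.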

The results from a linter therefore tell us a great deal about the state of the design. The tool should catch problems that require immediate debug, but it should also provide data that help us refine the verification plan going forward.

Can some issues be seen as settled, needing no further investigation? Do others require examination in particular detail?

A good linter helps us allocate resources. This is in addition to features aimed at debug. Ascent Lint, for example, offers Emacs editor integration to make the find-and-fix loop faster and easier.

These are not the linters of the past, and even they may be only the beginning. For example, as intelligent testbenches evolve, they too are incorporating more capability for semantic analysis. That alone opens clear opportunities for greater integration and efficiency.

Lint may be an established, even mature, concept, but it continues to evolve in exciting directions, and how it fits into your flow today should not be taken for granted.