Productivity, predictability and versatility drive verification environments

Three key characteristics determine a verification platform’s ability to add value to the design flow. But how well each scores within a project depends on how, and at which point, it is applied.

The key issues to consider when choosing a verification platform fall into three main categories: productivity, predictability, and use-model versatility. These characteristics determine whether a verification platform will add value to the design flow. How well each platform scores, however, depends on how it is to be applied: the parameters that call for in-circuit emulation differ from those that call for transaction-level modeling (TLM) in a pure-software environment.

Productivity is not limited to execution speed. Speed is often seen as the main concern, but it must be balanced against accuracy, bring-up effort, and debug insight, all of which strongly influence the debug loop: loading the design, executing it, analyzing it, fixing an issue, and then starting the cycle again with the next version of the design. A hardware verification platform may offer headline features such as debug trace and a high number of parallel users, but if the trace buffer size is restricted, or only a fraction of the users can obtain a realistic amount of trace to work with offline, those users will find they need to run multiple verification passes to obtain sufficient debug information. This overhead can quickly eliminate any claimed advantages in time or power consumption over conventional simulation.
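The rerun overhead can be made concrete with simple arithmetic. The sketch below is a minimal model that assumes, hypothetically, that execution is deterministic, so each rerun can capture a different, non-overlapping slice of the window of interest. The function names and cycle counts are illustrative and are not taken from any specific platform.

```python
import math


def runs_needed(window_cycles: int, trace_depth_cycles: int) -> int:
    """Runs required to capture `window_cycles` of trace when each run can
    record at most `trace_depth_cycles` (hypothetical, deterministic reruns)."""
    if trace_depth_cycles <= 0:
        raise ValueError("trace depth must be positive")
    return math.ceil(window_cycles / trace_depth_cycles)


def debug_capture_time_s(window_cycles: int, trace_depth_cycles: int,
                         run_time_s: float) -> float:
    # Every additional run repeats the full execution, so time scales with runs.
    return runs_needed(window_cycles, trace_depth_cycles) * run_time_s


# Hypothetical example: a 10M-cycle window, 1M-cycle trace buffer, 10-minute runs.
print(runs_needed(10_000_000, 1_000_000))                  # 10
print(debug_capture_time_s(10_000_000, 1_000_000, 600.0))  # 6000.0
```

In this simplified model, a buffer ten times too small turns one 10-minute run into more than an hour and a half of repeated execution before debug can even start.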

User feedback to Cadence shows that the key careabout is the ability to remove bugs as quickly and efficiently as possible, bringing the design to the point where confidence in its correctness is high enough for tapeout to take place. It all boils down to a loop of:

1) design bring-up

2) execution of test

3) efficient debug

4) bug fix

and back to step one to confirm that the bug has indeed been removed.
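The loop can be expressed as a rough turnaround-time model. This is a minimal sketch under simplifying assumptions: one bug is found and fixed per pass, steps run serially, and all durations (in hours) are hypothetical. It shows how repeating bring-up on every pass compounds across iterations.

```python
from dataclasses import dataclass


@dataclass
class LoopCosts:
    """Hypothetical durations, in hours, for one pass of the debug loop."""
    bring_up: float
    execute: float
    debug: float
    fix: float

    def iteration(self) -> float:
        # Steps 1-4 of the loop: bring-up, execution, debug, fix.
        return self.bring_up + self.execute + self.debug + self.fix


def time_to_tapeout_confidence(costs: LoopCosts, bugs: int) -> float:
    # One full loop per bug, then a final bring-up and execution that
    # confirms the last fix and finds no new failures.
    return bugs * costs.iteration() + costs.bring_up + costs.execute


fast_bring_up = LoopCosts(bring_up=2, execute=1, debug=4, fix=3)
slow_bring_up = LoopCosts(bring_up=8, execute=1, debug=4, fix=3)
print(time_to_tapeout_confidence(fast_bring_up, bugs=5))  # 53
print(time_to_tapeout_confidence(slow_bring_up, bugs=5))  # 89
```

The debug term is where limited trace depth shows up: every extra rerun inflates it on every pass through the loop.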

To ensure customer careabouts are met, we need to consider multiple aspects of the hardware verification engine, including speed, accuracy, capacity, development cost and bring-up time.

Scaling speed

For verification platforms, speed scales down from previous-generation chips and actual silicon samples, which execute at the target clock rate, through software virtual prototypes without timing annotations, to FPGA-based prototypes and, finally, in-circuit emulation and acceleration.
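The practical impact of these speed differences is easiest to see as wall-clock time for a fixed workload. The sketch below uses representative speeds drawn from the ranges quoted later in this article (hertz to kilohertz for RTL simulation, megahertz for emulation, tens of megahertz for FPGA-based prototypes). The 1 GHz silicon clock and the one-billion-cycle workload are hypothetical assumptions.

```python
def wall_clock_s(cycles: int, clock_hz: float) -> float:
    """Seconds needed to execute `cycles` at an effective speed of `clock_hz`."""
    return cycles / clock_hz


# Representative speeds from the ranges quoted in this article;
# the silicon clock rate is a hypothetical 1 GHz target.
ENGINE_HZ = {
    "RTL simulation": 1e3,          # upper end of "hertz to kilohertz"
    "emulation": 1e6,               # "megahertz range"
    "FPGA-based prototype": 1e7,    # "tens of megahertz"
    "silicon at target rate": 1e9,  # hypothetical
}

WORKLOAD_CYCLES = 1_000_000_000  # hypothetical workload, e.g. part of an OS boot

for engine, hz in ENGINE_HZ.items():
    seconds = wall_clock_s(WORKLOAD_CYCLES, hz)
    print(f"{engine:24s} {seconds:>12,.0f} s  ({seconds / 3600:,.2f} h)")
```

Even at the optimistic end of its range, RTL simulation needs about a million seconds (roughly 11.6 days) for this workload, while emulation finishes in about 17 minutes.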

A common tradeoff in verification platforms is accuracy: how closely does the represented hardware match the actual implementation? Software virtual prototypes based on transaction-level models (TLMs), with their register accuracy, are sufficient for a number of software development tasks, including driver development. However, once significant timing annotation is added, they slow down so much that RTL running in hardware-based prototypes is often faster.

Capacity provides a further dimension of choice. The different hardware-based execution engines differ greatly in the size of design they can handle. Emulation is available in standard configurations of up to several billion gates, while standard FPGA-based prototyping products handle up to about 100 million gates and often suffer speed degradation with larger designs, when multiple boards are connected to provide higher capacity. Software-based techniques for RTL simulation and virtual prototypes are limited only by the memory of the executing host. Hybrid connections to software-based virtual platforms allow additional capacity extensions.
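A capacity check, together with the effect of offloading part of the design into a virtual prototype, can be sketched as follows. The FPGA figure is the one quoted above. The 2-billion-gate emulation figure is a hypothetical stand-in for "several billion gates". The design and subsystem sizes are invented for illustration. Multi-board speed degradation is not modeled.

```python
# Capacity limits as quoted above; the emulation figure is a hypothetical
# stand-in for "several billion gates". Software-based engines are omitted
# because they are bounded by host memory rather than a fixed gate count.
ENGINE_CAPACITY_GATES = {
    "emulation": 2_000_000_000,
    "FPGA-based prototype": 100_000_000,
}


def engines_that_fit(design_gates: int) -> list[str]:
    """Hardware engines whose standard configuration can hold the design."""
    return [name for name, cap in ENGINE_CAPACITY_GATES.items()
            if design_gates <= cap]


def gates_after_offload(design_gates: int, offloaded_gates: int) -> int:
    # Moving a block (e.g. a processor subsystem) into a TLM virtual
    # prototype removes its gates from the hardware-based engine.
    return max(design_gates - offloaded_gates, 0)


design = 150_000_000         # hypothetical SoC size
cpu_subsystem = 60_000_000   # hypothetical processor subsystem
print(engines_that_fit(design))
print(engines_that_fit(gates_after_offload(design, cpu_subsystem)))
```

With these assumed numbers, the full design fits only in emulation. After the processor subsystem moves to the virtual prototype, the remaining 90 million gates also fit an FPGA-based prototype.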

Development cost and bring-up time are important factors: how much effort must be spent to build the execution engine on top of the traditional development flow? Virtual prototypes are still expensive because they are not yet part of the standard flow, and TLM models often must be developed. Emulation is well understood and its bring-up is very predictable, generally on the order of days or possibly weeks. Bringing up an FPGA-based prototype from scratch is still a much bigger effort, often taking several months, although it can be accelerated significantly when the software front-end of emulation can be shared.

Debug attachment

As target designs typically contain multiple microprocessors, effective software debug, hardware debug, and execution control have become vital. How easily can software debuggers be attached for hardware and software analysis, and how easily can execution be controlled? In general, attaching debuggers to software-based techniques is straightforward using standard interfaces, and execution control is excellent because simulation can be controlled easily.

However, the lack of speed in RTL simulation makes software debug feasible only for very low-level software. For hardware debug, the hardware-based engines again vary greatly: hardware debug in emulation is very powerful and comparable to RTL simulation, whereas in FPGA-based prototyping it is very limited. Hardware insight into software-based techniques is excellent, but the limited accuracy of TLMs restricts what can be observed. With respect to execution control, software-based execution allows users to start and stop the design efficiently and to selectively run only a subset of processors, enabling unique multi-core debug capabilities.

Speed has a knock-on effect on system connections: how can the system environment be included? In hardware execution engines, rate adapters enable speed conversion, and a large number of connections are available as standard add-ons. RTL simulation is typically too slow to connect to the actual environment. TLM-based virtual prototypes, on the other hand, execute fast enough to use virtual I/O to connect to real-world interfaces such as USB, Ethernet, and PCI. As a result, I/O connectivity has become a standard feature of commercial virtual prototyping environments.
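Conceptually, a rate adapter must absorb the mismatch between a fast real-world interface and a much slower hardware-based engine. The sketch below models this generically as a bounded FIFO that drops traffic when full. It is a hypothetical illustration and does not describe how any commercial speed bridge is built. The depths, rates, and burst lengths are invented.

```python
from collections import deque


class RateAdapter:
    """Hypothetical bounded FIFO between a fast real-world interface and a
    slower hardware-based engine. Illustrative only; commercial rate adapters
    such as speed bridges are not modeled here."""

    def __init__(self, depth: int):
        self.fifo = deque()
        self.depth = depth
        self.dropped = 0

    def push(self, item) -> bool:
        # Real-world side: accept if there is room, otherwise drop.
        if len(self.fifo) >= self.depth:
            self.dropped += 1
            return False
        self.fifo.append(item)
        return True

    def pop(self):
        # Design side: take one item per design-clock opportunity.
        return self.fifo.popleft() if self.fifo else None


def simulate(depth: int, fast_per_tick: int, slow_per_tick: int,
             burst_ticks: int, total_ticks: int) -> tuple[int, int]:
    """Return (delivered, dropped) for a burst arriving faster than it drains."""
    adapter = RateAdapter(depth)
    delivered = 0
    for tick in range(total_ticks):
        if tick < burst_ticks:
            for n in range(fast_per_tick):
                adapter.push((tick, n))
        for _ in range(slow_per_tick):
            if adapter.pop() is not None:
                delivered += 1
    return delivered, adapter.dropped


print(simulate(depth=16, fast_per_tick=4, slow_per_tick=1,
               burst_ticks=10, total_ticks=60))  # (25, 15)
print(simulate(depth=31, fast_per_tick=4, slow_per_tick=1,
               burst_ticks=10, total_ticks=60))  # (40, 0)
```

The buffer depth required grows with burst length and rate mismatch. Many real interfaces would throttle the source through flow control rather than drop traffic, which this sketch does not model.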

Predictability is also important; it relates to design partitioning and the required compile time. Processor-based emulation, with its compiler, is very predictable, while FPGA-based prototyping and FPGA-based emulation face challenges due to complex FPGA routing and interconnect issues.

Development engine availability

A related issue is how soon after project start the development engine becomes available. Software virtual prototypes win here, as the effort to develop loosely timed TLMs is much lower than RTL development. When models are not readily available, hybrid execution with a hardware-based engine alleviates the need to re-model legacy IP that does not yet exist as a TLM. This is why hybrid use models, combining virtual prototypes with acceleration, emulation, and FPGA-based prototyping, have gained popularity in the last couple of years.

The number of use models for verification platforms has expanded to meet the growing requirements of different functions across the whole design process. Beyond the core three for emulation and verification acceleration (functional verification, performance validation, and system emulation), the use models now include: regression runs, which check changes to the code base to ensure that a bug fix has not introduced new problems; post-silicon debug; test-pattern preparation; and failure reproduction and analysis.

More sophisticated use models have become possible with the introduction of architectures such as the Cadence Palladium XP Platform. It supports gate-level acceleration, which FPGA-based emulators cannot support because of the explosion in complexity when re-mapping the target technology to FPGA gates, as well as dynamic low-power analysis. Power has become a crucial issue for most applications.

With accurate switching information at the RT level, power consumption can be analyzed fairly accurately. Emulation adds the speed needed to execute sequences long enough to understand the impact of software. At the TLM level, annotation of power information allows early power-aware software development, but the results are not nearly as accurate as at the RT level.
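The link between switching information and power rests on the standard CMOS relation: each output transition dissipates roughly half of C·Vdd² on the switched capacitance. The sketch below applies that relation to per-net toggle counts, such as those an RTL run might record. It is a simplified switching-power estimate that ignores leakage, short-circuit current, and glitches. The net names, capacitances, voltage, and toggle counts are all hypothetical.

```python
def switching_power_w(toggles: dict[str, int], cap_f: dict[str, float],
                      vdd: float, duration_s: float) -> float:
    """Average dynamic (switching) power from per-net toggle counts.

    Each output transition dissipates roughly 0.5 * C * Vdd^2, so summing
    over all toggles gives energy; dividing by the window gives power.
    Leakage, short-circuit current and glitches are ignored.
    """
    energy_j = sum(0.5 * cap_f[net] * vdd ** 2 * count
                   for net, count in toggles.items())
    return energy_j / duration_s


# Hypothetical toggle counts captured over a 1 ms window, 10 fF per net, 0.9 V.
toggles = {"bus_data": 2_000_000, "cpu_clk_en": 500_000}
caps = {"bus_data": 10e-15, "cpu_clk_en": 10e-15}
print(f"{switching_power_w(toggles, caps, vdd=0.9, duration_s=1e-3) * 1e6:.3f} uW")
```

Because the estimate is only as representative as the captured window, the ability of emulation to run long, software-driven sequences matters as much as the per-toggle accuracy of RTL.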

As a result of this expansion of functionality, the versatility of the verification environment has become an important factor.

Key careabouts

All these careabouts within the three basic categories of productivity, predictability, and use-model versatility must then be weighed against the actual cost of both replicating and operating the execution engine, not counting bring-up cost and time. Pricing for RTL simulation has been under competitive pressure and is well understood. TLM execution is in a similar price range, but the hardware-based techniques of emulation and FPGA-based prototyping require more significant capital investment.

As the description above shows, there is no one-size-fits-all engine. Users must carefully select the right engine for the task at hand. Not surprisingly, the engines are increasingly used in hybrid combinations to exploit their combined advantages. For example, combining virtual prototypes with emulation can significantly speed up operating-system bring-up, in turn enabling designers to reach the tests intended for software-driven verification much faster.

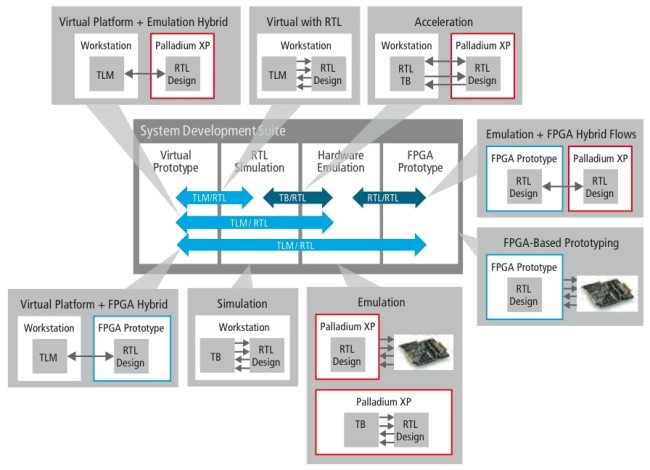

As the different execution engines grow closer together, efficient transfer of designs from engine to engine, with verification reuse, and hybrid execution of engines will become even more important. Figure 1 shows some of the combinations possible today.

Figure 1 Configurations of execution engines extend to hybrid-use models

Starting with RTL simulation in Figure 1 and going counter-clockwise, the different configurations can be summarized as follows:

- RTL simulation is primarily used for IP and sub-system verification and extends to full-chip and gate-level sign-off simulation. Given the accuracy needed to analyze interconnect, it is also used as the engine for performance analysis. It runs in the hertz to kilohertz range.

- Emulation is used for in-circuit emulation, in which the full design runs in the emulator and is connected to its actual system environment through rate adapters, called speed bridges, that adjust for the difference in speed. Emulation is also used with synthesizable test benches, mapped into the emulator as an embedded test bench, that represent either actual tests for the hardware or the peripherals of the system environment. Emulation runs in the megahertz range and is primarily used for hardware verification, early software development, bring-up of the operating system and higher-layer software, and system validation on accurate, still maturing, and non-stable RTL.

- FPGA-based prototyping typically runs in the tens of megahertz range, which makes it suitable for software development, with the design mapped into the prototype and software debugged using JTAG-attached software debuggers. The higher execution speed allows FPGA-based prototypes to interface directly to the system environment of the design. Because re-mapping the hardware into FPGAs takes longer, FPGA-based prototyping has traditionally not been used much for hardware verification, although this is changing with the emergence of automated front-end flows that can be shared between emulation and FPGA-based prototyping, as with the Cadence Palladium XP Series and Cadence RPP. FPGA-based prototypes also find use as an adjunct to emulation for running hardware regressions. Their sweet spot is lower-cost, faster pre-silicon software development and regressions, and subsystem validation on mature and stable hardware with real-world interfaces.

- Emulation and FPGA-based prototypes can be combined: the less mature portion of the RTL runs in the emulator, taking advantage of its fast bring-up, while re-used IP and already stable RTL run in the FPGA-based prototype, benefiting from its higher speed.

- Acceleration combines RTL simulation and emulation: the test bench stays in RTL simulation on the host, enabling advanced debug and the use of non-synthesizable test benches, while the DUT runs at higher speed in emulation. Like the other hybrids of hardware-based engines with simulation, acceleration is enabled by transactors that are part of an accelerated verification IP (AVIP) portfolio. Acceleration is measured as the speed-up over pure RTL simulation, and users typically see between 25x and 1000x improvement, depending on their design.

- Virtual prototypes using TLMs find their sweet spot in pre-RTL software development and system validation. Together with RTL simulation, connected through AVIP, they can be used for driver verification with accurate peripherals represented in RTL. Typical speeds depend on the RTL/TLM partitioning, but can reach tens of kilohertz, or even more if most of the design runs as TLM in the virtual prototype.

- Hybrids of virtual prototypes and emulation enable bring-up of the OS and higher-level software, as well as software-driven verification on accurate, still maturing, and non-stable RTL. The primary motivation is speed, measured relative to pure emulation: users typically see up to 60x speed-up for OS bring-up and up to 10x for software-driven verification executing on top of a booted OS.

- Hybrids of virtual and FPGA-based prototypes are used for lower-cost, faster pre-silicon software development and regressions, and for subsystem validation on mature and stable hardware. In contrast to hybrids with emulation, the primary motivation here is not speed but better use of capacity, which is often a limiting factor for FPGA-based systems. Moving significant portions, such as a processor sub-system, into a virtual prototype at the transaction level can save significant capacity and allow the overall design to fit into a hybrid of virtual and FPGA-based prototypes.
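One way to see why acceleration gains vary so widely by design is Amdahl's law, a general model rather than a method stated in this article. Only the DUT moves into hardware, so the test-bench work left on the host caps the overall gain at 1/(1 − p), where p is the share of original simulation time spent in the DUT. The sketch ignores host-to-emulator communication overhead. The 10,000x DUT speed-up is a hypothetical assumption.

```python
def acceleration_speedup(dut_fraction: float, dut_speedup: float) -> float:
    """Amdahl's-law estimate of overall speed-up when only the DUT part of a
    simulation run is moved into hardware.

    dut_fraction: share of original simulation time spent evaluating the DUT.
    dut_speedup:  how much faster the DUT executes in the hardware engine.
    Host-engine communication overhead is ignored.
    """
    if not 0.0 <= dut_fraction <= 1.0:
        raise ValueError("dut_fraction must be between 0 and 1")
    host_fraction = 1.0 - dut_fraction  # test-bench work left on the host
    return 1.0 / (host_fraction + dut_fraction / dut_speedup)


for frac in (0.96, 0.99, 0.999):
    print(frac, round(acceleration_speedup(frac, dut_speedup=10_000), 1))
```

Under these assumptions, test benches that leave 4%, 1%, and 0.1% of the work on the host yield roughly 25x, 99x, and 909x. Keeping the host-side test bench lean therefore matters as much as raw emulator speed.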

Conclusion

The choice of the right hardware-accelerated engine is driven by its productivity, predictability, and use-model versatility, all of which affect users' key concern: how to remove bugs quickly and efficiently.

This article is the second part of a pair of articles on emulation – the first mapped out the prototyping ecosystem.

Author

Frank Schirrmeister is Senior Director at Cadence Design Systems in San Jose, responsible for product management of the Cadence System Development Suite.

Company info

2655 Seely Avenue

San Jose, CA 95134

(408) 943 1234

www.cadence.com