Are you testing your test?

It is important when developing a System on Chip (SoC) to be able to tell when the verification process is complete and the design is ready to tape out. One of the most popular ways to do this is to use different forms of structural and functional coverage. This approach has been used in simulation and formal property verification (FPV) for many years and many teams base their decision to tape out on it. Coverage is useful, but it doesn’t answer the real question: “Are there any more bugs in the design, and if there are, will my test or set of assertions find them?” This is especially important in FPV because if all assertions are proven, it’s easy to believe that the verification process is complete.

Covered != verified

The problem with coverage metrics is that achieving 100% coverage doesn’t mean the design is 100% correct or even 100% verified. For example, achieving 100% RTL line coverage in simulation means that every line in the RTL is executed during the test. But executed does not necessarily mean correct.

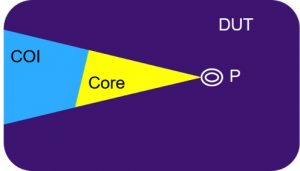

In FPV, the corresponding metric is structural cone of influence (COI) coverage. Any logic that is not in the COI of an assertion (P in Figure 1, below), is clearly not tested. As with simulation coverage, the opposite is not true. Just because some part of the design is in the COI of an assertion doesn’t mean it is correct. Logic that is in the COI may have been optimized away by the formal engines and therefore may remain unverified.

Figure 1 Logic inside and outside the COI and formal core of a property (Source: Synopsys)

A better metric to determine what is verified in FPV is formal core coverage. The formal core of a property is the union of all the registers, inputs and constraints that are necessary to prove the assertion. This is much more accurate than structural COI coverage or structural simulation coverage metrics. The logic is not just executed – it is shown to affect the proof or falsification of the assertions.

If you achieve 100% formal core coverage, does it mean you are done and there are no bugs in your design? Unfortunately, not.

For example, the design below is a counter that can be loaded with a value from input pins when the load signal is asserted – otherwise it just counts up. An assertion (check_load below) to verify that the counter register takes the correct value when the load signal is asserted will cause the counter to be covered. However, this doesn’t cover many other aspects of the counter behaviour that need to be verified, such as whether the reset value is correct and whether the counter will roll over or stop when it reaches its maximum value.

module counter (input clk, reset,

input load,

input [7:0] load_data,

output reg [7:0] cnt_value);

always @(posedge clk) begin

if (reset)

cnt_value <= 8’b0;

else if (load)

cnt_value <= load_data;

else

cnt_value <= cnt_value + 2;

end

check_load: assert property (@(posedge clk)

load |=> cnt_value == load_data);

endmodule

All simulation coverage metrics measure whether the stimulus reaches all logic in the design. In FPV, formal core coverage measures whether all the logic in the design affects the outcome of at least one assertion. Neither form of coverage says anything about the correctness of the design’s behaviour, nor answers the key question: “Are there any more bugs in the design?” To do that you must analyze the effectiveness of the assertions, that is, you must test your test.

Testing the test

Another way to look at verification coverage is to say that 100% coverage implies that any bug will be found by the test or verification environment (assertions). Verification engineers want to know that if there are bugs in the design, they will be found.

How can you know whether this is the case?

Coverage will only tell what is not verified so we need another approach. One approach is to insert a bug (also known as a mutation) and see whether any of the assertions fail. If they do, great! The test found the bug. If no assertion fails, there is a hole in your formal test environment or set of assertions, and you need to add some assertions or improve the existing ones.

Manually inserting bugs to assess the quality of the test environment works but quickly runs into problems. Where do you insert bugs? What type of bugs do you insert? It’s human nature to insert bugs in the parts of the design with which you are most familiar, that is, where the assertions are, so you are still likely to miss bugs. It’s also likely that you’ll only insert a limited range of bug types.

Automatic mutation testing

A much better method is to use a tool such as the Formal Testbench Analyzer (FTA) app in VC Formal to insert hundreds or thousands of artificial bugs (mutations) into your design and see whether any of the assertions fail. Some of the mutations the tool can insert in the design include: changing operators e.g. a + to a –; changing a ‘for’ loop to start at a different value or increment by a different value; and making one branch of an ‘if’ statement dead.

For example, the design in the example above incorrectly increments by 2 instead of 1 but the verification environment does not detect this error. By inserting a mutation on the line “cnt_value <= cnt_value + 2;” we can easily find some unverified areas of the design since no assertion fails because of this mutation.

When manually inserting mutations in the design it is necessary to verify all assertions for every mutation inserted, to ensure that each mutation causes at least one assertion to fail. The FTA tool, on the other hand, will track the effect of every mutation so you can check all assertions and all faults in one run, speeding up run-time by orders of magnitue.

Summary

Coverage is a necessary but not sufficient metric to determine whether SoC verification is complete. It determines whether there is untested logic in the design, but doesn’t determine whether bugs will be found by the test environment. To get rid of this weakness in many verification strategies, it is also important to test your test. Only by using a thorough automated bug insertion (mutation) methodology can you be sure that your formal testbench (assertions) will test your entire design, find all the bugs and so shorten the time to tape out.