Doc Formal: Introducing the ADEPT FV flow

In an industry which responds to technology changes so swiftly, the changes in process take a long time to come through. We are designing cutting-edge technology in our chips that are driving the revolution in the Internet-of-Things using age-old processes that do not respond that well to the requirements of modern-day design and verification. We cannot afford to build chips that are vulnerable from a security point of view or require massive re-engineering (due to finding late bugs). In this article I want to describe an agile design verification flow that uses formal verification to assist you in end-to-end design bring-up and verification. We call this flow ADEPT FV and it describes a concept formal verification (FV) flow that can be used by both designers and verification engineers to tape out designs without bugs.

But first we need to briefly revisit what a traditional DV flow looks like, and how FV itself is defined.

Formal in current practice

Formal verification means different things to different people. Most of the time you tend to find that the use model is defined at extremities – “I use it for linting (autochecks)”; “I use it for SoC connectivity checking”; “For register validation”; “For synthesis verification”, and so on. In some cases people mean block-level formal verification using assertions and in some rare exotic cases using formal sequential equivalence checking to verify that arithmetic works correctly. The bottom line is that no two people using formal verification can agree on its full scope and semantics. If you ask the following questions – What’s the overall use model for formal? How can a designer/verification engineer make use of formal to go from designer bring-up all the way to signing off SoCs with formal? – you will find that the answers are not easy to find.

At my company Axiomise, we are driving the adoption of next generation formal verification methodologies in order to make FV’s use in-the-field productive, scalable and predictable.

In a traditional DV flow the different phases of Verification Strategy, Planning, Execution and Sign-off are usually sequential. More importantly, verification is not considered to be a problem for designers. Rather assumed in a conventional DV flow, there is the overarching assumption that designers build designs and verification engineers verify them. While some separation of tasks and priorities is useful, especially when avoiding common mode errors, verification should be considered a DV activity to be done from designer bring-up to full verification and sign-off accomplished by a good mix of designers and verification engineers. Whereas bugs are tracked systematically through the life cycle of a project, coverage collection, and analysis is usually done late in the DV flow and in some cases there is little or no correlation between bugs found and coverage metrics.

I argue that there are three main goals of verification. I like to think of them as the three axes of verification and the exercise itself a multi-dimensional one.

- Establishing bug presence.

- Establishing bug absence.

- Establishing, in a metric-driven way, code build quality (structural coverage) and verification quality (how complete we are with respect to functional specifications)

So let’s look at an agile flow that combines the goals of the three axes in a uniform and consistent manner using formal to obtain an end-to-end DV flow that can and should be used by designers as well as verification engineers alike. Although it is certainly not a unique one, I believe you will find this flow useful in practice and should indeed consider adapting it to your own needs.

What’s unique about the ADEPT FV flow?

Our flow departs at the very outset from the standard waterfall models traditionally used in design verification and focusses on increasing design assurance from the very first hour of design bring-up, with a focus on bug hunting, establishing exhaustive proofs and coverage. We are certainly not suggesting that once you have come out of one given phase, you cannot go back to it. On the contrary, our flow requires you to run all phases first sequentially (from early design phases) and then in parallel as the design evolves. If you are scrubbing the design to find functional bugs for example in what we call the ‘Erase’ phase, you would still run the avoid and detect flows iteratively. Establishing proofs in our ‘Prove’ bug absence phase does mean you should continue to ensure you do not reintroduce any of the bugs previously caught in the Avoid, Detect and Erase phases or introduce new bugs of the same type as the design goes through continuous evolution. Thus, this flow encourages DV engineers to be agile in the way they approach design verification.

The Axiomise ADEPT FV Flow

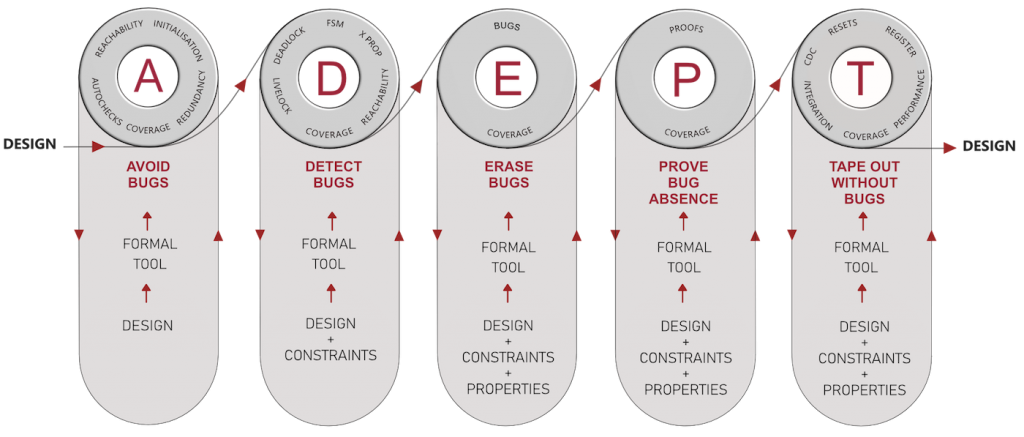

As shown in Figure 1 above, the ADEPT FV flow describes how to:

- AVOID BUGS

- DETECT BUGS

- ERASE BUGS

- PROVE BUG ABSENCE

- TAPE-OUT WITHOUT BUGS

Let’s look at each of those stages in turn.

Avoid

The very first phase Avoid describes how to avoid introducing bugs in the first place. This is made possible by using formal tools to pipe-clean designs as they are built. As soon as some design code is written (let’s say after 30 minutes-to-an-hour of design activity), one can simply compile the design in a formal tool to easily pick up issues in code-build quality. These include syntactic bugs such as dangling wires, undriven nets, un-initialised flops, type-checking (checking if wires and registers e.g., are used correctly), out-of-range checks, all while conclusively establishing through a proof which parts of the code lines are reachable and which are not. A design is input in the Avoid phase to a formal tool and information on bugs, bug absence and coverage is obtained. If there are no bugs reported, expected lines of code are reachable/unreachable, and toggle coverage matches the expected metric then you are good to go. This could mean going to the next phase or continuing in this phase with further design development. Equally, if the results on coverage, bugs and absence of bugs do not look right then you know that youy need to debug and fix the code. The vertical red arrows shown on the boundary of the capsule in Figure 1 indicates that you stay within the avoid phase until you are done.

Some tools can also identify which lines of code are redundant – meaning the lines are reachable but the wire/register being assigned is never used downstream. Finally, most formal tools can run toggle check to tell you which flops are stuck at 0/1. Together with this and reachability analysis, an initial coverage (structural) model is obtained which allows users to visualise all of the information about bugs, absence of bugs and coverage in a GUI. Although no functional testing has been done in this phase, common pitfalls are being avoided upfront and potential lethal bugs flushed out. Not only that, a coverage model is obtained without having to write a single line of test bench code. All you need in the Avoid phase is a design and a formal tool, and some basic know-how in driving the tool – all of which is available from EDA vendors as well as independent organizations such as Axiomise.

Please note that having redundant code or unreachable code may not necessarily be the result of genuine design bugs but consequence of building reusable design code meant to run in different configurations; where in some configurations some bits of the code are meant to be active and others not. In either case, it is a good idea to validate these in the Avoid phase so you are not caught by last minute surprises close to tape-out – a common phenomena seen when people do not use formal in this way.

Detect

This phase is a sort of starting point of a form of functional verification where we identify constraints on design interfaces. Once they have been formally coded in the tool, the tool can be run in an automated manner to address deadlocks, livelocks, FSM analysis, X-propagation errors and reachability issues.

Deadlocks exist when a design is stuck in a one state and is unable to come out of it. Livelocks however are defined as a state where the design moves between two or more states and continues to circulate in those states. Both these checks require the presence of constraints on the interfaces in order to avoid spurious errors reported by the tool. At Axiomise, we offer help in carrying out this activity. We build flows for our customers that can help identify such issues in the design. The idea is to automatically extract such checks so the user does not have to write them.

FSM analysis is a useful way to check and visualise the execution of all designer-specified FSMs. Most decent formal tools can identify issues in FSM reachability (meaning all transitions in FSM are reached), and establish that there are no deadlocks in FSMs.

Reachability analysis is necessary in the ‘Detect’ phase as well because user-defined constraints can make some of the design code unreachable and that can in turn mask some design bugs. Thus, identifying unreachable design code in this phase is necessary.

As with the Avoid phase so long as you are not fully satisfied with the results of carrying out the deadlock, livelock, X-prop and reachability analysis you continue in this loop. Coverage at this point is again a metric-oriented view on which bits of the code are reachable, which flops toggle and which lines of code are unreachable. Again, having the ability to identify deeper issues such as X-propagation bugs, FSM bugs, deadlocks/livelocks and to prove that they do not exist at this stage in the flow is a great and easy win for overall design assurance. Finally, coverage analysis will reveal code coverage as well as functional coverage with respect to the deadlock/livelock checks.

Erase

At this stage in a DV flow, we expect the designer to have a robust build quality free from the bugs discussed above. We now want to probe deeper to identify if the design code matches the functional requirements which are coded in the formal tool as user-defined properties expressed as assertions and/or covers in some formal language such as SVA or PSL (though other assertion languages exist).

Our goal in the ‘Erase’ bugs phase is to scrub the design free of any functional bugs. If formal verification is not used, one would typically employ directed testing, constrained random, or a combination of both. We are not saying you cannot use the dynamic simulation techniques in this phase but we are describing what you would do in this phase using a combination of formal with dynamic simulation or formal used in isolation. In cases where formal verification is used, the focus is on hunting bugs. The strategy is to come up with user-defined assertions and cover properties that can explore all reachable states of the design and, though you may not easily obtain an exhaustive proof, you can certainly find undesired design execution behaviour (aka functional bugs). Formal verification is known for its ability to find deep corner-case bugs something we specialise in using and in training our customers to use.

If constraints change in this phase, then it is expected that you would run a reachability analysis and check that the constraints are not squeezing out the legal stimulus by being over-constrained. Identifying over-constraints and preventing them in the first place is again an art that can be mastered with training. If no bugs are seen (as in no assertion/cover fails) at this stage, it is a good time to inject manual bugs in the design to assess the robustness of the assertions/covers and constraints. Equally, in parallel, one can run coverage analysis available with most tools to identify which bits of the design code are reachable and observable. A line of code is observable if mutating it can cause one or more checks (asserts/covers) to fail. This kind of analysis is today built into formal tools, however, I must caution that the metrics reported by different formal tools are different and it may not be possible to merge all of them (though this should not deter the users from using this in the first place).

Prove

Establishing that the design is free from all kinds of functional bugs (i.e., mathematically proving bug absence) with respect to functional requirements is at the heart of the deep formal methodology in which Axiomise specializes. Building exhaustive proofs requires one to start well in the first place identifying functional requirements, formalizing them correctly and efficiently, and making extensive use of abstractions and problem-reduction techniques. Then you can guarantee high predictability that proofs will converge yielding a ‘pass’ (proving bug absence) or a ‘fail’ (showing bug presence). Identifying what to do with partial (bounded or inconclusive) proofs, understanding the scope of exhaustive proofs and its relationship with constraints, identifying vacuity and the role of covers all requires a delicate balance of good craftsmanship that can be acquired through our training programmes.

Coverage models obtained automatically from formal tools provide guidance (note the word ‘guidance’) on the quality of verification. Gaps in verification identified by missing coverage can be genuine gaps; however merely obtaining a 100% coverage metric (even functional) may not mean that you have no bugs. In that respect, formal verification is no different from dynamic simulation based on constrained random where 100% functional coverage may not necessarily mean 100% bug freedom! However, in this ‘Prove’ phase, if we do have exhaustive proofs of bug absence with a sensible and adequately constrained test bench, it genuinely means you have no bugs in the design with respect to the property (requirement) which has an exhaustive proof. It does not however mean that there are no other bugs, there may well be.

Example: So, if a user proves an ordering property for a badly constrained FIFO which limits the number of legal writes/reads to two for a 64-deep FIFO, it only means that the 2-deep FIFO does not have an ordering bug. It does not mean that the 64-bit deep FIFO is bug free. However, one can easily write a check to validate if the FIFO is indeed writable/readable on all locations (writing, for example, a cover property to check that a FIFO can fill up and drain) to identify this bug (over-constraint). Once its fixed, we can prove for a 64-deep FIFO correctly that it does not have a reordering bug or now you may even find one as more states are correctly reachable due to the constraint being adjusted properly.

We will be discussing the Erase and Prove phases in more detail in an upcoming DVCon USA paper, “Smart Formal for Scalable Verification”, and in a Synopsys-sponsored tutorial on “Tacking the complexity in Control and Datapath Designs with Formal Verification”.

Tape Out

The last phase in ADEPT FV places much emphasis on using push-button capabilities in the apps now available for formal tools to check for clock domain crossing, reset, and register checking bugs. But it is also about establishing integration checking using pin-to-pin connectivity checking as well as interface-to-interface functional checking through assertions/covers. Moreover, the ‘Tape Out’ phase is about going deeper into the system verification aspects, such as performance analysis and exploiting formal to conclusively establish the worst-case bounds on performance. The list shown in the figure is certainly not exhaustive and we can diversify into exploring other system level issues. Although coverage may not be reported for all the apps in this phase, there is no reason to not obtain coverage where relevant (e.g., in integration checking).

Summary

ADEPT FV is a new agile DV flow that focusses on the three axes of verification: bug presence, bug absence, and coverage. It can be used to obtain end-to-end design assurance using formal verification mitigating the wide gap in the industry with regard to formal verification’s use at extremities and puts formal in the right place – in the hands of designers and verification engineers where it can make them adept in the use of the technology.

The flow is agile as we keep regressing all our verification phases in parallel throughout the design development cycle responding to design changes catching bugs, proving bug absence and building coverage model along with the design development.

Ashish Darbari is CEO of formal verification consultancy and training provider Axiomise.

Ashish Darbari is CEO of formal verification consultancy and training provider Axiomise.