Portable stimulus and UVM

Accellera’s Portable Test and Stimulus Standard provides powerful verification features that are meant to augment existing verification flows, not to replace UVM. Here is how portable stimulus and UVM interact.

As the Portable Test and Stimulus Standard was being developed, one of the most frequently asked questions about it was: "Is it intended to be a replacement for UVM?" The answer is an emphatic "No!" To understand why, we need to examine how the Accellera Portable Test and Stimulus Standard (PSS) interacts with verification tools and how its role differs from that of UVM. Consider this brief statement of what PSS is intended to accomplish, taken from version 1.0a of the standard:

“The goal is to allow stimulus and tests, including coverage and results checking, to be specified at a high level of abstraction, suitable for tools to interpret and create scenarios and generate implementations in a variety of languages and tool environments, with consistent behavior across multiple implementations.”

From this, we can see that a PSS model is not itself executable. Instead, it requires a tool to analyze the abstract model and generate implementations (tests) from it. Tests generated for UVM environments are simply one of the implementations that can be generated from the model. A UVM testbench separates its executable elements, such as uvm_sequences, from the structure they run in, which is built from uvm_components such as environments and agents. In the same way, we can use a PSS model to generate the executable pieces of a UVM testbench, but those generated pieces still need the rest of the UVM environment in which to run.

Let's look at a simple UVM sequence for random test generation:

Simple UVM random sequence

class cb1_seq_item extends uvm_sequence_item;

`uvm_object_utils(cb1_seq_item);

rand bit [4:0] field1;

rand bit [4:0] field2;

constraint c1 {field1 < 10;}

constraint c2 {field2 < 10;}

function new(string name="cb1_seq_item");

super.new(name);

endfunction // new

...

endclass

class cb1_rand_sequence extends uvm_sequence#(cb1_seq_item);

`uvm_object_utils(cb1_rand_sequence);

cb1_seq_item item;

function new(string name="cb1_rand_seq");

super.new(name);

item = cb1_seq_item::type_id::create("item");

endfunction // new

task body();

start_item(item);

if(!item.randomize())

`uvm_fatal("BAD_RAND","Item failed randomization");

finish_item(item);

endtask // body

endclass // cb1_rand_sequence

The cb1_rand_sequence creates a sequence item in its constructor, and then the body task uses the standard UVM idiom to randomize the item and send it to the driver. The item itself contains two random fields, each of which is constrained to be less than 10. If we were to run a UVM test that started this sequence on the appropriate sequencer, the test would generate a single transaction with random data in it, and then complete. If we were tracking functional coverage for the data fields, our cross coverage would be 1 percent. (The arithmetic of that is left as an exercise for the reader.) By itself, this would not be a particularly useful test, but it could be helpful in creating a more complex scenario.
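For readers who want to check the exercise: each field has 10 legal values (0 through 9), so the cross has 10 × 10 = 100 bins, and a single transaction can hit only one of them, which is 1 percent. The coverage model itself is not shown in this example, so the collector below is only a sketch of how such a cross might be declared. The class name cb1_coverage, the covergroup and coverpoint names, and the choice of a uvm_subscriber are assumptions for illustration; only cb1_seq_item and its two fields come from the example above.

```systemverilog
import uvm_pkg::*;
`include "uvm_macros.svh"

// Hypothetical coverage collector (not part of the original example).
// It would be connected to the agent monitor's analysis port.
class cb1_coverage extends uvm_subscriber #(cb1_seq_item);
  `uvm_component_utils(cb1_coverage)

  cb1_seq_item item;  // most recently observed transaction

  covergroup cb1_cg;
    // One bin per legal value: 10 bins on each coverpoint
    cp_field1 : coverpoint item.field1 { bins legal[] = {[0:9]}; }
    cp_field2 : coverpoint item.field2 { bins legal[] = {[0:9]}; }
    // 10 x 10 = 100 cross bins; one transaction fills exactly one
    field1_x_field2 : cross cp_field1, cp_field2;
  endgroup

  function new(string name = "cb1_coverage", uvm_component parent = null);
    super.new(name, parent);
    cb1_cg = new();  // an embedded covergroup must be constructed in new()
  endfunction

  // Called once for every transaction broadcast by the monitor
  function void write(cb1_seq_item t);
    item = t;
    cb1_cg.sample();
  endfunction
endclass
```

The explicit bins matter here: with automatic bins, each 5-bit field would get 32 bins and the cross would contain 1,024 bins, most of them unreachable under the constraints.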

Such a scenario in UVM might involve a virtual sequence that calls the cb1_rand_sequence, possibly among other operations that it may perform:

UVM virtual sequence

class cb1_rand_vsequence extends uvm_sequence #(uvm_sequence_item);

`uvm_object_utils(cb1_rand_vsequence);

cb1_rand_sequence test_seq;

cb1_env m_env;

function new(string name="cb1_seq");

super.new(name);

endfunction // new

virtual task body;

test_seq = cb1_rand_sequence::type_id::create("test_seq");

for(int i = 0; i< 100; i++) begin

test_seq.start(m_env.m_agent.m_seqr);

end

endtask

endclass

In cb1_rand_vsequence, we start the cb1_rand_sequence 100 times, and each run randomizes the underlying sequence item afresh. Recall that SystemVerilog constrained-random semantics do not prevent the same values from being repeated, so 100 transactions need not produce 100 distinct value pairs.

The UVM test, in turn, starts the virtual sequence to run the scenario:

class cb1_rand_test extends cb1_test_base;

`uvm_component_utils(cb1_rand_test);

cb1_rand_vsequence test_vseq;

...

task run_phase(uvm_phase phase);

phase.raise_objection(this, "Main");

test_vseq = cb1_rand_vsequence::type_id::create("test_vseq");

test_vseq.m_env = m_env;

test_vseq.start(null);

phase.drop_objection(this, "Main");

endtask

endclass

When executed in a typical SystemVerilog simulator, the cb1_rand_vsequence achieves 68 percent coverage on the cross of item.field1 and item.field2.
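That figure is in line with what random sampling predicts. As a back-of-the-envelope check, assume that each randomize() call picks one of the 100 legal (field1, field2) pairs uniformly and independently of earlier calls. This is an idealization; actual solver distributions and seeds vary. A given cross bin is then missed by all 100 transactions with probability (99/100)^100, so the expected fraction of bins hit is:

```latex
\[
E[\text{coverage}] \;=\; 1 - \left(1 - \tfrac{1}{100}\right)^{100}
\;\approx\; 1 - 0.366 \;=\; 0.634
\]
```

Under that assumption, about 63 percent is the long-run average for 100 random transactions, and a single run can land a few points above or below it, as the 68 percent observed here does.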

The Portable Stimulus Standard equivalent of the simple cb1_rand_sequence is an action, which is the PSS language construct that represents behavior.

Simple PSS action

struct cb1_struct {

rand bit [4:0] field1;

rand bit [4:0] field2;

constraint c1 {field1 < 10;}

constraint c2 {field2 < 10;}

}

action cb1_a {

rand cb1_struct data;

}

The action is declared using the action keyword and is delimited by braces { }, as are most constructs in PSS. This action contains a random data structure with the same fields and constraints as the sequence item in UVM. The processing tool assembles the actions into a graph that defines the scheduling relationships and other constraints between the actions that comprise the model. As the graph is solved to generate a test, each action node in the graph is traversed, which causes its random fields to be randomized, so this action represents a single randomization of the data structure, just as we saw in the cb1_rand_sequence from our UVM example. However, the PSS model says nothing more about what should be done with the randomized struct in the target test implementation.

To tell the processing tool how to turn this basic PSS model into an actual implementation, we must supply a target implementation in our action declaration:

Full declaration of the basic action

action cb1_a {

rand cb1_struct data;

exec body SV = """

begin

cb1_transfer_sequence test_seq =

cb1_transfer_sequence::type_id::create("test_seq");

test_seq.item.field1={{data.field1}};

test_seq.item.field2={{data.field2}};

test_seq.start(m_env.m_agent.m_seqr);

end

""";

}

The exec body block is used to supply the implementation of an action. An action that includes an exec block is referred to as an “atomic action” in PSS. In a target-template exec block, we pass values from the abstract model into the generated test using the double-brace notation, as in {{data.field1}}.

The processing tool inserts the contents of the exec block into the resulting test according to the scheduling defined in the PSS model's graph. This includes randomized values for the fields of the data struct. When targeting a UVM environment, the realization of a PSS model will be a UVM virtual sequence, so the contents of the exec block will be inserted into a virtual sequence according to the schedule.

UVM Virtual Sequence Implementation

class cb1_infact_xfer_seq extends cb1_rand_vsequence;

// Register sequence with UVM factory

`uvm_object_utils(cb1_infact_xfer_seq)

function new(string name="cb1_infact_xfer_seq");

super.new(name);

endfunction // new

task body();

begin

cb1_transfer_sequence test_seq =

cb1_transfer_sequence::type_id::create("test_seq");

test_seq.item.field1=64'd 7;

test_seq.item.field2=64'd 3;

test_seq.start(m_env.m_agent.m_seqr);

end

endtask

endclass

The contents of the body method are exactly the same as the contents of the exec block from the PSS model, except the pre-randomized values are inserted into the generated code.

To prevent the item from being randomized a second time in cb1_rand_sequence, we need to create a new cb1_transfer_sequence "helper sequence" in UVM:

UVM Helper Sequence for PSS Implementation

class cb1_transfer_sequence extends cb1_rand_sequence;

`uvm_object_utils(cb1_transfer_sequence);

function new(string name="cb1_xfer_seq");

super.new(name);

endfunction // new

task body();

start_item(item);

finish_item(item);

endtask

endclass

The item field is still present from the base class, but since the values are filled from the virtual sequence, we can just do start_item()-finish_item() to execute the pre-randomized transaction. The modularity of UVM allows us to use the generated cb1_infact_xfer_seq virtual sequence in the same cb1_rand_test that we used for UVM by using a simple factory override.
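The override can also be registered in code. The sketch below assumes a hypothetical derived test, cb1_pss_test, which is not part of the original example. It relies on cb1_rand_test creating its virtual sequence through the factory with type_id::create(), as shown earlier. set_type_override() and get_type() are the standard UVM factory registry calls.

```systemverilog
import uvm_pkg::*;
`include "uvm_macros.svh"

// Hypothetical test that swaps in the PSS-generated virtual sequence.
class cb1_pss_test extends cb1_rand_test;
  `uvm_component_utils(cb1_pss_test)

  function new(string name = "cb1_pss_test", uvm_component parent = null);
    super.new(name, parent);
  endfunction

  function void build_phase(uvm_phase phase);
    // From here on, every factory create() of cb1_rand_vsequence
    // returns a cb1_infact_xfer_seq instead.
    cb1_rand_vsequence::type_id::set_type_override(
        cb1_infact_xfer_seq::get_type());
    super.build_phase(phase);
  endfunction
endclass
```

The command-line form used here has the same effect and needs no new test class: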

> vsim cb1_tb +UVM_TESTNAME=cb1_rand_test \

+uvm_set_type_override=cb1_rand_vsequence,cb1_infact_xfer_seq

If all we use as our PSS model is the atomic action, then we're in the same boat as our cb1_rand_sequence in UVM, where we're only doing a single transaction. There the cross-coverage of the 100 possible combinations of the two data fields is 1 percent. Instead, we can create a compound action in PSS that will repeatedly use the cb1_a action to create scenarios more like the cb1_rand_vsequence above.

Compound Action in PSS

action cb1_loop {

cb1_a cb1;

activity {

repeat(100) {

cb1;

}

}

}

The activity statement in PSS describes the actual scheduling graph of actions in the model. In this example, we use the repeat construct to traverse the cb1_a action 100 times. With this model, a PSS processing tool can analyze the resulting graph and create a virtual sequence in UVM that will achieve 100 percent coverage. This is the major difference between a procedural stimulus description, like UVM sequences, and a declarative stimulus description, like PSS.

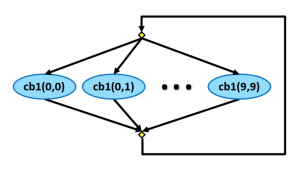

Image: A PSS tool can generate multiple scenarios from a single model

The real graph is not a loop that runs the same action 100 times. It is a loop in which each iteration selects one instance of the action, and the tool may apply a unique combination of data values to each instance. The PSS tool statically analyzes this graph before generating the target implementation. SystemVerilog and UVM cannot statically analyze procedural stimulus sequences ahead of time, so they cannot apply this optimization.

Thus, there are 100 unique scenarios defined by our relatively simple PSS model. We can generate a test that will achieve full coverage in the minimum 100 iterations by generating each unique scenario in the resulting implementation. The declarative nature of a PSS model enables the user to capture a large number of possible scenarios using the activity graph in an efficient and compact manner. A coverage-focused PSS tool will then use the coverage goals to bound the solution space of the activity graph to generate the minimal set of target-specific implementations for the scenarios required to reach those coverage goals. Once the coverage goals have been met, additional implementations may be generated that go beyond the coverage goals but are still legal according to the scheduling and other constraints defined in the model.
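To put the 100-iteration figure in perspective, here is a rough estimate of what purely random stimulus would need to close the same cross. It rests on the idealized assumption that each transaction picks one of the 100 legal value pairs uniformly and independently, which real constraint solvers are not guaranteed to do. With that assumption, the expected number of transactions N needed to hit every bin at least once is the classic coupon-collector result:

```latex
\[
E[N] \;=\; 100 \sum_{k=1}^{100} \frac{1}{k} \;=\; 100\,H_{100}
\;\approx\; 100 \times 5.187 \;\approx\; 519
\]
```

On that assumption, random generation would need on the order of five times as many transactions as the 100 that a graph-based tool can schedule directly.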

As we've seen in this example, there are significant advantages to using a PSS model to define the test intent for your verification, but the PSS model will only replace a portion of your UVM testbench. Specifically, it will generate a set of UVM virtual sequences that you can run from a UVM test that will cause optimized stimuli to be run on your existing UVM environment. You can also generate C code from the same PSS model, so you can reuse the test intent as your verification effort moves from a UVM simulation environment to a processor-based environment in emulation or on an FPGA prototype, for example.

DVCon Europe 2019 will have sessions on both PSS and UVM. For more information on the technical program, please visit the DVCon Europe 2019 website.

Author

Tom Fitzpatrick has been an active Accellera member since its inception, holding many key leadership positions in Accellera’s technical working groups. He is also the recipient of the 2019 Accellera Technical Excellence Award. For more information on the Accellera Portable Stimulus Standard, visit the Portable Stimulus Working Group page. For more information on the Accellera UVM standard, visit the UVM Working Group page.