Introducing the Compute Express Link (CXL) standard: the hardware

A guide to the emerging Compute Express Link (CXL) standard, which links CPUs and accelerators in heterogeneous computing environments.

The desire to extract meaning from the vast amounts of unstructured image, audio, video and other data that we now produce is driving much greater use of hardware accelerators with large local memories. These application-specific computers need to co-exist with general-purpose machines in a heterogeneous computing environment, in which they must share memory to work efficiently. Without an efficient way to share that memory, large amounts of data will have to be copied between host CPUs and these accelerators, severely constraining overall system performance.

Industry’s response is the Compute Express Link (CXL), an open interconnect standard for enabling efficient, coherent memory access between a host, such as a CPU, and a device, such as a hardware accelerator, that is handling an intensive workload.

A consortium to promote the new standard was recently launched, at the same time as the release of CXL 1.0, the first version of its interface specification.

CXL will be implemented in heterogeneous computing systems that include hardware accelerators targeting specific workloads, such as artificial intelligence and machine learning.

Because the new standard is currently optimized for servers, it may not be ideal for CPU-to-CPU or accelerator-to-accelerator connections, or for connecting racks in data centres. In the medium term, its transport layer may also need updating to serve certain longer-distance connections.

In the short term, though, backers of the standard such as Intel expect to launch CXL-based products in 2021, including processors, FPGAs, GPUs and SmartNICs. An interoperability and compliance program is also likely to be developed to help its adoption.

This article, the first of three, describes some of the key features of the hardware that underpins CXL.

PCI Express (PCIe) has been around for many years, and the recently completed PCIe 5.0 base specification enables CPUs and peripherals to connect at speeds of up to 32 gigatransfers per second (GT/s). However, PCIe has some limitations in environments with large shared memory pools and many devices that need high bandwidth.
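As a rough illustration of what 32 GT/s means in bytes, the sketch below converts a transfer rate and lane count into approximate per-direction bandwidth. It assumes one bit per lane per transfer and the 128b/130b line encoding used by PCIe 3.0 through 5.0, and it ignores packet and protocol overhead, so real throughput will be lower. The function name `pcie_link_bandwidth_gbytes` is hypothetical.

```python
def pcie_link_bandwidth_gbytes(lanes: int, gt_per_s: float = 32.0) -> float:
    """Approximate per-direction bandwidth in GB/s (10**9 bytes per second).

    Assumes one bit per lane per transfer and 128b/130b line encoding;
    packet and protocol overheads are ignored, so real throughput is lower.
    """
    encoding_efficiency = 128 / 130  # 128 payload bits per 130-bit block
    payload_bits_per_s = gt_per_s * 1e9 * lanes * encoding_efficiency
    return payload_bits_per_s / 8 / 1e9  # bits -> bytes -> GB


if __name__ == "__main__":
    for lanes in (16, 8, 4):
        print(f"x{lanes}: {pcie_link_bandwidth_gbytes(lanes):.1f} GB/s per direction")
```

For an x16 link at 32 GT/s this works out at roughly 63 GB/s in each direction, halving each time the lane count halves.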

PCIe doesn’t specify mechanisms to support coherency and can’t efficiently manage isolated pools of memory, as each PCIe hierarchy shares a single 64-bit address space. In addition, the latency of PCIe links can be too high to efficiently manage shared memory across multiple devices in a system.

The CXL standard addresses some of these limitations by specifying an interface based on the PCIe 5.0 physical layer and electrical schema. By combining three separate protocols and carrying them across this physical layer, CXL provides very low-latency paths for memory access and coherent caching between host processors and devices that need to share memory resources, such as accelerators and memory expanders.

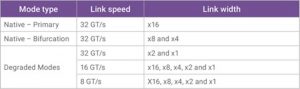

CXL’s primary native mode uses a PCIe 5.0 PHY operating at 32 GT/s in a x16 lane configuration (Table 1). To allow for bifurcation, 32 GT/s is also supported in x8 and x4 lane configurations. Any link narrower than x4 or slower than 32 GT/s is referred to as a degraded mode.
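The width and speed rules in the paragraph above can be captured in a small classifier. This is a sketch only: the `CxlMode` enum and `classify_link` function are hypothetical names, and the set of valid widths (x1, x2, x4, x8, x16) is an assumption based on standard PCIe link widths rather than a list taken from the CXL specification.

```python
from enum import Enum


class CxlMode(Enum):
    """Hypothetical labels for the operating modes described in the text."""
    NATIVE = "native"          # x16 at 32 GT/s
    BIFURCATED = "bifurcated"  # x8 or x4 at 32 GT/s
    DEGRADED = "degraded"      # narrower than x4 or slower than 32 GT/s


# Assumption: standard PCIe link widths, not a list from the CXL specification.
VALID_WIDTHS = (1, 2, 4, 8, 16)
MAX_RATE_GT_S = 32.0  # PCIe 5.0 PHY maximum transfer rate


def classify_link(lanes: int, gt_per_s: float) -> CxlMode:
    """Classify a CXL link by lane count and transfer rate (GT/s)."""
    if lanes not in VALID_WIDTHS:
        raise ValueError(f"unsupported link width: x{lanes}")
    if not 0 < gt_per_s <= MAX_RATE_GT_S:
        raise ValueError(f"unsupported transfer rate: {gt_per_s} GT/s")
    # Anything narrower than x4 or slower than 32 GT/s is degraded.
    if lanes < 4 or gt_per_s < MAX_RATE_GT_S:
        return CxlMode.DEGRADED
    # At the full rate, x16 is the native mode; x8 and x4 are bifurcated.
    return CxlMode.NATIVE if lanes == 16 else CxlMode.BIFURCATED


if __name__ == "__main__":
    for lanes, rate in [(16, 32), (8, 32), (4, 32), (16, 16), (2, 32)]:
        print(f"x{lanes} @ {rate} GT/s -> {classify_link(lanes, rate).value}")
```

Checking the degraded condition first keeps the rule in one place: a link only counts as native or bifurcated if it passes both the width and the speed test.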

Table 1 CXL supported operating modes

While CXL has performance advantages for many applications, some devices don’t need such close interaction with the host; they simply signal job submission and completion events, often while working on large data objects or contiguous streams. For such devices, PCIe works well as the accelerator interface and CXL offers no real benefit.

Further information

Introduction to the CXL protocols

Introduction to CXL device types

Author

Gary Ruggles, senior product marketing manager, Synopsys