A vision of autonomous driving

You can buy a car today that drives itself on the highway, and in a few years you will be able to buy one that can drive you almost anywhere that you want to go – autonomously. Between now and then, though, there are a number of steps that the automotive industry needs to take.

Today’s high-end cars already offer a steadily growing set of features, such as advanced driver assistance, lane-keeping, and forward-collision warning systems. The development of these systems is improving passenger and pedestrian safety – and acting as a pathfinder on the route to full autonomy.

The key common feature of these systems is their use of cameras and advanced vision-processing systems to capture, integrate, analyze and act upon the vast amounts of data available from the visual field surrounding a vehicle. Camera resolutions are being enhanced, with a shift from VGA resolutions up to HD, and we’re also seeing an increase in the number of cameras used to provide improved driver awareness of what is going on around their vehicles.

Figure 1 Number and use of cameras in cars today (Source: Synopsys)

Why are higher resolutions being used? They matter when small portions of the visual field have to be examined. For example, a car traveling at 70 mph will cover more than 300 feet in three seconds, but at that distance a person will not be easily distinguished from the background in a VGA image. Shifting up to a 2Mpixel camera will make it possible to recognize a pedestrian in time for the car to warn the driver or even take evasive action, if needed.
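
The arithmetic behind this example can be sketched in a few lines of Python. The 70 mph speed and three-second window come from the text above; the 50-degree horizontal field of view, the 0.5 m pedestrian width, and the 1920-pixel width taken for a 2Mpixel sensor are illustrative assumptions, not figures from any particular camera.

```python
import math

MPH_TO_M_PER_S = 0.44704   # exact: 1609.344 m per mile / 3600 s per hour
M_TO_FT = 1 / 0.3048       # exact: 1 ft = 0.3048 m


def distance_travelled_m(speed_mph, seconds):
    """Distance covered at constant speed, in meters."""
    return speed_mph * MPH_TO_M_PER_S * seconds


def pixels_across_target(h_pixels, hfov_deg, target_width_m, distance_m):
    """Approximate horizontal pixels spanned by a target (simple pinhole model)."""
    scene_width_m = 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)
    return h_pixels * target_width_m / scene_width_m


distance_m = distance_travelled_m(70, 3)
distance_ft = distance_m * M_TO_FT

HFOV_DEG = 50.0            # assumed lens field of view (hypothetical)
PEDESTRIAN_WIDTH_M = 0.5   # assumed pedestrian width (hypothetical)

vga_px = pixels_across_target(640, HFOV_DEG, PEDESTRIAN_WIDTH_M, distance_m)
hd_px = pixels_across_target(1920, HFOV_DEG, PEDESTRIAN_WIDTH_M, distance_m)

print(f"Distance covered in 3 s at 70 mph: {distance_ft:.0f} ft ({distance_m:.1f} m)")
print(f"Pedestrian width in a VGA image:     {vga_px:.1f} px")
print(f"Pedestrian width in a 2Mpixel image: {hd_px:.1f} px")
```

Under these assumptions the pedestrian spans only a handful of pixels in the VGA image and three times as many in the 2Mpixel image, which is the difference the paragraph above describes.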

How about the number of cameras in a car? Current estimates predict that within a few years the average car will have 15 or more cameras. Viewing the input from all of these, while difficult for the driver, will be easy and useful for the car’s electronic systems.

How will these systems evolve? At the moment, a camera in the passenger mirror can show you what is in the lane next to you. The output of this camera could also be examined with a vision processor to determine if there is anything in the lane and warn you if a vehicle is there before you change lanes. This may seem unnecessary if you can see the full blind spot with the camera, but drivers can be distracted, while the vehicle’s electronics cannot be.
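
As a rough illustration of that second use of the mirror camera, the sketch below shows the kind of decision logic that could sit behind a lane-change warning. Everything in it is hypothetical: the `Detection` structure, the region of interest for the adjacent lane, the confidence threshold, and the use of the turn signal as a proxy for "about to change lanes" are assumptions made for the example, not a description of any production system.

```python
from dataclasses import dataclass

VEHICLE_LABELS = {"car", "truck", "motorcycle"}  # assumed label set


@dataclass
class Detection:
    label: str         # class name reported by the vision processor
    confidence: float  # 0.0 to 1.0
    box: tuple         # (x_min, y_min, x_max, y_max) in pixels


def boxes_overlap(a, b):
    """True if two axis-aligned boxes share any area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def blind_spot_warning(detections, lane_roi, turn_signal_on, min_confidence=0.6):
    """Warn only when the driver signals and a confident vehicle detection
    overlaps the image region that covers the adjacent lane."""
    if not turn_signal_on:
        return False
    return any(
        d.label in VEHICLE_LABELS
        and d.confidence >= min_confidence
        and boxes_overlap(d.box, lane_roi)
        for d in detections
    )


# Hypothetical frame: one confident car in the adjacent lane, one weak detection.
lane_roi = (0, 200, 300, 480)
detections = [
    Detection("car", 0.9, (50, 250, 250, 450)),
    Detection("car", 0.3, (400, 100, 450, 150)),
]
print(blind_spot_warning(detections, lane_roi, turn_signal_on=True))
```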

In this example, the camera’s output is shared between the driver and the vehicle’s systems, but there will also be cameras on future cars whose outputs are only seen by the vehicle’s systems – in part to avoid overloading the cognitive powers of the driver. It’s also worth noting here that cameras are quite likely to be working alongside other sensor inputs, from radar, LIDAR or infrared systems, whose outputs will also have to be integrated with vision data streams.

The ability to interpret data from multiple inputs should significantly increase the capabilities and accuracy of automotive systems, at the cost of increasing the computational load on the vision processor.
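
One simple way to see why combining inputs can improve accuracy is inverse-variance weighting, a standard textbook technique for merging two independent measurements of the same quantity. The sketch below applies it to a camera-based and a radar-based range estimate. The numbers are invented for illustration, and real automotive fusion pipelines are considerably more involved.

```python
def fuse_estimates(value_a, var_a, value_b, var_b):
    """Inverse-variance weighted fusion of two independent estimates.

    Returns the fused value and its variance. The less noisy sensor
    gets proportionally more weight.
    """
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused_value = (w_a * value_a + w_b * value_b) / (w_a + w_b)
    fused_var = 1.0 / (w_a + w_b)
    return fused_value, fused_var


# Hypothetical readings for the distance to the same object, in meters.
camera_range, camera_var = 42.0, 4.0 ** 2   # camera: noisier range estimate
radar_range, radar_var = 40.0, 0.5 ** 2     # radar: tighter range estimate

fused_range, fused_var = fuse_estimates(camera_range, camera_var,
                                        radar_range, radar_var)
print(f"Fused range: {fused_range:.2f} m, variance: {fused_var:.3f} m^2")
```

The fused variance is lower than that of either sensor alone, which is the accuracy gain; the extra arithmetic per tracked object, multiplied across every sensor and frame, is a small part of the added computational load.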

Automotive electronics engineers are therefore facing a multifaceted challenge. They need to implement complex vision-based driver-assistance and autonomy systems, using an increasing number of high-resolution cameras and other sensors distributed around their vehicles. The data that these cameras and sensors generate needs to be captured, integrated and analyzed in real time using sophisticated algorithms. All of this has to happen inside a design envelope in which the cost of the vehicle electronics cannot rise and the power budget to operate them is static or shrinking.

One immediate consequence of these constraints is that automotive electronics designers are looking for high-performance vision-processing systems that can be situated in the vehicle alongside the cameras, to avoid buffering and shipping large amounts of real-time data to and from a central processor over heavy, expensive wiring looms.
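
A back-of-the-envelope calculation suggests why shipping raw video to a central processor is unattractive. The sketch below uses the 15-camera figure quoted earlier; the frame rate, the bits per pixel, and the assumption that the video is uncompressed are illustrative choices rather than figures from the article.

```python
def raw_video_bits_per_second(width, height, fps, bits_per_pixel):
    """Uncompressed video data rate in bits per second."""
    return width * height * fps * bits_per_pixel


NUM_CAMERAS = 15       # "15 or more cameras" per the estimate quoted above
FPS = 30               # assumed frame rate (hypothetical)
BITS_PER_PIXEL = 16    # assumed pixel format (hypothetical)

vga_bps = raw_video_bits_per_second(640, 480, FPS, BITS_PER_PIXEL)
hd_bps = raw_video_bits_per_second(1920, 1080, FPS, BITS_PER_PIXEL)
total_hd_bps = NUM_CAMERAS * hd_bps

print(f"One VGA camera:   {vga_bps / 1e9:.2f} Gbit/s")
print(f"One 1080p camera: {hd_bps / 1e9:.2f} Gbit/s")
print(f"{NUM_CAMERAS} 1080p cameras: {total_hd_bps / 1e9:.2f} Gbit/s")
```

Processing next to the sensor means that only results, such as object lists or warnings, need to travel over the vehicle's wiring rather than every pixel of every frame.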

It seems clear that a lot of the data processing in these driver-assistance and autonomy systems will have to be localized with the camera or sensor. But what sort of algorithms will these vision systems be running?

In recent years, automotive vision applications have started using convolutional neural network (CNN) technology, which is loosely modeled on the way our brains identify objects and conditions in visual images. Using offline training programs, CNN graphs can be built to recognize and classify one or more types of object, and these graphs can then be programmed into a vision processor. CNN-based vision is more accurate than other vision algorithms, and is approaching the accuracy and recognition capabilities of humans.
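
The core operation inside a CNN layer is small enough to show directly. The sketch below, in plain Python for readability, slides a 3x3 filter over a tiny image and applies a ReLU activation. The filter here is a hand-written vertical-edge detector chosen for illustration; in a trained CNN the filter weights are learned offline, and a real network stacks many such layers with many filters each.

```python
def conv2d(image, kernel):
    """Valid 2D convolution as used in CNNs (no kernel flip, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Replace negative responses with zero."""
    return [[max(0, v) for v in row] for row in feature_map]


# Tiny grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# Hand-written filter that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = relu(conv2d(image, kernel))
for row in feature_map:
    print(row)
```

Each output value costs nine multiply-accumulate operations here, which hints at why dedicated hardware matters once the image is HD and the network has many layers.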

Synopsys has been investing in vision processors for years, and the DesignWare EV6x family is designed to offer a new level of performance for vision applications. The processors support HD video streams of up to 4K resolution, within a power and cost envelope that is realistic for automotive and other embedded applications.

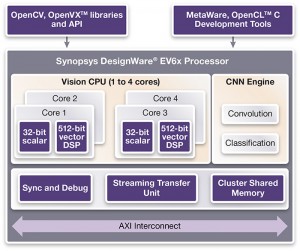

Figure 2 The DesignWare EV6x architecture (Source: Synopsys)

The EV6x processors combine a specialized vision CPU with a dedicated CNN engine that can detect objects or perform scene segmentation on HD video streams of up to 4K resolution.

The vision CPU operates in parallel with the CNN engine to increase throughput and efficiency, and supports the fusion of vision data with other sensor inputs. Each of up to four vision CPU cores has its own 32-bit scalar RISC and 512-bit-wide vector DSP.

The CNN engine is programmable and, with up to 880 multiply-accumulate (MAC) operations per cycle, offers accurate and efficient support for object detection, including advanced capabilities such as semantic segmentation. Training of the CNN is done offline and the resulting graph is then programmed into the CNN engine with graph-mapping tools.
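
To put a MACs-per-cycle figure in context, the sketch below converts it into an upper bound on frames per second. Only the 880 MACs per cycle comes from the text above. The clock frequency, the cost of the CNN graph per frame, and the utilization factor are hypothetical values picked for the example, so the results should not be read as EV6x performance figures.

```python
MACS_PER_CYCLE = 880   # peak figure quoted in the article


def peak_macs_per_second(macs_per_cycle, clock_hz):
    """Peak multiply-accumulate throughput at a given clock rate."""
    return macs_per_cycle * clock_hz


def max_frames_per_second(macs_per_frame, macs_per_cycle, clock_hz, utilization=1.0):
    """Upper bound on frame rate for a CNN graph of known MAC cost."""
    peak = peak_macs_per_second(macs_per_cycle, clock_hz)
    return peak * utilization / macs_per_frame


CLOCK_HZ = 800_000_000          # hypothetical clock frequency
MACS_PER_FRAME = 1_000_000_000  # hypothetical CNN graph cost per frame

peak = peak_macs_per_second(MACS_PER_CYCLE, CLOCK_HZ)
ideal_fps = max_frames_per_second(MACS_PER_FRAME, MACS_PER_CYCLE, CLOCK_HZ)
derated_fps = max_frames_per_second(MACS_PER_FRAME, MACS_PER_CYCLE, CLOCK_HZ,
                                    utilization=0.5)

print(f"Peak throughput: {peak / 1e9:.0f} GMAC/s")
print(f"Frame-rate bound at 100% utilization: {ideal_fps:.0f} fps")
print(f"Frame-rate bound at 50% utilization:  {derated_fps:.0f} fps")
```

In practice memory bandwidth and layer shapes keep utilization below the peak, which is why the sketch includes a derating factor.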

Development tools for the processors include an OpenVX runtime, OpenCV, an OpenCL C compiler and the MetaWare development toolkit. The standard OpenVX kernels have been ported to the processors, and can be combined with functions in the OpenCV library to build vision applications. The MetaWare C/C++ compiler can be used with the OpenCL C vectorizing compiler to ease programming.

Automotive vision is developing quickly because of its potential to enhance safety and simplify driving, and because it is enabled by powerful new processors such as Synopsys’ EV6x family. The novelty of advanced driver assistance systems and, eventually, full autonomy will soon wear off, and in a few years they will become technology that we take for granted.

Michael Thompson is the senior manager of product marketing for the DesignWare ARC processors at Synopsys