Flexible embedded vision processing architectures for machine-learning applications

Machine-learning strategies for vision processing are evolving rapidly, as cloud service providers such as Facebook and Google invest heavily in automating tasks such as object recognition, segmentation and classification. Many deep-learning algorithms, based on multiple-layer convolutional neural networks (CNNs), were developed to work on standard CPUs or GPUs housed in data centers. The utility of these algorithms has grown so quickly that they are now finding applications in embedded systems, such as in vision systems for autonomous vehicles.

The challenge for SoC designers is to specify the right processor for their vision-processing task, within the much tighter power, performance and cost constraints of an embedded system. Designers need to integrate the new machine-learning strategies with support for today’s algorithms and graphs, while being confident that the decisions they make today will scale appropriately to service more complex algorithms and the rapidly increasing computational requirements of higher-quality video streams.

A heterogeneous architecture for heterogeneous tasks

An embedded vision processor now needs to support the established algorithms of machine vision, as well as emerging CNN-based algorithms. This demands a combination of traditional scalar, vector DSP and CNN engines, integrated on one device with synchronization units, a shared bus and shared memory, so that operands and results can be pipelined from processor to processor as efficiently as possible.

It is also important that an embedded processor architecture is flexible, so that it can adapt to serve today’s rapidly evolving algorithms, and scalable, so that a single architecture and code base can serve as the basis of multiple generations of embedded vision processing tasks.

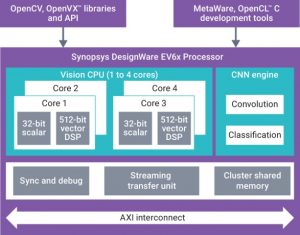

Figure 1 The DesignWare EV6x embedded vision processor IP (Source: Synopsys)

The EV6x family of embedded vision processing IP offers multiple forms of flexibility and scalability, depending on how it is configured at design time and programmed in use.

For example, the heart of the EV6x architecture is the Vision CPU, which includes a 32bit scalar processor and a 512bit vector DSP. The scalar and vector processors can include configurable or optional floating-point units. The three-way, 512bit-wide vector DSP can be configured with single or dual MACs. In the dual-MAC configuration, two of the three 512bit lanes can perform multiply-accumulates; in the single-MAC configuration, only one of them can. The resulting MAC throughput therefore depends on both the configuration and the operand width:

- 32bit MACs (single config) => 16 MAC/cycle

- 16bit MACs (single config) => 32 MAC/cycle

- 16bit MACs (dual config) => 64 MAC/cycle

- 8bit MACs (dual config) => 128 MAC/cycle
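The figures above follow from simple arithmetic: the number of operands that fit in one 512bit lane, multiplied by the number of lanes allowed to issue MACs. The sketch below assumes exactly that simplified model, which reproduces the four listed figures; the function and constant names are illustrative, and whether other width/configuration combinations are supported is not stated here.

```python
VECTOR_LANE_BITS = 512  # width of each vector DSP lane, per the text


def macs_per_cycle(operand_bits: int, mac_lanes: int) -> int:
    """Peak MACs per cycle under a simplified model: operands packed into one
    512bit lane, times the number of lanes that may issue MACs
    (1 = single-MAC configuration, 2 = dual-MAC configuration)."""
    if VECTOR_LANE_BITS % operand_bits:
        raise ValueError("operand width must divide the lane width")
    return (VECTOR_LANE_BITS // operand_bits) * mac_lanes


# The four configurations listed above.
for bits, lanes in [(32, 1), (16, 1), (16, 2), (8, 2)]:
    mode = "dual" if lanes == 2 else "single"
    print(f"{bits}bit MACs ({mode} config) => {macs_per_cycle(bits, lanes)} MAC/cycle")
```

Halving the operand width doubles the operands per lane, and moving from single to dual MAC doubles the lanes doing the work, which is why each step in the list doubles throughput.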

The vector DSP unit also has its own crossbar and vector memory. For greater throughput, users can specify up to four Vision CPU cores, each made up of a scalar and a vector processor.

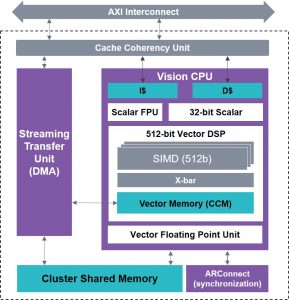

Figure 2 The Vision CPU of the EV6x architecture (Source: Synopsys)

If users specify multiple Vision CPUs, their work can be coordinated by a cache coherency unit, a streaming transfer unit for DMA, shared memory and an ARConnect synchronization block. Multiple EV6x processors can be connected to each other or to a host processor over an AXI bus for even greater scalability.

The scalability of the Vision CPUs gives the flexibility to handle sequential frames of vision data in different ways: with a single CPU, frames have to be sequenced through it one at a time, but with two cores, two frames can be handled in parallel.
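The effect of adding a second core can be seen in a toy scheduling model. The sketch below assumes identical cores, a fixed per-frame processing time, periodic frame arrival and round-robin assignment of frames to cores; all of these, and the function name, are hypothetical simplifications. With one core, a frame that takes longer than the frame period causes a growing backlog; with two cores alternating, the stream is sustained, though each frame still takes the same time to process.

```python
def frame_latencies(num_frames, num_cores, period_ms, process_ms):
    """Latency (arrival to completion) of each frame when frames arrive every
    period_ms and are dealt round-robin to identical cores that each need
    process_ms per frame. A hypothetical model, not EV6x scheduling."""
    core_free_at = [0] * num_cores
    latencies = []
    for i in range(num_frames):
        arrival = i * period_ms
        core = i % num_cores                        # round-robin assignment
        start = max(arrival, core_free_at[core])    # wait if the core is busy
        finish = start + process_ms
        core_free_at[core] = finish
        latencies.append(finish - arrival)
    return latencies


# Frames every 30 ms, each needing 50 ms of processing.
print(frame_latencies(4, num_cores=1, period_ms=30, process_ms=50))
print(frame_latencies(4, num_cores=2, period_ms=30, process_ms=50))
```

In this model the single core's latency grows with every frame, while two cores hold latency at the 50 ms processing time: parallel frames raise throughput, not per-frame speed.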

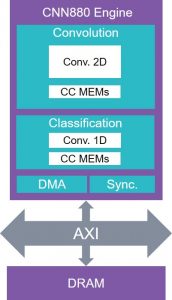

Figure 3 The CNN engine of the EV6x processor IP (Source: Synopsys)

The EV6x’s CNN engine handles real-time image classification, object recognition, detection and semantic segmentation. The base configuration of the CNN engine has 880 MACs, but it can be configured with 1760 or 3520 MACs for more computationally demanding tasks. The engine handles 2D convolutions for the convolution and pooling layers of CNN algorithms, and 1D convolutions for classification. It receives data from external memory via its built-in DMA, and can communicate with the Vision CPUs in the system via an optional Clustered Shared Memory or the AXI bus. At 1.28GHz, on a 16nm finFET process under typical conditions, a fully configured CNN engine should deliver 4.5TMAC/s.
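The 4.5TMAC/s figure is the MAC count multiplied by the clock rate. The sketch below applies the same idealized arithmetic, which assumes every MAC completes one operation per cycle, to all three configurations; only the 3520-MAC result is quoted in the text, and the others are derived from the same formula as upper bounds, not measured numbers.

```python
def peak_tmacs(num_macs: int, clock_ghz: float) -> float:
    """Idealized peak throughput in TMAC/s: every MAC unit busy every cycle.
    num_macs * clock_ghz gives GMAC/s; dividing by 1000 gives TMAC/s."""
    return num_macs * clock_ghz / 1000


for macs in (880, 1760, 3520):
    print(f"{macs} MACs @ 1.28GHz => {peak_tmacs(macs, 1.28):.2f} TMAC/s")
```

Real workloads reach some fraction of this peak, depending on how well layers map onto the engine and how fast data arrives from memory.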

While neural networks are often trained on computers using 32bit floating-point numbers, using smaller bit resolutions in embedded systems reduces power consumption and area. For many CNN tasks, such as object detection, 8bit resolution appears to be sufficient, provided the hardware supports effective quantization techniques. Some newer use cases, such as super resolution and denoising, which are signal-processing tasks, benefit from 12bit CNN resolution.
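Why 12bit helps signal-processing tasks can be seen by quantizing the same values at both widths. The sketch below uses a simple symmetric, per-tensor fixed-point scheme with a sine wave standing in for image data; both are illustrative assumptions, and production quantization flows typically add calibration and finer-grained scaling.

```python
import math


def quantize(values, bits):
    """Symmetric uniform fixed-point quantization: scale floats onto signed
    integers of the given width using the largest magnitude, then map back."""
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(v) for v in values) / qmax
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]


def rms_error(reference, approx):
    """Root-mean-square difference between two equal-length sequences."""
    return math.sqrt(sum((r - a) ** 2 for r, a in zip(reference, approx)) / len(reference))


# One period of a sine wave standing in for smoothly varying pixel values.
signal = [math.sin(2 * math.pi * i / 256) for i in range(256)]
for bits in (8, 12):
    print(f"{bits}bit: RMS error {rms_error(signal, quantize(signal, bits)):.2e}")
```

Each extra bit halves the quantization step, so 12bit has a step roughly 16 times finer than 8bit. That margin matters for tasks like denoising, where the output is itself a fine-grained signal, more than for detection, where only a class or bounding box must come out right.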

Figure 4 (below) illustrates this point by showing how the same denoising algorithm performs when using different number representations. It shows the visual difference between the version of the algorithm that used a 32bit floating-point representation and versions that used 12bit or 8bit fixed-point representations. There’s little difference between the floating-point and 12bit versions, but the 8bit version is visually degraded.

Figure 4 The effect of different number representations on a denoising algorithm

The EV6x CNN engine reflects these insights by using a 12bit MAC, rather than a 16bit one, to strike a balance between accuracy and die area (a 12bit MAC takes about half the area of a 16bit MAC). The convolution and classification engines both have closely coupled memory, designed to store coefficients in compressed form. The CNN engine is designed to support all popular CNN graphs, such as AlexNet, GoogLeNet, ResNet, SqueezeNet, TinyYolo, and SSD. Much research is under way on reducing the memory sizes and computation counts of these graphs to further improve area and power. It is also possible to ‘prune’ neural networks, that is, to ignore nodes whose weightings are relatively low, to save unnecessary calculations. Both feature maps and weighting coefficients can also be compressed to reduce the bandwidth needed to load them from memory.
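Pruning and compression complement each other: pruning creates zeros, and compression avoids storing or moving them. The sketch below uses weight-level magnitude pruning and a simple zero run-length encoding. These are generic textbook techniques chosen for illustration; the threshold, the weight values and the encoding format are hypothetical, and the EV6x’s actual compression format is not described here.

```python
def prune(weights, threshold):
    """Magnitude pruning: zero out weights whose magnitude is below threshold."""
    return [w if abs(w) >= threshold else 0 for w in weights]


def zero_rle(weights):
    """Encode as (zeros_before, value) pairs for each non-zero weight, plus a
    count of trailing zeros. A generic scheme, not the EV6x format."""
    pairs, run = [], 0
    for w in weights:
        if w == 0:
            run += 1
        else:
            pairs.append((run, w))
            run = 0
    return pairs, run


def zero_rle_decode(pairs, trailing):
    """Inverse of zero_rle: expand the runs of zeros back out."""
    out = []
    for run, w in pairs:
        out.extend([0] * run)
        out.append(w)
    out.extend([0] * trailing)
    return out


weights = [12, -3, 0, 45, 2, -1, 0, 0, -30, 4]  # hypothetical 8bit weights
pruned = prune(weights, threshold=5)
pairs, trailing = zero_rle(pruned)
macs_needed = sum(1 for w in pruned if w != 0)  # zero weights need no MAC
print(pruned, pairs, trailing, macs_needed)
```

Here ten weights shrink to three stored values and three MACs. Whether hardware can actually skip the zeroed MACs, rather than just store them compactly, depends on the engine.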

Optimized vision hardware needs development tools that can take advantage of all its features. Development tools for the EV6x processor include support for OpenCV libraries, the OpenVX framework, an OpenCL C compiler, a C/C++ compiler and a CNN mapping tool. The CNN mapping tool is vital for exploring different EV6x configurations, since it can automatically partition graphs across multiple engines. This lets designers explore questions such as whether to run multiple graphs at once on one or more CNN engines, the hardware cost of reducing an algorithm’s latency, and the synchronization overhead of each approach.
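One way to picture what partitioning a graph across engines involves is the classic linear-partition problem: split a chain of layers into contiguous pipeline stages, one per engine, so that the busiest stage is as light as possible. The sketch below solves that simplified problem with a binary search over the bottleneck load. It is a textbook technique, not the algorithm used by the CNN mapping tool; it treats the graph as a simple chain, ignores synchronization and data-transfer overhead, and uses hypothetical per-layer MAC counts.

```python
def stages_needed(layer_macs, max_load):
    """Greedy count of contiguous stages needed if no stage may exceed max_load."""
    stages, load = 1, 0
    for m in layer_macs:
        if load + m > max_load:
            stages += 1
            load = m
        else:
            load += m
    return stages


def partition(layer_macs, num_engines):
    """Split a chain of layers into at most num_engines contiguous stages,
    minimizing the largest per-stage MAC count (the pipeline bottleneck).
    Returns (bottleneck, list of layer-index lists)."""
    lo, hi = max(layer_macs), sum(layer_macs)
    while lo < hi:  # binary search for the smallest feasible bottleneck
        mid = (lo + hi) // 2
        if stages_needed(layer_macs, mid) <= num_engines:
            hi = mid
        else:
            lo = mid + 1
    # Rebuild the stages greedily for the optimal bottleneck.
    stages, current, load = [], [], 0
    for i, m in enumerate(layer_macs):
        if load + m > lo:
            stages.append(current)
            current, load = [], 0
        current.append(i)
        load += m
    stages.append(current)
    return lo, stages


# Hypothetical per-layer MAC counts (millions) for a six-layer network.
print(partition([4, 8, 6, 2, 5, 3], num_engines=2))
```

A real mapping tool must also weigh the cost of moving feature maps between engines and synchronizing them, which can make a less balanced split the better choice.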

Trading power and performance in embedded vision

Another key trade-off in embedded vision is computational performance versus power consumption. This is a complex area to explore. Some customers want to know how much performance they can get within a power budget of less than 1W (even as low as 200mW). Others want to know what performance they can extract from a given die area (perhaps two or four square millimeters). In some application areas, such as automotive, customers want as much performance as possible within a multiple-watt power budget: some are looking for 50TMAC/s from a 10W budget.

Answering these kinds of questions means exploring system issues. For example, you can constrain the processor’s power consumption by reducing its operating frequency, at the cost of lower performance. However, if you’re prepared to dedicate more die area to local memory, you may reduce the system-level power spent going off-chip for coefficients and feature maps, and so gain scope to push the operating frequency back up.
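The memory-versus-frequency argument can be made concrete with a toy linear energy model, in which each frame costs its MAC energy plus the energy of its off-chip traffic. Every parameter below (energy per MAC, energy per DRAM byte, static power, MACs and bytes per frame) is a hypothetical round number chosen for illustration, not EV6x data. The larger local memory is modeled as cutting DRAM traffic while adding some static power.

```python
def max_frame_rate(budget_w, static_w, macs_per_frame, dram_bytes_per_frame,
                   pj_per_mac, pj_per_dram_byte):
    """Frames/s sustainable within a power budget under a linear energy model:
    each frame costs its MAC energy plus its off-chip (DRAM) traffic energy."""
    joules_per_frame = (macs_per_frame * pj_per_mac
                        + dram_bytes_per_frame * pj_per_dram_byte) * 1e-12
    return (budget_w - static_w) / joules_per_frame


def required_clock_ghz(frame_rate, macs_per_frame, macs_per_cycle):
    """Clock needed to reach frame_rate if every MAC unit is busy every cycle."""
    return frame_rate * macs_per_frame / macs_per_cycle / 1e9


# Hypothetical 1W budget: small local memory vs larger local memory that
# cuts DRAM traffic fourfold but adds 50mW of static power.
small = max_frame_rate(1.0, 0.10, 2e9, 20e6, pj_per_mac=0.2, pj_per_dram_byte=20)
large = max_frame_rate(1.0, 0.15, 2e9, 5e6, pj_per_mac=0.2, pj_per_dram_byte=20)
for name, rate in (("small memory", small), ("large memory", large)):
    print(f"{name}: {rate:.0f} frames/s, clock {required_clock_ghz(rate, 2e9, 3520):.2f} GHz")
```

Under these assumptions, the power saved on off-chip traffic more than pays for the extra memory’s static power, leaving budget for a higher clock. With different parameters the balance could tip the other way, which is why the trade-off has to be explored per system.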

Benchmarking

The large number of computations required to execute traditional vision processing and/or CNN algorithms makes benchmarking very difficult. What ‘apples to apples’ comparisons can truly be made? The most reliable way to compare processors is to benchmark them on an FPGA development platform. If algorithms aren’t running on actual hardware with real memory-bandwidth constraints, simulated results are harder to trust.

Support for compressed coefficients and feature maps is another important variable to take into account in benchmarking. If a processor isn’t using compression, then the implication is that its performance could be improved by doing so.
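Why memory bandwidth and compression matter so much in benchmarks can be shown with a roofline-style bound: attainable throughput is the lower of the compute peak and the rate at which operands arrive from memory. The sketch below takes the 4.5TMAC/s peak from the text; the 25.6GB/s bandwidth and the MACs-per-byte figures are hypothetical, and it assumes 2:1 compression halves the bytes moved at no compute cost.

```python
def attainable_tmacs(peak_tmacs, bandwidth_gbs, macs_per_byte):
    """Roofline bound: throughput is capped by either the compute peak or
    memory bandwidth times arithmetic intensity (MACs per byte fetched)."""
    memory_bound_tmacs = bandwidth_gbs * macs_per_byte / 1000  # GMAC/s -> TMAC/s
    return min(peak_tmacs, memory_bound_tmacs)


PEAK = 4.5          # TMAC/s, fully configured CNN engine (from the text)
BANDWIDTH = 25.6    # GB/s, hypothetical off-chip bandwidth
INTENSITY = 100     # MACs per byte, hypothetical for some layer

print(attainable_tmacs(PEAK, BANDWIDTH, INTENSITY))      # uncompressed
print(attainable_tmacs(PEAK, BANDWIDTH, INTENSITY * 2))  # 2:1 compression
```

In this example, the uncompressed case is memory-bound at about 2.56TMAC/s, while compression doubles the effective MACs per byte and lets the engine reach its compute peak. A benchmark run without compression, or in a simulator that idealizes memory, can therefore misstate real-world performance in either direction.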

The EV6x is a digital design, so it can readily be ported to multiple process nodes. However, the process node chosen affects area and power as well as maximum performance.

The future

Pressure from cloud service providers such as Facebook and Google to get better at finding objects in an image, segmenting them, and classifying them, has driven very rapid improvements in CNN architectures. Hardware flexibility will continue to be as important as performance, power and area as new neural networks are introduced. Synopsys’ DesignWare EV6x Embedded Vision Processors are fully programmable to address new graphs as they are developed, and offer high performance in a small area and with highly efficient power consumption.

Gordon Cooper is a product marketing manager for Synopsys’ embedded vision processor family. Cooper has more than 20 years of experience in digital design, field applications and marketing at Raytheon, Analog Devices, and NXP.