The impact of AI on autonomous vehicles

The automotive industry is facing a decade of rapid change as vehicles become more connected, new propulsion systems such as electric motors reach the mainstream, and the level of vehicle autonomy rises.

Many car makers have already responded by announcing pilot projects in autonomous driving. These vehicles will need new cameras, radar and possibly LIDAR modules, plus processors and sensor-fusion engine control units – as well as new algorithms, testing, validation, and certification strategies – to enable true autonomy. According to market analysts IHS Markit, this will drive a 6% compound annual growth rate in the value of the electronic systems in cars from 2016 through to 2023, when it will reach $1,650.

Part of this growth will be driven by a steady increase in the level of autonomy that vehicles achieve over the next 10 to 15 years.

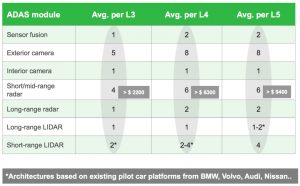

Figure 1 shows the number and value of sensors that leading car makers believe will be necessary to achieve three different levels of autonomy. The cost rises partly because higher levels of autonomy need redundant sensors.

Figure 1 Sensor numbers increase as vehicles become more autonomous (Source: IHS Markit)

The rise of artificial intelligence and deep learning in automotive design

One of the key enablers of vehicle autonomy will be the application of deep-learning algorithms implemented on multi-layer convolutional neural networks (CNNs). These algorithms show great promise in the kind of object recognition, segmentation, and classification tasks necessary for vehicle autonomy.

AI will enable the detection and recognition of multiple objects in a scene, improving a vehicle’s situational awareness. It can also perform semantic analysis of the area surrounding a vehicle. AI and machine learning will also be used in human/machine interfaces, enabling speech recognition, gesture recognition, eye tracking, and virtual assistance.

Before vehicles reach full autonomy, driver assistance systems will be able to monitor drivers for drowsiness by recognizing irregular facial or eye movements and erratic driving behavior. Deep-learning algorithms will analyze real-time data about both the car, such as how it is being steered, and the driver, such as heart rate, to detect behavior that deviates from expected patterns. Autonomous cars using sensor systems trained with deep learning will be able to detect other cars on the road, possible obstructions, and people on the street. They will differentiate between types of vehicle and between vehicle behaviors, for example telling a parked car from one that is just pulling out into traffic.

Most importantly, autonomous vehicles driven by deep-learning algorithms will have to demonstrate deterministic latency, so that the delay between the time at which a sensor input is captured and the time the car’s actuators respond to it is known. Deep learning will have to be implemented within a vehicle’s embedded systems to achieve the low latency necessary for real-time safety.
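To make the latency requirement concrete, the following minimal sketch estimates how far a vehicle travels between sensor capture and actuator response. The stage names and worst-case bounds are hypothetical values chosen for illustration, not figures from any real system; the point is only that a deterministic design lets the worst case be summed and bounded in advance.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Return the distance in metres covered while the system is still reacting."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * latency_ms / 1000.0


def worst_case_latency_ms(stage_bounds_ms: dict) -> float:
    """Sum per-stage worst-case bounds to get an end-to-end worst case."""
    return sum(stage_bounds_ms.values())


# Hypothetical worst-case bounds for each stage, in milliseconds.
stages = {
    "sensor_capture": 10.0,
    "cnn_inference": 20.0,
    "decision": 5.0,
    "actuation": 15.0,
}

total_ms = worst_case_latency_ms(stages)
for speed_kmh in (50.0, 120.0):
    metres = distance_during_latency(speed_kmh, total_ms)
    print(f"{speed_kmh:.0f} km/h, {total_ms:.0f} ms worst case: {metres:.2f} m travelled")
```

If any stage had an unbounded or unknown delay, the sum above could not be computed, which is why known latency matters as much as low latency.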

IHS Markit projects that AI systems will be used to enable advanced driver assistance systems (ADAS) and infotainment applications in approximately 100 million new vehicles worldwide by 2023, up from fewer than 20 million in 2015.

Applying deep learning to object detection

Neural-network techniques are not new, but the availability of large amounts of computing power has made their use much more practical. The use of neural networks is now broken down into two phases: a training phase during which the network is tuned to recognize objects; and an inference phase during which the trained network is exposed to new images and makes a judgement on whether or not it recognizes anything within them. Training is computationally intensive, and is often carried out on server farms. Inference is done in the field, for example in a vehicle’s ADAS system, and so the processors running the algorithm have to achieve high performance at low power.

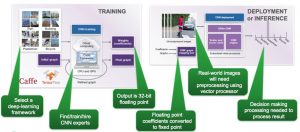

Figure 2 Training and deployment of a CNN (Source: Synopsys)
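The split between training and inference can be illustrated with a deliberately tiny stand-in for a CNN. The sketch below trains a single perceptron (not a convolutional network) on a four-sample toy data set, then uses the frozen weights for inference. The function names and the data set are illustrative assumptions; a real training run involves millions of images and weights.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Training phase: adjust weights until the samples are classified correctly."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            err = target - pred          # 0 when the prediction is already right
            w[0] += lr * err * x1
            w[1] += lr * err * x2
            b += lr * err
    return w, b


def infer(w, b, x):
    """Inference phase: weights are fixed, only a multiply-accumulate and a compare."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


# Toy data set: output is 1 only when both inputs are 1.
training_set = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(training_set)   # done once, offline
print([infer(weights, bias, x) for x, _ in training_set])
```

Note that inference needs only the multiply-accumulate step, which is why embedded hardware can be specialized for it while the iterative weight updates stay on servers.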

The impact of deep learning on design and automotive bills of materials

Some of the key metrics in implementing AI in the automotive sector are TeraMAC/s, latency and power consumption. A TeraMAC/s is a measure of computing performance, equivalent to one thousand billion multiply-accumulate operations per second. Latency is the time from a sensor input to an actuator output, and needs to be on the order of milliseconds in automotive systems, to match vehicle speeds. Power consumption must be low, since automotive embedded systems have limited power budgets: automotive designers may soon demand 50-100 TeraMAC/s computing performance for less than 10W. These issues will be addressed by moving to next-generation processes, and by using optimized neural-network processors.
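As a rough worked example of these metrics, the sketch below converts the 50-100 TeraMAC/s at under 10W target into an efficiency figure and into a per-frame compute budget. The camera count and frame rate are assumptions chosen purely for illustration.

```python
TERA = 1e12  # one TeraMAC/s is 10**12 multiply-accumulates per second


def efficiency_tmacs_per_watt(tmacs: float, watts: float) -> float:
    """Compute efficiency implied by a performance target and a power budget."""
    return tmacs / watts


def macs_per_frame(tmacs: float, cameras: int, fps: float) -> float:
    """MAC operations available for each camera frame at a given throughput."""
    return tmacs * TERA / (cameras * fps)


# Targets quoted in the text: 50-100 TeraMAC/s within a 10 W budget.
for tmacs in (50.0, 100.0):
    eff = efficiency_tmacs_per_watt(tmacs, 10.0)
    print(f"{tmacs:.0f} TMAC/s at 10 W -> {eff:.0f} TMAC/s per watt")

# Assumed sensor setup (hypothetical): 8 cameras at 30 frames per second.
budget = macs_per_frame(50.0, cameras=8, fps=30.0)
print(f"Budget per frame: {budget:.3e} MACs")
```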

Other key aspects of implementing AI and deep learning in automotive applications will include providing fast wireless broadband connections to access cloud-based services, and guaranteeing functional safety through the implementation of ISO 26262 strategies.

Putting deep learning to work

As Figure 2 shows, the output of neural-network training is usually a set of 32-bit floating-point weights. For embedded systems, the precision with which these weights are expressed should be reduced to the lowest number of bits that will maintain recognition accuracy, to simplify the inference hardware and so reduce power consumption. This is also important as the resolution, frame rates and number of cameras used on vehicles increase, which will increase the amount of computation involved in object recognition.
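One common way to reduce weight precision is symmetric linear quantization, sketched below in plain Python. This is an illustrative assumption about how the reduction might be done, not a description of any particular tool's method; the helper names and sample weights are hypothetical.

```python
def quantize_symmetric(weights, bits):
    """Map float weights to signed integers in [-qmax, qmax] with one shared scale."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


def max_error(weights, bits):
    """Largest absolute difference between original and reconstructed weights."""
    quantized, scale = quantize_symmetric(weights, bits)
    restored = dequantize(quantized, scale)
    return max(abs(w - r) for w, r in zip(weights, restored))


# Hypothetical trained weights standing in for a 32-bit floating-point layer.
weights = [0.5, -1.0, 0.25, 0.0, 0.8]

for bits in (12, 8, 4):
    print(f"{bits:>2}-bit weights: max reconstruction error {max_error(weights, bits):.5f}")
```

In practice the acceptable bit width is found by re-running recognition tests on the quantized network, since reconstruction error alone does not say how much accuracy is lost.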

Some of the challenges in applying deep learning are how to acquire a training data set, how to train the system on that data set, and how to implement the resulting inference algorithms efficiently.

A neural network’s output during inference is a probability that what it is seeing matches something it was trained to recognize. The next step is to work out how to make decisions based on that information. The simplest case, recognizing a pedestrian, is now understood, and the next challenge is to work out how that pedestrian is moving. A lot of companies are working on being able to make these higher-level neural-network decisions.
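The step from a probability to a decision can be sketched as follows. This assumes the network's final layer produces raw scores that are converted to probabilities with a softmax, which is a common choice but is not specified in this article; the labels, scores, and the 0.8 threshold are hypothetical.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)                      # subtract the peak for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def decide(labels, scores, threshold=0.8):
    """Return (label, probability), or (None, probability) if confidence is too low."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] >= threshold:
        return labels[best], probs[best]
    return None, probs[best]


labels = ["pedestrian", "car", "background"]

print(decide(labels, [4.0, 1.0, 0.5]))   # one class clearly dominates
print(decide(labels, [1.0, 1.0, 1.0]))   # ambiguous input, no decision made
```

A real system would combine such per-frame results over time, for example to estimate how a detected pedestrian is moving, rather than acting on a single frame.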

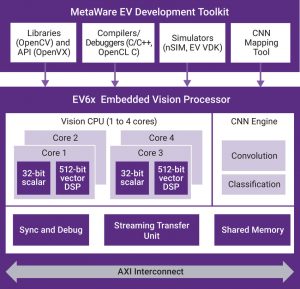

To support these companies, Synopsys has developed embedded-vision processor semiconductor IP, the EV6x family, that combines standard scalar processors and 32-bit vector DSPs into a vision CPU, and is tightly integrated with a CNN engine. The vector processor is highly configurable, can support many vision algorithms, and achieves up to 64 MAC/cycle per vision CPU. Each EV6x can be configured with up to four vision CPUs. The programmable CNN engine is scalable from 880 to 3,520 MACs and can deliver up to 4.5 TMAC/s performance when implemented in a 16nm process.

Figure 3 The EV6x embedded vision processor architecture (Source: Synopsys)
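The quoted CNN engine figures can be related to each other with simple arithmetic. The sketch below assumes that each MAC unit completes one multiply-accumulate per clock cycle and that peak throughput scales linearly with the number of MAC units; neither assumption is stated in this article, so the results are indicative only.

```python
def implied_clock_ghz(peak_tmacs: float, mac_units: int) -> float:
    """Clock rate implied by a peak throughput, assuming one MAC per unit per cycle."""
    return peak_tmacs * 1e12 / mac_units / 1e9


def peak_tmacs(mac_units: int, clock_ghz: float) -> float:
    """Peak throughput for a given number of MAC units at a given clock rate."""
    return mac_units * clock_ghz * 1e9 / 1e12


# Figures quoted in the text: 3,520 MACs delivering up to 4.5 TMAC/s.
clock = implied_clock_ghz(4.5, 3520)
print(f"Implied clock for the largest configuration: {clock:.2f} GHz")

# Smallest quoted configuration at the same assumed clock rate.
print(f"880-MAC configuration at that clock: {peak_tmacs(880, clock):.3f} TMAC/s")
```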

The CNN engine supports standard CNN graphs such as AlexNet, GoogLeNet, ResNet, SqueezeNet, and TinyYolo. The EV6x embedded vision processor IP is supported by a standards-based toolset that includes OpenCV libraries, an OpenVX framework, an OpenCL C compiler, and a C/C++ compiler. There is also a CNN mapping tool to efficiently map a trained graph to the available CNN hardware. The tool offers support for partitioning the graph in different ways, for example to pipeline sequential images through a single CNN engine, or to spread a single graph across multiple CNN engines to reduce latency.

AI and functional safety

AI and neural networks hold great promise in enabling advanced driver assistance and eventually vehicle autonomy. In practice, however, the automotive industry is increasingly demanding that vehicle systems function correctly to avoid hazardous situations, and can be shown to be capable of detecting and managing faults. These requirements are governed by the ISO 26262 functional safety standard, and the Automotive Safety Integrity Levels (ASIL) it defines.

Figure 4 Defining the various levels of Automotive Safety Integrity Level (Source: Synopsys)

Deep-learning systems will need to meet the requirements of ISO 26262. For infotainment systems, it may be straightforward to achieve ASIL B. But as we move towards the more safety-critical levels of ASIL C and D, designers will need to add redundancy to their systems. They’ll also need to develop policies, processes and documentation strategies that meet the rigorous requirements of ISO 26262.

Synopsys offers a broad portfolio of ASIL B and ASIL D ready IP, and has extensive experience in meeting the requirements of ISO 26262. It can advise on developing a safety culture, building a verification plan, and undertaking failure modes, effects, and diagnostic analysis (FMEDA) assessments. Synopsys also has a collaboration with SGS-TUV, which can speed up SoC safety assessment and certifications.

Conclusions

AI and deep learning are powerful techniques for enabling ADAS and greater autonomy in vehicles. Although these techniques may be freely applicable to non-safety-critical aspects of a vehicle, meeting the functional safety requirements for the safety-critical levels of ISO 26262 is challenging. Selecting proven IP, developing a safety culture, creating rigorous processes and policies, and employing safety managers will all help meet the safety requirements. Extensive simulation, validation, and testing, with a structured verification plan, will also be necessary to meet the standard’s requirements.


Author

Gordon Cooper is embedded vision product marketing manager at Synopsys

Gordon Cooper is a product marketing manager for Synopsys’ embedded vision processor family. Cooper has more than 20 years of experience in digital design, field applications and marketing at Raytheon, Analog Devices, and NXP.
