Migrating from single to multicore processing on QorIQ technology

Single-core processors are reaching their performance ceiling because of energy, thermal and power constraints. To get around these design difficulties, many embedded designers are migrating their applications from single core to multicore. This article outlines a software strategy for progressing from one core to two and beyond using Freescale’s QorIQ product line, built on Power Architecture technology.

Moore’s Law losing steam

We have all seen it coming over the last few years: the combined doubling of transistor count and frequency every 18 months is no longer on its planned trajectory. Transistor sizes keep shrinking, so more transistors fit on the same silicon die, but frequency is not keeping in step with Moore’s Law, and that is hindering performance gains in many embedded applications.

Simply put, a single core can be thought of as a single-threaded application: no matter how many tasks are ready to execute, only one of them can run at any instant, because there is only one core to run it. The software developer has to use every software design and optimization tool available to keep that one processor optimally exercised. If more performance is required, some assembly-level tweaking may be necessary.

When megahertz was the only metric that mattered, demand for multicore processors was limited. An embedded application could simply line up all the tasks sequentially and run them in a single thread, counting on processor pipelines and clock frequencies to shove through the application in a brute force fashion. Thermal solutions scaled up to contain the heat generated from so many clock transitions, but power supply designs were struggling to keep up with the current demands of those high frequency processors.

Power versus frequency is not the smooth linear curve that the dynamic power equation, $P_{\text{dynamic}} = \alpha C V^2 f$, appears to suggest. That equation is linear in $f$ only if the supply voltage $V$ stays constant. In practice, reaching a higher clock frequency requires a roughly proportional increase in $V$, so CMOS devices follow the cube-root rule. Under that rule, power grows approximately with the cube of frequency, or equivalently, frequency grows only with the cube root of power. In real life, this produces a hockey-stick curve: the faster the clock, the steeply higher the power. In addition, the smaller the transistor geometries, the greater the static power lost to leakage, which accomplishes no work at all. Dynamic and static power together drive up total energy consumption. Five-gigahertz processors are not a realistic option, and embedded markets cannot count on higher frequencies when energy reduction is becoming a mandatory design requirement.
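To make the hockey stick concrete, the Python sketch below compares the dynamic power of one core clocked twice as fast with that of two cores at the baseline clock. It is a back-of-the-envelope model, not a characterization of any QorIQ device. It assumes supply voltage scales linearly with frequency (the premise behind the cube-root rule) and it ignores static leakage. The function names are hypothetical.

```python
# Illustrative model only. Assumption: V scales linearly with f, so
# P_dynamic = alpha * C * V^2 * f grows as f**3. Leakage is ignored.

def relative_dynamic_power(freq_ratio: float) -> float:
    """Dynamic power of one core, relative to baseline, when its clock
    is scaled by freq_ratio (voltage assumed to scale by the same ratio)."""
    voltage_ratio = freq_ratio  # assumption: V proportional to f
    return voltage_ratio ** 2 * freq_ratio

def cluster_power(n_cores: int, freq_ratio: float) -> float:
    """Total dynamic power of n identical cores, each scaled by freq_ratio."""
    return n_cores * relative_dynamic_power(freq_ratio)

# Both configurations offer 2x the baseline cycles per second.
print(f"1 core  @ 2x clock: {cluster_power(1, 2.0):.1f}x power")  # 8.0x
print(f"2 cores @ 1x clock: {cluster_power(2, 1.0):.1f}x power")  # 2.0x
```

Under these assumptions, doubling the clock of one core costs about four times the power of adding a second core at the original clock.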

Due to governmental regulations and public pressure, power and energy consumption are scrutinized at all system levels. From the passive LRC circuit to the active and dormant states of the SoC, an embedded system designer must ensure these mandates are met at a reasonable cost. Marketing of these systems has also changed tremendously, with vendors playing up their ‘green’ credentials. Core frequencies are no longer the main point of differentiation. Today, energy savings and the user interface are the specs considered worth printing on the box and the datasheet, and clock frequencies have become a footnote.

All components in a system must work together to lower overall energy usage, and a SoC design must especially use all available technological advances to minimize power. One such mechanism is lowering the frequency. However, if an application requires more processing, how can one get the cycles from the processor and minimize the power? The answer is multicore.

Frequency does not equal performance

In today’s embedded markets, customers are beginning to view megahertz as an outdated metric for comparing silicon vendors. Benchmarks based solely on megahertz produce misleading results and lead to poor architectural decisions during the design phase. For example, a simple benchmark that runs entirely in a CPU’s L1 cache will always yield better results at faster clock speeds. But no matter how deeply embedded an application is, interrupts, processor stalls and external memory accesses occur, and the time they consume does not shrink in proportion to the core clock.
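A simple timing model shows why a cache-resident benchmark overstates the value of clock speed. In this Python sketch, the cycle count and the 500 µs stall figure are hypothetical numbers chosen for illustration. The model assumes that stall time (external memory accesses, interrupt handling) is fixed in wall-clock terms and does not shrink as the core clock rises.

```python
# Illustrative model only: run time = core-bound work (scales with clock)
# + stall time (assumed independent of the core clock).

def run_time_us(core_cycles: float, stall_time_us: float, freq_ghz: float) -> float:
    """Run time in microseconds at a given core frequency."""
    cycles_per_us = freq_ghz * 1000.0  # 1 GHz = 1000 cycles per microsecond
    return core_cycles / cycles_per_us + stall_time_us

def speedup(core_cycles, stall_time_us, f_old_ghz, f_new_ghz):
    """How much faster the same work finishes after a clock change."""
    return (run_time_us(core_cycles, stall_time_us, f_old_ghz)
            / run_time_us(core_cycles, stall_time_us, f_new_ghz))

# Cache-resident benchmark: no external stalls, so 2x clock gives 2x speed.
print(speedup(1_000_000, 0.0, 1.0, 2.0))    # 2.0
# Same work plus 500 us of (hypothetical) memory stalls and interrupts.
print(speedup(1_000_000, 500.0, 1.0, 2.0))  # 1.5
```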

Embedded applications have specific benchmarks based on the particular market: sometimes packets per second, sometimes pages per minute or kilowatt-hours. Whatever the pertinent metric, it is imperative that the silicon vendor provide meaningful data based on real-life applications, not contrived code sequences that will never be executed in the real world. That data does not have to come from clock frequencies, and it should not.

Power and performance no longer a tradeoff

At the start of an application’s development there is a tremendous design focus on performance until the specifications are satisfied. Then the software developers’ focus shifts to feature enhancements and bug fixes. Historically, a new architecture required new performance tuning, but once that was done, performance scaled easily with CPU frequency. With frequency gains stalling, the successful software developer must now find another way to increase his or her application’s performance.

Software development is a key component in a successful transition to a multicore device. The most integral parts of an architecture are often those that have taken the most time to develop. These are valuable pieces of intellectual property that a company may be unwilling to abandon for a new core architecture or to open up and modify for porting to a multicore device. With the help of Freescale Semiconductor and QorIQ processors, an embedded designer can gain the desired performance and ease the investment of software development during a transition of architecture.

The ‘simple’ approach is to multithread your application to offload hotspots and tasks that can run in parallel. This is obvious in theory, but monumental in practice. There are decades of investment rolled up in an embedded application built on the sequential nature of single core processors.

We are at an inflection point: an embedded design manager must decide whether, and when, to port software to a multicore processor. Several dependencies must be considered: the operating system (OS), the hardware, the software, the user-space programming model, the complexity, the upgradeability, and the time required to do all this in a future-proof fashion. Architecting properly for dual core can ensure that more than two cores can later be enabled trivially.

Design for multicore

Embedded hardware and software designers need to ask themselves some very important questions:

- Which tasks in your application are a higher priority from the perspective of your company’s value-add?
  - Work on those functions first.
- Which tasks require which peripherals?
  - Partition the I/Os accordingly.
- Which tasks require maximum clock speed?
  - Determine core frequencies based on real application performance requirements, not arbitrary benchmarks.
- Which tasks require sharing between cores?
  - Sharing may complicate the memory maps.
- Which tasks can happen in parallel with other tasks?
  - Identify tasks that are largely orthogonal to (independent of) other tasks.
- Which tasks require more throughput?
  - Determine whether the output is easily replicable.
- Which tasks require less latency?
  - Determine hotspots and opportunities for performance gains within the application.
- Which tasks have specific OS requirements?
  - Determine the real-time requirements, the legacy demands and the end-user requirements for your value-add.
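Once these questions are answered, the per-task estimates can be captured as a simple inventory and turned into a first-cut assignment to cores. The Python sketch below shows one way to do that. It assumes each task's load has already been estimated as a percentage of one core's cycles (the task names and numbers are hypothetical). It uses the greedy longest-processing-time-first heuristic, a standard load-balancing technique, not a Freescale tool.

```python
from dataclasses import dataclass, field

@dataclass
class Task:
    name: str
    load_pct: float  # estimated % of one core's cycles (hypothetical figure)

@dataclass
class Core:
    index: int
    tasks: list = field(default_factory=list)

    @property
    def load(self) -> float:
        """Sum of the estimated loads of the tasks placed on this core."""
        return sum(t.load_pct for t in self.tasks)

def partition(tasks, n_cores):
    """Greedy longest-processing-time-first: place the heaviest remaining
    task on whichever core currently has the least estimated load."""
    cores = [Core(i) for i in range(n_cores)]
    for task in sorted(tasks, key=lambda t: t.load_pct, reverse=True):
        min(cores, key=lambda c: c.load).tasks.append(task)
    return cores

tasks = [Task("ui", 15), Task("network", 30), Task("main_app", 60), Task("logging", 10)]
for core in partition(tasks, 2):
    print(core.index, [t.name for t in core.tasks], core.load)
# 0 ['main_app'] 60
# 1 ['network', 'ui', 'logging'] 55
```

The result is only a starting point. Sharing, peripheral and OS constraints from the other questions may force tasks onto particular cores regardless of load.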

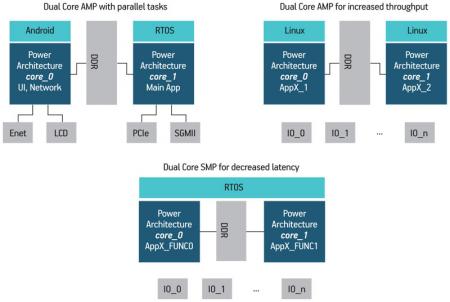

Each answer will help to determine a roadmap for your software development teams that allows for the incremental investment of resources in multicore. Some of the questions will help you gain insight into your specific design requirements, while others will help determine the overarching design strategy. Here, we will focus on three of the questions above, while Figure 1 shows a block diagram approach to implementing a multicore system.

Figure 1: Implementing a multicore system

Which tasks can run in parallel with other tasks?

If an application requires a user interface, a network connection and the main application, then a simple partitioning could put the UI and network on core 0 and the main application on core 1. This option lets you decide which OS each core requires. Is it symmetric multiprocessing (SMP), with one OS running across both cores? Is it asymmetric multiprocessing (AMP), where each core runs its own OS? If it is AMP, do the cores need to communicate or share data? In all cases, distributing lower-priority tasks between cores is an easier and more manageable way to tackle multicore.
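The partitioning decisions in this example can be written down explicitly before any code is ported. The Python sketch below records three things for each core: which OS instance runs there, which tasks it hosts and which peripherals it owns. It then reports whether the plan is SMP or AMP and flags any device claimed by two cores. The structure, field names, OS labels and device names are all hypothetical illustrations, not a QorIQ SDK format.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CorePlan:
    core: int
    os_image: str      # OS instance on this core; cores sharing one instance form an SMP group
    tasks: tuple
    peripherals: tuple  # devices this core's OS owns exclusively (AMP view)

def mode(plans):
    """SMP if a single OS instance spans every core, otherwise AMP."""
    return "SMP" if len({p.os_image for p in plans}) == 1 else "AMP"

def io_owners(plans):
    """Map each peripheral to its owning core, rejecting double claims.
    A device needed by both sides would instead have to be shared explicitly."""
    owner = {}
    for plan in plans:
        for dev in plan.peripherals:
            if dev in owner:
                raise ValueError(f"{dev!r} claimed by core {owner[dev]} and core {plan.core}")
            owner[dev] = plan.core
    return owner

plans = [
    CorePlan(0, "linux-0", ("ui", "network"), ("display", "ethernet")),
    CorePlan(1, "rtos-1", ("main_app",), ("uart1",)),
]
print(mode(plans))       # AMP
print(io_owners(plans))  # {'display': 0, 'ethernet': 0, 'uart1': 1}
```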

Which tasks require more throughput?

If your application can benefit from more throughput, the preferred system topology is simply to run multiple instances of the same application on several cores. As long as each instance’s output does not depend on the other cores’ output, the results are the same as if you were running independent copies of the application on different boards. The OS question from the previous example applies here too. As an analogy, to produce more widgets a factory often simply activates more assembly lines, and putting an idle core to work is like staffing another assembly line.
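The structure of this "more assembly lines" approach can be sketched in Python. The unchanged per-item function is simply run as several independent instances, each on its own share of the input, and because no instance reads another's output the merged result matches a single-instance run. `process_packet` is a hypothetical stand-in for the existing application. One caveat: CPython threads do not execute Python bytecode in parallel, so this shows the partitioning pattern, not the speedup. On the target device, each instance would be a separate process or OS image bound to its own core.

```python
from concurrent.futures import ThreadPoolExecutor

def process_packet(pkt: bytes) -> int:
    # Hypothetical stand-in for the existing single-core application:
    # a 16-bit additive checksum of one packet.
    return sum(pkt) & 0xFFFF

def run_replicated(packets, n_instances):
    """Run n independent copies of the same application, each on every
    n-th input item, then put the results back in input order."""
    shards = [packets[i::n_instances] for i in range(n_instances)]

    def instance(shard):
        # Each instance sees only its own shard and shares no state.
        return [process_packet(p) for p in shard]

    with ThreadPoolExecutor(max_workers=n_instances) as pool:
        shard_results = list(pool.map(instance, shards))

    merged = [0] * len(packets)
    for i, result in enumerate(shard_results):
        merged[i::n_instances] = result  # undo the strided split
    return merged

packets = [bytes([i, i + 1, i + 2]) for i in range(10)]
print(run_replicated(packets, 4) == [process_packet(p) for p in packets])  # True
```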

Which tasks require less latency?

If your application can benefit from lower latency, the system topology is equally simple, but the software development can become a challenge. Software developers need to find the hotspots in the application that can run in separate threads and offload those tasks to the other core or cores. This can require a larger investment in resources and schedule, but the benefits of reduced latency can far outweigh the pain and cost of the transition.
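A rough latency model helps decide whether offloading a hotspot pays off. The Python sketch below makes three assumptions: the hotspot is independent of the rest of the processing path, it splits evenly across the helper cores, and handing work across and collecting the result costs a fixed time. All figures are hypothetical.

```python
# Illustrative latency model for offloading a hotspot to other cores.

def latency_single_core(main_ms: float, hotspot_ms: float) -> float:
    """On one core, the main path and the hotspot run back to back."""
    return main_ms + hotspot_ms

def latency_offloaded(main_ms: float, hotspot_ms: float,
                      helper_cores: int, handoff_ms: float) -> float:
    """The hotspot runs concurrently on helper cores (assumed to split evenly),
    so latency is the longer path plus a fixed inter-core handoff cost."""
    return max(main_ms, hotspot_ms / helper_cores) + handoff_ms

print(latency_single_core(4.0, 6.0))         # 10.0
print(latency_offloaded(4.0, 6.0, 1, 0.5))   # 6.5
print(latency_offloaded(4.0, 6.0, 3, 0.5))   # 4.5
```

The model also shows the limit. Once the hotspot is faster than the main path, adding helper cores no longer reduces latency, and the handoff cost becomes the main term left to attack.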

Moving from dual core to more-than-two can be a relatively trivial exercise, if the software architecture team is cognizant of semiconductor device roadmaps. A strong relationship between the embedded designer and the silicon vendor can ensure architectural alignment.
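One concrete habit that keeps the step from two cores to many trivial is never hard-coding the number of cores. In this Python sketch, `split_work` is a hypothetical helper. The core count is a parameter that would come from a board configuration or from the OS at run time (for example, `os.cpu_count()` in Python), so the same code serves a dual-core part and a quad-core part.

```python
def split_work(items, n_cores):
    """Divide items into n_cores contiguous chunks whose sizes differ by at
    most one. Nothing here assumes n_cores == 2."""
    base, extra = divmod(len(items), n_cores)
    chunks, start = [], 0
    for core in range(n_cores):
        size = base + (1 if core < extra else 0)  # first `extra` cores take one more
        chunks.append(items[start:start + size])
        start += size
    return chunks

work = list(range(10))
print(split_work(work, 2))  # [[0, 1, 2, 3, 4], [5, 6, 7, 8, 9]]
print(split_work(work, 4))  # [[0, 1, 2], [3, 4, 5], [6, 7], [8, 9]]
```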

Why multicore?

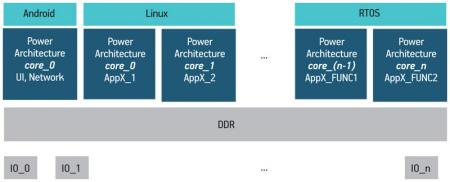

Going back to the questions above, determine the dependencies that are important to your application, plan, then attack. Spread the entire embedded application efficiently across all the cores. For example, use one core for services, a second for control, and the rest for data (Figure 2 also shows an example with more than two cores). Today’s technology will not give you a 5GHz processor to breathe new life into your current single-threaded application. It will, however, give you multiple 1GHz cores on a single SoC. Together those cores can supply 5GHz worth of processing cycles, to the extent the application can be spread across them, in a smaller power envelope, a cooler package and with more integrated capabilities.
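The services/control/data split can be expressed as a role map that works for any core count of three or more. In the Python sketch below, the role map mirrors the example in the text. The choice to pin each data flow to one data core by hashing its identifier (with `zlib.crc32`) is an added assumption. It is one common way to keep a flow's packets in order on a single core, not a method the article prescribes. The flow identifier format is hypothetical.

```python
import zlib

def assign_roles(n_cores: int) -> dict:
    """Core 0 handles services, core 1 handles control, the rest handle data."""
    if n_cores < 3:
        raise ValueError("this layout needs at least three cores")
    roles = {0: "services", 1: "control"}
    roles.update({c: "data" for c in range(2, n_cores)})
    return roles

def data_core_for_flow(flow_id: bytes, roles: dict) -> int:
    """Pick a data core deterministically from a hash of the flow identifier,
    so every packet of one flow lands on the same core."""
    data_cores = sorted(c for c, role in roles.items() if role == "data")
    return data_cores[zlib.crc32(flow_id) % len(data_cores)]

roles = assign_roles(4)
print(roles)  # {0: 'services', 1: 'control', 2: 'data', 3: 'data'}
print(data_core_for_flow(b"flow-a", roles) in (2, 3))  # True
```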

Figure 2: Moving to more than two cores

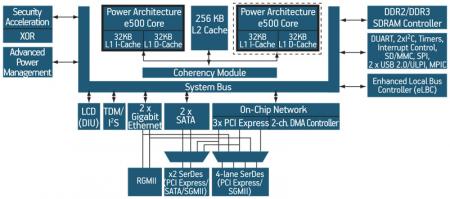

Measurements taken on the QorIQ P1022 processor (Figure 3) show that when an application is architected to take advantage of the second core, performance increases as much as if a 1GHz processor were running at 1.6GHz. This 60% performance increase comes with only a small passive heatsink and at much lower overall power than a single core would need to deliver the same performance.
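As a rough consistency check, the 1.6× figure can be related to the fraction $p$ of the workload that runs in parallel across the two cores. This assumes Amdahl's law, an idealized model that the measurement itself does not confirm, and ignores inter-core overhead:

```latex
S(N) = \frac{1}{(1 - p) + \dfrac{p}{N}}, \qquad S(2) = 1.6
\;\Rightarrow\; (1 - p) + \frac{p}{2} = \frac{1}{1.6} = 0.625
\;\Rightarrow\; 1 - \frac{p}{2} = 0.625
\;\Rightarrow\; p = 0.75
```

Under that model, roughly three quarters of the work would need to run in parallel to reach the measured gain. A fully parallel workload would approach 2×.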

Figure 3: QorIQ P1022 block diagram

Why QorIQ technology?

With QorIQ technology, lower device cost combined with higher levels of integration greatly reduces the bill-of-materials cost compared with single-core predecessors. QorIQ also lets the transition to multicore happen gradually and with confidence, giving software development teams the time they need to migrate carefully and methodically to multicore processors.

There is a transition in the embedded industry that application designers must make. Faster transistors are not the answer to better performance. Smarter use of fast-enough transistors is what delivers a higher-performing application to a satisfied customer. Freescale’s QorIQ product line not only keeps the power-for-performance tradeoff negligible, it also provides hardware built on Power Architecture technology that enables efficient and effective software solutions for your multicore application.

There is no longer any question as to whether embedded applications will move to multicore devices. The question now is, ‘When?’ When do we transition our hardware to multicore, and when do we transition our software? The former is a small monetary investment, whereas the latter can really scare an embedded designer away from multicore. Strong support, an arsenal of examples and thorough documentation, coupled with the appropriate strategy, can take this fear away.

Freescale Semiconductor

6501 William Cannon Drive West

Austin

TX 78735

USA

T: + 1 800 521 6274

W: www.freescale.com/qoriq