Why AI needs security

Artificial intelligence (AI) is creating new waves of innovation and business models, powered by new technology for deep learning and massive growth in investment. As AI becomes pervasive in computing applications, so too does the need for high-grade security at all levels of the system. Protecting AI systems, their data, and their communication is critical for users’ safety and privacy, and for protecting businesses’ investments.

Where and why AI security is needed

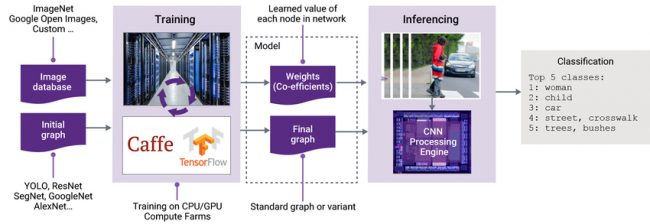

AI applications built around artificial neural networks operate in two basic stages – training and inference (Figure 1). During the training stage, a neural network ‘learns’ to do a job, such as recognizing faces or street signs. The resulting set of weights, representing the strength of interaction between the artificial neurons, is used to configure the neural net as a model. In the inference stage, the end application uses this model to infer information about the data with which it is presented.
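The two stages can be illustrated with a deliberately tiny sketch: a single artificial neuron (a perceptron) trained to compute logical AND. The function names `train` and `infer`, the sample data, and the learning rule are illustrative assumptions, not anything taken from a real product or from Figure 1.

```python
def infer(weights, bias, inputs):
    """Inference stage: apply fixed, already-trained weights to new input."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train(samples, epochs=20):
    """Training stage: nudge the weights until outputs match the labels."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, label in samples:
            error = label - infer(weights, bias, inputs)
            # Classic perceptron update with a learning rate of 1.
            weights = [w + error * x for w, x in zip(weights, inputs)]
            bias += error
    return weights, bias


# Hypothetical training set: the truth table of logical AND.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

# The (weights, bias) pair is the "model" that inference later relies on.
weights, bias = train(AND_SAMPLES)
predictions = [infer(weights, bias, inputs) for inputs, _ in AND_SAMPLES]
```

Even in this toy case, the trained `weights` and `bias` are the only output of training, which is why the weights of a production model are an asset worth protecting.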

Figure 1 The training and inferencing stages of deep learning and AI (Source: Synopsys)

Neural-net training often processes data, such as facial images or fingerprints, that comes from public surveillance, biometric (face and fingerprint recognition), financial, or medical applications. This kind of data is usually private and often contains personally identifiable information. Attackers, whether organized crime groups or business competitors, can take advantage of this information to gain economic or other benefits.

AI systems also face the risk of being sent rogue data to disrupt a neural network’s functionality, for example by encouraging the misclassification of facial-recognition images to allow attackers to escape detection. Companies that protect training algorithms and user data will be differentiated in their fields from companies that face the reputational and financial risks of not doing so. Hence, designers must ensure that data is received only from trusted sources and that it is protected during use.

The models themselves, represented by the neural-net weights that emerge during the training process, are expensive to create and form valuable intellectual property that must be protected against disclosure and misuse. The confidentiality of program code associated with the neural-network processing functions is less critical, although access to it could help an attacker reverse-engineer a product. More importantly, the ability to tamper with this code could result in the disclosure of any assets stored as plaintext inside the system’s security boundary.

Another strong driver for enforcing personal data privacy is the General Data Protection Regulation (GDPR) that came into effect within the European Union on 25 May 2018. This legal framework sets guidelines for the collection and processing of personal information. The GDPR sets out the principles for data-management protection and the rights of the individual, and large fines may be imposed on businesses that do not comply with the rules.

As data and models move between the network edge and the cloud, communications also need to be secured and authenticated. It is important to ensure that data and/or models are protected and can only be downloaded and communicated from authorized sources to authorized devices.

AI security solutions

Product security must be incorporated throughout the product lifecycle, from conceptualization to disposal. As new AI applications and use cases emerge, devices that run these applications must be able to adapt to an evolving threat landscape. To meet high-grade protection requirements, security needs to be multi-faceted and deeply embedded in everything from edge devices that use neural-network processing system-on-chips (SoCs), through the applications that run on them, to communications to the cloud and storage within it.

System designers adding security to their AI products must consider a few foundational functions that protect all phases of operation: offline, during power up, and at runtime, including during communication with other devices or the cloud. Establishing the integrity of the system is essential to creating trust that it is behaving as intended.

Secure bootstrap

Secure bootstrap, an example of a foundational security function, establishes that the software or firmware of the product is intact. This integrity ensures that when a product is coming out of reset, it does what its manufacturer intended – and not something that a hacker has altered it to do. Secure bootstrap systems use cryptographic signatures on the firmware to determine its authenticity. Although they are implemented predominantly in firmware, secure bootstrap systems can use hardware features such as cryptographic accelerators and even hardware-based secure bootstrap engines to achieve greater security and faster boot times. Secure boot schemes can be kept flexible by using public-key signing algorithms enabled by a chain of trust that is traceable to the firmware provider. Public-key signing algorithms also make it possible for a code-signing authority to be replaced by revoking and reissuing the signing keys, if those keys are ever compromised. Security in this case relies on the fact that the root public key is protected by the secure bootstrap system and so cannot be altered. Protecting the public key in hardware ensures that the root of trust identity can be established and cannot be forged.
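The chain-of-trust idea can be sketched in a few lines. Note the simplification: the example below swaps public-key signature verification for plain SHA-256 digest comparison, so that it runs with the Python standard library alone. The immutable `root_digest` stands in for the hardware-protected root public key, and the image layout and function names are assumptions made for illustration.

```python
import hashlib
import hmac

DIGEST_LEN = 32                    # size of a SHA-256 digest in bytes
END_OF_CHAIN = bytes(DIGEST_LEN)   # all-zero header marks the final stage


def package_stages(payloads):
    """Build boot images back to front.

    Each image is [digest of next image | payload], so every stage vouches
    for the stage that follows it. Returns (root_digest, images).
    """
    images = []
    next_digest = END_OF_CHAIN
    for payload in reversed(payloads):
        image = next_digest + payload
        images.append(image)
        next_digest = hashlib.sha256(image).digest()
    images.reverse()
    return next_digest, images     # digest of the first-stage image


def verify_boot_chain(root_digest, images):
    """Check each stage against the digest held by the previous stage."""
    expected = root_digest
    for image in images:
        if expected == END_OF_CHAIN:
            return False           # more images than the chain vouches for
        actual = hashlib.sha256(image).digest()
        if not hmac.compare_digest(actual, expected):
            return False           # stage was altered: refuse to boot
        expected = image[:DIGEST_LEN]
    return expected == END_OF_CHAIN  # reject a truncated chain


# Hypothetical three-stage boot: bootloader, operating system, AI application.
root_digest, images = package_stages([b"bootloader", b"os kernel", b"ai app"])
```

A digest chain like this cannot support key revocation or re-signing by a new authority; those properties come from the public-key signatures described above, which a production design would verify at each stage instead.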

Key management

Even the best encryption algorithms can be compromised if the keys are not protected with key management, which is another foundational security function. For high-grade protection, the secret key material should reside inside a hardware root of trust. Permissions and policies in the hardware root of trust enforce a requirement that application-layer clients can only manage the keys indirectly, through well-defined application programming interfaces (APIs). Continued protection of the secret keys relies on creating ways to authenticate the importation of keys and to wrap any exported keys with another layer of security. One common key management API for embedded hardware secure modules (HSMs) is the PKCS#11 interface, which provides functions for managing policies, permissions, and the use of keys.
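The principle of indirect key access can be sketched as follows: clients receive only opaque handles, and every operation is checked against the permissions attached to the key. The class `RootOfTrustSketch` and its methods are hypothetical and are not the PKCS#11 API; Python also cannot truly hide `_keys` from a caller, which is exactly the isolation that hardware provides. Key wrapping for export is omitted because the standard library has no AES key-wrap primitive.

```python
import hashlib
import hmac
import secrets


class RootOfTrustSketch:
    """Toy model of a key store that never hands out raw key material."""

    def __init__(self):
        self._keys = {}  # handle -> (key bytes, permitted operations)

    def generate_key(self, permissions):
        """Create a key inside the store and return an opaque handle."""
        handle = secrets.token_hex(8)
        self._keys[handle] = (secrets.token_bytes(32), frozenset(permissions))
        return handle

    def _key_for(self, handle, operation):
        """Enforce the per-key policy before any use of the key."""
        key, permissions = self._keys[handle]
        if operation not in permissions:
            raise PermissionError(f"key {handle} may not be used to {operation}")
        return key

    def sign(self, handle, message):
        key = self._key_for(handle, "sign")
        return hmac.new(key, message, hashlib.sha256).digest()

    def verify(self, handle, message, tag):
        key = self._key_for(handle, "verify")
        expected = hmac.new(key, message, hashlib.sha256).digest()
        return hmac.compare_digest(expected, tag)

    def destroy_key(self, handle):
        del self._keys[handle]


rot = RootOfTrustSketch()
signing_handle = rot.generate_key({"sign", "verify"})
verify_only_handle = rot.generate_key({"verify"})
tag = rot.sign(signing_handle, b"model-v1 weights")
```

The application only ever holds `signing_handle`, never the key bytes, so compromising the application layer does not by itself disclose the secret.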

Secure updates

A third foundational function relates to secure updates. AI applications will continue to get more sophisticated, so data and models will need to be updated continuously. The distribution of new models needs to be protected end to end. Hence it is essential that products can be updated in a trusted way to fix bugs, close vulnerabilities, and evolve product functionality. A flexible, secure update function can even be used to allow post-consumer enablement of optional features of hardware or firmware.
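A trusted update path can be sketched as a package that is authenticated before it is installed. Two assumptions are worth stating loudly: the example uses a shared-secret HMAC only because it is available in the Python standard library (a fielded design would more likely use public-key signatures so that devices hold no signing secret), and the rule that rejects older versions is an addition for illustration that the text above does not describe. All names are hypothetical.

```python
import hashlib
import hmac


def _update_tag(key, version, payload):
    """Authenticate the version number together with the payload."""
    message = version.to_bytes(4, "big") + payload
    return hmac.new(key, message, hashlib.sha256).digest()


def make_update(key, version, payload):
    """Provider side: package a new model or firmware image."""
    return {"version": version, "payload": payload,
            "tag": _update_tag(key, version, payload)}


def apply_update(key, installed_version, update):
    """Device side: install only authentic, newer packages."""
    expected = _update_tag(key, update["version"], update["payload"])
    if not hmac.compare_digest(expected, update["tag"]):
        raise ValueError("update failed authentication")
    if update["version"] <= installed_version:
        # Assumed policy: refuse to roll back to an older release.
        raise ValueError("update is not newer than the installed version")
    return update["version"], update["payload"]


# Hypothetical key; in practice it would live inside the root of trust.
UPDATE_KEY = b"\x01" * 32
package = make_update(UPDATE_KEY, 2, b"new model weights")
new_version, new_payload = apply_update(UPDATE_KEY, 1, package)
```

Because the version number is covered by the tag, an attacker cannot relabel an old package as a newer one without invalidating it.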

Protecting data and coefficients

After addressing foundational security issues, designers must consider how to protect the data and coefficients in their AI systems. Many neural network applications operate on audio, still images, video streams, and other real-time data. These large data sets often raise serious privacy concerns, so it is essential to protect the data when it is in working memory or stored locally on disk or flash memory systems. High-bandwidth memory encryption (usually based on AES algorithms) backed by strong key-management solutions is required. Similarly, models can be protected through encryption and authentication, backed by strong key-management systems enabled by a hardware root of trust.

Securing communications

To ensure that communications between edge devices and the cloud are secured and authentic, designers use protocols that incorporate mutual identification and authentication, such as client-authenticated Transport Layer Security (TLS). The TLS session handshake performs identification and authentication, and if successful the result is a mutually agreed shared session key to allow secure, authenticated data communication between systems. A hardware root of trust can ensure the security of the credentials used to complete identification and authentication, as well as the confidentiality and authenticity of the data itself. Communication with the cloud will require high bandwidth in many instances. As AI processing moves to the edge, high-performance security requirements are expected to propagate there as well, including the need for additional authentication, to prevent tampering with the inputs to the neural network, or with any AI training models.
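As a rough illustration of client-authenticated TLS, the sketch below configures both ends with Python's standard `ssl` module. The function names and file-path parameters are hypothetical. Loading credentials from files is also an assumption of this example: on an SoC with a hardware root of trust, the private key would stay inside that boundary rather than in a file.

```python
import ssl


def make_server_context(cert_file=None, key_file=None, client_ca_file=None):
    """Cloud side: present a certificate and demand one from the client."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED on a server is what makes the authentication mutual.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx


def make_client_context(cert_file=None, key_file=None, server_ca_file=None):
    """Edge-device side: verify the server and offer a device certificate."""
    # PROTOCOL_TLS_CLIENT enables certificate and hostname checks by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if server_ca_file:
        ctx.load_verify_locations(cafile=server_ca_file)
    return ctx


# Contexts built without credentials, just to show the enforced policy.
server_ctx = make_server_context()
client_ctx = make_client_context()
```

With real certificates supplied, each context would be passed to `wrap_socket` on its side of the connection, and the handshake would fail unless both peers present certificates that the other side trusts.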

Neural network processor SoC example

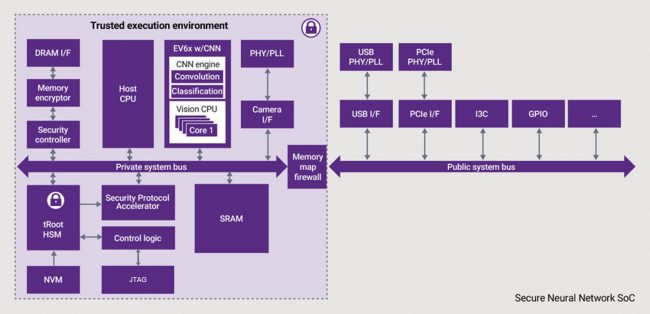

Building an AI system requires high-performance, low-power, area-efficient processors, interfaces, and security. Figure 2 shows a high-level architectural view of a secure neural network processor SoC for AI applications. Neural network processor SoCs can be made more secure when implemented with proven IP, such as Synopsys DesignWare IP.

Figure 2 A trusted execution environment with DesignWare IP helps secure neural network SoCs for AI applications (Source: Synopsys)

Embedded vision processor with CNN engine

Some of the most vigorous development in machine learning and AI at the moment focuses on enabling autonomous vehicles. The EV6x Embedded Vision Processors from Synopsys combine scalar, vector DSP and convolutional neural network (CNN) processing units for accurate and fast vision processing in this and other application areas. They are fully programmable and configurable, combining the flexibility of software solutions with the high performance and low power consumption of dedicated hardware. The CNN engine supports common neural-network configurations, including popular networks such as AlexNet, VGG16, GoogLeNet, YOLO, SqueezeNet, and ResNet.

Hardware secure module with root of trust

Synopsys also offers a highly secure tRoot hardware secure module with root of trust for integrating into SoCs. It provides a scalable platform to enable a variety of security functions in a trusted execution environment, working with one or more host processors. Such functions include secure identification and authentication, secure boot, secure updates, secure debug and key management. tRoot also secures AI devices using unique code-protection mechanisms that provide run-time tamper detection and response, and code-privacy protection, without the cost of dedicated secure memory. This feature reduces system complexity and cost by allowing tRoot’s firmware to reside in non-secure memory space. Commonly, tRoot programs reside in shared system DDR memory. The confidentiality and integrity provisions of tRoot’s secure instruction controller make this memory private to tRoot and impervious to attempts to modify it by other subsystems on or off chip.

Security protocol accelerator

The Synopsys DesignWare Security Protocol Accelerators (SPAccs) are highly integrated embedded-security solutions with efficient encryption and authentication capabilities to provide increased performance, ease of use, and advanced security features such as quality-of-service, virtualization, and secure command processing. SPAccs can be configured to address the security needs of major protocols such as IPsec, TLS/DTLS, Wi-Fi, MACsec, and LTE/LTE-Advanced.

Conclusion

Providers of AI solutions are investing significantly in R&D, and so the neural network algorithms, and the models derived from training them, need to be properly protected. Concerns about the privacy of personal data, which are already being reflected in the introduction of regulations such as GDPR, also mean that it is increasingly important for companies providing AI solutions to secure them as well as possible.

Synopsys offers a broad range of hardware and software security and neural network processing IP to enable the development of secure, intelligent solutions that will power the applications of the new AI era.

Further information

- DesignWare Root of Trust Solutions

- DesignWare Security Protocol Accelerators

- DesignWare EV6x Embedded Vision Processors

Author

Dana Neustadter is product marketing manager for security IP at Synopsys.