Hardware trojan attacks and countermeasures

A hardware trojan is a form of malicious circuitry that damages the function or trustworthiness of an electronic system. Although there are no publicly disclosed examples of a hardware trojan being deployed or exploited without the knowledge of the IC designer, researchers and security experts have identified a number of ways in which such malicious functions can be added to a design and then used.

Some ‘trojans’ have been shown to exist, but they have all taken the form of backdoors placed in the hardware deliberately by the designers, who did not take sufficient precautions to prevent the backdoors from being discovered or exploited by third parties using techniques such as pipeline emission analysis.

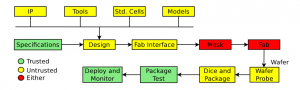

Today’s disaggregated supply chain is seen as being particularly vulnerable to third-party trojan insertion, providing many points at which malicious logic could be added to a design surreptitiously. In a paper for the 2009 IEEE High-Level Design Validation and Test Workshop, Rajat Subhra Chakraborty, Seetharam Narasimhan and Swarup Bhunia of Case Western Reserve University presented a number of scenarios under which trojans could enter an IC-design project. Almost every step was potentially vulnerable, as shown in Figure 1.

Figure 1 Degrees of trust in the disaggregated supply chain (Source: Chakraborty et al)

Trojans could vary widely in what they are able to do. Very simple but difficult-to-detect trojans could be used to weaken the security of a cryptoprocessor by reducing the effective entropy of a random-number generator or by providing information on the internal operation of the processor to an attacker. More complex trojans could subvert the intended behavior of an IC. Other attacks might take the form of a denial of service, shutting the device down once a trigger has been activated.
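Trojans of this kind are commonly described as a trigger, which watches for a rare condition, plus a payload that does the damage. The following is a minimal behavioural sketch of the denial-of-service case, assuming a trigger that waits for a hypothetical three-word sequence on a 32-bit bus. The class name, the sequence and the stand-in for the device's real function are illustrative, not taken from any published trojan.

```python
from dataclasses import dataclass

# Hypothetical trigger: three 32-bit words the attacker can place on the bus.
MAGIC_SEQUENCE = (0xDEADBEEF, 0x0BADF00D, 0xCAFEBABE)


@dataclass
class TrojanedDevice:
    """Toy model: trigger = sequence detector, payload = permanent shutdown."""
    progress: int = 0        # how many words of MAGIC_SEQUENCE matched so far
    disabled: bool = False   # payload state; never cleared once set

    def clock(self, bus_value: int) -> int:
        """Process one bus word and return the device output (0 once disabled)."""
        if self.disabled:
            return 0  # denial of service: the device stops responding
        # Trigger: advance on the next expected word, otherwise restart.
        if bus_value == MAGIC_SEQUENCE[self.progress]:
            self.progress += 1
        else:
            self.progress = 1 if bus_value == MAGIC_SEQUENCE[0] else 0
        if self.progress == len(MAGIC_SEQUENCE):
            self.disabled = True
            return 0
        # Stand-in for the device's legitimate function.
        return bus_value ^ 0xFFFFFFFF


if __name__ == "__main__":
    import random

    rng = random.Random(1)
    dev = TrojanedDevice()
    for _ in range(100_000):          # random stimulus, as in a naive test
        dev.clock(rng.getrandbits(32))
    print("disabled after random test:", dev.disabled)
    for word in MAGIC_SEQUENCE:       # the attacker's trigger sequence
        dev.clock(word)
    print("disabled after trigger:", dev.disabled)   # True
```

Because the payload stays dormant until the exact sequence appears, random stimulus of this kind has essentially no chance of exposing it.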

As SoCs become more complex and incorporate a wider variety of IP cores, the attacker’s ability to hide a trojan increases. For late-stage insertions, however, the risk also increases that the attempt to inject the trojan causes the entire IC to fail because of electrical interactions such as excessive power consumption or crosstalk. Because effective trojans can be implemented in fewer than 200 gates, and potentially in just a couple of gates, detecting them among the tens or hundreds of thousands of logic gates in a design, even one on an older process such as 180nm, is extremely difficult. This was demonstrated in a red-team versus blue-team exercise conducted in 2013 by researchers at ETH Zurich, Switzerland and Graz University of Technology, Austria, and presented at the HASP13 conference.
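Part of the difficulty is simple arithmetic. Assume, purely for illustration, a trigger that compares k internal bits against a fixed value (for k = 32 this fits comfortably within the 200-gate figure above) and assume that random test vectors drive those bits uniformly and independently. Each vector then fires the trigger with probability 2^-k, so N vectors hit it with probability 1 - (1 - 2^-k)^N. The sketch below evaluates that expression; the function names are illustrative.

```python
import math


def p_trigger_hit(trigger_bits: int, vectors: int) -> float:
    """Probability that at least one of `vectors` independent, uniformly random
    test vectors sets `trigger_bits` internal bits to one fixed pattern."""
    p_single = 2.0 ** -trigger_bits
    # 1 - (1 - p)**N, via log1p/expm1 so a tiny p does not round away to zero
    return -math.expm1(vectors * math.log1p(-p_single))


def vectors_needed(trigger_bits: int, confidence: float) -> float:
    """Random vectors required to hit the trigger with probability `confidence`."""
    p_single = 2.0 ** -trigger_bits
    return math.log1p(-confidence) / math.log1p(-p_single)


if __name__ == "__main__":
    for bits in (8, 16, 32, 64):
        print(f"{bits:2d}-bit trigger, 1e9 random vectors: "
              f"P(hit) = {p_trigger_hit(bits, 10**9):.3g}, "
              f"vectors for 50% = {vectors_needed(bits, 0.5):.3g}")
```

With a 32-bit trigger, a billion random vectors give only about a one-in-five chance of firing it, and each additional trigger bit halves the odds.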

How can hardware trojans be inserted?

The most recent CSAW Embedded Systems Challenge, organised by NYU Poly, called on a number of teams to insert trojans successfully at RTL in the face of countermeasures, principally the FANCI technique developed by Adam Waksman and colleagues at Columbia University. However, RTL is not the only delivery mechanism for a hardware trojan. A trojan could, in principle, start as a system-level object that is later converted into rogue RTL, or it could be inserted during layout or even mask preparation.

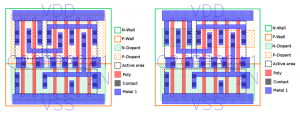

Georg Becker of the Horst Görtz Institute for IT Security in Bochum, Germany, and colleagues presented a technique at the CHES 2013 conference for inserting trojan functions by manipulating the physical design immediately ahead of manufacture. Because the only change was to the dopant polarity within a transistor (n-type for p-type or vice versa), the researchers claimed the trojan would be extremely difficult to detect, especially if inserted post-signoff. A foundry could potentially make the changes immediately before mask production.

Figure 2 Resized p-well used to affect the behavior of an AOI cell (Source: Becker et al)

Previous work by Yuriy Shiyanovskii and coworkers at Case Western Reserve University indicated that dopant concentrations could be changed to shorten device lifetime through effects such as hot-carrier injection and negative-bias temperature instability (NBTI), but this would only allow irreversible denial-of-service attacks.

In the case of the Becker et al attack, an inverter could be rigged to provide the wrong output (for example, Vdd at all times, by switching the p-dopant mask for an n-type version) or have its drive strength altered by reducing the width of the PMOS transistor. Although the possible changes are extremely limited, the researchers argued that they would be sufficient to weaken cryptographic circuitry. One proof-of-concept attack targeted a random number generator similar to the one Intel uses in its Ivy Bridge processors. The team effectively fixed the inputs feeding into two flip-flops in such a way that the chip would still pass its built-in self-test (BIST) checks; scan test had been removed from the Ivy Bridge random number generator for security reasons.
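The principle behind the RNG proof of concept can be shown without any of the physical detail. In the toy model below, which is an assumption-laden sketch rather than Becker et al's circuit, the trojan forces all but 16 bits of a 128-bit seed to a constant known to the attacker, and SHA-256 stands in for the generator's cryptographic post-processing. Because the post-processing still produces well-mixed output, a crude monobit check standing in for BIST passes, yet the attacker can recover the seed by trying all 2^16 possibilities. The constant, the number of free bits and the BIST stand-in are all hypothetical.

```python
import hashlib
import secrets
from typing import Optional

FREE_BITS = 16  # hypothetical: seed bits the trojan leaves random
# Hypothetical attacker-chosen constant for the other 112 bits (low 16 bits clear).
FIXED = 0x5A5A5A5A_5A5A5A5A_5A5A5A5A_5A5A0000


def healthy_seed() -> int:
    """128-bit seed from an (assumed ideal) entropy source."""
    return secrets.randbits(128)


def trojaned_seed() -> int:
    """Same interface, but only FREE_BITS bits of the seed are actually random."""
    return FIXED | secrets.randbits(FREE_BITS)


def output_block(seed: int, counter: int) -> bytes:
    """Post-processing stand-in: SHA-256 over seed and counter (not Intel's design)."""
    return hashlib.sha256(seed.to_bytes(16, "big") + counter.to_bytes(8, "big")).digest()


def monobit_bist(seed: int, blocks: int = 64) -> bool:
    """Crude BIST stand-in: the proportion of 1 bits must be close to one half."""
    data = b"".join(output_block(seed, i) for i in range(blocks))
    ones = sum(bin(byte).count("1") for byte in data)
    return abs(ones / (len(data) * 8) - 0.5) < 0.02


def recover_seed(first_block: bytes) -> Optional[int]:
    """Attacker: brute-force the few free bits against one observed output block."""
    for guess in range(1 << FREE_BITS):
        if output_block(FIXED | guess, 0) == first_block:
            return FIXED | guess
    return None


if __name__ == "__main__":
    seed = trojaned_seed()
    print("BIST stand-in passes:", monobit_bist(seed))
    print("seed recovered:", recover_seed(output_block(seed, 0)) == seed)  # True
```

Each extra free bit doubles the attacker's search, so the exact count matters less than the fact that it is far below the 128 bits the generator appears to provide.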

A second proof-of-concept attack made one specific side-channel attack easier without weakening the design against the most common types of attack.

Although layout-stage trojans are the least likely to be detected, their limited capabilities and the fact that they can be injected at only one point, within the foundry, make them difficult to deploy. Rogue RTL, by contrast, can be injected at a number of points, particularly if multiple third-party IP cores are combined in a single design. In principle, a single design could fall victim to multiple trojans created by different groups of attackers.

Are there countermeasures?

Research into trojan countermeasures has been in progress since the mid-2000s but received a boost in the US at the beginning of 2014, when the Semiconductor Research Corporation (SRC) decided to create and fund the Trustworthy and Secure Semiconductors and Systems (T3S) consortium. The group announced $9m to be used for research over an initial three-year period. In May 2014, SRC convened a workshop to canvass opinions from academia and industry on the direction the countermeasures work should take.

Although trojan countermeasures are far from being able to deal with all possible forms of attack, researchers have identified promising avenues of work.

In principle, hardware trojans are themselves vulnerable to detection by post-manufacture test or by coverage-oriented techniques during the design phase. For example, RTL inserted by an attacker outside normal development processes is unlikely to be targeted by functional tests developed by legitimate members of the team, so the trojan may appear as unexercised, apparently unreachable code within the larger system. However, because the team may not have access to the tests run on IP supplied at RTL by third parties, coverage analysis will be of little help for those cores.

The FANCI technique developed by Adam Waksman and colleagues at Columbia University uses a coverage-like approach in that it identifies logic that is almost unreachable, but it does not require access to any verification stimuli itself.

FANCI uses static, Boolean functional analysis to tag wires that have the potential to be malicious. “The key insight behind our work – one that has been observed in prior works – is that backdoors are nearly always dormant (by design) and thus rely on nearly-unused logic, by which we mean logic that almost never determines the values of output wires,” the authors wrote.
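The idea of nearly-unused logic can be made concrete with control values: for each input in a wire's fan-in, the fraction of input combinations in which toggling that input changes the wire's value. The sketch below computes control values exhaustively for small functions and flags a wire when both the mean and the median fall below a threshold, in the spirit of FANCI's heuristics. The exhaustive enumeration, the threshold of 2^-8 and the example wires are assumptions for illustration; a practical tool has to cope with fan-in cones far too large to enumerate.

```python
from itertools import product
from statistics import mean, median
from typing import Callable, List, Sequence

WireFn = Callable[[Sequence[int]], int]


def control_values(f: WireFn, n_inputs: int) -> List[float]:
    """For each input i, the fraction of assignments to the other inputs in
    which toggling input i changes the wire's value (exhaustive; small n only)."""
    values = []
    for i in range(n_inputs):
        changed = 0
        rows = 0
        for rest in product((0, 1), repeat=n_inputs - 1):
            low = list(rest[:i]) + [0] + list(rest[i:])
            high = list(rest[:i]) + [1] + list(rest[i:])
            changed += f(low) != f(high)
            rows += 1
        values.append(changed / rows)
    return values


def is_suspicious(values: List[float], threshold: float = 2 ** -8) -> bool:
    """Flag a wire whose inputs almost never influence it (mean and median both low)."""
    return mean(values) < threshold and median(values) < threshold


# Hypothetical example wires: a 12-bit trigger comparator, parity and a 2:1 mux.
TRIGGER_PATTERN = (1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0)


def trigger_wire(x: Sequence[int]) -> int:
    return int(tuple(x) == TRIGGER_PATTERN)


def parity_wire(x: Sequence[int]) -> int:
    return sum(x) % 2


def mux_wire(x: Sequence[int]) -> int:
    return x[1] if x[0] == 0 else x[2]  # x[0] selects x[1] or x[2]


if __name__ == "__main__":
    for name, fn, n in [("trigger", trigger_wire, 12), ("parity", parity_wire, 12),
                        ("mux", mux_wire, 3)]:
        cvs = control_values(fn, n)
        print(f"{name:8s} mean={mean(cvs):.5f} suspicious={is_suspicious(cvs)}")
```

Each input of the trigger comparator influences its output in only 1 of 2,048 cases, whereas ordinary logic such as parity or a multiplexer scores 1.0 or 0.5 on every input.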

However, attackers aware of techniques such as FANCI can alter the trojan logic to make it appear more benign.

Side-channel techniques such as pipeline emission analysis have been used to uncover backdoors placed in ICs by their design teams. The same techniques can be used to unmask trojans injected by third parties, although they have the drawback that they cannot be applied until the IC has been manufactured and has potentially entered the supply chain.

One option is to combine detection and repair, as proposed by Matthew Hicks and coworkers from the University of Illinois at Urbana-Champaign and the University of Pennsylvania. This strategy, called BlueChip, uses checks at design time to head off attacks at runtime. During design, any logic not exercised by any verification test is tagged and removed, to be replaced by an exception handler. The handler allows execution to continue so that the system does not crash, while also allowing software to track its progress and potentially take action.
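The design-time half of this strategy can be sketched as a search for logic whose presence never made a difference during verification. The toy model below, loosely in the spirit of the unused-circuit identification step described by Hicks et al rather than a reproduction of it, runs the verification vectors and keeps only candidate (source, sink) signal pairs that were equal in every test. It then wraps the design so that each flagged sink is driven directly from its source and a software-visible handler is called whenever the removed logic would have produced a different value. The design, signal names and handler are hypothetical, and the wrapper keeps evaluating the original logic only to decide when to report, which is a simplification.

```python
from typing import Callable, Dict, Iterable, Set, Tuple

Signals = Dict[str, int]
Pair = Tuple[str, str]

MAGIC_DATA = 0x0F0FC0DE  # hypothetical trojan trigger value


def design(inputs: Signals) -> Signals:
    """Hypothetical RTL model: the privilege bit should simply pass through."""
    priv_in, data = inputs["priv_in"], inputs["data"]
    # Hidden trojan: a magic data word escalates privilege.
    priv_out = 1 if data == MAGIC_DATA else priv_in
    return {"priv_in": priv_in, "data": data, "priv_out": priv_out}


def unused_pairs(model: Callable[[Signals], Signals],
                 tests: Iterable[Signals], candidates: Iterable[Pair]) -> Set[Pair]:
    """Keep (source, sink) pairs whose values were equal in every verification test,
    i.e. the logic between them never changed anything the tests could see."""
    surviving = set(candidates)
    for vector in tests:
        sig = model(vector)
        surviving = {(src, dst) for src, dst in surviving if sig[src] == sig[dst]}
    return surviving


def guarded(model: Callable[[Signals], Signals], pairs: Set[Pair],
            on_trap: Callable[[str, str, Signals], None]) -> Callable[[Signals], Signals]:
    """Replace each flagged sink with a wire from its source; report divergences."""
    def run(inputs: Signals) -> Signals:
        sig = dict(model(inputs))
        for src, dst in pairs:
            if sig[dst] != sig[src]:
                on_trap(src, dst, inputs)   # software can log, emulate or abort
                sig[dst] = sig[src]         # removed logic behaves as a plain wire
        return sig
    return run


if __name__ == "__main__":
    tests = [{"priv_in": p, "data": d} for p in (0, 1) for d in range(256)]
    pairs = unused_pairs(design, tests, [("priv_in", "priv_out"), ("data", "priv_out")])
    print("flagged:", pairs)   # {('priv_in', 'priv_out')}
    safe = guarded(design, pairs, lambda s, d, i: print("trap:", d, "diverged from", s))
    print(safe({"priv_in": 0, "data": MAGIC_DATA})["priv_out"])   # trap reported, then 0
```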

Another approach, developed by Adam Waksman and Simha Sethumadhavan at Columbia University, is to assume that on-chip IP from third parties cannot be trusted and to scramble its inputs so that any trojans buried inside find it difficult to obtain the information they need to act. This may be enough to keep a trojan's triggers from activating. However, the technique would not work for ‘analog’ triggers, in which on-chip PVT sensors put the chip into its malicious state when, for example, it is supplied with a high voltage at a low temperature.
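For blocks that compute on their inputs, the scrambling has to be reversible around the operation the block performs. A minimal sketch for a hypothetical third-party 32-bit adder: both operands are offset by fresh random values before they reach the untrusted core, and the offsets are subtracted from the result, so ((a + r1) + (b + r2)) - (r1 + r2) = a + b modulo 2^32. A trojan waiting for a particular operand value then sees it only by chance, with probability 2^-32 per operation. The adder, its trigger value and this masking scheme are illustrative assumptions rather than the Columbia team's implementation, and nothing of this kind helps against the analog triggers described above.

```python
import secrets

MASK32 = 0xFFFFFFFF
MAGIC_OPERAND = 0x0BADC0DE  # hypothetical trigger value known to the attacker


def untrusted_adder(a: int, b: int) -> int:
    """Third-party 32-bit adder with a hidden trigger on operand a."""
    if a == MAGIC_OPERAND:
        return 0  # payload: silently corrupt the result
    return (a + b) & MASK32


def scrambled_add(a: int, b: int) -> int:
    """Offset both operands with fresh random values, then remove the offsets:
    ((a + r1) + (b + r2)) - (r1 + r2) == a + b  (mod 2**32)."""
    r1, r2 = secrets.randbits(32), secrets.randbits(32)
    raw = untrusted_adder((a + r1) & MASK32, (b + r2) & MASK32)
    return (raw - r1 - r2) & MASK32


if __name__ == "__main__":
    print(hex(untrusted_adder(MAGIC_OPERAND, 1)))  # 0x0: trojan fires
    print(hex(scrambled_add(MAGIC_OPERAND, 1)))    # 0xbadc0df unless r1 happens to be 0
```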

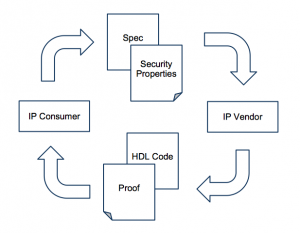

Figure 3 Communication between IP consumer and producer using formal proofs of behavior (Source: Love et al)

In 2011, Eric Love, Yier Jin and Yiorgos Makris of Yale University proposed instead using formal proofs to make it possible to develop trusted IP. The proof would, in effect, show that the IP developer had honored a contract to develop IP that handles a given set of constructs. The technique is analogous to proof-carrying code (PCC), developed in the software world.

Using this technique, both the IP aggregator and the IP producer agree on a formal mathematical description of the functions of the core using a theorem-proving language. In addition to writing the HDL, the IP producer also generates a formal proof that the specified hardware satisfies all of the contractually required properties. This can then be checked by a theorem prover when the IP is delivered. However, this assumes that the proof is sufficiently comprehensive to rule out any injected trojans and that the IP aggregator knows enough about the IP core’s specifications to be able to agree on a set of assertions.
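The delivery-time check can be pictured with a toy example. Here, exhaustive enumeration over all 65,536 input pairs of a hypothetical 8-bit adder core stands in for checking a delivered proof. That substitution is only feasible because the example is tiny; the proof-based approach relies on a theorem prover instead. The core, its `debug` port and the property names are all illustrative. The sketch also shows the caveat in the text: a contract that constrains only the sum accepts a core that leaks data through a port the contract never mentions.

```python
from itertools import product
from typing import Callable, Dict, List

Outputs = Dict[str, int]
Core = Callable[[int, int], Outputs]
Property = Callable[[int, int, Outputs], bool]


def honest_core(a: int, b: int) -> Outputs:
    """Hypothetical 8-bit adder IP with an unused debug port tied to zero."""
    return {"sum": (a + b) & 0xFF, "debug": 0}


def trojaned_core(a: int, b: int) -> Outputs:
    """Correct sum, but the debug port leaks operand a when b is a magic value."""
    return {"sum": (a + b) & 0xFF, "debug": a if b == 0xA5 else 0}


def check_contract(core: Core, contract: Dict[str, Property]) -> List[str]:
    """Consumer-side check: names of agreed properties violated for some input.
    Exhaustive enumeration stands in for verifying a delivered proof."""
    inputs = list(product(range(256), repeat=2))
    return [name for name, prop in contract.items()
            if not all(prop(a, b, core(a, b)) for a, b in inputs)]


WEAK_CONTRACT: Dict[str, Property] = {
    "sum_correct": lambda a, b, o: o["sum"] == (a + b) % 256,
}
STRONG_CONTRACT: Dict[str, Property] = {
    **WEAK_CONTRACT,
    "debug_silent": lambda a, b, o: o["debug"] == 0,
}

if __name__ == "__main__":
    print(check_contract(trojaned_core, WEAK_CONTRACT))    # []: the trojan slips through
    print(check_contract(trojaned_core, STRONG_CONTRACT))  # ['debug_silent']
    print(check_contract(honest_core, STRONG_CONTRACT))    # []
```

The gap between the two contracts is the point: the check is only as good as the set of properties both parties thought to write down.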

In the case of off-the-shelf cores, an accredited third party may need to be recruited to declare that the IP producer’s core is, indeed, safe.