What can FPGA-based prototyping do for you?

This extract from the Synopsys and Xilinx-authored “FPGA-Based Prototyping Methodology Manual” outlines a number of valuable strategies supported by brief project case studies.

The FPMM

The FPGA-Based Prototyping Methodology Manual (FPMM) is a comprehensive and practical guide to using FPGAs as a platform for SoC development and verification. Its chapters follow roughly the same order as the tasks and decisions in an FPGA-based prototyping project. You can read it start-to-finish or, since the chapters are designed to stand alone, you can start reading at any point that is of current interest to you. While the book’s examples refer to Synopsys and Xilinx products, the methodology is applicable to any FPGA-based prototyping project using any tools, boards or FPGAs. Here, we present Chapter Two of the FPMM in its entirety.

Introduction

As advocates for FPGA-based prototyping, we may be expected to be biased toward its benefits and blind to its deficiencies. That is not our intent. The FPGA-Based Prototyping Methodology Manual (FPMM) aims to give a balanced view of the pros and cons of FPGA-based prototyping because we do not want people to embark on a long prototyping project if their aims would be better met by other methods (e.g., a SystemC-based virtual prototype).

Let’s take a deeper look at the aims and limitations of FPGA-based prototyping and its applicability to system-level verification and other goals. By staying focused on the aim of the prototype project, we can simplify our decisions regarding platform, IP usage, design porting, debug and other aspects of design. In this way we can learn from the experience others have had in their projects by examining some examples from prototyping teams around the world.

FPGA-based prototyping for different aims

Prototyping is not a push-button process. It requires a great deal of care and consideration at its different stages. As well as explaining the effort and expertise involved, we should also offer some incentive as to why we should (or maybe should not) perform prototyping during our SoC projects.

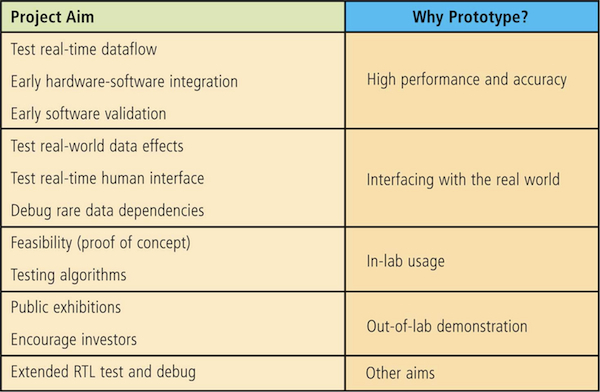

In conversation with prototypers over many years, one question we liked to ask was, “Why do you do it?” There were many answers, but we were able to group them into the general reasons shown in Table 1.

Table 1

General aims and reasons to use FPGA prototypes (Source: Synopsys/Xilinx)

For example, ‘Real-world data effects’ might describe a team that is prototyping in order to have an at-speed model of a system available to interconnect with other systems or peripherals, perhaps to test compliance with a particular new interface standard. Their broad reason to prototype is ‘interfacing with the real world’ and prototyping does indeed offer the fastest and most accurate way to do that in advance of real silicon being available.

A structured understanding of these project aims and why we should prototype will help us to decide if FPGA-based prototyping is going to benefit our next project.

Let us, therefore, explore each of the aims in Table 1 and how FPGA-based prototyping can help achieve them. In many cases, we shall also give examples from the real world and the authors wish to thank in advance those who have offered their own experiences as guides to others in this effort.

High performance and accuracy

Only FPGA-based prototyping provides both the speed and accuracy necessary to properly test many aspects of the design. We put this reason at the top of the list because it is the most likely underlying reason for a team to be prototyping, whatever the stated deliverable aims of the project.

For example, the team may aim to validate some of the SoC’s embedded software and see how it runs at-speed on real hardware, but the underlying reason to use a prototype is for both high performance and accuracy. We could validate the software at even higher performance on a virtual system, but we lose the accuracy that comes from employing real RTL.

Real-time dataflow

Verifying an SoC is hard partly because its state depends upon many variables, including its previous state, the sequence of inputs and the wider system effects (and possible feedback) of the SoC outputs. Running the SoC design at real-time speed connected into the rest of the system allows us to see the immediate effect of real-time conditions, inputs and system feedback as they change.

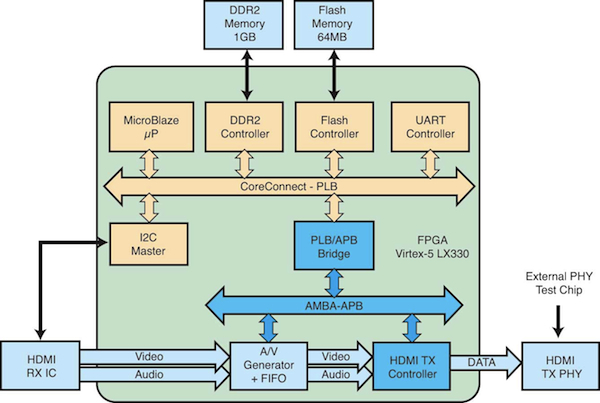

A very good example of real-time dataflow is an HDMI prototype built by the Synopsys IP group in Porto, Portugal. Here, a high-definition (HD) media data stream was routed through a prototype of a processing core and out to an HD display, as shown in the block diagram in Figure 1.

Figure 1

Block diagram of an HDMI prototype (Source: Synopsys/Xilinx)

Notice that across the bottom of the diagram there is audio and HD video dataflow in real time from the receiver (from an external source) through the prototype and out to a real-time HDMI PHY connection to an external monitor.

By using a presilicon prototype, the team could immediately see and hear the effect of different HD data upon its design, and vice versa. Only FPGA-based prototyping allows this real-time dataflow, giving great benefits not only to this type of multimedia application but to many other applications where real-time response to input dataflow is required.

Hardware-software integration

In the above example, readers may have noticed that there is a small MicroBlaze CPU in the prototype along with peripherals and memories, so all the familiar blocks of an SoC are present. In this design the software running in the CPU is used mostly to load and control the A/V processing. However, in many SoC designs it is the software that requires most of the design effort.

Given that software has already come to dominate SoC development, it is increasingly common that the software effort is on the critical path of the project schedule. It is software development and validation that govern the actual completion date when the SoC can usefully reach volume production. In that case, what can system teams do to increase the productivity of software development and validation? To answer this question, we need to see where software teams spend their time.

Modeling an SoC for software development

Software is complex and hard to perfect. We are all familiar with the software upgrades, service packs and bug fixes in our normal day-to-day use of computers. However, in the case of software embedded in an SoC, this perpetual fine-tuning of software is less easily achieved.

On the plus side, the system with which the embedded software interacts, its intended use modes and the environmental situation are all usually easier to determine than for more general-purpose computer software. Furthermore, embedded software for simpler systems can be kept simple itself and so is easier to fully validate. For example, an SoC controlling a vehicle subsystem or an electronic toy can be fully tested more easily than a smartphone running many apps and processes on a real-time operating system (RTOS).

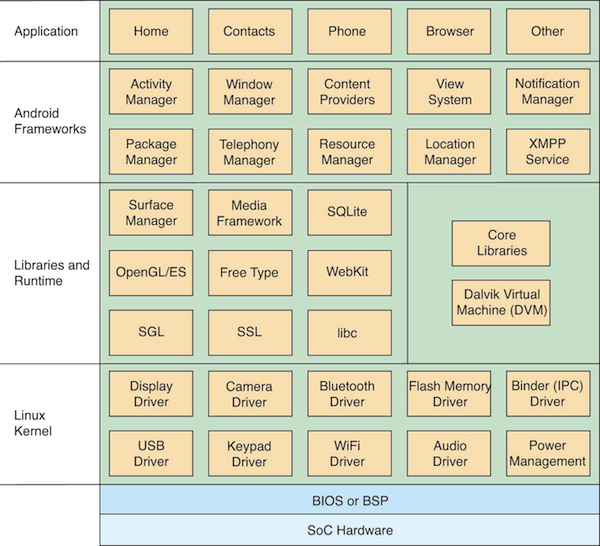

If we look more closely at the software running in such a smartphone, for example the Android software shown in Figure 2, then we see a multilayered arrangement, called a software stack (the diagram is based on an original in the book Unlocking Android by Frank Ableson, Charlie Collins and Robi Sen).

Figure 2

The Android software stack (Source: Unlocking Android)

Taking a look at the stack, we should realize that the lowest levels — i.e., those closest to the hardware — are dominated by the need to map the software onto the SoC hardware. This requires absolute knowledge of the hardware at an address and clock-cycle level of accuracy. Designers of the lowest level of a software stack, often called platform engineers, have the task of describing the hardware in terms that the higher levels of the stack can recognize and reuse. This description is called a board support package (BSP) by some RTOS vendors and is also analogous to the BIOS (basic input/output system) layer in day-to-day PCs.

The next layer up from the bottom of the stack contains the kernel of the RTOS and the necessary drivers to interface the described hardware with the higher-level software. In these lowest levels of the stack, platform engineers and driver developers will need to validate their code on either the real SoC or a fully accurate model of the SoC. Software developers at this level need complete visibility of the behavior of their software at every clock cycle.

At the other extreme for software developers, the top layer of the stack, we find the user space, which may be running multiple applications concurrently. In a smartphone, these could be a contact manager, a video display, an Internet browser and, of course, the phone subsystem that actually makes calls. Each of these does not have direct access to SoC hardware and is actually somewhat divorced from any consideration of the hardware. The applications rely on software running on lower levels of the stack to communicate with the SoC hardware and the rest of the world on its behalf.

We can generalize that, at each layer of the stack, a software developer only needs a model with enough accuracy to fool their own code into thinking it is running in the target SoC. More accuracy than necessary will only result in the model running more slowly on the simulator. In effect, SoC modeling at any level requires us to represent the hardware and the stack up to the layer just below the current level to be validated. Optimally, we should work with just enough accuracy to allow maximum performance.

For example, application developers at the top of the stack can test their code on the real SoC or on a model. In this case, the model need only be accurate enough to fool the application into thinking that it is running on the real SoC; it does not need cycle accuracy or fine-grained visibility of the hardware. However, speed is important because multiple applications will be running concurrently and interfacing with real-world data in many cases.

This approach of the model having ‘just enough accuracy’ for the appropriate software layer leads to a number of different modeling environments being used by different software developers at different times during an SoC project. It is possible to use transaction-level simulations, modeled in languages such as SystemC, to create a simulator model that runs with low accuracy but at high enough speed to run many applications together. If handling of real-time, real-world data is not important, then we might do better to consider such a virtual prototyping approach.

However, FPGA-based prototyping becomes most useful when the whole software stack must run together or when real-world data must be processed.

Example: prototype usage for software validation

Only FPGA-based prototyping breaks the inverse relationship between accuracy and performance inherent in modeling methodologies. By using FPGAs we can achieve speeds up to real-time and yet still be modeling at a level of full RTL cycle accuracy. This enables the same prototype to be used not only for the accurate models required by low-level software validation but also for the high-speed models needed by the high-level application developers. Indeed, the whole SoC software stack can be modeled on a single FPGA-based prototype.

A very good example of this software validation using FPGAs was seen in a project performed by Scott Constable and his team at Freescale Semiconductor’s Cellular Products Group in Austin, Texas.

Freescale was very interested in accelerating SoC development because short product life cycles in the cellular market require that products get to market quickly, not only to beat the competition but also to avoid becoming rapidly obsolete. Analyzing the biggest time sinks in its flow, Freescale decided that most benefit would be achieved by accelerating cellular 3G protocol testing. If it could be performed presilicon, the company would save months in a project schedule. Given product lifetimes of only one or two years, this is very significant indeed.

Protocol testing is a complex process that even at high real-time speeds requires a day to complete. Using RTL simulation would take years and even running on a faster emulator would take weeks; neither was a practical solution. FPGAs were chosen because they represented the only way to achieve the necessary clock speed to complete the testing in a timely manner.
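The scaling argument above can be made concrete with a rough calculation. The following is a minimal sketch only: the clock rates are hypothetical round numbers chosen to illustrate the orders of magnitude involved, not figures from the Freescale project, and `wall_clock_days` is a helper name introduced here for illustration.

```python
SECONDS_PER_DAY = 86_400

def wall_clock_days(test_days_at_speed, target_hz, platform_hz):
    """Days needed to replay the same number of clock cycles on another platform."""
    cycles = test_days_at_speed * SECONDS_PER_DAY * target_hz
    return cycles / platform_hz / SECONDS_PER_DAY

# Hypothetical effective clock rates (assumptions, for illustration only).
TARGET_HZ = 50e6
PLATFORMS = {
    "rtl_simulator": 1e3,         # assumed: around a kilohertz for a large design
    "emulator": 1e6,              # assumed: around a megahertz
    "fpga_prototype": TARGET_HZ,  # assumed: runs at the real-time rate
}

# A test suite that takes one day when run at real-time speed.
estimates = {
    name: wall_clock_days(1.0, TARGET_HZ, hz) for name, hz in PLATFORMS.items()
}

for name, days in estimates.items():
    print(f"{name}: {days:,.0f} days")
```

With these assumed rates the simulator estimate runs to many years and the emulator estimate to several weeks, which is the same pattern the text describes; different assumptions would shift the numbers but not the ordering.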

Protocol testing requires the development of various software aspects of the product, including hardware drivers, operating system and protocol stack code. While the main goal was protocol testing, the use of FPGAs meant that all of these software developments would be accomplished presilicon, greatly accelerating various end-product schedules.

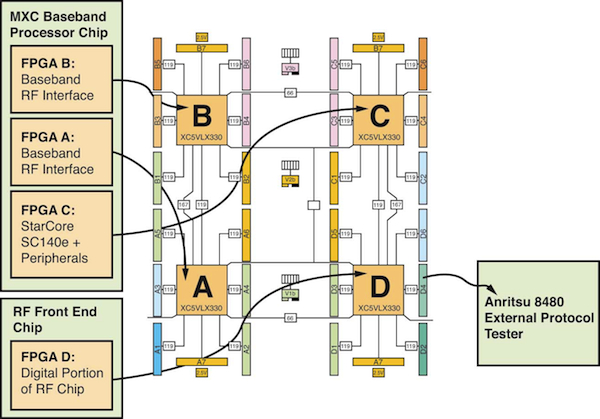

Freescale prototyped a multichip system that included a dual-core MXC2 baseband processor plus the digital portion of an RF transceiver chip. The baseband processor included a Freescale StarCore DSP core for modem processing and an ARM926 core for user application processing, plus more than 60 peripherals.

A Synopsys HAPS-54 prototype board was used to implement the prototype, as shown in Figure 3.

Figure 3

The Freescale SoC design partitioned into a HAPS-54 board (Source: Synopsys/Xilinx/Freescale Semiconductor)

The baseband processor was more than 5 million ASIC gates and Scott’s team used Synopsys Certify tools to partition this into three of the Xilinx Virtex-5 FPGAs on the board, while the digital RF design was placed in a fourth.

Freescale decided not to prototype the analog section but instead delivered cellular network data in digital form directly from an Anritsu protocol test box.

Older cores use some design techniques that are very effective in an ASIC, but which are not very FPGA friendly. In addition, some of the RTL was generated automatically from system-level design code, which can also be fairly unfriendly to FPGAs owing to overly complicated clock networks.

In Freescale’s case, some modifications therefore had to be made to the RTL to make it more FPGA compatible, but the rewards were significant. Besides accelerating protocol testing, by the time engineers received first silicon they were able to:

- release debugger software with no major revisions after silicon;

- complete driver software;

- boot up the SoC to the OS software prompt; and

- achieve modem camp and registration.

The team was able to reach the milestone of making a cellular phone call through the system only one month after receipt of first silicon, accelerating the product schedule by more than six months.

Scott Constable said, “In addition to our stated goals of protocol testing, our FPGA system prototype delivered project schedule acceleration in many other areas, proving its worth many times over. And perhaps most important was the immeasurable human benefit of getting engineers involved earlier in the project schedule, and having all teams from design to software to validation to applications very familiar with the product six months before silicon even arrived. The impact of this accelerated product expertise is hard to measure on a Gantt chart, but may be the most beneficial.

“In light of these accomplishments, using an FPGA prototype solution to accelerate ASIC schedules is a no-brainer. We have since spread this methodology into the Freescale Network and Microcontroller Groups and also use prototypes for new IP validation, driver development, debugger development and customer demos.”

This example shows how FPGA-based prototyping can be a valuable addition to the software team’s tool box and brings a significant return on investment in terms of product quality and project time scales.

Interfacing benefit: test real-world data effects

It is hard to imagine an SoC design that does not comply with the basic structure of having input data upon which some processing is performed in order to produce output data. Indeed, if we push into the SoC design we will find numerous sub-blocks that follow the same structure, and so on down to the individual gate level.

Verifying the correct processing at each of these levels requires us to provide a complete set of input data and to observe that the correct output data are created as a result of the processing. For an individual gate this is trivial, and for small RTL blocks it is still possible. However, as the complexity of a system grows it soon becomes statistically impossible to ensure completeness of the input data and initial conditions, especially when there is software running on more than one processor.
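To give a feel for how quickly exhaustive testing becomes impractical, here is a small back-of-the-envelope sketch. The bit widths and vector rates are hypothetical, and `exhaustive_years` is a name introduced here for illustration; the model simply assumes that every input value must be applied once in every possible starting state.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600

def exhaustive_years(input_bits, state_bits, vectors_per_second):
    """Years needed to apply every input value in every starting state once."""
    combinations = 2 ** (input_bits + state_bits)
    return combinations / vectors_per_second / SECONDS_PER_YEAR

# Hypothetical small RTL block: 8 input bits, 8 bits of internal state.
small_block = exhaustive_years(input_bits=8, state_bits=8, vectors_per_second=1e6)

# Hypothetical larger block: 32 input bits, 64 bits of state, a much faster testbench.
larger_block = exhaustive_years(input_bits=32, state_bits=64, vectors_per_second=1e9)

print(f"small block:  {small_block * SECONDS_PER_YEAR:.3f} seconds")
print(f"larger block: {larger_block:.2e} years")
```

Even the "larger" block here is tiny compared with a real SoC, yet under these assumptions exhaustive stimulus is already far out of reach, which is why completeness cannot be the goal.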

There has been huge research and investment in order to increase the efficiency and coverage of traditional verification methods and to overcome the challenge of this complexity. At the complete SoC level, we need to use a variety of different verification methods to cover all the likely combinations of inputs and to guard against unlikely combinations.

This last point is important because unpredictable input data can upset all but the most carefully designed critical SoC-based systems. The very many possible previous states of the SoC coupled with new input data, or with input data of an unusual combination or sequence, can put an SoC into a nonverified state. Of course, that may not be a problem and the SoC recovers without any other part of the system, or indeed the user, becoming aware.

However, unverified states are to be avoided in final silicon and so we need ways to test the design as thoroughly as possible. Verification engineers use powerful methods such as constrained-random stimulus and advanced test harnesses to perform a wide variety of tests during functional simulations of the design, aiming to reach acceptable coverage. However, completeness is still governed by the direction and constraints given by the verification engineers and the time available to run the simulations themselves. As a result, constrained-random verification is never fully exhaustive though it does greatly increase confidence that we have tested all combinations of inputs, both likely and unlikely.
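As a rough illustration of the idea, the sketch below generates constrained-random bus transactions and measures how many coverage bins they hit. It is a toy model written in Python rather than a real verification language, and every name in it (the transaction fields, `ADDR_LIMIT`, the burst lengths, the coverage bins) is a hypothetical choice made for this example.

```python
import random
from itertools import product

BURSTS = (1, 4, 8, 16)   # hypothetical legal burst lengths, in words
ADDR_LIMIT = 0x1000      # hypothetical 4 KiB peripheral window

def random_transaction(rng):
    """Draw one bus transaction that satisfies the constraints."""
    burst = rng.choice(BURSTS)
    # Constraints: word-aligned address, and the burst must stay inside the window.
    max_word = (ADDR_LIMIT // 4) - burst
    addr = rng.randint(0, max_word) * 4
    return {"addr": addr, "burst": burst, "write": rng.random() < 0.5}

def coverage(transactions):
    """Fraction of (burst, write, address-quarter) bins hit at least once."""
    all_bins = set(product(BURSTS, (False, True), range(4)))
    hit = {
        (t["burst"], t["write"], t["addr"] * 4 // ADDR_LIMIT) for t in transactions
    }
    return len(hit & all_bins) / len(all_bins)

rng = random.Random(2011)  # fixed seed so a failing run can be reproduced
txns = [random_transaction(rng) for _ in range(200)]

for n in (10, 50, 200):
    print(f"after {n:3d} transactions: {coverage(txns[:n]):.0%} of bins covered")
```

The constraints keep every transaction legal, while the coverage figure shows only what the chosen bins measure. Anything the engineer did not think to constrain or to bin remains untested, which is the limitation described above.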

In order to guard against corner-case combinations, we can complement our verification results with observations of the design running on an FPGA-based prototype in the real world. By placing the SoC design into a prototype, we can run at a speed and accuracy that compare very well with final silicon, allowing ‘soak’ testing within the final ambient data.

One example of this immersion of the SoC design into a real-world scenario was the use made of FPGA-based prototyping by DS2 in Valencia, Spain.

Example: immersion in real-world data

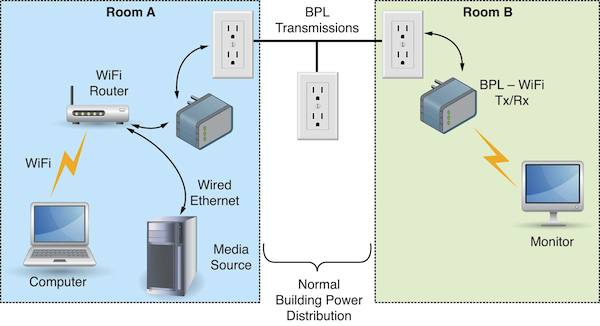

Broadband-over-powerline (BPL) technology uses normally undetectable signals to transmit and receive information over electrical-mains power lines. A typical use of BPL is to distribute HD video around a home from a receiver to any display via the mains wiring, as shown in Figure 4.

Figure 4

Broadband-over-powerline technology used in a WiFi range extender (Source: Synopsys/Xilinx/DS2)

At the heart of DS2’s BPL designs lie sophisticated hardware and embedded software algorithms, which encode and retrieve the high-speed transmitted signal into and out of the power lines. These power lines can be very noisy electrical environments, so a crucial part of the development is to verify these algorithms in a wide variety of real-world conditions.

Javier Jimenez, ASIC design manager at DS2, explained what FPGA-based prototyping did for the company: “It is necessary to use a robust verification technology in order to develop reliable and high-speed communications. It requires very many trials using different channel and noise models, and only FPGA-based prototypes allow us to fully test the algorithms and to run the design’s embedded software on the prototype. In addition, we can take the prototypes out of the lab for extensive field testing. We are able to place multiple prototypes in real home and workplace situations, some of them harsh electrical environments indeed. We cannot consider emulator systems for this purpose because they are simply too expensive and are not portable.”

This usage of FPGA-based prototyping outside of the lab is instructive because we see that making the platform reliable and portable is crucial to success.

Benefits for feasibility lab experiments

At the beginning of a project, fundamental decisions are made about chip topology, performance, power consumption and on-chip communication structures. Some of these are best made using algorithmic or system-level modeling tools, but some extra experiments could also be performed using FPGAs. Is this really FPGA-based prototyping? We are using FPGAs to prototype an idea, but it is different from using algorithmic or mathematical tools because we need some RTL, perhaps generated by those high-level tools. Once in the FPGA, however, early information can be gathered to help drive the optimization of the algorithm and the eventual SoC architecture. The extra benefit that FPGA-based prototypes bring to this stage of a project is that more accurate models can be used, which can run fast enough to interact with real-time inputs.

Experimental prototypes of this kind are worth mentioning, as they are another way to use FPGA-based prototyping hardware and tools in between full SoC projects, hence deriving further return on our investment.

Prototype usage out of the lab

One unique aspect of FPGA-based prototyping for validating SoC design is its ability to work standalone. This is because the FPGAs can be configured, perhaps from a flash EEPROM card or other self-contained medium, without supervision from a host PC. The prototype can therefore run standalone and be used for testing the SoC design in situations quite different from those provided by other modeling techniques, such as emulation, which rely on host intervention.

In extreme cases, the prototype might be taken completely out of the lab and into real-life environments in the field. A good example of this might be the ability to mount the prototype in a moving vehicle and explore the dependency of a design on variations in external noise, motion, antenna field strength and so forth. For example, the authors are aware of mobile-phone baseband prototypes that have been placed in vehicles and used to make on-the-move phone calls through a public GSM network.

Chip architects and other product specialists need to interact with early-adopter customers and demonstrate key features of their algorithms. FPGA-based prototyping can be a crucial benefit at this very early stage of a project, but the approach is slightly different from mainstream SoC prototyping.

Another very popular use of FPGA-based prototypes out of the lab is for preproduction demonstrations of new product capabilities at trade shows. Let’s consider a use of FPGA-based prototyping by the research-and-development division of the BBC, the UK public broadcaster, that illustrates both out-of-lab usage and use at a trade show.

Example: A prototype in the real world

The powerful ability of FPGAs to operate standalone is demonstrated by a BBC Research & Development project to launch DVB-T2 in the United Kingdom. DVB-T2 is an open standard that allows HD television to be broadcast from terrestrial transmitters.

Like most international standards, the DVB-T2 technical specification took several years to complete and some 30,000 engineer-hours involving researchers and technologists from all over the world. Only FPGA-based prototyping offered the flexibility required in case of changes along the way. The specification was frozen in March 2008 and published three months later as a DVB Blue Book on June 26.

Because the BBC was using FPGA-based prototyping, in parallel with the specification work, an implementation team, led by BBC R&D’s Justin Mitchell, was able to develop a hardware-based modulator and demodulator for DVB-T2.

The modulator was based on a Synopsys HAPS-51 card with a Virtex-5 FPGA from Xilinx. The HAPS-51 card was connected to a daughtercard that was designed by BBC R&D. This daughtercard provided an ASI interface to accept the incoming transport stream. The incoming transport stream was then passed to the FPGA for encoding according to the DVB-T2 standard and passed back to the daughtercard for direct upconversion to UHF.

The modulator was used for the world’s first DVB-T2 transmissions from a live TV transmitter, starting on the same day that the specification was published.

The demodulator, also using HAPS as a base for another FPGA-based prototype, completed the working end-to-end chain and this was demonstrated at the IBC broadcast technology exhibition in Amsterdam in September 2008, all within three months of the specification being agreed upon. This was a remarkable achievement and helped to build confidence that the system would be ready to launch in 2009.

BBC R&D also contributed to other essential strands of the DVB-T2 project, including a very successful plugfest in Turin in March 2009, at which five different modulators and six demodulators were shown to work together in a variety of modes. The robust and portable construction of the BBC’s prototype made it ideal for this kind of plugfest event.

Justin Mitchell had this to say about FPGA-based prototyping: “One of the biggest advantages of the FPGA was the ability to track late changes to the specification in the run-up to the transmission launch date. It was important to be able to make quick changes to the modulator as changes were made to the specification. It is difficult to think of another technology that would have enabled such rapid development of the modulator and demodulator and the portability to allow the modulator and demodulator to be used standalone in both a live transmitter and at a public exhibition.”

What can’t FPGA-based prototyping do for you?

We started with the aim of giving a balanced view of the benefits and limitations of FPGA-based prototyping, so it is only right that we should highlight here some weaknesses to balance against the previously stated strengths.

First and foremost, an FPGA prototype is not an RTL simulator. If our aim is to write some RTL and then implement it in an FPGA as soon as possible in order to see if it works, then we should think again about what is being bypassed. A simulator has two basic components; think of them as the engine and the dashboard. The engine has the job of stimulating the model and recording the results. The dashboard allows us to examine those results. We might run the simulator in small increments and make adjustments via our dashboard; we might use some very sophisticated stimulus, but that’s pretty much what a simulator does. Can an FPGA-based prototype do the same thing? The answer is no.

It is true that the FPGA is a much faster engine for running the RTL “model,” but when we add in the effort to set up that model, then the speed benefit is soon swamped. On top of that, the dashboard part of the simulator offers complete control of the stimulus and visibility of the results. We shall consider ways to instrument an FPGA in order to gain some visibility into the design’s functionality, but even the most instrumented design offers only a fraction of the information that is readily available in an RTL simulator dashboard. The simulator is therefore a much better environment for repetitively writing and evaluating RTL code and so we should always wait until the simulation is mostly finished and the RTL is fairly mature before passing it over to the FPGA-based prototyping team.

An FPGA-based prototype is not ESL

Electronic system-level (ESL) or algorithmic tools such as Synopsys’ Innovator or Synphony allow designs to be entered in SystemC or to be built from a library of predefined models. We then simulate these designs in the same tools and explore their system-level behavior including running software and making hardware-software trade-offs at an early stage of the project.

To use FPGA-based prototyping we need RTL; it is therefore not the best place to explore algorithms or architectures that are not often expressed in RTL. The strength of FPGA-based prototyping for software comes when the RTL is mature enough to allow the hardware platform to be built; then software can run in a more accurate and real-world environment. There are those who have blue-sky ideas and write a small amount of RTL for running in an FPGA for a feasibility study. This is a minor but important use of FPGA-based prototyping, but is not to be confused with running a system-level or algorithmic exploration of a whole SoC.

Continuity is the key

Good engineers always choose the right tool for the job, but there should always be a way to hand over work-in-progress for others to continue. We should be able to pass designs from ESL simulations into FPGA-based prototypes with as little work as possible. Some ESL tools also have an implementation path to silicon using high-level synthesis, which generates RTL for inclusion in the overall SoC project. An FPGA-based prototype can take that RTL and run it on a board with cycle accuracy. But once again, we should wait until the RTL is relatively stable, which will be after completion of the project’s hardware-software partitioning and architectural exploration phase.

So why use FPGA-based prototyping?

Today’s SoCs are a combination of the work of many experts, from algorithm researchers to hardware designers, to software engineers, to chip layout teams — and each has its own needs as the project progresses. The success of an SoC project depends to a large degree on the hardware verification, hardware-software co-verification and software validation methodologies used by each of the above experts. FPGA-based prototyping brings different benefits to each of them.

For the hardware team, the speed of verification tools plays a major role in verification throughput. In most SoC developments it is necessary to run through many simulations and repeated regression tests as the project matures. Emulators and simulators are the most common platforms used for that type of RTL verification. However, some interactions within the RTL or between the RTL and external stimuli cannot be re-created in a simulation or emulation, owing to long runtime, even when TLM-based simulation and modeling are used. FPGA-based prototyping is therefore used by some teams to provide a higher-performance platform for such hardware testing. For example, we can run a whole OS boot in close to real time, saving the days of simulation time it would otherwise take to achieve the same thing.

For the software team, FPGA-based prototyping provides a unique presilicon model of the target silicon, fast and accurate enough to enable debug of the software in near-final conditions.

For the whole team, a critical stage of the SoC project is when the software and hardware are introduced to each other for the first time. The hardware will be exercised by the final software in ways that were not always envisaged or predicted by the hardware verification plan in isolation, exposing new hardware issues as a result. This is particularly prevalent in multicore systems or those running concurrent real-time applications. If this hardware-software introduction were to happen only after first silicon fabrication, then discovering new bugs at that time would be far from ideal, to put it mildly.

An FPGA-based prototype allows the software to be introduced to a cycle-accurate and fast model of the hardware as early as possible. SoC teams often tell us that the greatest benefit of FPGA-based prototyping is that when first silicon is available, the system and software are up and running in a day.

Acknowledgments

The authors gratefully acknowledge significant contributions from Scott Constable of Freescale Semiconductor, Austin, Texas; Javier Jimenez of DS2, Valencia, Spain; and Justin Mitchell of BBC Research & Development, London. This extract originally appeared in Xilinx’s Xcell Journal, Issue 75, and Tech Design Forum also thanks them for the reuse of the graphics in this article.

About the authors

Doug Amos (damos@synopsys.com) is business development manager in the Solutions Marketing division of Synopsys. René Richter (Rene.Richter@synopsys.com) is corporate application engineering manager in Synopsys’ Hardware Platforms Group. Austin Lesea (Austin@xilinx.com) is principal engineer at Xilinx Labs.

About the FPMM

To learn more about the FPMM and to download it as an e-book, go to www.synopsys.com/fpmm. Print copies are available from Amazon.com, Synopsys Press and other bookstores. Copyright © 2011 by Synopsys, Inc. All rights reserved. Used by permission.

Company details

Synopsys Inc.

700 East Middlefield Road

Mountain View

CA 94043

USA

T: +1 650 584-5000 or +1 800 541-7737

W: www.synopsys.com

Xilinx Inc

2100 Logic Drive

San Jose

CA 95124

USA

T: +1 408 559-7778

W: www.xilinx.com