Coding standards for secure embedded systems

Embedded systems are increasingly coming under attack as they hook up to the internet. Coding standards have emerged that make it easier to build code that is secure from the bottom up.

The security of networked embedded devices is becoming a major concern. The adoption of networking in embedded systems is widespread and includes everything from domestic audio/video systems to supervisory control and data acquisition (SCADA) systems. Users are generally aware that viruses, worms and malware are attempting to infiltrate their personal computers, but many are oblivious to the fact that the devices and infrastructure they have come to rely on in their everyday lives are also coming under similar attack. Systems need to be hardened so that they are impervious to attack without relying on the user “doing the right thing” to protect them.

Unfortunately, “security by obscurity”, where an attempt is made to secure a system by hiding its design and implementation details, has often been considered adequate to protect non-critical systems such as consumer electronics. The deployment of large numbers of consumer devices means that the chances of this security being maintained are negligible as many, many people have the opportunity to investigate its behaviour.

Figure 1 Tram in Lodz. (Photograph: Igal Koshevoy)

An example of such a failing occurred in 2008 in Poland when a teenager modified a remote control unit so that he could change the signals for the tram system. His actions lead to the derailing of at least four trams, resulting in twelve people being injured. The signals for the trams had been designed so that the driver could control them by using a remote control. The system was considered to be secure as the hardware was not commercially available and no thought was given to the injection of commands from any other external source, resulting in the use of data without encryption or validation.

Two schemes

There are many aspects of effective network security. But foundations are important, so we will concentrate on one issue: security within the firmware of the devices themselves and the role that coding standards can play in helping to achieve this. According to research by the National Institute of Security Technology (NIST), 64% of software vulnerabilities stem from programming errors. Two schemes to help address this and related issues have emerged from US-based projects.

The US federally funded organization CERT is developed coding standards that are intended to improve the core security of applications developed using C, C++ and Java. Currently, though, it is CERT C that is the primary focus. In October 2008, version 1.0 of the standard made its debut at the Software Development Best Practices exhibition in Boston.

CWE, on the other hand, is a strategic software assurance initiative run by the public interest, not-for-profit MITRE Corporation under a US federal grant, co-sponsored by the National Cyber Security Division of the US Department of Homeland Security. It aims to accelerate the adoption of security-focused tools within organizations that produce connected devices in order to improve the software assurance and review processes that they use to ensure devices are secure.

CWE maintains the Common Weakness and Enumeration database which contains an international, community-developed, formal list of common software weaknesses that have been identified after real-world systems have been exploited as a result of latent security vulnerabilities. The core weaknesses that lead to the exploits were identified by examining information on individual exploits recorded in the Common Vulnerabilities and Exposures (CVE) database (also maintained by MITRE) after their discovery in laboratories and live systems.

The CWE database can be used to highlight issues that are a common cause of the errors that lead to security failures within systems, making it easier to ensure that they are not present within a particular development code-base. The database contains information on security weaknesses that have been proven to lead to exploitable vulnerabilities and go above programming errors in firmware. These weaknesses could be at: the infrastructure level, such as a poorly configured network or security appliance; policy and procedure level, where devices or users share usernames or passwords; or the coding level. For coding issues, all of the software languages that are associated with contemporary enterprise deployments are considered and not just C, C++ and other languages commonly used in embedded systems.

The database does not capture known coding weaknesses that have not been exploited in the field. In other words, it holds information on actual exploits, not theoretical.

The CWE database groups the core issues into categories and is structured to allow it to be accessed in layers. The lowest, most complex layer contains the full CWE list. This contains several hundred nodes and is primarily intended to be used by tool vendors and security researchers. The middle layer groups CWEs that are related to each other. It contains tens of nodes and is aimed at software security and development practitioners. The top layer groups the CWEs more broadly. It contains a minimal set of nodes that define a strategic set of vulnerabilities.

After the Morris Worm

CERT was created by the Defense Advanced Resource Projects Agency (DARPA) in November 1988 in response to the damage caused by the Morris Worm. Although intended purely as an academic exercise to gauge the size of the Internet, defects within the worm’s code led to damaging denial of service events. This unintentional side-effect of the Morris Worm had repercussions throughout the worldwide Internet community and thousands of machines owned by many organizations were infected.

The SEI CERT/CC was primarily established to deal with Internet security problems in response to the poor perception of its security and reliability attributes. Over a period of 12 to 15 years the CERT/CC studied cases of software vulnerabilities and compiled a database of them. The Secure Coding Initiative, launched in 2005, used this database to help develop secure coding practices in C.

Coding standards are used to encourage programmers to uniformly follow the set of rules and guidelines, established at project inception, to ensure that quality objectives are met. Compliance with these standards must be ensured if these goals are to be achieved, especially as many security issues result from coding errors that they target.

The CERT C Secure Coding Standard provides guidelines for secure coding in the C programming language. Following these guidelines eliminates insecure coding practices and undefined behaviors that can lead to exploitable software vulnerabilities. Developing code in compliance with the CERT C secure coding standard leads to higher quality systems that are robust and more resistant to attack.

This compliance should be a formal process – ideally tool-assisted, but manual is also possible – as it is virtually impossible for a programming team to follow all the rules and guidelines thought the entire code-base. Adherence to the standards is a useful metric to apply when determining code quality.

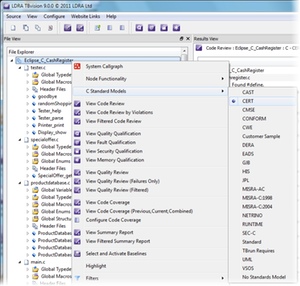

Figure 2 LDRA TBvision can perform static checks based on coding standards

The primary aim of CERT C is to enumerate the common errors in C language programming that lead to software defects, security flaws, and software vulnerabilities. The standard then provides recommendations about how to produce secure code. Although the CERT guidelines shares traits with other coding standards, such as identifying non-portable coding practices, the primary objective is to eliminate vulnerabilities.

And so, what is software vulnerability? The CERT/C describes a ‘vulnerability’ as a software defect which affects security when it is present in information systems. The defect may be minor, in that it does not affect the performance or results produced by the software, but nevertheless may be exploited by an attack from an intruder that results in a significant breach of security. CERT/C estimates that up to ninety percent of reported security incidents result from the exploitation of defects in software code or design.

The aim of the Secure Coding Initiative is to work with developers and their organizations to reduce the number of vulnerabilities introduced into secure software by improving coding practices through the provision of guidelines and training. To this end, one of the collaborations CERT has formed is with the SANS (SysAdmin, Audit, Network, Security) Institute, a leading computer security training organization.

Problem classification

The problems can be broadly classified in to two groups based on the content that is received over the network.

Firstly, the device can react incorrectly to valid data, possibly because it contains forged or spoofed security credentials. Information flow analysis can help to identify regions of code which do not adequately validate such data.

Secondly, the device can react inappropriately when it receives invalid data. Such failures are often associated with data and/or information flow errors within the code, with the incorrect handling of error conditions leading to unexpected control flow path execution as the data is processed. These paths may result in the incorrect initialization of variables if the expected paths are not taken, often due to assumptions that have been made about the expected data values.

Incorrect processing of input data can lead to stack or buffer overrun errors, with the risk that this leads to the execution of arbitrary code injected as part of a deliberate attack on a system. It is common for the initial processing of network data to take place within the kernel of an operating system. Thus means that any arbitrary code execution is likely to take place at an elevated privilege level (akin to having “superuser” or “admin” rights) within the system, giving it the potential to access all data and/or hardware regardless of whether it is related to the communications channel that was used to initiate the attack. From this, it is obvious that any vulnerability in low-level communications software may lead to the exposure of sensitive data or allow the execution of arbitrary code at an escalated privilege level.

It is possible for an external agent to deliberately target and exploit vulnerabilities within a networked system. This could allow eavesdropping of sensitive data, denial of service attacks, redirection of communications to locations containing malware and/or viruses, man-in-the-middle attacks, etc. In fact, a quick Internet search will show that organizations such as CERT, SANS, etc. have many reports of network vulnerabilities that facilitate such attacks. These include integer overflows leading to arbitrary code execution and denial of service, and specially crafted network packets allowing remote code execution with kernel privileges and local information disclosure.

Update gap

While many of the identified vulnerabilities are eliminated from future code releases, it is common for deployed systems not to benefit from this work via updates. PC drivers are generally (at least within corporate environments) updated as part of the rollout of routine security updates. However, embedded devices are often “ignored” as the updates are often more difficult to apply and some devices are simply “forgotten about.”

The CERT C guidelines define rules that must be followed to ensure that code does not contain defects which may be indicative of errors and which may in turn give rise to exploitable vulnerabilities. For example, guideline EXP33-C says “Do not reference uninitialized memory,” protecting against a common programming error (often associated with the maintenance of complex code). Consider the following example:

int SignOf ( int value )

{

int sign;

if ( value > 0 )

{

sign = 1;

}

else if ( value < 0 )

{

sign = -1;

}

return sign;

}

The SignOf() function has a UR dataflow anomaly associated with sign. This means that, under certain conditions (that is, if value has a value of ‘0’), sign is Undefined before it is Referenced in the return statement. This defect may or may not lead to an error. For example:

int MyABS ( int value )

{

return ( value * SignOf ( value ) );

}

MyABS() will work exactly as expected during testing, even though the return value of SignOf() will contain whatever value was in the storage location allocated to sign when it is called (zero multiplied by any integer value is zero). The code is said to be “coincidentally correct” as it works, even though it contains a defect. However, all uses may not be so fortunate:

void Action ( int value )

{

if ( -1 == SignOf ( value ) )

{

NegativeAction ( );

}

else

{

PostiveAction ( );

}

}

The code in Action() is most likely to call PositiveAction() when it is called with a value of ‘0’ (which is likely to be correct), as only a return value of (exactly) ‘-1’ will cause it to do otherwise. It is likely that a latent defect will be present in released code as there is little chance of it causing an error during testing, even if that testing is robust.

Such an error could potentially be manipulated by an attacker to invoke the incorrect behaviour (for example, by causing the stack on which sign is created to be pre-loaded with ‘-1’).

Although compliance with the CERT C guidelines can, in theory, be demonstrated by manual checking, this is not practical for large or complex systems. To that end, tools are available to automate the compliance checking process.

Conclusion

Adoption of security standards such as CERT or CWE allow security quality attributes to be specified for a project. Incorporation of security attributes in to the system requirements means that they can then be measured and verified before a product is put into service, significantly reducing the potential for in-the-field exploitation of latent security vulnerabilities and the elimination of any associated mitigation costs.

The use of an application lifecycle management (ALM) tool to automate testing, process artifact and requirement tracing dramatically reduces the resources needed to produce the evidentiary documentation required by certification bodies. The use of a qualified and well-integrated tool chain leverages the tool vendor’s experience, reputation and expertise in software security, helping to ensure a positive experience within the development team.

A whole-company security ethic supported by the use of standards, tools and a positive development environment ensures that security is a foundational principle. The resulting process of continual improvement helps to ensure that only dependable, trustworthy, extensible and secure systems are released for production.

About the authors

Paul Humphreys is a software engineer and Chris Tapp is a field applications engineer at LDRA.

Contact

LDRA

Portside

Monks Ferry

Wirral

CH41 5LH

UK

T: +44 (151) 649 9300

W: www.ldra.com