UVM coding guidelines offer clarity in a complex world

These 13 suggestions toward best practice address some of the most persistent challenges with the Universal Verification Methodology.

The Universal Verification Methodology (UVM) promised a simpler world, where a common set of guidelines for testbenches and connected verification IP would make everything work together and make life easier. But UVM carries some baggage: it has come a long way through earlier methodologies such as OVM, VMM, eRM, and AVM.

As a consequence, in some situations the UVM offers several ways of solving a problem instead of a single unified one, and non-UVM approaches may apply as well. So how do you know which strategy to use?

This article tries to answer this question by suggesting 13 style and coding recommendations. Although there is almost never a single right answer, we hope that some of these recommendations will become part of future versions of UVM.

UVM has many different classes and functions and provides a very powerful way to improve verification productivity. But as with all powerful tools, care must be taken. A chainsaw is a powerful tool, and it is always used with safety in mind and with proper care. UVM should be approached in the same way, with certain characteristics in mind. The main watchwords are:

- Clarity;

- Simplicity;

- Ease-of-modification; and

- Performance.

Thirteen UVM recommendations you can trust

Many of the following coding style recommendations are widely known, yet badly written code is still seen in UVM testbenches. You want to reuse code to leverage the efforts of others, but reusing poor code is just a way of repeating past mistakes.

- Group configuration values into config objects. These are classes extended from uvm_object. Don’t store individual values in the uvm_config_db.

Setting and getting individual values is error prone, difficult to debug, and hurts performance. The uvm_config_db is a good way to pass virtual interface handles from the static domain of the RTL code to the dynamic domain of the testbench. However, it is poorly suited to passing individual configuration values down through the testbench. The configuration database looks up values by matching strings that can contain wildcards, and the cost of this matching grows with every entry you store, until the overhead becomes unacceptable. For example, while a design house was integrating several testbench blocks, the build phase shot up to 24 hours of CPU time, even though the run phase took less than an hour. Profiling revealed that the uvm_config_db caused the slowdown.

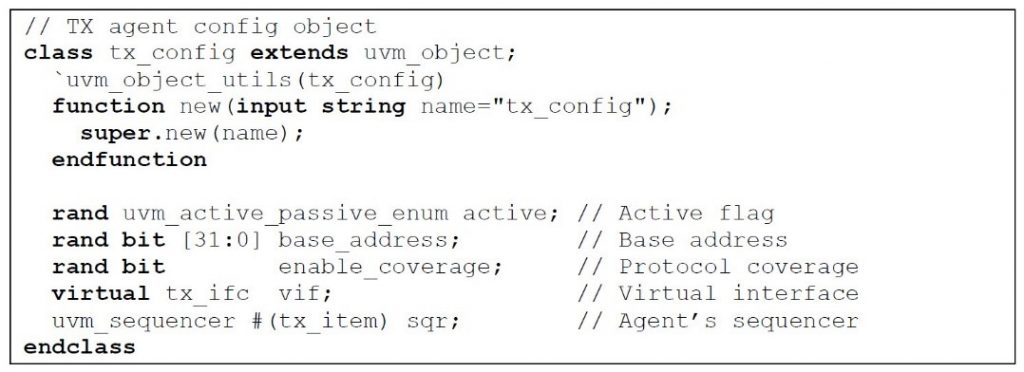

For example, your TX agent needs an active/passive flag, a base address, a flag to enable the coverage collector, a handle to a virtual interface, and a sequencer handle. Figure 1 shows a simple agent config object class.
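Figure 1 survives only as an image, so here is a minimal sketch of what such a config object could look like. The interface tx_if, the item class tx_item, the tx_sequencer typedef, and every field name are our own assumptions for illustration, not taken from the figure.

```systemverilog
`include "uvm_macros.svh"

// Hypothetical TX interface, for illustration only
interface tx_if (input logic clk);
  logic [31:0] data;
  logic        valid;
endinterface

package tx_cfg_pkg;
  import uvm_pkg::*;

  // Minimal (hypothetical) transaction so the sequencer handle has a concrete type
  class tx_item extends uvm_sequence_item;
    `uvm_object_utils(tx_item)
    rand bit [31:0] addr;
    function new(string name = "tx_item");
      super.new(name);
    endfunction
  endclass

  typedef uvm_sequencer #(tx_item) tx_sequencer;

  // One object carries every setting the agent needs
  class tx_agent_config extends uvm_object;
    `uvm_object_utils(tx_agent_config)

    uvm_active_passive_enum is_active       = UVM_ACTIVE; // active/passive flag
    bit [31:0]              base_addr       = '0;         // base address
    bit                     enable_coverage = 1;          // turn the coverage collector on/off
    virtual tx_if           vif;                          // assigned by the test
    tx_sequencer            sqr;                          // assigned by the agent once its sequencer exists

    function new(string name = "tx_agent_config");
      super.new(name);
    endfunction
  endclass
endpackage
```

With this grouping, a single handle travels down the hierarchy instead of five separate uvm_config_db entries, each with its own string lookup.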

- When uvm_config_db::get() fails to find a virtual interface or config object handle, you should stop simulation with a uvm_fatal message, not a lower severity.

The test class gets the virtual interfaces from the uvm_config_db. Each component gets its config object, including the virtual interface, from the uvm_config_db. If either is not found, that is a testbench bug and simulation cannot meaningfully continue. Do not use just the `uvm_error macro: simulation will continue, and your code will fail later when it dereferences a null handle. By then you are one more step removed from the original problem.
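A minimal sketch of this check in an agent's build_phase(). The class names and the "tx_agt_cfg" field name are assumptions for illustration; the config class is a bare stand-in.

```systemverilog
`include "uvm_macros.svh"

package fatal_get_pkg;
  import uvm_pkg::*;

  // Minimal stand-in for the agent config object (hypothetical)
  class tx_agent_config extends uvm_object;
    `uvm_object_utils(tx_agent_config)
    function new(string name = "tx_agent_config");
      super.new(name);
    endfunction
  endclass

  class tx_agent extends uvm_agent;
    `uvm_component_utils(tx_agent)
    tx_agent_config cfg;

    function new(string name, uvm_component parent);
      super.new(name, parent);
    endfunction

    function void build_phase(uvm_phase phase);
      super.build_phase(phase);
      // A missing config object is a testbench bug: stop here, at the source,
      // rather than failing later on a null handle.
      if (!uvm_config_db #(tx_agent_config)::get(this, "", "tx_agt_cfg", cfg))
        `uvm_fatal("NOCFG", {"tx_agt_cfg not found in uvm_config_db for ", get_full_name()})
    endfunction
  endclass
endpackage
```

The message names both the missing field and the component that looked for it, which is usually enough to find the misspelled or missing set() call.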

- In uvm_config_db::set() calls, only put wildcards on the end of instance names.

The uvm_config_db performs string matching to find an entry. Every wildcard increases the number of unintended potential matches, and the closer the wildcard character is to the front of the string, the more matches there are. The worst case is an instance name of just “*”.

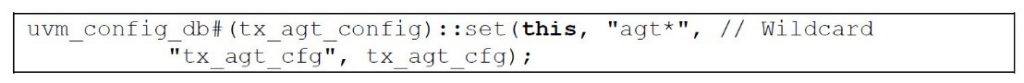

Wildcard instance names are handy when passing information across multiple lower levels. For example, the environment passes the agent config object, which contains the virtual interface, into the agent and its subcomponents, the monitor and driver. The environment does this with a single uvm_config_db::set() call that passes the tx_agt_cfg handle. The wildcard name, agt*, means that the handle is visible to the agent and all its child scopes, as shown in Figure 2.
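Figure 2 is an image, so here is a sketch of such a set() call inside a hypothetical environment. The class names are assumptions, and for brevity the environment constructs the config object itself; in a real testbench it would more likely come from the environment's own config object.

```systemverilog
`include "uvm_macros.svh"

package wildcard_pkg;
  import uvm_pkg::*;

  class tx_agent_config extends uvm_object;
    `uvm_object_utils(tx_agent_config)
    function new(string name = "tx_agent_config");
      super.new(name);
    endfunction
  endclass

  class tx_agent extends uvm_agent;
    `uvm_component_utils(tx_agent)
    function new(string name, uvm_component parent);
      super.new(name, parent);
    endfunction
  endclass

  class tx_env extends uvm_env;
    `uvm_component_utils(tx_env)
    tx_agent_config tx_agt_cfg;
    tx_agent        agt;

    function new(string name, uvm_component parent);
      super.new(name, parent);
    endfunction

    function void build_phase(uvm_phase phase);
      super.build_phase(phase);
      tx_agt_cfg = tx_agent_config::type_id::create("tx_agt_cfg");
      // The full scope becomes "<env path>.agt*". With the wildcard at the end,
      // it matches the agent and everything below it (agt.drv, agt.mon).
      // Note that it would also match a sibling named, say, "agt2".
      uvm_config_db #(tx_agent_config)::set(this, "agt*", "tx_agt_cfg", tx_agt_cfg);
      // Avoid set(this, "*", ...) or set(null, "*agt*", ...): they can match
      // scopes all over the testbench and make every lookup more expensive.
      agt = tx_agent::type_id::create("agt", this);
    endfunction
  endclass
endpackage
```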

- Pass config objects inside your testbench with OOP-style set_config() methods, instead of the confusing uvm_config_db.

Once you convert your testbench from passing individual values to passing config objects, you can see the bigger picture: a testbench is configured and built from the top down, guided by the configuration objects. These objects are created at higher levels, and their handles are passed to lower levels. For example, the test passes the environment config object into the environment. This is a simple pattern, so why burden yourself with the complexity of the uvm_config_db? Since the test already has a handle to the environment, just pass the config handle directly with an OOP-style set_config() method.
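A minimal sketch of the pattern, assuming hypothetical env_config, tx_env, and base_test classes. set_config() here is an ordinary user-written method, not part of the UVM base classes.

```systemverilog
`include "uvm_macros.svh"

package oop_cfg_pkg;
  import uvm_pkg::*;

  class env_config extends uvm_object;
    `uvm_object_utils(env_config)
    bit enable_scoreboard = 1;
    function new(string name = "env_config");
      super.new(name);
    endfunction
  endclass

  class tx_env extends uvm_env;
    `uvm_component_utils(tx_env)
    env_config cfg;

    function new(string name, uvm_component parent);
      super.new(name, parent);
    endfunction

    // Plain OOP setter (hypothetical): the parent hands its handle straight down
    function void set_config(env_config cfg);
      this.cfg = cfg;
    endfunction

    function void build_phase(uvm_phase phase);
      super.build_phase(phase);
      if (cfg == null)
        `uvm_fatal("NOCFG", "set_config() was not called before build_phase")
      // ... build sub-components using cfg ...
    endfunction
  endclass

  class base_test extends uvm_test;
    `uvm_component_utils(base_test)
    env_config env_cfg;
    tx_env     env;

    function new(string name, uvm_component parent);
      super.new(name, parent);
    endfunction

    function void build_phase(uvm_phase phase);
      super.build_phase(phase);
      env_cfg = env_config::type_id::create("env_cfg");
      env     = tx_env::type_id::create("env", this);
      // Build is top-down: the env's build_phase runs after this one returns,
      // so the handle is in place before the env needs it.
      env.set_config(env_cfg);
    endfunction
  endclass
endpackage
```

No string matching is involved, and a misspelled method name is a compile error rather than a silent lookup miss.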

- Minimize the use of UVM objections and calls to raise_objection() and drop_objection().

The primary purpose of the UVM objection is to keep the task-based phases, such as run_phase(), executing. Without an objection, UVM ends the phase at the end of the current timeslot. However, raising and dropping objections excessively can cause performance problems. Remember, a single, well-planned objection works as well as a dozen scattered ones.

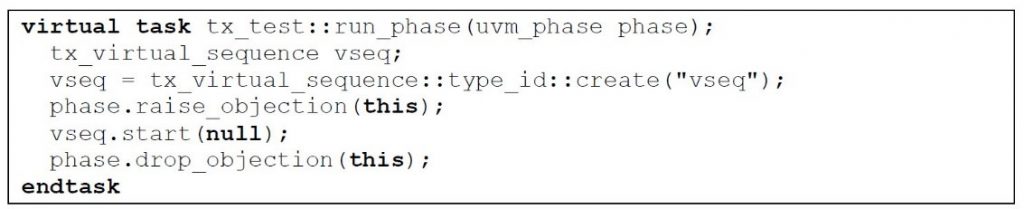

A test must raise an objection before starting a sequence to prevent the run phase from ending. A scoreboard might raise an objection while waiting for the last transactions. But don’t raise and drop objections inside a sequence, as the test-level one is already doing its job. One test-level objection is enough to keep the phase running for the entire top-level virtual sequence, all its child sequences, and transactions (see Figure 3).
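A minimal sketch of a single test-level objection wrapped around a top-level virtual sequence. The classes top_vseq and my_test are hypothetical; the virtual sequence is started with a null sequencer, as virtual sequences often are.

```systemverilog
`include "uvm_macros.svh"

package objection_pkg;
  import uvm_pkg::*;

  // Hypothetical top-level virtual sequence
  class top_vseq extends uvm_sequence #(uvm_sequence_item);
    `uvm_object_utils(top_vseq)
    function new(string name = "top_vseq");
      super.new(name);
    endfunction

    task body();
      // Child sequences would be started here. None of them raises its own
      // objection: the test-level objection keeps run_phase alive.
      `uvm_info("VSEQ", "starting child sequences", UVM_MEDIUM)
    endtask
  endclass

  class my_test extends uvm_test;
    `uvm_component_utils(my_test)
    function new(string name, uvm_component parent);
      super.new(name, parent);
    endfunction

    task run_phase(uvm_phase phase);
      top_vseq vseq = top_vseq::type_id::create("vseq");
      phase.raise_objection(this, "top-level virtual sequence running");
      vseq.start(null);  // no sequencer needed for this virtual sequence
      phase.drop_objection(this, "top-level virtual sequence done");
    endtask
  endclass
endpackage
```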

- When starting a sequence item, call the create(), start_item(), randomize(), and finish_item() methods instead of the `uvm_do* macros, the ‘training wheels’ of UVM.

The macros are great for beginners and can help you write a simple sequence in just a few lines. But as soon as you want to do something more complex (e.g., changing the randomization results), you have to learn the individual steps. If you try to expand the macros manually, you can be overwhelmed: they are heavily layered, difficult to reverse engineer, and call obscure methods. The macros were designed to be easy for new users, but they run out of steam when you want to try something different. Learn the four base methods and you will be able to build sequences that create complex stimulus with ease.
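A minimal sketch of the four steps in a sequence's body(). The tx_item and tx_seq classes and the inline constraint are illustrative assumptions.

```systemverilog
`include "uvm_macros.svh"

package seq_steps_pkg;
  import uvm_pkg::*;

  class tx_item extends uvm_sequence_item;
    `uvm_object_utils(tx_item)
    rand bit [31:0] addr;
    rand bit [31:0] data;
    function new(string name = "tx_item");
      super.new(name);
    endfunction
  endclass

  class tx_seq extends uvm_sequence #(tx_item);
    `uvm_object_utils(tx_seq)
    int unsigned n_items = 4;

    function new(string name = "tx_seq");
      super.new(name);
    endfunction

    task body();
      tx_item tr;
      repeat (n_items) begin
        tr = tx_item::type_id::create("tr");  // 1. create through the factory
        start_item(tr);                        // 2. wait for the sequencer to grant access
        // 3. randomize late, so you can add inline constraints here or
        //    adjust fields after randomization
        if (!tr.randomize() with { addr[1:0] == 2'b00; })
          `uvm_fatal("RANDFAIL", "tx_item randomization failed")
        finish_item(tr);                       // 4. send to the driver and wait until it is done
      end
    endtask
  endclass
endpackage
```

Because each step is explicit, changing the stimulus (say, forcing a field after randomize()) is a one-line edit between steps 3 and 4.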

- Write your sequence item classes quickly with the UVM field macros instead of the sequence item do_*() methods.

More code means more bugs. The field macros allow you to automatically create hundreds of lines of code with a single macro, such as uvm_field_int. Even a simple set of do_*() methods plus the convert2string() method requires dozens of lines of code. You can quickly create a base sequence item class that comfortably fits on one page and is easy to understand.

The default sprint() method is created automatically. Writing a convert2string() by hand can take an hour or three if you want to precisely line up every field. That is time not spent on creating new tests and sequences.
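A sketch of a sequence item written with field macros; the field names and flags are illustrative. With UVM_ALL_ON, each `uvm_field_int line enables copy, compare, print, record, and pack behavior for that field.

```systemverilog
`include "uvm_macros.svh"

package field_macro_pkg;
  import uvm_pkg::*;

  class tx_item extends uvm_sequence_item;
    rand bit [31:0] addr;
    rand bit [31:0] data;
    rand bit        write;

    // One macro line per field replaces hand-written do_*() code
    `uvm_object_utils_begin(tx_item)
      `uvm_field_int(addr,  UVM_ALL_ON | UVM_HEX)
      `uvm_field_int(data,  UVM_ALL_ON | UVM_HEX)
      `uvm_field_int(write, UVM_ALL_ON)
    `uvm_object_utils_end

    function new(string name = "tx_item");
      super.new(name);
    endfunction
  endclass
endpackage
```

Calling tr.print() or tr.sprint() then produces a formatted table of all three fields with no further code.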

- Write your sequence item classes accurately with the sequence item do_*() methods instead of the field macros.

The do_*() methods allow you to precisely control how your transaction fields are manipulated. If your sequence item class has properties that are conditional on other fields, such as a type, you will only be able to copy and compare them by explicitly writing the do_copy() and do_compare() methods. The field macros cannot handle this case.
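A minimal sketch of hand-written do_copy() and do_compare() for an item whose wdata field only matters for writes. The kind_e enum and all field names are hypothetical.

```systemverilog
`include "uvm_macros.svh"

package do_methods_pkg;
  import uvm_pkg::*;

  typedef enum bit {READ, WRITE} kind_e;

  class tx_item extends uvm_sequence_item;
    `uvm_object_utils(tx_item)
    rand kind_e     kind;
    rand bit [31:0] addr;
    rand bit [31:0] wdata;  // only meaningful when kind == WRITE

    function new(string name = "tx_item");
      super.new(name);
    endfunction

    virtual function void do_copy(uvm_object rhs);
      tx_item rhs_;
      if (!$cast(rhs_, rhs))
        `uvm_fatal("BADCAST", "do_copy: rhs is not a tx_item")
      super.do_copy(rhs);
      kind = rhs_.kind;
      addr = rhs_.addr;
      if (kind == WRITE) wdata = rhs_.wdata;  // copy wdata only when it exists
    endfunction

    virtual function bit do_compare(uvm_object rhs, uvm_comparer comparer);
      tx_item rhs_;
      if (!$cast(rhs_, rhs)) return 0;
      if (!super.do_compare(rhs, comparer)) return 0;
      if (kind != rhs_.kind || addr != rhs_.addr) return 0;
      // wdata is a don't-care for reads, so check it only for writes
      return (kind == READ) || (wdata == rhs_.wdata);
    endfunction

    virtual function string convert2string();
      string s = $sformatf("%s addr=%0h", kind.name(), addr);
      if (kind == WRITE) s = {s, $sformatf(" wdata=%0h", wdata)};
      return s;
    endfunction
  endclass
endpackage
```

For brevity this do_compare() compares fields directly instead of going through the comparer's methods, so miscompares are not reported field by field; a production version might route each check through the comparer.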

- When you need to access properties in the UVM base classes, call the provided set and get methods, instead of accessing them directly.

If your code calls these set-and-get methods, it stays independent of any specific implementation of UVM. The IEEE 1800.2 standard describes the behavior of the base class library, but each EDA vendor creates its own implementation of the library, and each implementation may have its own internal members. Sequences that need a handle to their associated sequencer should call get_sequencer(), not use the m_sequencer property.
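A minimal sketch of a sequence that asks for its sequencer through get_sequencer() rather than touching m_sequencer. The sequence class is hypothetical.

```systemverilog
`include "uvm_macros.svh"

package accessor_pkg;
  import uvm_pkg::*;

  class tx_seq extends uvm_sequence #(uvm_sequence_item);
    `uvm_object_utils(tx_seq)

    function new(string name = "tx_seq");
      super.new(name);
    endfunction

    task body();
      // Accessor from the standard API, not the implementation's m_sequencer
      uvm_sequencer_base sqr = get_sequencer();
      if (sqr == null)
        `uvm_fatal("NOSQR", "sequence was started without a sequencer")
      `uvm_info("SEQ", {get_full_name(), " running on ", sqr.get_full_name()}, UVM_MEDIUM)
    endtask
  endclass
endpackage
```

get_sequencer() returns the base type uvm_sequencer_base; if you need a derived sequencer type, $cast the returned handle.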

- Call the factory create() method for anything that you want to override, such as transactions and components.

Use the power of the uvm_factory to make your testbench classes more configurable. The factory allows you to inject new behavior into components without having to make any changes to the class. When you need to construct an object, always call class::type_id::create() or the `uvm_create macro.
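A minimal sketch of a factory override. tx_item and long_tx_item are hypothetical. A type override registered with set_type_override() on the type_id registry affects every later type_id::create() call for tx_item, whereas a plain new() call bypasses the factory.

```systemverilog
`include "uvm_macros.svh"

package factory_pkg;
  import uvm_pkg::*;

  class tx_item extends uvm_sequence_item;
    `uvm_object_utils(tx_item)
    rand bit [7:0] len;
    constraint c_len { len inside {[1:64]}; }
    function new(string name = "tx_item");
      super.new(name);
    endfunction
  endclass

  // Injects long packets without editing tx_item or the code that creates it
  class long_tx_item extends tx_item;
    `uvm_object_utils(long_tx_item)
    constraint c_long { len > 60; }
    function new(string name = "long_tx_item");
      super.new(name);
    endfunction
  endclass
endpackage

module factory_demo;
  import uvm_pkg::*;
  import factory_pkg::*;
  tx_item tr;

  initial begin
    // A test would normally register this in its build_phase
    tx_item::type_id::set_type_override(long_tx_item::get_type());
    tr = tx_item::type_id::create("tr");  // the factory builds a long_tx_item
    // tr = new("tr");                    // would ignore the override
    if (!tr.randomize())
      `uvm_fatal("RANDFAIL", "tx_item randomization failed")
    // get_type_name() reveals which class the factory actually built
    `uvm_info("DEMO", $sformatf("%s len=%0d", tr.get_type_name(), tr.len), UVM_NONE)
  end
endmodule
```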

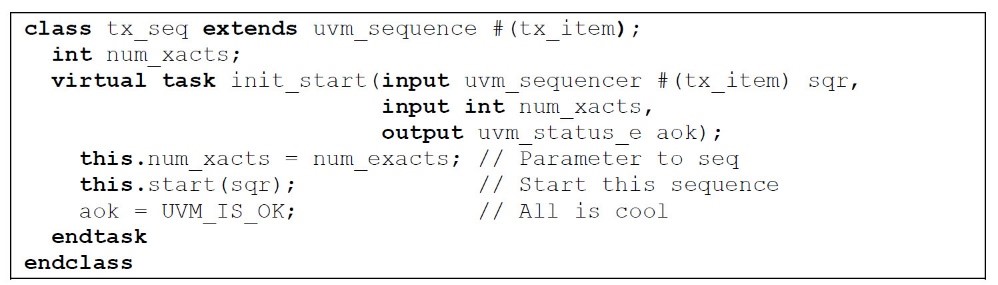

- Make your sequences easier to run with a user-defined virtual method. Define your own arguments including responses.

A configurable sequence needs parameters such as the sequencer handle, number of transactions, and randomization constraint weights. Pass these directly to a method in the sequence. You can make the sequence easier to run by wrapping these details in the method, as shown in Figure 4. Now the software engineers on the team can also create tests and virtual sequences by calling these methods, without having to learn UVM details.
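Figure 4 is an image, so here is a minimal sketch of the idea. The method name run_seq(), its arguments, the write_pct weight, and the tx_item class are our own assumptions; the output argument stands in for returning results to the caller.

```systemverilog
`include "uvm_macros.svh"

package run_method_pkg;
  import uvm_pkg::*;

  typedef enum bit {READ, WRITE} kind_e;

  class tx_item extends uvm_sequence_item;
    `uvm_object_utils(tx_item)
    rand kind_e     kind;
    rand bit [31:0] addr;
    function new(string name = "tx_item");
      super.new(name);
    endfunction
  endclass

  typedef uvm_sequencer #(tx_item) tx_sequencer;

  class tx_seq extends uvm_sequence #(tx_item);
    `uvm_object_utils(tx_seq)
    int unsigned n_items   = 10;
    int unsigned write_pct = 50;  // percentage weight for WRITE, 0 to 100
    tx_item      last_item;

    function new(string name = "tx_seq");
      super.new(name);
    endfunction

    // Hypothetical user-defined entry point: every knob is an ordinary
    // argument, and the last item sent comes back through an output.
    virtual task run_seq(input  tx_sequencer sqr,
                         output tx_item      last,
                         input  int unsigned n      = 10,
                         input  int unsigned wr_pct = 50);
      n_items   = n;
      write_pct = wr_pct;
      start(sqr);
      last = last_item;
    endtask

    task body();
      repeat (n_items) begin
        last_item = tx_item::type_id::create("last_item");
        start_item(last_item);
        // write_pct is not a tx_item member, so it resolves to this sequence
        if (!last_item.randomize() with {
              kind dist { WRITE := write_pct, READ := 100 - write_pct };
            })
          `uvm_fatal("RANDFAIL", "tx_item randomization failed")
        finish_item(last_item);
      end
    endtask
  endclass
endpackage
```

A test or virtual sequence then needs just one call, for example `seq.run_seq(sqr, last, 20, 80);`, with no knowledge of start_item() or finish_item().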

- If your test runs multiple sequences, put them into a virtual sequence.

A test is built from smaller steps that create, apply, and check stimulus. If you write a test that applies several flavors of sequences by manually starting each base sequence, that combination cannot be reused. If you instead combine them into a virtual sequence, the combination becomes reusable by your test and by others. Better yet, the virtual sequence itself can be reused inside higher-level virtual sequences.
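A minimal sketch of a virtual sequence that bundles a reset sequence with two parallel traffic sequences. All class names are hypothetical, and the sequencer handles are assumed to be assigned by whoever starts the virtual sequence.

```systemverilog
`include "uvm_macros.svh"

package vseq_pkg;
  import uvm_pkg::*;

  class tx_item extends uvm_sequence_item;
    `uvm_object_utils(tx_item)
    rand bit [31:0] data;
    function new(string name = "tx_item");
      super.new(name);
    endfunction
  endclass

  typedef uvm_sequencer #(tx_item) tx_sequencer;

  // Two hypothetical "flavors" of base sequence
  class reset_seq extends uvm_sequence #(tx_item);
    `uvm_object_utils(reset_seq)
    function new(string name = "reset_seq");
      super.new(name);
    endfunction
    task body();
      tx_item tr = tx_item::type_id::create("tr");
      start_item(tr);
      tr.data = '0;  // fixed reset pattern, no randomization
      finish_item(tr);
    endtask
  endclass

  class traffic_seq extends uvm_sequence #(tx_item);
    `uvm_object_utils(traffic_seq)
    function new(string name = "traffic_seq");
      super.new(name);
    endfunction
    task body();
      tx_item tr;
      repeat (4) begin
        tr = tx_item::type_id::create("tr");
        start_item(tr);
        if (!tr.randomize())
          `uvm_fatal("RANDFAIL", "tx_item randomization failed")
        finish_item(tr);
      end
    endtask
  endclass

  // The reusable combination: any test, or a higher-level vseq, can start it
  class init_and_traffic_vseq extends uvm_sequence #(uvm_sequence_item);
    `uvm_object_utils(init_and_traffic_vseq)
    tx_sequencer tx_sqr;  // assigned before start()
    tx_sequencer rx_sqr;

    function new(string name = "init_and_traffic_vseq");
      super.new(name);
    endfunction

    task body();
      reset_seq   rst = reset_seq::type_id::create("rst");
      traffic_seq tx  = traffic_seq::type_id::create("tx");
      traffic_seq rx  = traffic_seq::type_id::create("rx");
      rst.start(tx_sqr, this);  // 'this' records the parent sequence
      fork
        tx.start(tx_sqr, this);
        rx.start(rx_sqr, this);
      join
    endtask
  endclass
endpackage
```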

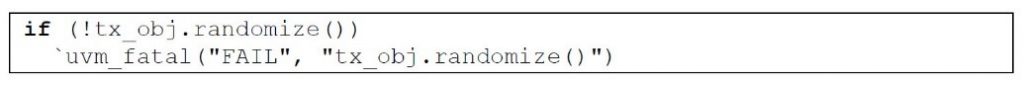

- Check the results of SystemVerilog operations such as randomize()

An often-overlooked source of fatal simulation problems is a randomization failure caused by a constraint conflict. If you try to randomize an object and it fails, the simulator may not print any message by default, and the random variables retain their previous values. So you might send the same transaction values repeatedly without realizing it. Some simulators have switches that stop simulation and create a test case when randomization fails; always run with these. Check the result of the randomize() function with an if statement, and issue a fatal error with a meaningful message, as shown in Figure 5.
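Figure 5 is an image, so here is a minimal sketch of the same check. The class pkt, its constraint c_max, and the message text are hypothetical.

```systemverilog
`include "uvm_macros.svh"

package rand_check_pkg;
  import uvm_pkg::*;

  class pkt extends uvm_object;
    `uvm_object_utils(pkt)
    rand bit [7:0] len;
    constraint c_max { len < 10; }
    function new(string name = "pkt");
      super.new(name);
    endfunction
  endclass
endpackage

module rand_check_demo;
  import uvm_pkg::*;
  import rand_check_pkg::*;
  pkt p;

  initial begin
    p = pkt::type_id::create("p");
    // randomize() returns 0 on a constraint conflict and leaves the rand
    // variables unchanged, so never ignore its return value.
    if (!p.randomize() with { len > 2; })
      `uvm_fatal("RANDFAIL", $sformatf("%s.randomize() failed: check c_max against the inline constraint", p.get_name()))
    `uvm_info("RAND", $sformatf("len=%0d", p.len), UVM_LOW)
    // Changing the inline constraint to { len > 20; } would conflict with
    // c_max, and the check above would stop simulation with RANDFAIL.
  end
endmodule
```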

Conclusion

You should write your code as if someone is looking over your shoulder, asking what it does. That person could be another engineer, your manager, or even you yourself two months later… when you have to go back and debug a problem with an existing test or reuse a component in a new environment. Writing clear, well-documented code may take more time today, but it will save you countless hours later.

For a fuller treatment of these guidelines, their benefits, tradeoffs, and how they compare to other approaches, please download the award-winning technical paper, UVM — “Stop Hitting Your Brother” — Coding Guidelines.

About the authors

Rich Edelman is a verification technologist in the Mentor Graphics Design and Verification Technologies Division. He helps customers adopt and deploy UVM and OVM, and his verification interests range from DPI and transaction recording to register modeling, sequences, and class-based debug. Rich has published many conference papers, including a Best Paper on SystemVerilog DPI at DVCon, and various transaction recording papers with IPSOC. He holds a BSEE, a BSCS and an MSCS from Washington University in St. Louis.

Chris Spear is Principal Instructional Designer/Trainer in the Mentor Graphics Design and Verification Technologies Division. He brings over 25 years of EDA expertise to Mentor customers and has taught thousands of engineers. Chris wrote the 2012 best-seller SystemVerilog for Verification and helped to develop the IEEE SystemVerilog standard for random seeding and File I/O PLI packages. He holds a degree in electrical engineering from Cornell University.