Self-test strategies for embedded systems

Developers of electronic systems, both hardware and software, generally assume that nothing will go wrong. They do so even though they know that hardware problems caused by design flaws or component failure, and malfunctioning software, are common occurrences. There is a culture of denial resulting from a perception that little can be done about these issues without great expense or inconvenience. This is not the case.

An embedded system has sophisticated software that provides its core functionality. It is not a great leap to enhance this software to also monitor the health of the system itself. The starting points are the realization and admission that problems occur. It is then only a matter of devising self-test strategies.

Broadly speaking, there are four aspects of an embedded system that can fail:

- The CPU itself

- Peripheral electronics around the CPU

- Memory

- Software

Each needs to be considered in turn.

CPU failure

Clearly, the functioning of the CPU is critical to its ability to run any software, including self-test. Total failure of the chip is relatively uncommon and quite apparent when it does happen, but there are other aspects to CPU failure.

It is becoming increasingly common to implement embedded systems using multiple cores. This is a great opportunity to improve system integrity. It is likely that the cores will communicate with one another in the course of their usual operation. With a little thought, this communication can be used to enable each core to monitor the wellbeing of others.

Partial failure of a CPU is very unlikely. For example, it is hard to imagine a device suddenly ‘forgetting’ how to add two numbers. However, a subtle design flaw, one that may only affect a certain (probably early) batch of devices, is far from unknown. Such a flaw is present from the moment the chip is first used. It should theoretically be picked up by the quality control process for the components used to build the system, but a quick check for any known flaws during initialization may be prudent.

Peripheral failure

Typically, much of the electronics in an embedded system will be custom hardware. It is therefore difficult to give any general advice with regard to self-test. However, it is well worth considering what can be done, normally during initialization, to verify the integrity of peripheral hardware.

A starting point is to check whether a device is actually there: Is its address responding? An invalid address trap is a clear indication of some kind of failure. A common possibility with communications devices is a ‘loop-back’ mode where any data sent by the device is immediately returned. This offers some confidence that the device is basically working.

If a device returns some data values, can you make any intelligent guesses about their validity? Maybe there is a range of values that make sense and a value outside that range is a sign of trouble. Or perhaps the value is changing too fast or too randomly to be credible. Failing to ‘sanity check’ sensor information is a common and very dangerous error.
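The range and rate-of-change checks described above might be sketched in C as follows. The sensor, its units and the limits are hypothetical; real values would come from the device data sheet and knowledge of the physical system.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical limits for an illustrative temperature sensor,
   in tenths of a degree Celsius. */
#define TEMP_MIN      (-400)   /* -40.0 C */
#define TEMP_MAX      (1250)   /* 125.0 C */
#define TEMP_MAX_STEP (50)     /* largest credible change between samples */

typedef struct {
    int32_t last_value;   /* previous accepted reading */
    bool    has_last;     /* false until the first good reading */
} sensor_check_t;

/* Returns true if the reading is plausible and records it.
   Returns false, leaving the state unchanged, if it is not. */
bool sensor_reading_ok(sensor_check_t *s, int32_t value)
{
    if (value < TEMP_MIN || value > TEMP_MAX) {
        return false;                 /* outside the sensible range */
    }
    if (s->has_last) {
        int32_t delta = value - s->last_value;
        if (delta < 0) {
            delta = -delta;
        }
        if (delta > TEMP_MAX_STEP) {
            return false;             /* changing too fast to be credible */
        }
    }
    s->last_value = value;
    s->has_last = true;
    return true;
}
```

What the application does with a rejected reading (retry, use the last good value, raise an alarm) is a separate, application-specific decision.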

Another important consideration is timeouts. If the code is waiting for some data from a device – even one that it was talking to recently – it should not wait indefinitely. There should always be a realistic timeout in case the device has failed. Otherwise, of course, the software gets stuck in an infinite loop.
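A bounded wait could look something like the sketch below. The function pointers stand in for reading a device status register and a free-running timer; they, and the `sim_` helpers used to try the routine on a host machine, are assumptions for illustration only.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical hooks: on real hardware these would read a status
   register and a free-running tick counter. */
typedef bool     (*ready_fn)(void);
typedef uint32_t (*ticks_fn)(void);

/* Wait until the device reports ready, but never longer than
   timeout_ticks. Returns true if ready, false on timeout. */
bool wait_for_device(ready_fn device_ready, ticks_fn now,
                     uint32_t timeout_ticks)
{
    uint32_t start = now();

    /* Unsigned subtraction stays correct when the counter wraps. */
    while ((uint32_t)(now() - start) < timeout_ticks) {
        if (device_ready()) {
            return true;
        }
    }
    return false;   /* device did not respond in time */
}

/* Stand-ins for trying the routine on a host machine. */
static uint32_t sim_ticks;      /* simulated tick counter */
static uint32_t sim_ready_at;   /* tick at which the device becomes ready */
static uint32_t sim_now(void)   { return sim_ticks++; }
static bool     sim_ready(void) { return sim_ticks >= sim_ready_at; }
```

The caller must then handle the `false` return explicitly, for example by flagging the device as failed, rather than simply retrying forever.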

Memory failure

The amount of memory in modern systems has increased exponentially in recent years. It is a critical component of a system and it is surprising that failure is not more common. In fact, there are very common transient errors, where a single bit of RAM is flipped by a passing cosmic ray. Most of the time, this has no effect or may result in a ‘blue screen’ (and corresponding blue language), but nothing worse. Ultimately, there is nothing that can be done in software to mitigate this kind of failure, though some physical shielding may help.

There are broadly two types of memory in an embedded system: read-only memory (ROM, usually implemented using flash memory) and read/write memory (anachronistically called ‘random access memory’ or RAM). Each is subject to possible failure and some integrity checking is sensible.

Although ROM is thought of as solid and unchanging, depending on the technology used to implement it, degradation or failure can occur. A simple precaution is to include some error checking data (parity, checksum or CRC) when the ROM is written and verify that data on system start-up.
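As an illustration of the CRC option, here is a minimal sketch using the widely used CRC-32 (reflected polynomial 0xEDB88320). It assumes that the build process stores the expected CRC somewhere outside the checked region; the function names and the way the stored value is passed in are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Bitwise CRC-32, reflected polynomial 0xEDB88320.
   Slow but small; a table-driven version trades ROM for speed. */
uint32_t crc32_compute(const uint8_t *data, size_t len)
{
    uint32_t crc = 0xFFFFFFFFu;

    for (size_t i = 0; i < len; i++) {
        crc ^= data[i];
        for (int bit = 0; bit < 8; bit++) {
            crc = (crc & 1u) ? (crc >> 1) ^ 0xEDB88320u : (crc >> 1);
        }
    }
    return crc ^ 0xFFFFFFFFu;
}

/* Start-up check: compare the CRC of the ROM image with the value
   that was stored when the image was written. */
bool rom_image_ok(const uint8_t *image, size_t len, uint32_t stored_crc)
{
    return crc32_compute(image, len) == stored_crc;
}
```

A simple checksum is cheaper to compute, but a CRC detects many multi-bit errors that a checksum would miss.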

Most systems have a much larger amount of RAM and this is used to store the code (copied from ROM on start-up) and data. The data in RAM is constantly in a state of flux, so error checking codes are not practical, but other kinds of testing make sense. Typical RAM failure modes are stuck bits (i.e. bits that are set to 0 or 1 and cannot be changed) or crosstalk (where writing to one bit affects one or more other bits).

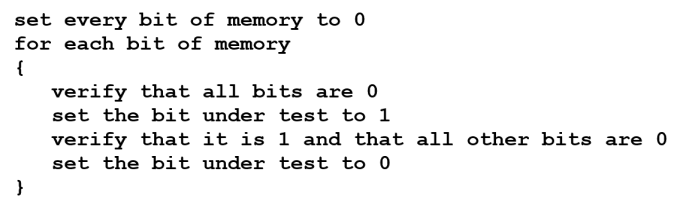

RAM failure is most likely to occur on power-up, so this is the time to perform some thorough testing. It is also an opportunity to perform a test before the RAM contains any meaningful data. A good procedure that may be performed by initialization code is a ‘moving ones’ (or ‘moving zeros’) test. Here is the algorithm:
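One common form of the moving ones test is sketched below in C: clear the region, then set each bit in turn, verifying that it is the only bit set anywhere in the region before clearing it again. This is an illustration of the technique under stated assumptions, not a production start-up routine; the function names are hypothetical, and this version uses ordinary local variables for readability.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Returns true if every byte in the region is zero, except that
   the byte at index 'skip' must equal 'expected'. */
static bool region_matches(volatile uint8_t *mem, size_t len,
                           size_t skip, uint8_t expected)
{
    for (size_t i = 0; i < len; i++) {
        uint8_t want = (i == skip) ? expected : 0u;
        if (mem[i] != want) {
            return false;
        }
    }
    return true;
}

/* Destructive 'moving ones' test of a RAM region.
   Returns true if no stuck bits or crosstalk were seen. */
bool ram_moving_ones_test(volatile uint8_t *mem, size_t len)
{
    for (size_t i = 0; i < len; i++) {
        mem[i] = 0u;                          /* start from all zeros */
    }
    for (size_t byte = 0; byte < len; byte++) {
        for (unsigned bit = 0; bit < 8; bit++) {
            uint8_t pattern = (uint8_t)(1u << bit);

            mem[byte] = pattern;              /* set the bit under test */
            if (!region_matches(mem, len, byte, pattern)) {
                return false;                 /* stuck bit or crosstalk */
            }
            mem[byte] = 0u;                   /* clear it again */
        }
    }
    return region_matches(mem, len, 0, 0u);   /* everything back to zero */
}
```

Because the whole region is re-checked for every bit, the run time grows with the square of the region size.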

This routine needs to be coded such that it does not use any memory, only registers. It is an interesting but achievable challenge.

With a large memory area, this test could take some time and cause a start-up delay that is unacceptable. If the architecture (chip configuration) of the memory is known, the test can be performed one chip at a time and this is much faster. This approach makes sense as crosstalk between chips is relatively unlikely.

Incidentally, as this test addresses all of memory, any faults with address decoding would be detected because an invalid address trap will occur.

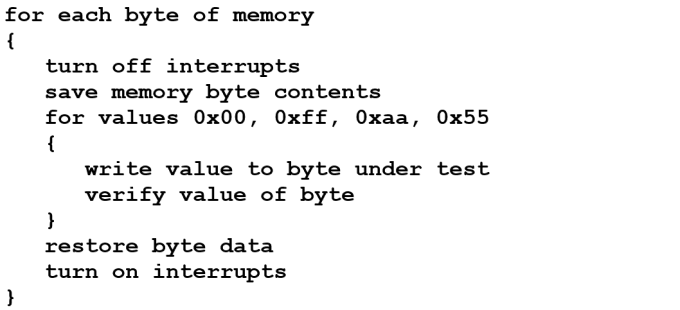

Once the device has initialized and the application code is running, it is no longer acceptable to run a destructive RAM test. However, the ongoing health of the RAM can be monitored by running a simple routine in the background (in a background – low priority – task, timer tick or idle loop), like this:
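A minimal sketch of such a routine follows, assuming the usual save, write-pattern, verify, restore sequence. The interrupt hooks are empty placeholders that would be replaced by the target's own intrinsics, and the function names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical interrupt-control hooks: replace the empty bodies
   with the target's own intrinsics or instructions. */
static inline void disable_interrupts(void) { }
static inline void enable_interrupts(void)  { }

/* Non-destructively test one byte: save it, write and verify two
   complementary patterns, then restore the original value. */
bool ram_check_byte(volatile uint8_t *p)
{
    bool ok = true;

    disable_interrupts();
    uint8_t saved = *p;
    *p = 0x55u;
    if (*p != 0x55u) { ok = false; }
    *p = 0xAAu;
    if (*p != 0xAAu) { ok = false; }
    *p = saved;
    enable_interrupts();

    return ok;
}

/* Call from the idle loop or a timer tick. Tests one byte per call
   and advances *next, wrapping at the end. len must be non-zero. */
bool ram_check_step(volatile uint8_t *base, size_t len, size_t *next)
{
    bool ok = ram_check_byte(&base[*next]);

    *next = (*next + 1u) % len;
    return ok;
}
```

Testing only one location per call keeps the interrupts-off window short, at the cost of taking many calls to cover the whole of RAM.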

This algorithm is shown operating on bytes. However, for most devices it would be more efficient to work with words – only a small adjustment.

Although turning off interrupts may be undesirable, this routine only does so for a few instructions. This should have no impact on real-time integrity.

Software error conditions

Although a software developer writing self-test code to check out the system electronics may take some pleasure in looking for faults in his hardware counterpart’s design, some acceptance that software may also fail is required. Although software does not ‘break’ – it will not change from one day to another – design and implementation errors are almost inevitable, and mitigation is worthwhile.

Broadly speaking, software errors result in one of two possible problems:

- Code looping

- Data corruption (arrays or stacks)

Code looping

Although the outer loop of an embedded application (or each task thereof) may be an infinite loop, other loop structures should always be bounded. As mentioned earlier, a loop awaiting a response from an external source should always have a timeout to avoid the code being stalled.

A common practice to protect against looping code is to employ a ‘watchdog’. This is normally an additional hardware element that needs to be addressed periodically, otherwise it ‘bites’. This most likely results in a system reset. In a multi-tasking application, one task may function as a watchdog to the others.
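The task-as-watchdog idea might be sketched as follows. Each monitored task calls a check-in function from its main loop, and a monitoring task periodically confirms that every task has checked in since the last inspection. The task count and function names are assumptions for illustration; in a real system the monitor would typically service the hardware watchdog only when this check passes.

```c
#include <stdbool.h>
#include <stdint.h>

#define NUM_TASKS 3u   /* hypothetical number of monitored tasks */

static volatile uint32_t checkin[NUM_TASKS];   /* bumped by each task */
static uint32_t last_seen[NUM_TASKS];          /* monitor's snapshot */

/* Called by each monitored task once per pass of its main loop. */
void task_checkin(unsigned task_id)
{
    if (task_id < NUM_TASKS) {
        checkin[task_id]++;
    }
}

/* Called periodically by the monitoring task. Returns true only if
   every task has checked in since the previous call. */
bool watchdog_all_tasks_alive(void)
{
    bool all_alive = true;

    for (unsigned i = 0; i < NUM_TASKS; i++) {
        uint32_t now = checkin[i];

        if (now == last_seen[i]) {
            all_alive = false;     /* task i made no progress */
        }
        last_seen[i] = now;
    }
    return all_alive;
}
```

The monitoring period has to be longer than the slowest task's normal loop time, otherwise a healthy task will be reported as stalled.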

Data corruption – arrays

Data corruption is harder to mitigate, as completely random errors can result. In C, part of the problem is pointers, which are one of the most powerful and useful features of the language, but also the most dangerous. Use of a null pointer is easily detected by including a suitable trap routine. However, a pointer that is pointing to the wrong place is impossible to detect. It is useful to have some coding guidelines that slightly limit the complex use of pointers. For example, pointers and pointers-to-pointers may be acceptable, but use no deeper levels of indirection.

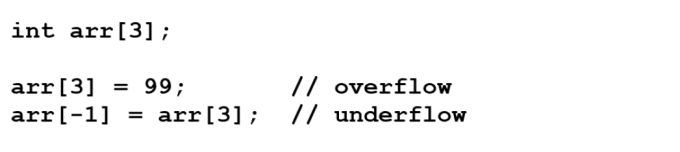

One type of data corruption that is quite common and can produce very obscure error conditions is the overflow or underflow of arrays and stacks. Some precautions may be taken.

Arrays in C have an index that starts from zero. So, an array of size n has indices going from 0 to n-1. There is no checking that the index used on an array access complies with these limits because the overhead in such checking is seen as excessive. You can write code like this:
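The sketch below illustrates the effect with a three-element array. Writing outside a real array is undefined behavior in C, so to keep the example well defined the ‘array’ here is a three-element window into a larger buffer; the first and last elements of `storage` stand for whatever unrelated data happens to sit next to the array in memory. All names are hypothetical.

```c
#include <stdint.h>

/* storage[0] and storage[4] play the part of neighbouring data. */
static int32_t storage[5] = { 111, 0, 0, 0, 222 };

/* arr[0]..arr[2] are the valid elements of the 3-element 'array'. */
static int32_t *const arr = &storage[1];

/* Both writes compile without complaint and are not checked at
   run time, yet each one silently overwrites a neighbour. */
void careless_writes(void)
{
    arr[-1] = 5;   /* underflow: overwrites the data before the array */
    arr[3]  = 7;   /* overflow: overwrites the data after the array */
}
```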

Although you are unlikely to explicitly reference index -1, it is easy to imagine accidentally decrementing an index variable by one too many. Accessing (the non-existent) element 3 is even easier to envisage and can also be caused by erroneous incrementing of an index variable.

The problem with this fault is that the corruption may not become apparent until the adjacent data structure is accessed. This may not occur for some time and may be in a completely different part of the application from where the corruption took place. This makes debugging very challenging.

The solution (apart from more careful coding!) is to add ‘guard words’ at either end of the array. A guard word is an additional word (variable) that is pre-initialized with a unique value. These words are monitored, perhaps by a background task and/or during debugging. If the known value has been overwritten, overflow or underflow has occurred. However, as adjacent data has not been affected, there is a chance to find the coding error.

Choosing a unique value is interesting. An odd number would be best (so that it cannot be an address) and some obvious values like 1 or 0xffffffff should be avoided. Beyond that, with a 32-bit guard word, there is only about a one-in-two-billion chance that the erroneous write happens to match the guard value and the error is missed.

An easy way to add guard words is to have a coding standard that states:

- If an array of size N is required, declare one of size N+2

- Use array indices in the range 1 to N
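The coding standard above could be applied along these lines. The array name, its size and the guard value are arbitrary choices for illustration; the guard value simply follows the advice to pick an odd, non-obvious number.

```c
#include <stdbool.h>
#include <stdint.h>

#define N           8u            /* number of usable elements */
#define GUARD_VALUE 0x5A3C96E7u   /* arbitrary odd, non-obvious value */

/* N usable elements at indices 1..N, plus a guard word at each end. */
static uint32_t samples[N + 2u];

/* Set up the guard words and clear the usable elements. */
void samples_init(void)
{
    samples[0]      = GUARD_VALUE;
    samples[N + 1u] = GUARD_VALUE;
    for (uint32_t i = 1u; i <= N; i++) {
        samples[i] = 0u;
    }
}

/* Called from a background task or inspected during debugging.
   Returns false if either guard word has been overwritten. */
bool samples_guards_intact(void)
{
    return samples[0] == GUARD_VALUE && samples[N + 1u] == GUARD_VALUE;
}
```

Application code then uses `samples[1]` to `samples[N]` only. An off-by-one write at either end lands on a guard word instead of on a neighbouring variable.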

Data corruption – stacks

In a multitasking application, there is normally a stack for each task, and these are commonly stored contiguously. Specifying the stack size can be challenging, as allocating too much wastes memory and too little risks overflow. There are tools to help determine the right size, but it is still somewhat problematic. Using guard words is a good way to detect stack overflow and, in the same way as with arrays, enables a problem to be detected before catastrophic damage is incurred. This kind of bug challenges even the most experienced developer, as one task’s erroneous code causes another completely unrelated task to misbehave or crash.

Failure recovery and reporting

Having looked at ways to detect a failure or impending problem, there is then the question of what to do about it. This tends to be very application specific. In some contexts, advising a user that a problem has been detected is all that is required. If the user interface is very simple, this may only be possible by flashing an LED or sounding an alarm.

Very often, if continuing system operation is a priority, the only sensible action is a system reset – get things back to a stable operating mode. If there is a way to record details of the problem so that this information may be communicated or recovered later, this is very much worth doing.

Real world examples

A manufacturer of heart pacemakers included a self-test procedure. If it finds a problem, it does a reset. The priority is the patient’s wellbeing. When the patient visits his or her medical consultant, the pacemaker provides information (using an inductive connection) about how the patient’s heart has been behaving. At the same time, any logged error information is uploaded and can be passed back to the manufacturer.

A manufacturer of industrial machinery took advantage of Internet connectivity to report any errors detected by the software. Operators were advised, and an email was sent by the machine to log a service call.

Conclusions

There are many practices that can help developers to create better and more reliable systems. One of those starts with an acceptance that problems occur and things go wrong. With some fairly simple additional software, devices can monitor their own health and action can be taken before complete failure occurs.

Colin Walls, embedded software technologist, has over twenty-five years of experience. A frequent presenter at conferences and seminars, and author of numerous technical articles and books on embedded software, Colin is a member of the Mentor Embedded Systems Division.
