A new formal proof kit for RISC-V processors

Chip design is being democratised by the advent of open-source processor architectures such as RISC-V. So, more organizations are daring to get into processor design. They are undertaking designs that range across embedded microcontrollers, high-end desktop and server-grade CPUs, AI and machine learning. But if designing a new microprocessor has become economically more feasible thanks to open-source architectures, test and verification continue to be major hurdles. They are estimated to take up around 70% of overall chip development.

This cost does not factor in the cost of missed bugs and re-spins when functional defects in a chip are not caught before fabrication. Look at the fallout from the security bugs Spectre and Meltdown. They affected virtually every processor on Earth regardless of vendor or architecture, including those from big names such as Arm, Intel, and AMD.

How do we secure future chip design against malicious bugs that render systems vulnerable to hacking? How can we be sure that a chip will continue to work as intended for highly sensitive use-cases in aerospace and defence, and not misbehave due to both intentional and unintentional bugs that slipped through test and verification?

The main challenge is that conventional verification techniques grounded in simulation are inadequate. They do not give us the guarantees we need to be sure that the systems we build are safe and secure. Even faster (and far more expensive) verification methods such as emulation also cannot catch all the bugs and thereby deliver much-needed assurance over security and safety.

Formal verification is the only verification technique that provides ‘proofs’ of bug absence as well as provable evidence of bugs in designs and specifications.

Verifying the correctness of RISC-V processors

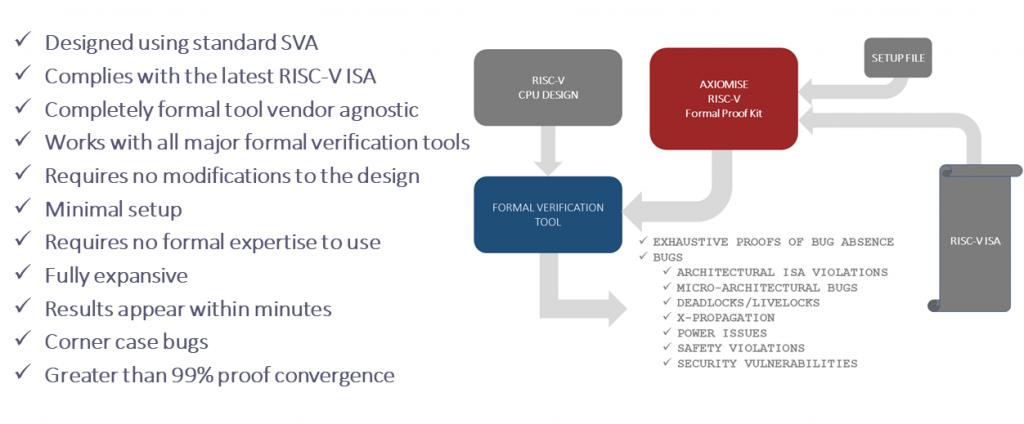

We have developed a formal proof kit using industry-standard, non-proprietary System Verilog Assertions for establishing ISA compliance based on the published RISC-V instruction set architecture (ISA). It is shown in Figure 1.

We can use this kit to determine if a given processor implementation conforms to the ISA semantics. Our solution is completely formal tool vendor-agnostic and requires no design modification. Rather, the only requirement is that the end-user is familiar with Verilog and/or VHDL. Moreover, our methodology is reusable for other RISC-V processors. Our methodology is built using the SVA open standard and is enhanced for performance with abstractions and a problem-reduction tool kit. We have deployed our methodology in practice to ensure that we can establish that all the functional, safety, security, and power requirements of a processor are turned into provably valid axioms (properties) of the design implementation.

Methodology

The general checking strategy relies on observing the path of all instructions from the point of issue to the point of completion using observation models where non-determinism allows for other instruction inter-leavings to intersperse between the issue and completion of the specific instruction being checked. This ensures that we can catch all kinds of bugs that are control and concurrency dominated and can cause an incorrect result to occur.

It is important to emphasize that checking has to be done end-to-end for every instruction with non-determinism. This is because, in practice, most bugs are missed due to the very directed nature of tests. They specifically explore a directed path from issue to completion without necessarily allowing for interference from other instructions.

For most instructions, we usually write one check that is built around observing the cause/effect relationship. The checks for LOADS and STORES are built with a full interface to the memory whereby we check that all variants of LOADS and STORES are checked – BYTE, WORD, HALF-WORD, aligned and misaligned. This allows us to examine all possible dependencies between different flavors of LOADS and STORES, especially when there is a sequence of these directed to the same address.

In some cases during development, we caught bugs we ourselves had introduced in the test development phase. Most of these were due to errors in our understanding of the RISC-V ISA. Thankfully with feedback from designer partners, we have been able to align this to what the ISA mandates.

Performance

All our proof checks converge – they produce either a ‘pass’ or a ‘fail’ outcome. When the outcome is a ‘pass’, it means a given check is valid for the implementation under all possible combinations of inputs and states. In the case of a ‘fail’, this indicates there is a mismatch between the design model and our checker model, which is then debugged to identify the issue’s root cause.

We have been able to exhaustively prove conformance of the design implementation against our proof kit checks for the RV32IC subset of RISC-V ISA for a family of RISC-V processors from the PULP platform group, using any available off-the-shelf formal verification tool from Cadence Design Systems, Synopsys, Mentor or OneSpin Solutions.

Some tools produced results within a few hours; a few others took about 72 hours. But the outcomes were the same for all: What proves in one is proven in another, and what fails in one remains fails in another. Within an hour, we can get proofs to converge on all the checks other than LOAD/STORES, with over 75% of the non-LOAD/STORE checks done in seven wall-clock minutes.

Bugs and proofs

We have also applied our methodology to the well-known 32-bit low-power processors zeroriscy and microriscy that are available in the public domain and that have been taped out in 40nm ASICs and FPGAs. We found 66 bugs and exhaustively proved a range of end-to-end functional properties that establish the correctness of the processor.

A lot of these bugs were due to a buggy debug unit which we understand is to be redesigned. We also found bugs related to malfunctioning interfaces, caught unintended logic interfering with the normal functioning of LOAD instructions, and identified power issues related to redundant clocking rather than the faulty debug unit.

In some cases, we analyzed the design for livelocks and were able to establish that it did not have any. We caught bugs in the design where a LOAD instruction was implementing a non-standard version of LOAD, causing the standard ISA-specified behavior to fail. We observed that standard interpretation of the STORE instruction was altered for specific micro-arch implementations without a specification saying so.

We recently analyzed another core, ibex, under development by lowRISC for ISA compliance. Using our proof kit, we have so far found six new bugs related to six branch instructions. It took us an hour to set up and find missing design files. Within a couple of hours, we had all the results. On a closer look, they all seem to be related to a debug unit which is again still under development.

Upon masking the debug logic in the design by introducing an assumption in our test environment, we proved that these bugs disappear, so that previously failing checks now held exhaustively. All ISA properties were exhaustively proven in this case as well.

Coverage and completeness

We used a range of coverage techniques harnessing mechanisms in the tools that we complemented with our techniques to ensure we built a complete solution. Being able to use different coverage solutions from different vendors has the benefit of exposing any discrepancies that you might miss when using only one specific tool.

We introduced hand mutations (bugs) in a design to check if our properties were sensitive to them. This way, we established that our checks were indeed doing the right thing. It is a very effective, efficient and economical method in terms of runtime performance to find problems with a testbench. We exploited this technique heavily. We then reviewed whether or not we have mapped all the instructions to checks in our test environment so we could determine if we were complete with respect to the ISA.

Conclusion

We aimed to carry out an end-to-end formal verification with the three particular limitations.

- That our work was carried out away from designers with limited access

- That we had no prior knowledge of the design microarchitecture; and

- That we had no comprehensive, detailed specification describing interfaces and internal state-machines.

In many ways, this work represents the reality of modern-day design verification where busy designers do not often document a detailed micro-architectural specification. Here, the first set of verification is often carried out locally by the designers using simulation, or the knowledge about the specification is passed by word-of-mouth to verification engineers who verify the design independently.

To the best of our knowledge, this is the first formal verification solution that is independent of a specific formal verification tool vendor, uses the SVA open standard that all tools support and requires minimal setup while guaranteeing more than 99% proof convergence.

You can examine our proof kit today, use the formal verification tool of your choice, start finding bugs in your RISC-V designs and build proofs of bug absence. Contact us to request a demo.

To read how our solution is different from others, and about our history of three decades in microprocessor verification, please click here.

Ashish Darbari is CEO of formal verification consultancy and training provider Axiomise.

Ashish Darbari is CEO of formal verification consultancy and training provider Axiomise.