Enabling greater reliability, scalability and flexibility of GPU emulation at AMD using a hybrid virtual-machine based approach

How AMD coupled a virtual PC and transaction-based emulation to accelerate the verification of its latest GPU

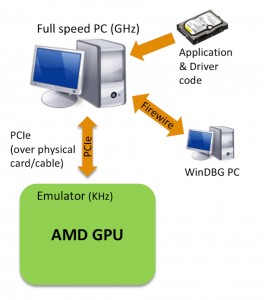

GPU design teams use hardware emulation so that tasks such as driver development, which would otherwise have to wait for silicon, can start before tape-out. A typical GPU emulation environment includes a full-speed PC, running the system software, OS and driver, connected through custom in-circuit hardware bridges to the hardware emulator containing the GPU design. This lets software run at high speed while interacting with the RTL design in the emulator, but it brings challenges in reliability, scalability and flexibility.

AMD has developed a hybrid approach using Synopsys’ ZeBu Server 3 emulation hardware, a VirtualBox virtual machine, and ZeBu PCIe transactors. This increases the reliability, scalability and flexibility of the emulation environment, meets the key performance requirements and can be used in the same way as the traditional approach.

The traditional approach – the in-circuit Test PC

One key advantage of hardware emulation systems is that they can be connected to the real hardware with which the SoC under development will communicate, to show that they will interact properly.

The traditional approach is to take a GPU design, map it into an emulation system, and then connect it to a real PC containing the CPU, motherboard, disk drives, memory and so on. This is done using a physical PCIe bridge card, which has specialized cables to connect to the emulated design and a PCIe interface to connect to a slot on the motherboard.

Figure 1 In-circuit traditional emulation approach (Source: AMD)

This approach has been used for years to execute everything from low-level tests to high-level GFX driver code, such as Windows DX11 drivers.

This solution has efficiency, productivity and reliability challenges.

Physical disks have to be swapped in and out of the Test PC motherboard to change the operating-system images. This risks mistakes and physical damage to connectors. It also doesn’t work well for remote users. Ethernet-controlled disk switchers can overcome that issue, but they introduce the need to develop scripting and control mechanisms for runtime configuration.

The Test PC approach also lacks ‘save and restore’ facilities, because it is a real device, with its own clocking and timing, and so its state is inaccessible. This means there is no way to freeze the PC, for example to stop the design and Test PC driver execution.

The Test PC approach also relies on complex target cabling, especially if designers need to work with many different Test PC installations. This increases the chances of introducing intermittent failures due to failing connectors, displaced cables, etc.

GPUs are used in many different motherboards and systems, which means building and maintaining custom Test PCs and managing which Test PCs are connected to which emulator. This reduces the team’s flexibility and its ability to make the best use of its emulation resources. It also makes it much more difficult to move hardware emulation systems to remote data centers to ease their management and increase their utilization.

The VirtualBox transactor-based virtual Test PC

Emulation systems can communicate with software processes on an attached workstation through dedicated connections. This enables efficient transaction-based communication over a lower-level physical link, which is typically abstracted using standards such as SCE-MI (Standard Co-Emulation Modeling Interface).

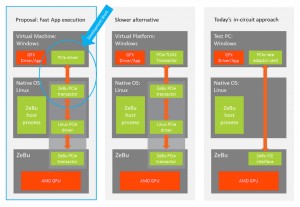

We use this capability to connect the emulator to a virtual-machine software process running on the attached workstation, because a virtual machine runs closer to native hardware speed than a fully modeled virtual platform.

The goal is to ensure that the performance of this approach is as close as possible to that of the physical, in-circuit Test PC, so we can explore the end-user experience when executing graphics drivers.

We chose the VirtualBox[1] virtual machine due to its open-source nature, which enables us to customize device model code.

We run VirtualBox on a Linux host workstation that is attached to the ZeBu Server 3 system[2], and then run Windows as a guest OS within the VirtualBox environment.

Figure 2 Virtual machine, virtual platform and in-circuit comparisons (Source: AMD)

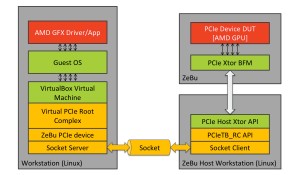

Connecting the virtual machine to the emulator means adapting the PCIe device driver in the VirtualBox virtual machine to communicate with a standard ZeBu PCIe transactor[3].

This was done by creating a socket-based interface between the ZeBu hardware transactor and the ‘ZeBu PCIe device’ component. This enables hot-plug capabilities and adaptations to different virtual machine architectures as required. Figure 3 shows the architecture in detail.

Figure 3 Detailed application-code-to-ZeBu DUT stack (Source: AMD)

The virtual machine is completed by having the virtual PCIe root complex connect to a custom ‘ZeBu PCIe device’ plugin, which presents itself as a fully capable device. This then connects to the socket server, which advertises its availability to the socket client. The client and server processes can run on the same or different workstations, which can reduce the load on the ZeBu host workstation.

The socket client communicates to a PCIe root-complex testbench layer, which sends transaction requests into the standard ZeBu PCIe transactor API. This transactor handles the hardware/software communication to the ZeBu emulation system, and enables the PCIe bus-functional model portion of this transactor to drive the pins of the DUT in the ZeBu – in this case, the GPU design.

To bring up the system, we started by ensuring that the GPU PCIe device was correctly enumerated in the virtual machine and that the guest OS (Linux, for our testing purposes) could see it. Once that was done, we attempted the PCIe initialization of the GPU device using low-level Linux-based diagnostic tests. Once the system was operating as expected, we ran low-level graphics tests, such as drawing textured triangles, 3D doughnuts and other key capability tests.

Adjustments to the design and the compile flow, together with performance improvements, enabled us to show that the design passed the test suite. This verified that the Virtual Test PC solution worked on its own, and that it worked as expected with the emulated GPU design.
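As an illustration of the enumeration check, the sketch below decodes the identification fields at the start of a device's PCI configuration space. The field offsets, the display-controller base class (0x03) and AMD's vendor ID (0x1002) come from the PCI specification and the public vendor ID list; the device ID in the example and the function names are hypothetical, and this is not the diagnostic code AMD used.

```python
import struct

AMD_VENDOR_ID = 0x1002  # PCI vendor ID assigned to AMD/ATI
DISPLAY_CLASS = 0x03    # PCI base class code for display controllers
NO_DEVICE = 0xFFFF      # vendor ID read back when no device responds


def parse_config_header(cfg):
    """Decode identification fields from the first 12 bytes of config space."""
    vendor, device = struct.unpack_from("<HH", cfg, 0x00)
    revision, prog_if, subclass, base_class = struct.unpack_from("<BBBB", cfg, 0x08)
    return {
        "present": vendor != NO_DEVICE,
        "vendor": vendor,
        "device": device,
        "revision": revision,
        "prog_if": prog_if,
        "subclass": subclass,
        "base_class": base_class,
    }


def looks_like_amd_gpu(cfg):
    """True if the header identifies an AMD display controller."""
    info = parse_config_header(cfg)
    return (
        info["present"]
        and info["vendor"] == AMD_VENDOR_ID
        and info["base_class"] == DISPLAY_CLASS
    )


# Example header: vendor, device (hypothetical 0x1234), command, status,
# revision, prog IF, subclass, base class.
SAMPLE = struct.pack("<HHHHBBBB", 0x1002, 0x1234, 0, 0, 0x00, 0x00, 0x00, 0x03)
EMPTY_SLOT = b"\xff" * 12
```

On a Linux guest, the same bytes can be read from the `config` file under `/sys/bus/pci/devices/<address>/`, so a check like this can run before any driver is loaded.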

Addressing the engineering challenges

This project emulated a 240-million-gate GPU, using between 50 and 70 FPGAs. ZeBu was added to AMD’s emulation capabilities at the same time as we were bringing up our GPU, and the challenges of using it included compile time, routing and design capacity.

We learned not to try to force a large design into as few FPGAs as possible, but to start conservatively to reduce routing complexity and improve turn-around times. We also found that some of our flows generate flat RTL code, which doesn’t always map well to a group of FPGAs, and so will consider adding hierarchy to future designs to ease their compilation onto the emulator.

Shifting from an in-circuit to a transactor-based emulation approach took work. Different emulation systems use different techniques to make hierarchical connections, connect/disconnect drivers and drive target-system clocks, and so the introduction of ZeBu at AMD required some fresh thinking. To connect the design RTL to the transactor RTL, we had to match bit widths and connect to the exact interface (PCS vs PIPE). We learned it is important to review the design interface blocks, signals and clocks in the planning stages.

We chose VirtualBox in part because it is open source, but this meant there wasn’t much documentation on creating custom device models. There were also unresolved issues with running VirtualBox on some versions of Linux, which made it harder to align our environment with AMD’s preferred OSes.

With so much new infrastructure in place, debug was challenging. We had to debug the VirtualBox implementation, the new device model, the socket interface, the transactor C and RTL code and, of course, the GPU design in the ZeBu system. We isolated variables wherever possible and used lots of logging to ease the process.
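One way to picture the "isolate variables and log" approach is a logger per layer, each tagged with a shared transaction ID so a single request can be followed down the stack, and each switchable on its own. The layer names, the `vtpc` logger prefix and the `txn` field are assumptions for illustration, not AMD's actual logging scheme.

```python
import io
import logging

# Hypothetical layer names, ordered from guest software down to the DUT.
LAYERS = ("vbox", "device_model", "socket", "transactor", "dut")


def make_layer_loggers(stream, enabled=LAYERS):
    """Create one logger per layer; only `enabled` layers emit debug output."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(name)s txn=%(txn)s %(message)s"))
    loggers = {}
    for layer in LAYERS:
        logger = logging.getLogger(f"vtpc.{layer}")
        logger.handlers.clear()
        logger.addHandler(handler)
        logger.propagate = False  # keep output out of the root logger
        logger.setLevel(logging.DEBUG if layer in enabled else logging.CRITICAL)
        loggers[layer] = logger
    return loggers


def trace_write(loggers, txn, addr):
    """Log the same transaction at every layer it passes through."""
    for layer in LAYERS:
        loggers[layer].debug("write addr=0x%x", addr, extra={"txn": txn})


def demo():
    stream = io.StringIO()
    # Narrow the investigation to the two layers under suspicion.
    loggers = make_layer_loggers(stream, enabled=("socket", "transactor"))
    trace_write(loggers, txn=7, addr=0x1000)
    return stream.getvalue().splitlines()
```

Searching the combined log for one transaction ID then shows the last layer a request reached, which narrows the fault to a single component.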

Conclusions

Moving to a Virtual Test PC approach to our emulation environment was challenging but worth it.

During initial testing we have shown that the environment can boot a guest OS (Linux), perform PCIe device enumeration (recognizing the ZeBu-emulated GPU), load the driver and perform upstream/downstream memory reads/writes to the GPU device. We have also run the 20 basic tests that AMD uses for GPU bring-up in this environment, which makes it a viable replacement for the traditional solution.

The new approach has addressed many of the challenges of the ‘in-circuit’ approach. Designers no longer have to swap physical disks or use disk switchers. They simply download the required disk image using the tools that support the virtual machine.
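A disk-image change of this kind could be scripted with VirtualBox's `VBoxManage storageattach` command. The sketch below only builds the command lines by default; the VM name, storage controller name and image path are hypothetical, and the options should be checked against the VBoxManage documentation for the VirtualBox version in use.

```python
import subprocess


def swap_disk_image_commands(vm_name, controller, image_path, port=0, device=0):
    """Return the VBoxManage invocations that replace the disk in one slot."""
    base = [
        "VBoxManage", "storageattach", vm_name,
        "--storagectl", controller,
        "--port", str(port),
        "--device", str(device),
    ]
    detach = base + ["--medium", "none"]  # remove whatever is in the slot
    attach = base + ["--type", "hdd", "--medium", image_path]
    return [detach, attach]


def swap_disk_image(vm_name, controller, image_path, dry_run=True):
    """Run the commands, or just return them when dry_run is set."""
    commands = swap_disk_image_commands(vm_name, controller, image_path)
    if not dry_run:
        for command in commands:
            subprocess.run(command, check=True)  # raises if VBoxManage fails
    return commands
```

For example, `swap_disk_image("gpu-testpc", "SATA", "/images/guest.vdi")` returns the two command lines without touching any virtual machine, which makes the script easy to review before it is used remotely.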

This approach also enables ‘save and restore’ because the VirtualBox image and the ZeBu Server 3 state are known, although care has to be taken to flush transactions through any part of the system that will not be saved.
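The flushing requirement can be sketched as follows: stop accepting new transactions, drain anything still queued in a layer that will not be saved, and only then capture the two saved states. The bridge class and the `save_vm` and `save_emulator` callbacks are hypothetical placeholders; the article does not describe how AMD implements this step.

```python
from collections import deque


class TransactionBridge:
    """Stand-in for a layer (such as the socket link) whose state is not saved."""

    def __init__(self, deliver):
        self._pending = deque()
        self._deliver = deliver
        self.accepting = True

    def submit(self, txn):
        if not self.accepting:
            raise RuntimeError("bridge is quiesced for a checkpoint")
        self._pending.append(txn)

    def flush(self):
        """Deliver every queued transaction, in order."""
        while self._pending:
            self._deliver(self._pending.popleft())

    def in_flight(self):
        return len(self._pending)


def checkpoint(bridge, save_vm, save_emulator):
    """Quiesce and drain the bridge, then capture both saved states."""
    bridge.accepting = False
    try:
        bridge.flush()
        if bridge.in_flight():
            raise RuntimeError("transactions still in flight")
        return {"vm": save_vm(), "emulator": save_emulator()}
    finally:
        bridge.accepting = True  # resume traffic whether or not the save worked


def demo():
    delivered = []
    bridge = TransactionBridge(delivered.append)
    bridge.submit("write 0x1000")
    bridge.submit("read 0x2000")
    state = checkpoint(bridge, lambda: "vm-state", lambda: "emu-state")
    return delivered, state, bridge.in_flight()
```

Draining before saving means a restored session never waits on a completion that was lost with the unsaved layer.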

Moving to a virtual approach has also done away with the need for complex cabling, other than between the ZeBu emulator and its host workstation(s). This increases reliability and creates a symmetrical and flexible solution that enables designs to be moved to the ZeBu system without the need to have multiple target solutions.

We also need less custom hardware for the Test PCs, because the differences between one motherboard and the next can be modeled in VirtualBox. It may still be necessary to work with a number of variants, for example if testing with newer AMD APUs that have advanced coherency features with the GPU.

The software configurability of the solution also means that it will be easier to move the ZeBu emulator and attached workstations into a data center, where they can be managed centrally and their resources allocated more efficiently.

Next steps

The next step in improving this hybrid, virtualized approach to emulating our GPUs is to add support for the Windows kernel debugger (WinDbg) to enable more effective debug and interaction for driver engineers. We also want to develop ways to get the best performance for the ZeBu database and to apply the overall Virtual Test PC solution as early as possible in the design cycle. It would also be helpful to streamline the VirtualBox build process to support the desired Linux host OS variants.

Authors

Andrew Ross has more than 15 years of experience in design engineering roles primarily in the area of microprocessor design verification. Ross now works at AMD as a technical lead and manager in the emulation methodology group, providing solutions and methodologies for all emulation users and products within AMD.

Alex Starr is an AMD Fellow and methodology architect covering hardware emulation, verification and virtual platform technologies. Starr graduated from the University of Manchester in the UK before working for STMicroelectronics where he spanned architecture, RTL design and verification aspects of embedded microprocessors. For the last 10 years he has been working at AMD in design verification, infrastructure and hardware emulation roles. He led the introduction of hardware emulation as an essential strategic methodology for all the company’s products and continues to drive innovation and development in this area.

More information

[1] VirtualBox Virtual Machine: www.virtualbox.org

[2] ZeBu Server 3: http://www.synopsys.com/Tools/Verification/hardware-verification/emulation/Pages/zebu-server-asic-emulator.aspx

[3] ZeBu Transactor datasheets: http://www.synopsys.com/Tools/Verification/hardwareverification/emulation/Pages/zebu-datasheets.aspx