Power under control

In late 2001, Nick Baker and other members of the Ultimate TV team at Microsoft learned that the company was ending development work on the product. Baker, still a youthful engineer but one whose curriculum vitae already took in some ill-fated early-days video card work at Apple and the short-lived 3DO games console, could have been forgiven for muttering at least a brief “Not again.” But there was an upside. The Ultimate TV team was being moved to the second Xbox project, with Baker receiving the title of director of the console architecture group. For him, work began in earnest in January 2002.

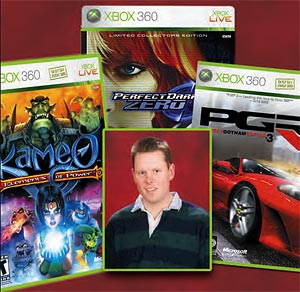

And this time Baker and his cohorts were going to emerge very much as the winners. A year on from the Xbox 360’s launch, it is still the only next-generation console on the global market in volume – even though its developers had expected their best efforts to give it only a slight (albeit critical) edge over the PlayStation 3. The look of the finished product in itself tells part of the story. The original Xbox was designed to suggest tremendous amounts of power almost bursting out of the console’s casing. The sleeker, more elegant 360 is, says Baker, all about “power under control”. From day one, very similar thinking underpinned the project’s silicon strategy.

While Sony was banking on the admittedly envelope-busting Cell processor to underpin the PS3 (in essence a technology play), Microsoft, though it knew it would also be seeking custom chips, decided to follow the money and base its plans more firmly on the established technology roadmap.


“Rather than saying, ‘This is what we want to build,’ or, ‘This is the kind of performance that we need to be next generation,’ it was rather the reverse. We put together a business model first and then asked how much silicon we could afford to put into the box,” Baker says. “We constructed that business model first, on the basis of how much shelf life we needed the product to have. Then, based on where we thought silicon, defect density and price were going, we used all those elements to guide the silicon feature set and the performance.”
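Baker’s point is that the silicon budget falls out of forecasts for defect density and wafer price, rather than the other way round. As a rough illustration only, the sketch below uses the textbook Poisson die-yield model, Y = exp(-A·D0), where A is die area and D0 is defect density. It is a generic rule of thumb, not Microsoft’s actual business model, and every input (wafer cost, die area, dies per wafer, defect densities) is a hypothetical number.

```python
import math

# Generic Poisson die-yield model, used here purely as an illustration.
# None of the numbers below come from the Xbox 360 program; all are hypothetical.

def poisson_die_yield(die_area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies expected to have zero killer defects: exp(-A * D0)."""
    return math.exp(-die_area_cm2 * defects_per_cm2)

def cost_per_good_die(wafer_cost: float, dies_per_wafer: int,
                      die_area_cm2: float, defects_per_cm2: float) -> float:
    """Spread the whole wafer cost over only the dies expected to work."""
    good_dies = dies_per_wafer * poisson_die_yield(die_area_cm2, defects_per_cm2)
    return wafer_cost / good_dies

# Hypothetical: the same 1.7 cm^2 die as the process matures and D0 falls.
WAFER_COST = 5000.0   # hypothetical wafer price in dollars
DIES_PER_WAFER = 350  # hypothetical gross dies per wafer
DIE_AREA = 1.7        # hypothetical die area in cm^2

for d0 in (0.8, 0.5, 0.3):
    y = poisson_die_yield(DIE_AREA, d0)
    cost = cost_per_good_die(WAFER_COST, DIES_PER_WAFER, DIE_AREA, d0)
    print(f"D0={d0}/cm^2 -> yield {y:.0%}, cost per good die ${cost:.2f}")
```

Holding the die fixed, cost per good die drops as D0 falls, which is why a view of where defect density was heading could be used to set how much silicon the box could afford.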

A further element was determining who exactly was the primary end-user Microsoft needed to take account of for the silicon. “This is a consumer product, so it’s got to come off the line, come out of the box and simply work,” says Baker. “But, in terms of specification, we’re also in the position where our end-customer is really the developer, not the consumer. My group has got to be thinking about how it can bring the best games to the platform.

“So, we had advisory boards and we got feedback on the architecture. We knew that we needed to make it simple to program. That was a given. But, knowing that we would be working with 90nm, we could see the emergence of multi-core computing and that was a huge challenge – we had to approach that issue and say [the Xbox 360’s engine] may not actually be one next-generation machine running at 10GHz. Yes, maybe we could go that way, but the PC is heading for multi-core.”

The other huge influence was the launch date: November 2005. At the time, no one could afford to count on a delay in Cell’s arrival, so this really was a drop-dead date for delivery of the finished product. Balancing all these challenges led Microsoft to switch suppliers for the console’s two key semiconductors. IBM – ironically partnered with Sony and Toshiba in developing the Cell engine – displaced Intel, which had supplied the original Xbox’s processor, and ATI, now part of AMD, took over from Nvidia. While Cell was going to be the state of the art in custom silicon, what IBM provided for the Xbox 360 was based on the PowerPC architecture while taking advantage of the shift to multi-core. The resulting tri-core 3.2GHz CPU would nevertheless set a performance record of its own when it went into volume production.

ATI took a more explicitly new route for its chip, using embedded memory of a kind that might not feature on a PC graphics chip in order to preserve performance. Here the deal-winner was the oldest factor of them all: price.

Other silicon elements were DDR memory and a Southbridge that was developed jointly by Silicon Integrated Systems and Microsoft itself.

“So you have the silicon plan, but now you also have another control issue,” says Baker, referring to the challenge of coordinating four geographically dispersed design teams at a host of different companies, involving, according to one estimate, 700 people on the semiconductors and 2,000 in total. And for all the talk of ‘virtual’ global projects, the nature of the 360 meant that this one had to be managed in a largely traditional way.

“We’re in Mountain View. You have IBM on the east coast. You have the people at ATI. And so on. We basically did a lot of getting down ‘n’ dirty with the design teams. The program managers were camped out at our partners. They practically lived there – full time with the partners rather than back here at Microsoft. We really needed to get integrated into those teams to the extent that some of our people ended up being accepted as part of the other company.” Another step was to prioritize verification and to add a far greater degree of emulation.

“System verification was something we had already done at 3DO and we’d honed our skills on it for several years, but this was the first time where I was involved in a project that took the step into emulation. We did that because of the scale of the project and also because of the schedule. We really needed to go for as few chip spins as possible,” says Baker.

So a verification and emulation (V&E) team went into operation at the same time as the silicon group started work, checking over shoulders to define a methodology. The V&E team was led by Baker’s colleagues Padma Parthasarathy and Vic Tirva. Over the lifetime of the project, it certainly led the 360 team down some interesting avenues – “To develop some of the backwards compatibility, at one point we had an x86 simulator running on a PowerPC simulator which ran on an x86 PC,” recalls Baker – but he nevertheless underscores its importance.


“We’d never tackled something like that before. There was a lot of skepticism. Everybody knew it was something you had to throw a lot of time and resources at to get it right, but we managed to do it with a relatively small team. And we actually pulled it off,” Baker says. “We had the OS software guys working on the emulator and bringing up the initial boot code on that well before the chips had taped out. We made that a priority.”

There were other challenges as well, such as the need to create bus models that would test off-chip functionality, to abstract non-system-level activity up to ESL (electronic system level), and to manage a mixture of Verilog and VHDL designs. Full-system simulation ran at less than one cycle per second, so the team leaned on the emulator more and more as the design grew. “This was the largest emulation integration of its kind,” says Baker.
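To see why a full-system simulation running at under one cycle per second pushed the team toward emulation, a little arithmetic helps. The sketch below compares wall-clock time for the same workload at two model speeds. Only the one-cycle-per-second ceiling comes from the article; the 100-million-cycle workload and the 1 MHz emulator rate are hypothetical figures chosen for illustration.

```python
# Back-of-the-envelope comparison of model speeds. Only the "< 1 cycle per
# second" simulation figure comes from the article; the workload size and
# the emulator rate are hypothetical numbers chosen for illustration.

SECONDS_PER_DAY = 24 * 3600

def wall_clock_seconds(target_cycles: float, cycles_per_second: float) -> float:
    """Return the wall-clock time needed to execute target_cycles at a given rate."""
    if cycles_per_second <= 0:
        raise ValueError("cycles_per_second must be positive")
    return target_cycles / cycles_per_second

boot_cycles = 100e6  # hypothetical: cycles needed to run an early boot sequence
sim_rate = 1.0       # full-system simulation: at best about 1 cycle per second
emu_rate = 1e6       # hypothetical emulator throughput, in cycles per second

sim_days = wall_clock_seconds(boot_cycles, sim_rate) / SECONDS_PER_DAY
emu_minutes = wall_clock_seconds(boot_cycles, emu_rate) / 60

print(f"simulation: ~{sim_days:,.0f} days")
print(f"emulation:  ~{emu_minutes:.1f} minutes")
```

Under these assumed numbers the simulator would need more than three years for a job the emulator finishes in under two minutes, which is the gap that made it worth bringing up boot code on the emulator before tape-out.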

But it was worth it – although the challenges facing Baker did not end there. He also became heavily involved in manufacturing the console, liaising with Microsoft’s suppliers in China.

“I’d never had the chance to work on a production line before, but I did when we started ramping the system,” Baker recalls, adding that this became another learning experience.

“Before flying to China for the first time, I set about making sure that we had state-of-the-art test equipment available – scopes and logic analyzers. But I didn’t really think about the math.

“Tens of thousands of systems per month at excellent yield still means you are looking at thousands of rejected systems. You can’t debug that. I’m glad the manufacturing team had that organized, and I was able to use statistical analysis tools to tackle those issues in a new way – at least for me.”
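The math Baker had not thought about is simple multiplication: volume times failure rate. The sketch below makes it explicit. The article says only “tens of thousands” of systems a month at excellent yield; the specific volumes, yields and per-unit debug time used here are hypothetical illustrations.

```python
# Why bench-debugging every failed console doesn't scale. The volumes,
# yields and per-unit debug time are hypothetical illustrations; the
# article says only "tens of thousands" of systems a month at excellent yield.

def expected_rejects(units_per_month: int, yield_fraction: float) -> int:
    """Expected number of units failing test for a given monthly volume and yield."""
    if not 0.0 <= yield_fraction <= 1.0:
        raise ValueError("yield_fraction must be between 0 and 1")
    return round(units_per_month * (1.0 - yield_fraction))

DEBUG_HOURS_PER_UNIT = 2.0  # hypothetical: time to root-cause one reject on the bench

for units, yld in [(20_000, 0.98), (50_000, 0.95), (90_000, 0.97)]:
    rejects = expected_rejects(units, yld)
    hours = rejects * DEBUG_HOURS_PER_UNIT
    print(f"{units:>6} units at {yld:.0%} yield -> {rejects:>5} rejects, "
          f"~{hours:,.0f} engineer-hours of hand debugging")
```

At 95% yield on 50,000 units, for instance, that is 2,500 rejects a month, far too many to probe one by one with scopes and logic analyzers, which is where grouping failures statistically pays off.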

Right now, Baker is involved in the revisions to the 360 – indeed, Microsoft has three teams at work on different revisions that take advantage of new production techniques.

The experience has thus undoubtedly given Baker the right to consider himself much more of an all-rounder. That leaves one question that seems almost obligatory to ask of someone who has had such a broad view of the chip design process: what more does he now want from EDA (electronic design automation)?

“Some of the things that always come to mind are around analog and digital. A tool that lets you combine analog and digital circuitry in the same chip and come out at the other end with predictable and accurate results – that still doesn’t seem to be 100% there,” he says.

“And when we look at the interesting challenges, for 90nm and below, managing power consumption is going to be tricky, so we want tools for that. You can’t rely on voltage scaling to bring down your power consumption. We need to address all levels of the design, starting from the architecture, and we need tools that help us do that quickly.”