OpenCL: games technology comes to us all

OpenCL aims to open up the performance of graphics processors to other applications. It is also one more example of compilation moving to runtime, which makes it easier to shift code dynamically across heterogeneous platforms.

Tony King-Smith, vice president of marketing at graphics-processor (GPU) specialist Imagination Technologies, believes a major change is afoot in the architecture of computing. Speaking by video link at the 2012 International Electronics Forums in Bratislava, he claimed: “The tables are being turned with commodity technology driving high-end technology. GPUs are going into set-top boxes. Every CPU [chip] will have a high-performance GPU on them. We are entering an era of mass-market parallel processing. It’s not supercomputing yet but it’s providing hundreds of gigaflops.”

During the past decade, so-called shader-based GPUs have become the standard way to render 3D graphics, thanks to changes in operating-system architecture made by Microsoft, among others. Early 3D graphics pipelines were more or less hard-coded: they followed a rigid sequence of steps that transformed a set of triangle coordinates sent by the host processor into shaded and lit triangles. Whatever lighting model the 3D accelerator offered was the lighting model you had to use.

Changes that Microsoft made with the release of DirectX 8 helped put 3D graphics on a new path and ushered in the GPU: a programmable parallel processor that, although optimised for graphics, can be used for other work. Most GPUs are now highly programmable, although they still lack some of the attributes of a full microprocessor. Meanwhile, the demands of their target application have driven up their aggregate performance dramatically.

“To get the throughput needed for 3D, the GPU has to be a parallel processor. The rate of increase in processor power of GPUs is dwarfing that of CPUs,” says King-Smith.

Those increases in processing performance are helping to carry GPUs from their old home in gamers’ PCs into phones and other devices, although it was not in these devices that GPU-based computing first took hold. “It wasn’t us, it was people in academia who took our GPUs to use their performance,” Chris Schläger, director of the operating systems research center at AMD, explained at the Design and Test Automation Europe conference earlier in 2012.

Programming difficulties

GPUs have historically been difficult to program directly, relying on specialised low-level languages designed primarily for 3D graphics. The Cuda language, a version of C developed by GPU supplier nVidia, and, to a lesser degree, Brook from AMD subsidiary ATI opened up the GPU to users in high-performance computing, where GPUs have been deployed for oil and gas surveying as well as weather forecasting. For example, Aberdeen-based ffA uses arrays of GPUs to analyse oil-well data in place of conventional supercomputers.

The issue with Cuda is that it is limited to nVidia GPUs – although the chipmaker has added x86 processors as compilation targets – and Brook, similarly, to AMD’s products. So the GPU makers clubbed together with Apple to promote a less silicon-specific language, OpenCL, as the driver for GPU-powered computing, siting the standardization effort in the Khronos Group, which also manages the similarly named OpenGL standard for 3D graphics.

“OpenCL offers an industry-standard way of programming the GPUs,” says King-Smith.

In just six months, Khronos prepared version 1.0 of the OpenCL standard. Naturally, Apple’s own operating systems support OpenCL, and a number of GPU manufacturers, such as Imagination Technologies, have also embraced it. OpenCL has attracted attention from other silicon suppliers too: makers of field programmable gate arrays (FPGAs) such as Altera expect OpenCL to expand the market for their devices into high-end computing.

Adacsys, a startup founded by Erik Hochapfel, is using Altera’s devices to make specialised computers for the finance and aerospace industries that will be programmed using OpenCL. Like King-Smith, Hochapfel expects a number of these systems to be deployed in the cloud to offer “application acceleration as a service. It means there will be no need for any hardware in-house,” he claimed at the Sophia Antipolis Microelectronics conference in October 2012.

Dual role for OpenCL

OpenCL has, potentially, two important roles. One is to expand the range of processor types that can be used to execute code written in a high-level language. The second is to promote the concept of runtime compilation as a way of increasing the portability of code.

Instead of generating actual machine code, the OpenCL development tools can produce an intermediate format that is translated into actual GPU instructions at runtime. And the code does not have to run on a GPU. If one is not present in the system, the runtime environment will generate code that can run on the host processor or, potentially, on another form of accelerator able to run the core OpenCL operations.
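The fallback behaviour described above can be sketched as a toy model in plain Python. This is not the OpenCL API. `Device`, `select_device`, `build` and `scale_kernel` are hypothetical names, and the "build" step merely binds a Python function, whereas a real runtime would translate portable kernel code into instructions for the chosen device. In the real host API, devices are discovered with `clGetDeviceIDs` using device types such as `CL_DEVICE_TYPE_GPU` and `CL_DEVICE_TYPE_CPU`.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

# Hypothetical device categories, loosely mirroring OpenCL's GPU/accelerator/CPU types,
# listed in order of preference.
PREFERENCE = ("gpu", "accelerator", "cpu")


@dataclass(frozen=True)
class Device:
    name: str
    kind: str  # one of PREFERENCE


def select_device(available: Sequence[Device]) -> Device:
    """Prefer a GPU, then another accelerator, and fall back to the host CPU."""
    for kind in PREFERENCE:
        for dev in available:
            if dev.kind == kind:
                return dev
    raise RuntimeError("no usable compute device found")


@dataclass
class BuiltKernel:
    device: Device
    body: Callable[[int, List[float], List[float]], None]

    def run(self, global_size: int, src: List[float], dst: List[float]) -> None:
        # Sequential stand-in for launching one work-item per global ID.
        for gid in range(global_size):
            self.body(gid, src, dst)


def build(portable_body: Callable[[int, List[float], List[float]], None],
          device: Device) -> BuiltKernel:
    """Stand-in for runtime compilation: a real runtime would generate
    device-specific code here; this toy version just binds the body."""
    return BuiltKernel(device=device, body=portable_body)


def scale_kernel(gid: int, src: List[float], dst: List[float]) -> None:
    # The same portable kernel body is used whichever device is chosen.
    dst[gid] = 2.0 * src[gid]


# A system with no GPU: the same kernel is "built" for the host CPU instead.
kernel = build(scale_kernel, select_device([Device("host x86", "cpu")]))
out = [0.0] * 4
kernel.run(4, [1.0, 2.0, 3.0, 4.0], out)
print(kernel.device.kind, out)  # cpu [2.0, 4.0, 6.0, 8.0]
```

The point of the design is that the kernel body never names a device. Only the selection and build steps do, so the same application code runs whether or not a GPU is present.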

A big problem today is keeping track of installed systems and providing code updates that are compatible with them, especially if the hardware design has been revised over time to keep ahead of component obsolescence. In these situations it can begin to make sense to look at development languages and tools that produce target-independent code. The rise of architectures that redeploy code to the most power-efficient target at a given time may expand the remit of target-independent code.

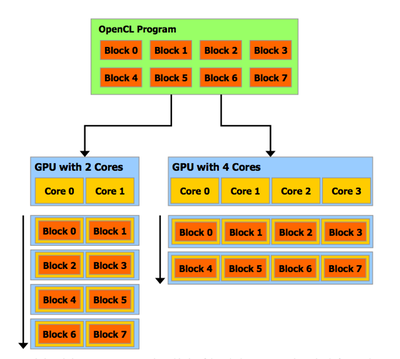

Figure 1: How OpenCL applications can map onto two different GPU implementations (Source: nVidia)

Mobile platforms such as ARM’s big.LITTLE and nVidia’s Tegra 3 rely on code-compatible processors so that software can move dynamically from a high-performance core to a low-power one. With target-independent code, however, an energy-management algorithm might decide on the fly to schedule code on an underused GPU rather than raise the clock speed, and therefore the active power consumption, of one or more CPUs. Alternatively, it might decide that activating the GPU costs too much and keep tasks running on the CPUs that are already active.
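A minimal sketch of the kind of decision such an energy manager might make. It uses a deliberately crude, assumed model in which each option costs a fixed wake-up energy plus an energy per operation. The figures are invented for illustration and are not measurements of any real CPU or GPU. `Option`, `energy_nj` and `choose_target` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    energy_per_op_nj: float  # nanojoules per operation (illustrative figure)
    wakeup_nj: float         # one-off cost to power the unit up; 0 if already active


def energy_nj(opt: Option, ops: int) -> float:
    """Estimated energy to run `ops` operations on this option."""
    return opt.wakeup_nj + opt.energy_per_op_nj * ops


def choose_target(ops: int, *options: Option) -> str:
    """Pick the option with the lowest estimated energy (ties go to the first listed)."""
    return min(options, key=lambda o: energy_nj(o, ops)).name


# Assumed figures: a CPU pushed to a higher clock is already awake but less
# efficient per operation; an idle GPU is efficient but must first be woken.
cpu_boost = Option("cpu_boost", energy_per_op_nj=1.0, wakeup_nj=0.0)
idle_gpu = Option("gpu", energy_per_op_nj=0.25, wakeup_nj=750_000.0)

print(choose_target(100_000, cpu_boost, idle_gpu))     # cpu_boost
print(choose_target(10_000_000, cpu_boost, idle_gpu))  # gpu
```

Under these assumed numbers, the break-even point is 750,000 / (1.0 - 0.25) = 1,000,000 operations. Below it, keeping work on the already active CPU wins. Above it, waking the GPU pays off. A real scheduler would also weigh deadlines, data-transfer costs and thermal limits.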

OpenCL functions take the form of compute kernels: the bodies of tight, often vectorized loops, which are executed in parallel across multiple data elements. The host’s OpenCL runtime environment takes care of loading the kernels and data into the target processors, triggering execution and retrieving the results. Because GPUs are generally designed around processors with a small amount of fast local memory and limited access to global memory, it makes sense to load the kernels in this way. OpenCL uses command queues to load and process the kernels generated by the host program in sequence, working out on the fly how many threads to generate, with the threads matched to the number of processing elements.
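The division of labour between kernel and host can be modelled in a few lines. This is a sequential Python emulation, not OpenCL itself. `CommandQueue`, `enqueue_nd_range` and `saxpy` are hypothetical names chosen to echo the real host call `clEnqueueNDRangeKernel`. The kernel is written as the body of a loop for a single work-item, identified by its global ID. An in-order queue runs each enqueued kernel over a range of work-items split into work-groups. The global size is padded to a multiple of the work-group size, as OpenCL 1.x requires when a work-group size is given, so the kernel guards against the extra work-items.

```python
from collections import deque
from typing import Callable, Deque, Tuple

Kernel = Callable[..., None]


def round_up(n: int, multiple: int) -> int:
    """Smallest multiple of `multiple` that is >= n."""
    return ((n + multiple - 1) // multiple) * multiple


class CommandQueue:
    """Toy in-order queue: commands execute in the order they were enqueued."""

    def __init__(self) -> None:
        self._pending: Deque[Tuple[Kernel, int, int, tuple]] = deque()

    def enqueue_nd_range(self, kernel: Kernel, global_size: int,
                         local_size: int, *args) -> None:
        if global_size % local_size:
            raise ValueError("global size must be a multiple of the work-group size")
        self._pending.append((kernel, global_size, local_size, args))

    def finish(self) -> None:
        # A GPU would run work-items in parallel; here they run one after another.
        while self._pending:
            kernel, gsize, lsize, args = self._pending.popleft()
            for group in range(gsize // lsize):
                for local_id in range(lsize):
                    kernel(group * lsize + local_id, *args)


def saxpy(gid: int, n: int, a: float, x: list, y: list) -> None:
    """Kernel body for one work-item: y[i] = a * x[i] + y[i]."""
    if gid < n:  # padding work-items beyond n do nothing
        y[gid] = a * x[gid] + y[gid]


n = 10
x = [float(i) for i in range(n)]
y = [1.0] * n
queue = CommandQueue()
queue.enqueue_nd_range(saxpy, round_up(n, 4), 4, n, 2.0, x, y)  # 12 work-items, 3 groups
queue.finish()
print(y)  # [1.0, 3.0, 5.0, 7.0, 9.0, 11.0, 13.0, 15.0, 17.0, 19.0]
```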

Implementation inefficiency

OpenCL does not necessarily make the most efficient use of a given hardware platform. Because it is an architecture-independent language, it involves compromises, which is why proprietary languages such as Cuda are likely to remain reasonably popular in the future.

Where dynamic compilation incurs too much overhead, it is possible to generate binaries in advance and load a compatible one at runtime, although this will increase overall code size if the software needs to run on a variety of targets.
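In the OpenCL host API, the two routes correspond to `clCreateProgramWithBinary` (load a prebuilt binary) and `clCreateProgramWithSource` followed by `clBuildProgram` (compile at runtime). The selection logic can be sketched as follows. `get_program`, `shipped_bytes`, `fake_compile` and the device IDs are hypothetical, and the byte strings only stand in for real binaries. The second function shows the code-size cost directly: every extra target adds another binary to what has to be shipped.

```python
from typing import Callable, Dict, List, Tuple


def get_program(device_id: str,
                prebuilt: Dict[str, bytes],
                compile_source: Callable[[str], bytes],
                cache: Dict[str, bytes]) -> Tuple[bytes, str]:
    """Return (binary, origin), preferring an ahead-of-time build for this device."""
    if device_id in prebuilt:
        return prebuilt[device_id], "prebuilt"
    if device_id not in cache:
        # Unknown target: pay the runtime-compilation cost once, then reuse it.
        cache[device_id] = compile_source(device_id)
        return cache[device_id], "compiled"
    return cache[device_id], "cached"


def shipped_bytes(prebuilt: Dict[str, bytes], source: str) -> int:
    """Size of the distribution: every prebuilt binary plus the portable source."""
    return sum(len(b) for b in prebuilt.values()) + len(source.encode("utf-8"))


compile_calls: List[str] = []


def fake_compile(device_id: str) -> bytes:
    compile_calls.append(device_id)
    return b"\x00" * 64  # placeholder for a device binary


prebuilt = {"gpu-a": b"\x01" * 100, "gpu-b": b"\x02" * 120}
cache: Dict[str, bytes] = {}
print(get_program("gpu-a", prebuilt, fake_compile, cache)[1])  # prebuilt
print(get_program("cpu-x", prebuilt, fake_compile, cache)[1])  # compiled
print(get_program("cpu-x", prebuilt, fake_compile, cache)[1])  # cached
print(shipped_bytes(prebuilt, "__kernel void k() {}"))        # 240
```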

FPGAs may seem handicapped when compared with much more logic-dense GPUs, but key architectural differences in the way their datapaths are implemented will lead to a significant market for OpenCL-programmed, FPGA-based targets. The key difference, assuming that the FPGA implementations deliver, will be latency.

On paper, the GPU looks like an arithmetic juggernaut. But, like any other processor, the GPU is a product of architectural compromise and assumptions about the nature of the workload. Because the GPU is designed primarily for 3D graphics, the key assumption is that the processing is relatively insensitive to latency. The quantity of data per update period, generally a screen refresh, is very high. This makes it possible to feed the execution units using an array of small local memories that can be filled and emptied using efficient block transfers. Heavy use of multithreading hides the latency of the local memory accesses.

Latency-sensitive algorithms and those that involve the maintenance of long history buffers, such as audio filters, do not map as well as graphics or image processing operations onto GPUs. The most efficient way to implement an audio filter, according to recent research from EPFL in Lausanne, is to dedicate each sample to a single thread and then put all the threads for the samples needed within the filter into blocks of execution units. This provides a good tradeoff between performance and storage efficiency. However, this demands that all the samples are sent in one block to the GPU, which increases overall latency. The pipelined execution of a typical FPGA implementation should avoid this latency although there are likely to be other tradeoffs.
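One plausible reading of the one-thread-per-sample mapping is sketched below as a sequential Python emulation. The function names are hypothetical, and this is not the EPFL code. Each work-item computes one output sample of an FIR filter from its own window of the history buffer, so the whole block must be available before any work-item can start. The last function shows why that batching costs latency: a block of N samples at a sample rate of f_s cannot be dispatched until N/f_s seconds of audio have arrived.

```python
from typing import List, Tuple


def fir_work_item(gid: int, history: List[float], coeffs: List[float],
                  out: List[float]) -> None:
    """One work-item: y[n] = sum_k c[k] * x[n - k] for output sample n = gid.
    `history` holds the previous len(coeffs) - 1 inputs followed by the block."""
    taps = len(coeffs)
    acc = 0.0
    for k in range(taps):
        acc += coeffs[k] * history[gid + taps - 1 - k]
    out[gid] = acc


def process_block(block: List[float], tail: List[float],
                  coeffs: List[float]) -> Tuple[List[float], List[float]]:
    """Filter one block; returns the outputs and the tail to carry into the next block."""
    history = tail + block
    out = [0.0] * len(block)
    for gid in range(len(block)):  # on a GPU these work-items would run in parallel
        fir_work_item(gid, history, coeffs, out)
    keep = len(coeffs) - 1         # samples the next block will need (0 for one tap)
    return out, history[len(history) - keep:]


def batching_latency_ms(block_size: int, sample_rate_hz: float) -> float:
    """Time spent waiting for a full block to arrive before it can be sent."""
    return 1000.0 * block_size / sample_rate_hz


# Two-tap moving average; the tail starts as one zero sample.
y, tail = process_block([2.0, 4.0, 6.0], [0.0], [0.5, 0.5])
print(y, tail)                           # [1.0, 3.0, 5.0] [6.0]
print(batching_latency_ms(480, 48_000))  # 10.0
```

At an assumed 48 kHz sample rate, a 480-sample block adds 10 ms of buffering before the GPU sees any data. A pipelined FPGA datapath could instead emit each output roughly one sample period (about 21 µs) after its input arrives.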

Programming limitations

OpenCL itself has limitations, says Schläger: “It is a subset of C. You look at the limitations of the subset and you realize you lose a lot.”

The restrictions are largely imposed by conventional GPU architecture. The first of the two most obvious is that OpenCL does not support standard C pointers. Arguably, this is a good thing, but it restricts the way in which code can be written and makes it harder for applications to pass data by reference: data needs to be marshalled and moved to and from the GPU’s address space. By bringing the GPU into a cache-coherent address space, Schläger says, applications could be made easier to write.
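As an illustration of the marshalling step, the sketch below flattens a linked binary tree into flat arrays in which child links become integer indices. In Python the links are object references; in C they would be pointers. Flat arrays of this kind could then be copied into device buffers, for example with `clCreateBuffer` and `clEnqueueWriteBuffer`. The "device-side" traversal uses only those indices and an explicit stack, since OpenCL C does not allow recursion. `TreeNode`, `marshal` and `device_sum` are hypothetical names, and this is a Python stand-in rather than kernel code.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class TreeNode:
    """Host-side structure: children are references (pointers, in C terms)."""
    value: float
    left: Optional["TreeNode"] = None
    right: Optional["TreeNode"] = None


def marshal(root: Optional[TreeNode]) -> Tuple[List[float], List[int], List[int]]:
    """Flatten the tree in pre-order; links become array indices, -1 meaning 'none'."""
    values: List[float] = []
    left: List[int] = []
    right: List[int] = []

    def visit(node: Optional[TreeNode]) -> int:
        if node is None:
            return -1
        idx = len(values)
        values.append(node.value)
        left.append(-1)
        right.append(-1)
        left[idx] = visit(node.left)
        right[idx] = visit(node.right)
        return idx

    visit(root)  # host-side recursion is fine; only the device side must avoid it
    return values, left, right


def device_sum(values: List[float], left: List[int], right: List[int]) -> float:
    """Device-style traversal: only indices into flat buffers and an explicit stack."""
    if not values:
        return 0.0
    total, stack = 0.0, [0]
    while stack:
        i = stack.pop()
        total += values[i]
        for child in (left[i], right[i]):
            if child != -1:
                stack.append(child)
    return total


tree = TreeNode(1.0, TreeNode(2.0, TreeNode(4.0)), TreeNode(3.0))
values, left, right = marshal(tree)
print(values, left, right)  # [1.0, 2.0, 4.0, 3.0] [1, 2, -1, -1] [3, -1, -1, -1]
print(device_sum(values, left, right))  # 10.0
```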

The other restriction, which is problematic for flexible mobile and embedded devices that may want to switch code dynamically between CPUs and GPUs, is that the graphics engines do not context switch.

“A GPU can have 20,000 registers. You will have fun doing a context switch dumping those registers to the stack,” says Schläger. “That is something we are working on.”
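A rough calculation puts Schläger’s figure in perspective. Assume 32-bit (4-byte) registers and, purely for illustration, 10 GB/s of memory bandwidth available for the spill. A switch that saves one context and restores another then moves 2 × 20,000 × 4 = 160,000 bytes, which takes about 16 µs before any useful work resumes. Both the register width and the bandwidth are assumptions, not figures from the article, and the function names are hypothetical.

```python
def context_switch_bytes(registers: int, bytes_per_register: int = 4) -> int:
    """Bytes moved to save the outgoing context and restore the incoming one."""
    return 2 * registers * bytes_per_register


def context_switch_us(registers: int, bandwidth_gb_s: float,
                      bytes_per_register: int = 4) -> float:
    """Transfer time in microseconds (1 GB/s = 1e9 bytes/s = 1e3 bytes/us)."""
    return context_switch_bytes(registers, bytes_per_register) / (bandwidth_gb_s * 1e3)


print(context_switch_bytes(20_000))     # 160000
print(context_switch_us(20_000, 10.0))  # 16.0
```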

Work is underway in groups such as the HSA Foundation, set up by AMD and others, to extend the architecture of GPUs to support more conventional programming paradigms. The aim is not only to expand the ways in which OpenCL can be used but also to handle other dynamically compiled languages, such as Microsoft’s C++ AMP, and others that may appear in the future.