Wally Rhines looks beyond ‘endless verification’ to the system era

At the first DVCon China, Wally Rhines, chairman and CEO of Mentor – A Siemens business, offered a comprehensive overview of the challenges facing verification and how they are growing, nine years after he identified the threat of ‘endless verification’.

Rhines also made an especially intriguing observation for the Shanghai audience: adoption of a number of key verification technologies is higher, and growing significantly faster, in China than globally (we will look at that in more detail in a separate post).

Key takeaways

Rhines said that the current verification challenge has four main components:

- Despite design re-use, verification complexity continues to increase at 3-4X the rate of design creation.

- Increasing verification requirements drive new capabilities for each type of verification engine.

- Continuing verification productivity requires EDA to:

  - (a) Abstract the verification process from the underlying engines;

  - (b) Develop common environments, methodologies and tools; and

  - (c) Separate the ‘what’ from the ‘how’.

- Verification for security and safety is providing another major wave of verification requirements.

Verification productivity – something old, something new

Across the four areas, several components of the general productivity challenge are worth detailing. Some are familiar but persistent; others are newly emerging.

It remains the case, Rhines noted, that we are not generating enough engineers.

“The supply of engineers is growing very slowly around the world, about 3.7% per year,” he said. “The US only grows the number of college graduates in electronic engineering by between 1% and 1.5% (annually). Still not enough to meet the demand.

“But interestingly, on some survey data that I’m going to share with you, the number of engineers that define themselves as verification engineers is growing much faster. The compound average growth rate in verification engineers since 2007 is about 10.6%, whereas the number of those who identify themselves as design engineers is growing at only about 3.6%.”

Meanwhile, the drivers behind verification complexity are familiar, but they are arguably being exacerbated by growing re-use and by the integration of both third-party and separately developed internal blocks of intellectual property (IP).

Rhines illustrated that with a story from his time running Texas Instruments’ semiconductor group during the 1980s. Ericsson, a major client, wanted Rhines’ team to integrate a multi-chip solution for its cellphones on a single die.

“TI made the ASICs, we made the SRAM, we made the DSP. So, of course, I said, ‘How difficult can this be? This is already verified. We’ve got a netlist. That is already verified. All we do is put them together.’ They had a very aggressive schedule. I agreed to it,” he said.

“And then I discovered a basic thing that most of us now know: Verification increases by a factor of 2 to the x where x is the number of memory bits, flip flops, latches and I/O. Just because you have verified the blocks doesn’t mean you have verified all of the states. And when you put those blocks together you have billions of additional states that now have to be verified or at least eliminated from verification.

“Needless to say, the design took much longer than I had anticipated, but it was a lesson that I remembered.”
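The arithmetic behind that lesson can be sketched in a few lines. This is only an illustration of the 2-to-the-x relationship Rhines describes: the block names and bit counts below are hypothetical and are not figures from the talk.

```python
def state_count(state_bits: int) -> int:
    """Upper bound on distinct states for a block with `state_bits` state elements."""
    return 2 ** state_bits


# Hypothetical counts of memory bits + flip-flops + latches + I/O per block.
blocks = {"asic": 20, "sram": 16, "dsp": 24}

# States covered when each block is verified on its own.
separate = sum(state_count(bits) for bits in blocks.values())

# Upper bound on states once the blocks share one die: the exponents add.
combined = state_count(sum(blocks.values()))

print(f"verified block by block: {separate:,} states")
print(f"integrated design:       {combined:,} states")
print(f"growth factor:           {combined // separate:,}x")
```

Because the exponents add when blocks are combined, the integrated state space is the product, not the sum, of the per-block state spaces. Real designs never reach every state, which is why the quote speaks of states that must be "verified or at least eliminated from verification".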

What is now pushing this established problem even higher up the agenda is the complexity inherent in designing to a target system rather than simply target silicon.

Rhines was happy to acknowledge that when it comes to system verification, he is very much talking his own book, addressing one of the main reasons for Mentor’s recently completed acquisition by Siemens.

“My title hasn’t changed. I’m president and CEO of Mentor Graphics. But I’m now part of a $90B company that considers this kind of [system] verification as the next wave of growth,” he said.

Rhines nevertheless noted that, at an IC design level, EDA and the industry generally have lifted productivity by five orders of magnitude since 1985. But, he added, “verifying chips is largely automated today and a very rigorous process, but verifying systems is at the same stage as integrated circuits were 40 or 50 years ago.”

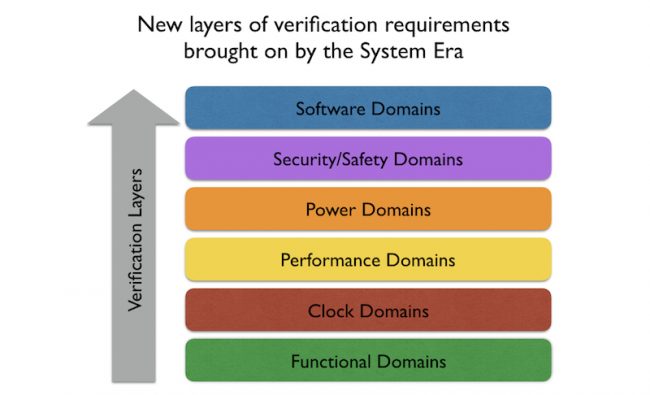

This system-era trend is in turn creating greater burdens as you move up the verification stack, even for ICs in and of themselves (Figure 1).

Targeted solutions

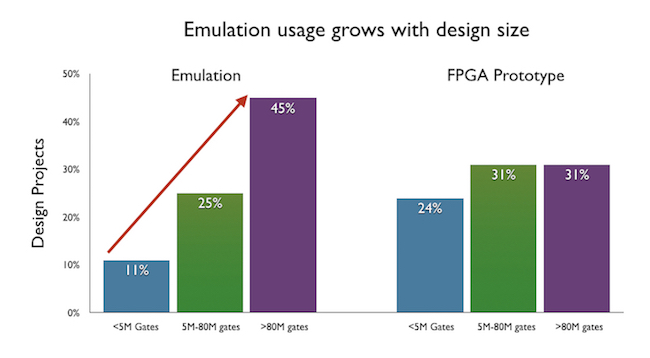

These problems are not without responses. As widely noted, emulation has rapidly taken up much of the strain on simulation and on users’ broader verification requirements. Moreover, the boxes have moved out of the lab and into data centers, broadening the user base, allowing for much greater virtualization and expanding use cases beyond simple in-circuit emulation (ICE) into advanced software test and more.

Quoting the Wilson Research Group’s 2016 double-blind survey on verification trends, Rhines said that for designs of greater than 80 million gates, almost half the respondents said they were using emulation (Figure 2).

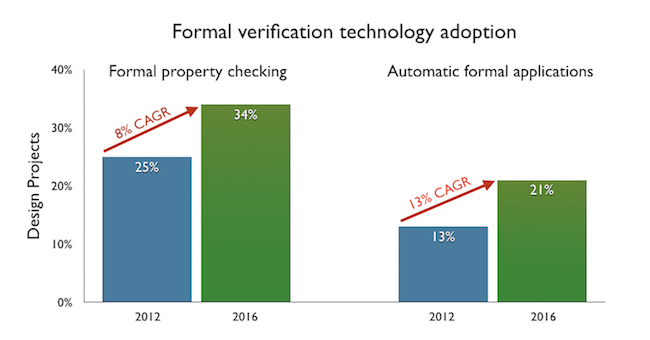

The use of formal verification is also becoming more common, with a major shift toward task-specific automation.

“What formal traditionally meant was that you had to become an expert in property checking, which is a very difficult sort of technology,” Rhines said. “So what the EDA companies have done is try and find specific things that they can focus formal verification on, so that it becomes a sort of push-button task: You don’t have to be a property checking specialist to do it.”

Mentor and other companies have aggressively moved into such automation, addressing issues such as clock domain crossing, deadlock and X propagation. Their efforts, Rhines said, are paying off: The CAGR for automated formal tools shown in Wilson’s research (Figure 3) is currently 13%, relative to 8% for formal overall.

But what’s next? On the immediate horizon: portable stimulus. It was also the subject of one of the standing-room-only sessions during a well-attended DVCon China.

“This is from Bill Hodges [a principal engineer] at Intel. ‘You should not be able to tell if your [verification] job was executed on a simulator, emulator or a prototype,’” Rhines said. “That’s a key objective because right now we essentially write separate testbenches for all three – and that’s a big time sink.

“So this is my advertisement for Accellera and for its Portable Stimulus Group. Some of our people think it should be the ‘Portable Testbench Group’, but whatever you call it, it’s important because the objective is to get a simple input specification to enable the automation of test creation, make it reusable across all platforms and then have the tools generate any specifics you need for each particular platform.

“I encourage you, if you’re not involved, get your company involved because this one will be a big productivity gain for all of us.”

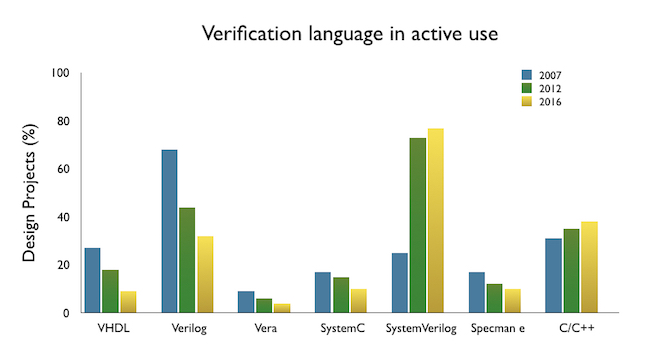

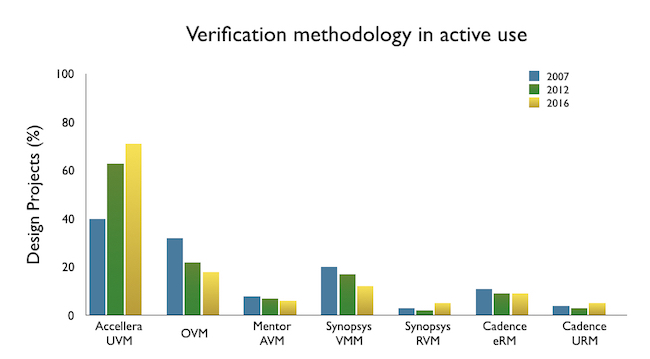

Staying with standards, Rhines also noted the continuing increase in adoption of both SystemVerilog and SystemC as design languages, and of OVM in class library standardization. According to the Wilson research, SystemVerilog is being used on about 75% of all projects and OVM is at a broadly equivalent level.

So there are tools and enabling technologies in place, but what is around the corner?

Emerging challenges

While security is an obvious and growing challenge, it also presents a nuanced one for verification, Rhines noted.

“Most of us, if we’ve been doing verification for some time have focused on verifying that a chip does what it’s supposed to do. Soon, you’re going to have a much tougher problem. And that is verifying that a chip does nothing that it is not supposed to do,” he said.

Rhines considered an example in formal verification: “You would like to design a chip that has an encrypted access where you have to give a 128-bit key to activate the chip, to make it secure. But what you need to do is more than just define the key storage and the encryption engine. You need to be able to formally prove that there are no other possible logical paths through which you can enter the chip.”
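The idea of proving that no other path exists can be illustrated with a toy reachability check. This is a minimal sketch under loud assumptions: the state machine, its state names and its events are all hypothetical, and real formal tools prove properties on RTL rather than enumerating a hand-written graph.

```python
from collections import deque

# Hypothetical access-control state machine: (state, event) -> next state.
TRANSITIONS = {
    ("locked", "good_key"): "unlocked",
    ("locked", "bad_key"): "locked",
    ("locked", "debug_req"): "debug",
    ("debug", "scan_dump"): "unlocked",  # unintended back door
    ("debug", "exit"): "locked",
    ("unlocked", "lock"): "locked",
}


def reachable_without(transitions, start, forbidden_event):
    """Return every state reachable from `start` if `forbidden_event` never occurs."""
    seen = {start}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for (src, event), dst in transitions.items():
            if src == state and event != forbidden_event and dst not in seen:
                seen.add(dst)
                queue.append(dst)
    return seen


# With the back door present, the chip can be entered without the key.
leaky = reachable_without(TRANSITIONS, "locked", "good_key")
print("unlocked reachable without key:", "unlocked" in leaky)

# Removing the back-door transition closes the path.
fixed = {k: v for k, v in TRANSITIONS.items() if k != ("debug", "scan_dump")}
secure = reachable_without(fixed, "locked", "good_key")
print("unlocked reachable after fix:  ", "unlocked" in secure)
```

The check is exhaustive over the modeled graph, which is what distinguishes it from a directed test: it shows that something cannot happen, not merely that the intended path works.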

In terms of safety, Rhines cited the automotive standard ISO 26262. It has become the poster child for the issue, although the same theme underpins older aerospace (DO-254) and medical (IEC 60601) standards.

Here, he also sees traceability becoming an increasing part of the verification and design process.

“One thing that has been around for a long time in the military and which has now made its way into automobiles and other products is the need to do requirements tracking,” he said. “In military designs this is already there – we’ve been making tools for 20 years where you can take a set of requirements and automatically trace where in the RTL was that requirement implemented and where in the testbench was it checked with a unit test.

“I anticipate that this is going to spread. More and more, it will be true of automotive designs as well.”
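The kind of requirements tracing Rhines describes can be sketched roughly as follows. The `REQ-nnn` comment tag convention, the requirement IDs and the sample text are assumptions made for illustration; they do not represent any particular tool's format.

```python
import re

# Hypothetical convention: a comment containing "REQ-<number>" marks where
# a requirement is implemented (RTL) or checked (testbench).
TAG = re.compile(r"REQ-\d+")


def trace(requirements, rtl_text, testbench_text):
    """Map each requirement ID to whether it is tagged in the RTL and the testbench."""
    in_rtl = set(TAG.findall(rtl_text))
    in_tb = set(TAG.findall(testbench_text))
    return {
        req: {"implemented": req in in_rtl, "checked": req in in_tb}
        for req in requirements
    }


requirements = ["REQ-001", "REQ-002", "REQ-003"]
rtl = "// REQ-001 reset clears the key register\n// REQ-002 watchdog timeout\n"
testbench = "// REQ-001 check key register after reset\n"

report = trace(requirements, rtl, testbench)

# A requirement is a gap unless it is both implemented and checked.
gaps = [req for req, s in report.items() if not (s["implemented"] and s["checked"])]
print("untraced requirements:", gaps)
```

Here `REQ-002` is implemented but never checked and `REQ-003` appears nowhere, so both are reported as gaps.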

Roadmapping verification

Like so much else in the industry, the verification challenge needs a roadmap. From Rhines’ side, it seems that most of that is already clear and that the foundation technologies to meet it are in place. That is the good news.

But the bad news is that there are a few holes. System design is one, though Mentor obviously argues that it is in pole position to address that following the Siemens deal.

During DVCon China, we got the chance to sit down with Wally Rhines for an extended interview.

Part One focuses on Mentor’s plans now that the Siemens deal has closed.